Lahiru Jayasinghe

A Hybrid Deep Learning Model-based Remaining Useful Life Estimation for Reed Relay with Degradation Pattern Clustering

Sep 14, 2022

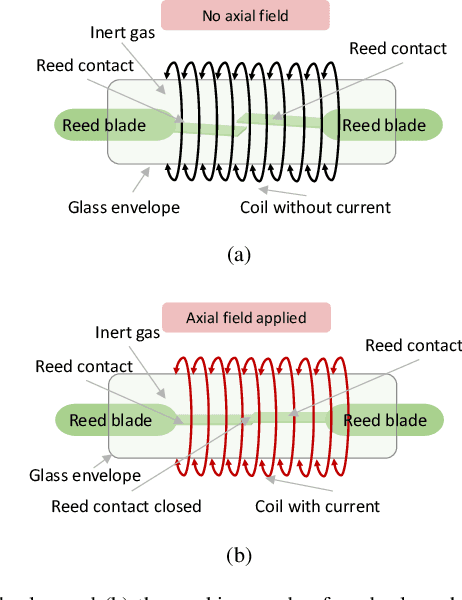

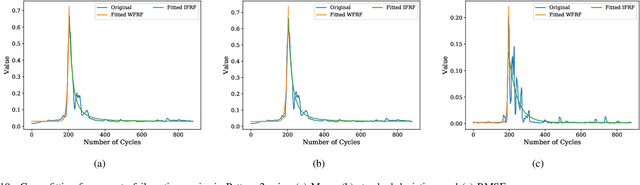

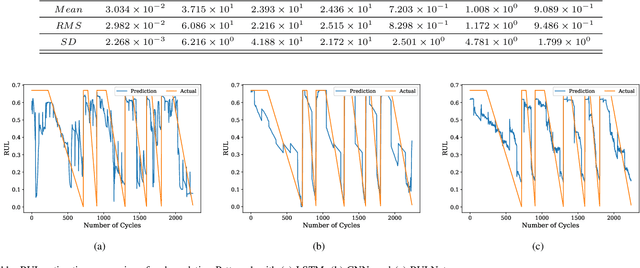

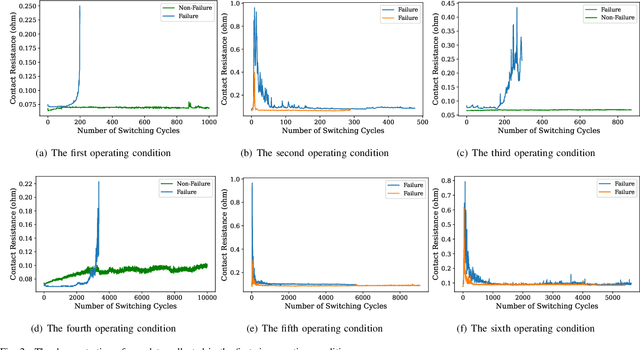

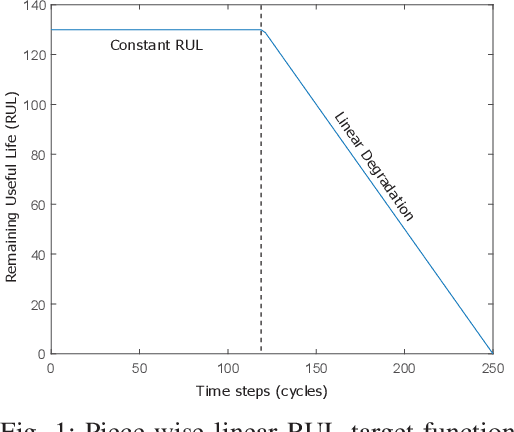

Abstract: Reed relays are a fundamental component of functional testing, which is closely tied to the successful quality inspection of electronics. To provide accurate remaining useful life (RUL) estimation for reed relays, a hybrid deep learning network with degradation pattern clustering is proposed, based on three considerations. First, reed relays exhibit multiple degradation behaviors, so a dynamic time warping-based $K$-means clustering is used to distinguish the degradation patterns from each other. Second, although proper feature selection is of great significance, few studies are available to guide it; the proposed method recommends operational rules that are easy to implement. Third, a neural network for remaining useful life estimation (RULNet) is proposed to address the weakness of the convolutional neural network (CNN) in capturing temporal information in sequential data; it adds temporal correlation ability after the high-level feature representation produced by the convolutional operations. Three variants of RULNet are constructed, using health indicators, features with a self-organizing map, or features with curve fitting. Finally, the proposed hybrid model is compared with typical baseline models, including CNN and long short-term memory network (LSTM), on a practical reed relay dataset with two distinct degradation manners. The results from both degradation cases show that the proposed method outperforms CNN and LSTM in terms of root mean squared error.
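As a rough illustration of the clustering idea in this abstract, the sketch below computes a dynamic time warping (DTW) distance and uses it for the assignment step of $K$-means. It is a minimal sketch, not the paper's implementation: the function names `dtw_distance` and `assign_clusters`, the absolute-difference local cost, and the toy series are all assumptions, and the centroid update step is omitted.

```python
def dtw_distance(a, b):
    """DTW distance between two 1-D sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a, step in b, or step in both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def assign_clusters(series, centroids):
    """K-means assignment step: index of the DTW-nearest centroid per series."""
    return [
        min(range(len(centroids)), key=lambda k: dtw_distance(s, centroids[k]))
        for s in series
    ]


if __name__ == "__main__":
    # Hypothetical degradation curves: one rising, one falling.
    curves = [[0, 0, 1, 2, 3], [3, 2, 1, 0, 0]]
    centers = [[0, 1, 2, 3], [3, 2, 1, 0]]
    print(assign_clusters(curves, centers))
```

Because DTW allows one sample to align with several samples of the other sequence, curves of different lengths or with locally stretched segments can still match the same centroid, which a pointwise Euclidean distance would not allow.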

Time-Series Regeneration with Convolutional Recurrent Generative Adversarial Network for Remaining Useful Life Estimation

Jan 11, 2021

Abstract: For health prognostic tasks, ever-increasing effort has been devoted to machine learning-based methods, which can yield accurate remaining useful life (RUL) estimates for industrial equipment or components without exploring the degradation mechanism. The success of these methods depends on a wealth of run-to-failure data; in practice, however, run-to-failure data may be insufficient, because conducting a substantial number of destructive experiments is not only costly but may also cause catastrophic consequences. With this in mind, an enhanced RUL framework focusing on data self-generation is put forward for both non-cyclic and cyclic degradation patterns for the first time. It is designed to enrich data in a data-driven way, generating realistic time-series to enhance current RUL methods. First, high-quality data generation is ensured through the proposed convolutional recurrent generative adversarial network (CR-GAN), which adopts a two-channel fusion convolutional recurrent neural network. Next, a hierarchical framework is proposed to combine the generated data with current RUL estimation methods. Finally, the efficacy of the proposed method is verified on both non-cyclic and cyclic degradation systems. With the enhanced RUL framework, an aero-engine system following non-cyclic degradation has been tested using three typical RUL models. State-of-the-art RUL estimation results are achieved by enhancing a capsule network with generated time-series. Specifically, estimation errors evaluated by the score function index have been reduced by 21.77% and 32.67% for the two employed operating conditions, respectively. In addition, the estimation error is reduced to zero for the lithium-ion battery system, which presents cyclic degradation.
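The abstracts on this page report results with two error indices, root mean squared error and a score function. The abstract above does not define its score function; the sketch below assumes it is the asymmetric exponential score commonly used with aero-engine RUL benchmarks, which penalizes late predictions (overestimated RUL) more heavily than early ones. The function names and the example values are hypothetical.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and true RUL values."""
    n = len(predicted)
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n)


def rul_score(predicted, actual):
    """Asymmetric exponential score (assumed form, lower is better).

    d = predicted - actual; early predictions (d < 0) use a time constant
    of 13, late predictions (d >= 0) use the harsher constant of 10.
    """
    total = 0.0
    for p, a in zip(predicted, actual):
        d = p - a
        total += math.exp(-d / 13.0) - 1.0 if d < 0 else math.exp(d / 10.0) - 1.0
    return total


if __name__ == "__main__":
    true_rul = [100, 50, 20]       # hypothetical ground truth, in cycles
    estimated = [95, 55, 20]       # hypothetical model output
    print(rmse(estimated, true_rul), rul_score(estimated, true_rul))
```

The asymmetry reflects the maintenance setting: predicting failure too late risks an unplanned breakdown, while predicting it too early only wastes some remaining life.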

Cluster Pruning: An Efficient Filter Pruning Method for Edge AI Vision Applications

Mar 05, 2020

Abstract: Even though convolutional neural networks (CNNs) have shown superior results in the field of computer vision, it is still challenging to run computer vision algorithms in real time at the edge, especially on a low-cost IoT device, because of the high memory consumption and computational complexity of a CNN. Network compression methodologies such as weight pruning, filter pruning, and quantization are used to overcome this problem. Even though filter pruning has shown better performance than the other techniques, the irregular number of filters pruned across different layers of a CNN might not suit the majority of neural computing hardware architectures. In this paper, a novel greedy approach called cluster pruning is proposed, which provides a structured way of removing filters in a CNN by considering both the importance of the filters and the underlying hardware architecture. The proposed methodology is compared with the conventional filter pruning algorithm on the Pascal-VOC open dataset and on the Head-Counting dataset, which is our own dataset developed to detect and count people entering a room. We benchmark our proposed method on three hardware architectures, namely CPU, GPU, and the Intel Movidius Neural Compute Stick (NCS), using the popular SSD-MobileNet and SSD-SqueezeNet neural network architectures used for edge-AI vision applications. Results demonstrate that our method outperforms the conventional filter pruning methodology on both datasets and on the above-mentioned hardware architectures. Furthermore, a low-cost IoT hardware setup consisting of an Intel Movidius NCS is proposed to deploy an edge-AI application using our proposed pruning methodology.
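To make the contrast with unstructured filter pruning concrete, the sketch below prunes the least important filters of one layer while keeping the number of surviving filters a multiple of a hardware-friendly group size. This is only an illustration of the general idea, not the paper's cluster pruning algorithm: the L1-norm importance measure, the `cluster_size` parameter, and the function names are all assumptions.

```python
def l1_norm(filt):
    """Importance proxy (assumed): sum of absolute weights of one filter."""
    return sum(abs(w) for w in filt)


def prune_to_cluster_multiple(filters, prune_fraction, cluster_size):
    """Return sorted indices of the filters to keep in one layer.

    The least important filters are dropped first, and the kept count is
    rounded up to a multiple of cluster_size (capped at the layer width)
    so the layer stays aligned with the hardware's processing groups.
    """
    n = len(filters)
    target_keep = n - int(n * prune_fraction)
    keep = -(-target_keep // cluster_size) * cluster_size  # ceiling to multiple
    keep = max(min(keep, n), min(cluster_size, n))          # stay within [1 group, n]
    ranked = sorted(range(n), key=lambda i: l1_norm(filters[i]), reverse=True)
    return sorted(ranked[:keep])


if __name__ == "__main__":
    # Eight hypothetical single-weight filters; ask to prune about 70%.
    layer = [[0.1], [0.9], [0.5], [0.05], [0.7], [0.3], [0.2], [0.8]]
    print(prune_to_cluster_multiple(layer, prune_fraction=0.7, cluster_size=4))
```

In this toy case a 70% request would leave three filters, but the count is rounded up to four so that the layer width remains a multiple of the assumed group size.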

DCASE 2018 Challenge: Solution for Task 5

Dec 11, 2018

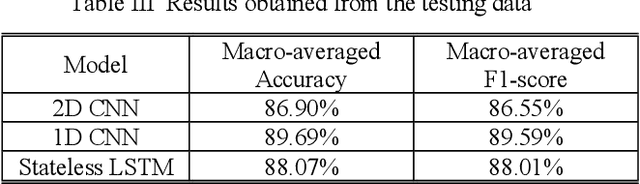

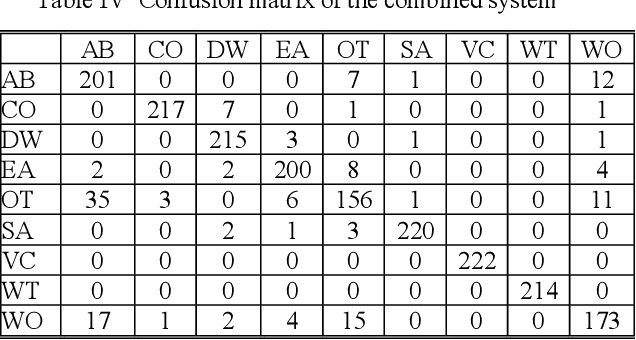

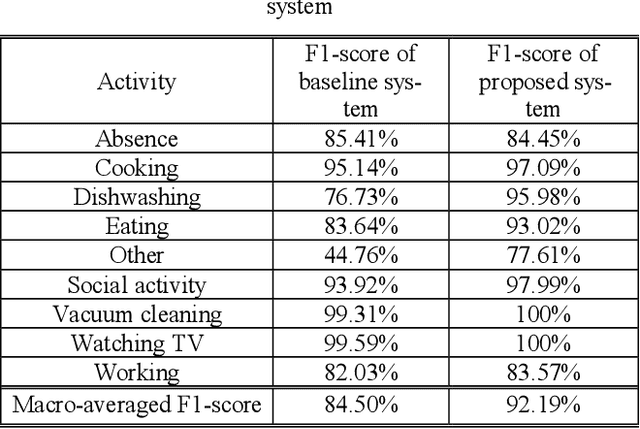

Abstract: In this paper, we propose an ensemble learning system to address Task 5 of the Detection and Classification of Acoustic Scenes and Events (DCASE) 2018 challenge. The proposed system consists of three different models, based on convolutional neural networks and long short-term memory recurrent neural networks. Using features such as spectrograms and mel-frequency cepstral coefficients extracted from different channels, the proposed system can classify different domestic activities effectively. Experimental results on the provided development dataset show that an F1-score of 92.19% can be achieved. Compared with the baseline system, our proposed system improves the F1-score by 7.69%.

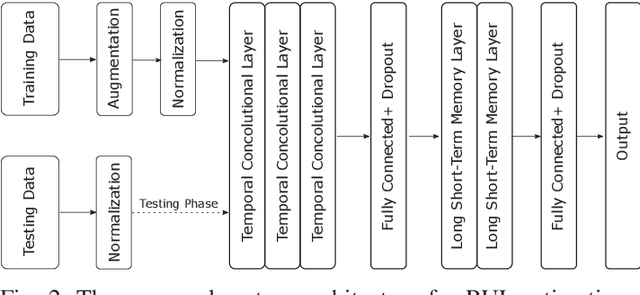

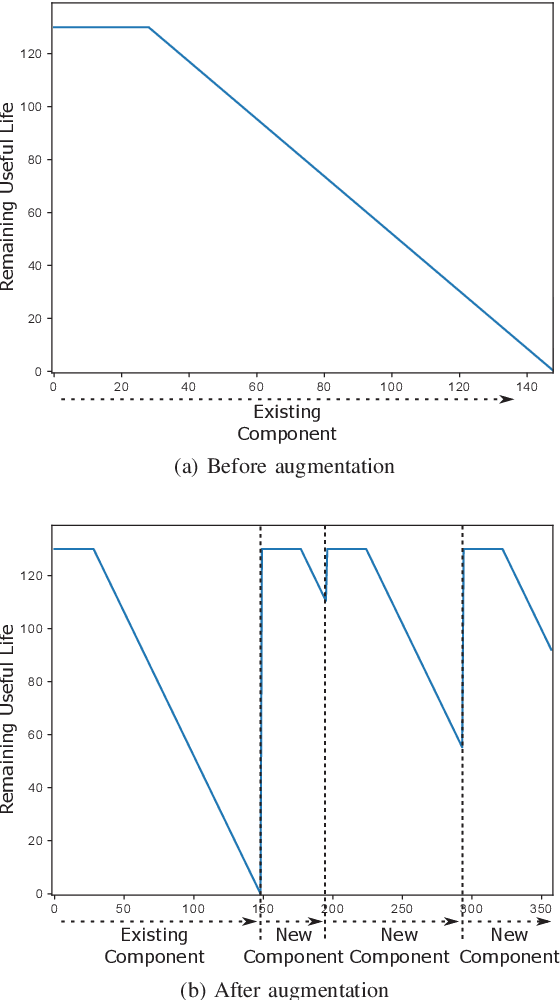

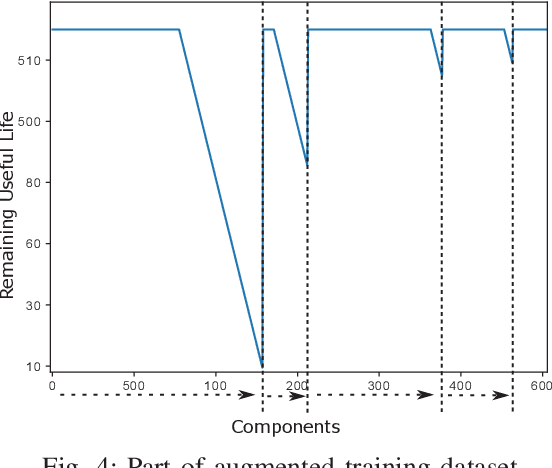

Temporal Convolutional Memory Networks for Remaining Useful Life Estimation of Industrial Machinery

Oct 12, 2018

Abstract: Accurately estimating the remaining useful life (RUL) of industrial machinery is beneficial in many real-world applications. Estimation techniques have mainly used linear models or neural-network-based approaches that focus on short-term time dependencies. This paper introduces a system model that incorporates temporal convolutions with both long-term and short-term time dependencies. The proposed network learns salient features and complex temporal variations in sensor values, and predicts the RUL. A data augmentation method is used to increase accuracy. The proposed method is compared with several state-of-the-art algorithms on publicly available datasets. It shows promising results, with superior results on datasets obtained from complex environments.
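For readers unfamiliar with temporal convolutions, the sketch below slides a small kernel along the time axis of a multi-sensor window, producing one feature value per time position. It is a minimal sketch of the basic operation only, not the network described in the abstract; the function name, the kernel, and the sensor values are hypothetical.

```python
def temporal_conv1d(window, kernel):
    """Valid 1-D convolution (cross-correlation form) along the time axis.

    window: list of time steps, each a list of sensor readings
    kernel: list of k rows, each a list of per-sensor weights
    returns: list of len(window) - k + 1 feature values
    """
    k = len(kernel)
    out = []
    for t in range(len(window) - k + 1):
        acc = 0.0
        for dt in range(k):
            # Weight every sensor reading at time step t + dt.
            for x, w in zip(window[t + dt], kernel[dt]):
                acc += x * w
        out.append(acc)
    return out


if __name__ == "__main__":
    # Two sensors over four time steps; the kernel takes the first
    # difference of sensor 0 and ignores sensor 1.
    readings = [[1, 0], [2, 0], [3, 0], [4, 0]]
    diff_kernel = [[-1, 0], [1, 0]]
    print(temporal_conv1d(readings, diff_kernel))
```

A learned network would stack many such kernels and train their weights, but even this fixed difference kernel shows how a convolution over time responds to the local trend in a sensor signal.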
