Kwang-Ting

Tim

One-Shot Imitation Filming of Human Motion Videos

Dec 23, 2019

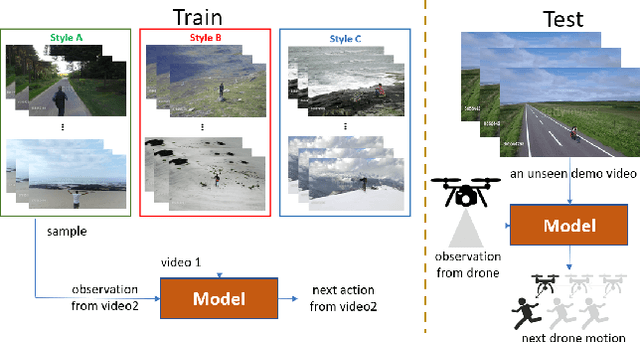

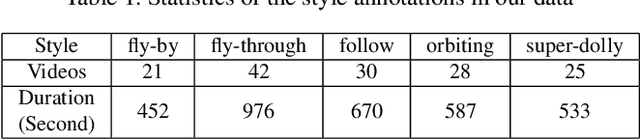

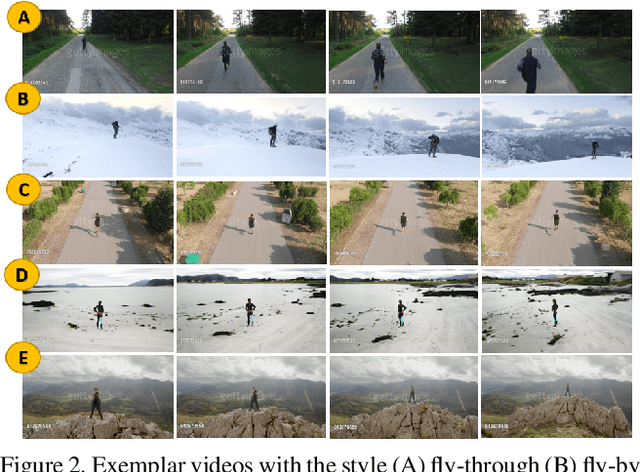

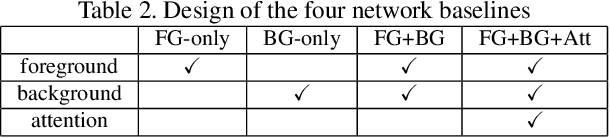

Abstract: Imitation learning has been applied to mimic the operation of a human cameraman in several autonomous cinematography systems. To imitate different filming styles, existing methods train multiple models, each of which handles one particular style and requires a significant number of training samples. As a result, existing methods can hardly generalize to unseen styles. In this paper, we propose a framework that can imitate a filming style after "seeing" only a single demonstration video of that style, i.e., one-shot imitation filming. This is enabled by two key techniques: 1) extracting features of the filming style from the demo video, and 2) transferring the filming style from the demo video to the new situation. We implement the approach with a deep neural network and deploy it on a real six-degrees-of-freedom (6-DOF) drone cinematography system by first predicting future camera motions and then converting them into the drone's control commands via an odometer. Our experimental results on extensive datasets and showcases exhibit significant improvements of our approach over conventional baselines, and show that our approach can successfully mimic footage with an unseen style.
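The last step of the pipeline, turning predicted camera motion into drone commands with the help of odometry, can be illustrated with a minimal sketch. The abstract does not specify the controller, so the proportional law below, the `Pose6` type, the `velocity_command` function, and its `gain`, `max_speed`, and `max_rate` parameters are all hypothetical. The sketch also assumes that the predicted pose and the odometer pose are expressed in the same world frame, with orientation given as Euler angles in radians.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose6:
    """Hypothetical 6-DOF pose: position in metres, Euler angles in radians."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float


def wrap_angle(a: float) -> float:
    """Wrap an angle difference into [-pi, pi) so the drone turns the short way."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def clamp(v: float, limit: float) -> float:
    """Limit v to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, v))


def velocity_command(predicted: Pose6, odom: Pose6, dt: float, gain: float = 1.0,
                     max_speed: float = 2.0, max_rate: float = 1.0) -> Tuple[float, ...]:
    """Assumed proportional law: command the rates that would move the odometer pose
    onto the predicted camera pose within one control step of dt seconds.
    Returns (vx, vy, vz, roll_rate, pitch_rate, yaw_rate)."""
    lin_err = [predicted.x - odom.x, predicted.y - odom.y, predicted.z - odom.z]
    ang_err = [wrap_angle(predicted.roll - odom.roll),
               wrap_angle(predicted.pitch - odom.pitch),
               wrap_angle(predicted.yaw - odom.yaw)]
    velocities = [clamp(gain * e / dt, max_speed) for e in lin_err]
    rates = [clamp(gain * e / dt, max_rate) for e in ang_err]
    return tuple(velocities + rates)
```

For example, `velocity_command(Pose6(1, 0, 0, 0, 0, 0), Pose6(0, 0, 0, 0, 0, 0), dt=1.0)` asks for 1 m/s along x and no rotation. A real system would normally also rotate the linear command into the drone's body frame before sending it to the flight controller; that conversion is omitted here.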

REDBEE: A Visual-Inertial Drone System for Real-Time Moving Object Detection

Dec 26, 2017

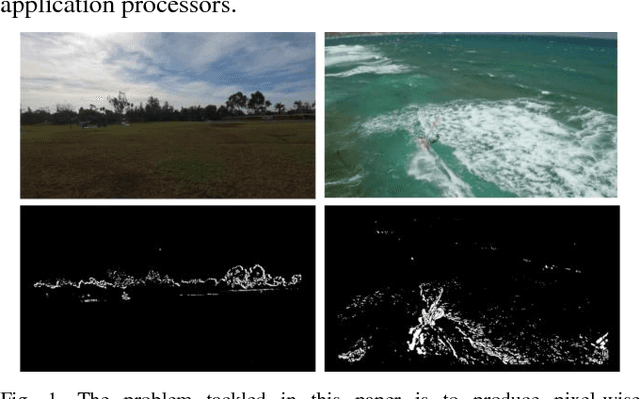

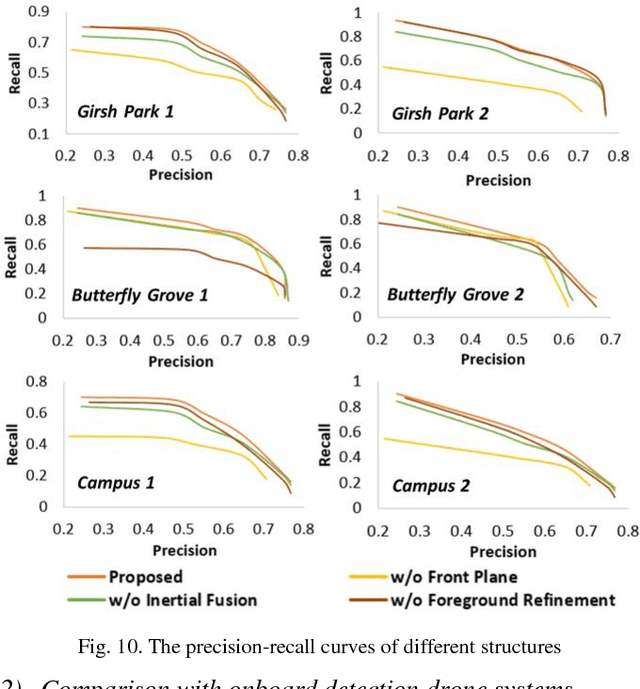

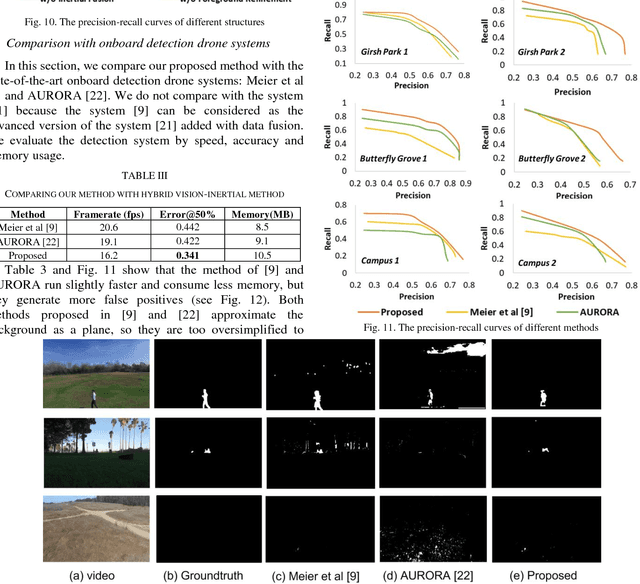

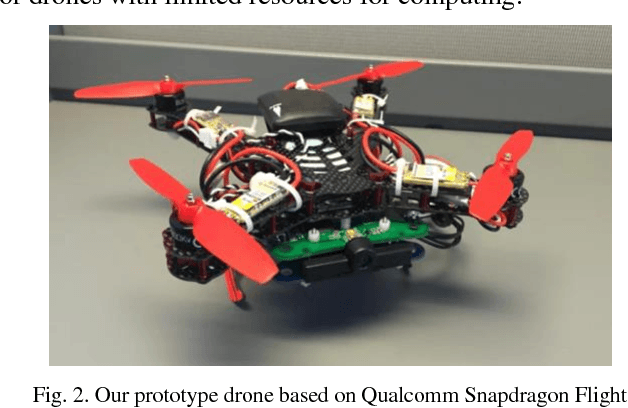

Abstract: Aerial surveillance and monitoring demand motion detection from a moving camera that is both real-time and robust. Most existing techniques for drones involve sending video data streams back to a ground station equipped with a high-end desktop computer or server. These methods share one major drawback: data transmission is subject to considerable delay and possible corruption. Onboard computation can not only overcome the data corruption problem but also increase the range of motion. Unfortunately, due to drones' limited weight-bearing capacity, equipping them with high-performance computing hardware is not feasible. Therefore, a motion detection system that achieves real-time performance and high accuracy on drones with limited computing power is highly desirable. In this paper, we propose REDBEE, a visual-inertial drone system for real-time motion detection that helps overcome the challenges of shooting scenes with strong parallax and dynamic backgrounds. REDBEE can run on state-of-the-art commercial low-power application processors (e.g., the Snapdragon Flight board used in our prototype drone) and achieves real-time performance with high detection accuracy. REDBEE handles scenes with strong parallax through inertial-aided dual-plane homography estimation, and handles scenes with dynamic backgrounds by distinguishing the moving targets with a probabilistic model based on spatial, temporal, and entropy consistency. Experiments demonstrate that our system detects moving targets in outdoor environments more accurately than state-of-the-art real-time onboard detection systems.
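The geometry behind plane-based motion compensation can be sketched with the standard plane-induced homography `H = K (R + t n^T / d) K^-1`, which maps the pixels of a plane `n . X = d` seen in one frame to the next frame when scene points move as `X2 = R X1 + t`. As `d` grows, the translation term vanishes and `H` tends to the rotation-only homography `K R K^-1`, which is the part a gyroscope can supply directly. The abstract does not describe how REDBEE chooses, estimates, or combines its two planes, so the pure-Python helpers below (`plane_induced_homography`, `min_residual`, and the others), the gyroscope-derived `R`, and the idea of flagging pixels that no candidate plane explains are illustrative assumptions, not the paper's implementation.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rot_z(theta: float) -> Mat3:
    """Rotation about the optical axis. In an inertial-aided pipeline, R would be
    obtained by integrating gyroscope rates between frames (assumed here)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def intrinsics(f: float, cx: float, cy: float) -> Mat3:
    """Pinhole camera matrix K with square pixels and zero skew."""
    return [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]


def intrinsics_inv(f: float, cx: float, cy: float) -> Mat3:
    """Closed-form inverse of the pinhole K above."""
    return [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]


def plane_induced_homography(f: float, cx: float, cy: float, R: Mat3,
                             t: Sequence[float], n: Sequence[float], d: float) -> Mat3:
    """H = K (R + t n^T / d) K^-1 for the plane n . X = d in frame-1 coordinates."""
    m = [[R[i][j] + t[i] * n[j] / d for j in range(3)] for i in range(3)]
    return matmul(matmul(intrinsics(f, cx, cy), m), intrinsics_inv(f, cx, cy))


def warp(H: Mat3, u: float, v: float) -> Tuple[float, float]:
    """Apply a homography to pixel (u, v) and dehomogenise."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w


def project(f: float, cx: float, cy: float, X: Sequence[float]) -> Tuple[float, float]:
    """Project a camera-frame 3D point to pixel coordinates."""
    return f * X[0] / X[2] + cx, f * X[1] / X[2] + cy


def min_residual(homographies: Sequence[Mat3], p1: Tuple[float, float],
                 p2: Tuple[float, float]) -> float:
    """Smallest pixel error of p1 -> p2 over the candidate plane homographies.
    A large value means no candidate plane explains the motion (hypothetical cue)."""
    return min(math.dist(warp(H, *p1), p2) for H in homographies)
```

In this sketch, pixels whose residual stays large under every candidate plane are only motion candidates. Separating true moving targets from dynamic background is the job of the probabilistic model based on spatial, temporal, and entropy consistency mentioned in the abstract, which is not sketched here.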

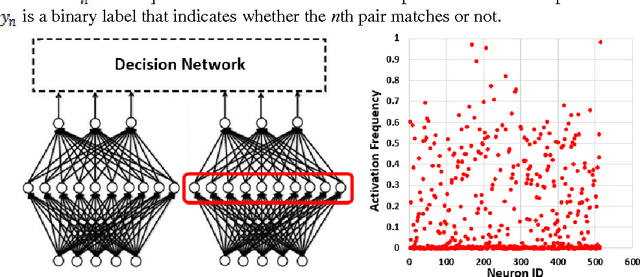

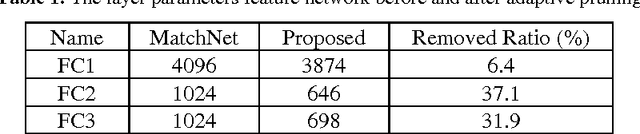

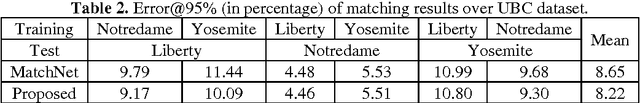

Local Feature Descriptor Learning with Adaptive Siamese Network

Jun 16, 2017

Abstract: Although recent progress in deep neural networks has led to the development of learnable local feature descriptors, there is no explicit answer to how large such a network needs to be. Specifically, the local feature is represented in a low-dimensional space, so the neural network should have a more compact structure. The small networks required for local feature descriptor learning may be sensitive to initial conditions and learning parameters and are more likely to become trapped in local minima. To address this problem, we introduce an adaptive pruning Siamese architecture, based on neuron activation, to learn local feature descriptors; this makes the network more computationally efficient and yields an improved recognition rate over more complex networks. Our experiments demonstrate that our learned local feature descriptors outperform the state-of-the-art methods in patch matching.
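A toy version of activation-based pruning in a Siamese setup is sketched below in pure Python. Both patches of a pair pass through one shared branch (the defining property of a Siamese network), and output neurons whose mean activation over a batch falls below a fraction of the strongest neuron's mean are removed. The one-layer `SharedBranch`, the relative-threshold rule `rel_threshold`, and the use of a contrastive loss are assumptions for illustration; the abstract does not state the paper's pruning criterion, schedule, or training loss.

```python
import math
import random
from typing import List, Sequence


class SharedBranch:
    """Hypothetical one-layer descriptor network whose weights are shared by both Siamese inputs."""

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(in_dim)
        # One weight row per output neuron.
        self.weights: List[List[float]] = [
            [rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(out_dim)
        ]

    def activations(self, x: Sequence[float]) -> List[float]:
        """ReLU output of every neuron for one flattened patch."""
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.weights]

    def describe(self, x: Sequence[float]) -> List[float]:
        """L2-normalised descriptor (left unnormalised if all activations are zero)."""
        a = self.activations(x)
        norm = math.sqrt(sum(v * v for v in a)) or 1.0
        return [v / norm for v in a]

    def prune(self, batch: Sequence[Sequence[float]], rel_threshold: float = 0.1) -> int:
        """Remove neurons whose mean activation over `batch` is below
        rel_threshold times the largest mean; returns the remaining width."""
        means = [0.0] * len(self.weights)
        for x in batch:
            for i, a in enumerate(self.activations(x)):
                means[i] += a / len(batch)
        cutoff = rel_threshold * max(means)
        self.weights = [row for row, m in zip(self.weights, means) if m >= cutoff]
        return len(self.weights)


def descriptor_distance(branch: SharedBranch, p1: Sequence[float], p2: Sequence[float]) -> float:
    """Euclidean distance between the two Siamese outputs."""
    d1, d2 = branch.describe(p1), branch.describe(p2)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(d1, d2)))


def contrastive_loss(distance: float, same: bool, margin: float = 1.0) -> float:
    """Contrastive loss: pull matching pairs together, push non-matching pairs past `margin`."""
    return distance ** 2 if same else max(0.0, margin - distance) ** 2
```

Because pruning here removes whole output neurons, it also shrinks the descriptor dimension, which is in the spirit of the abstract's point that a low-dimensional descriptor calls for a compact network.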
