Kunhan Lu

DaDiff: Domain-aware Diffusion Model for Nighttime UAV Tracking

Oct 16, 2024

Abstract: Domain adaptation is an inspiring solution to the misalignment issue of day/night image features for nighttime UAV tracking. However, the one-step adaptation paradigm is inadequate in addressing the prevalent difficulties posed by low-resolution (LR) objects when viewed from the UAVs at night, owing to the blurry edge contour and limited detail information. Moreover, these approaches struggle to perceive LR objects disturbed by nighttime noise. To address these challenges, this work proposes a novel progressive alignment paradigm, named domain-aware diffusion model (DaDiff), aligning nighttime LR object features to the daytime by virtue of progressive and stable generations. The proposed DaDiff includes an alignment encoder to enhance the detail information of nighttime LR objects, a tracking-oriented layer designed to achieve close collaboration with tracking tasks, and a successive distribution discriminator presented to distinguish different feature distributions at each diffusion timestep successively. Furthermore, an elaborate nighttime UAV tracking benchmark is constructed for LR objects, namely NUT-LR, consisting of 100 annotated sequences. Exhaustive experiments have demonstrated the robustness and feature alignment ability of the proposed DaDiff. The source code and video demo are available at https://github.com/vision4robotics/DaDiff.

Enhancing Nighttime UAV Tracking with Light Distribution Suppression

Sep 25, 2024

Abstract: Visual object tracking has boosted extensive intelligent applications for unmanned aerial vehicles (UAVs). However, the state-of-the-art (SOTA) enhancers for nighttime UAV tracking always neglect the uneven light distribution in low-light images, inevitably leading to excessive enhancement in scenarios with complex illumination. To address these issues, this work proposes a novel enhancer, i.e., LDEnhancer, enhancing nighttime UAV tracking with light distribution suppression. Specifically, a novel image content refinement module is developed to decompose the light distribution information and image content information in the feature space, allowing for the targeted enhancement of the image content information. Then this work designs a new light distribution generation module to capture light distribution effectively. The features with light distribution information and image content information are fed into the different parameter estimation modules, respectively, for the parameter map prediction. Finally, leveraging two parameter maps, an innovative interweave iteration adjustment is proposed for the collaborative pixel-wise adjustment of low-light images. Additionally, a challenging nighttime UAV tracking dataset with uneven light distribution, namely NAT2024-2, is constructed to provide a comprehensive evaluation, which contains 40 challenging sequences with over 74K frames in total. Experimental results on the authoritative UAV benchmarks and the proposed NAT2024-2 demonstrate that LDEnhancer outperforms other SOTA low-light enhancers for nighttime UAV tracking. Furthermore, real-world tests on a typical UAV platform with an NVIDIA Orin NX confirm the practicality and efficiency of LDEnhancer. The code is available at https://github.com/vision4robotics/LDEnhancer.

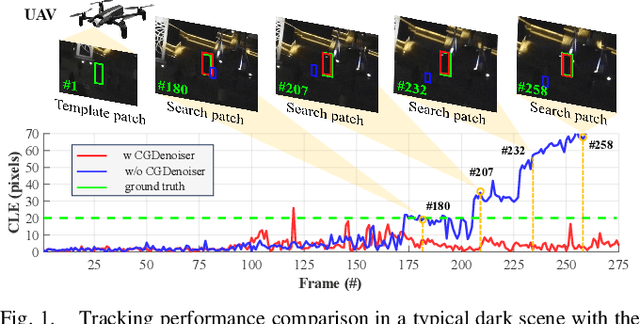

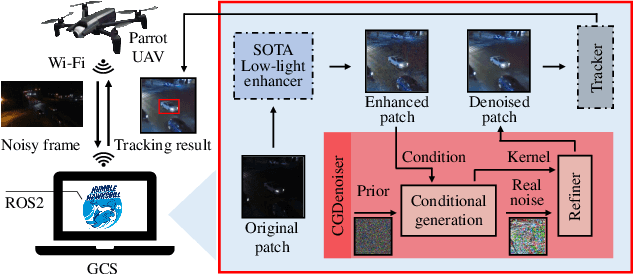

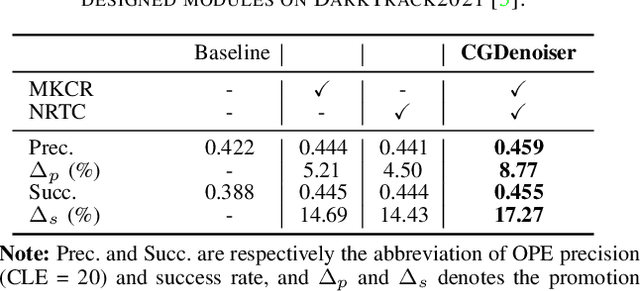

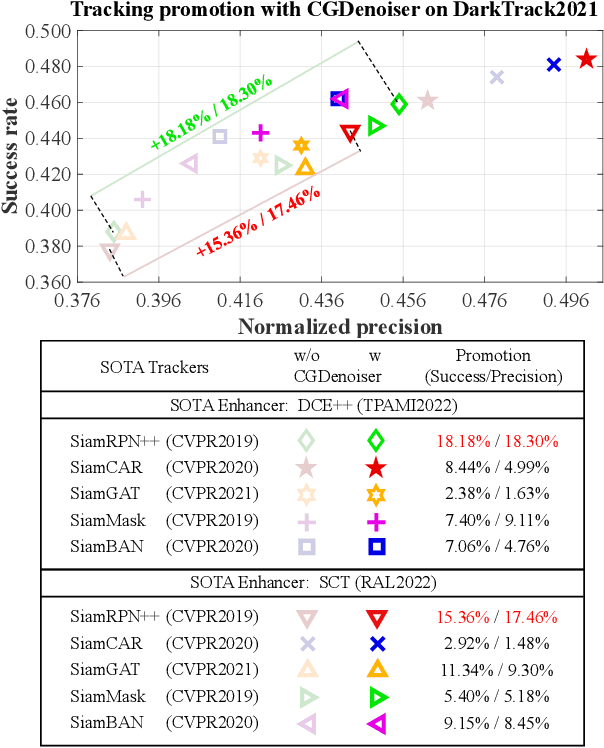

Conditional Generative Denoiser for Nighttime UAV Tracking

Sep 25, 2024

Abstract: State-of-the-art (SOTA) visual object tracking methods have significantly enhanced the autonomy of unmanned aerial vehicles (UAVs). However, in low-light conditions, the presence of irregular real noise from the environments severely degrades the performance of these SOTA methods. Moreover, existing SOTA denoising techniques often fail to meet the real-time processing requirements when deployed as plug-and-play denoisers for UAV tracking. To address this challenge, this work proposes a novel conditional generative denoiser (CGDenoiser), which breaks free from the limitations of traditional deterministic paradigms and generates the noise conditioned on the input, subsequently removing it. To better align the input dimensions and accelerate inference, a novel nested residual Transformer conditionalizer is developed. Furthermore, an innovative multi-kernel conditional refiner is designed to refine the denoised output. Extensive experiments show that CGDenoiser improves the tracking precision of the SOTA tracker by 18.18% on DarkTrack2021 while running 5.8 times faster than the second-best denoiser. Real-world tests with complex challenges also prove the effectiveness and practicality of CGDenoiser. Code, video demo and supplementary proof for CGDenoiser are now available at https://github.com/vision4robotics/CGDenoiser.

Continuity-Aware Latent Interframe Information Mining for Reliable UAV Tracking

Mar 08, 2023

Abstract: Unmanned aerial vehicle (UAV) tracking is crucial for autonomous navigation and has broad applications in robotic automation fields. However, reliable UAV tracking remains a challenging task due to various difficulties like frequent occlusion and aspect ratio change. Additionally, most existing work focuses on explicit information to improve tracking performance, ignoring potential interframe connections. To address the above issues, this work proposes a novel framework with continuity-aware latent interframe information mining for reliable UAV tracking, i.e., ClimRT. Specifically, a new efficient continuity-aware latent interframe information mining network (ClimNet) is proposed for UAV tracking, which can generate a highly effective latent frame between two adjacent frames. Besides, a novel location-continuity Transformer (LCT) is designed to fully explore continuity-aware spatial-temporal information, thereby markedly enhancing UAV tracking. Extensive qualitative and quantitative experiments on three authoritative aerial benchmarks strongly validate the robustness and reliability of ClimRT in UAV tracking performance. Furthermore, real-world tests on the aerial platform validate its practicability and effectiveness. The code and demo materials are released at https://github.com/vision4robotics/ClimRT.

Siamese Object Tracking for Unmanned Aerial Vehicle: A Review and Comprehensive Analysis

May 09, 2022

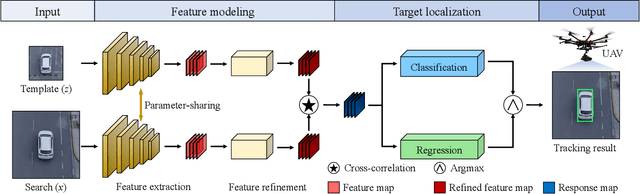

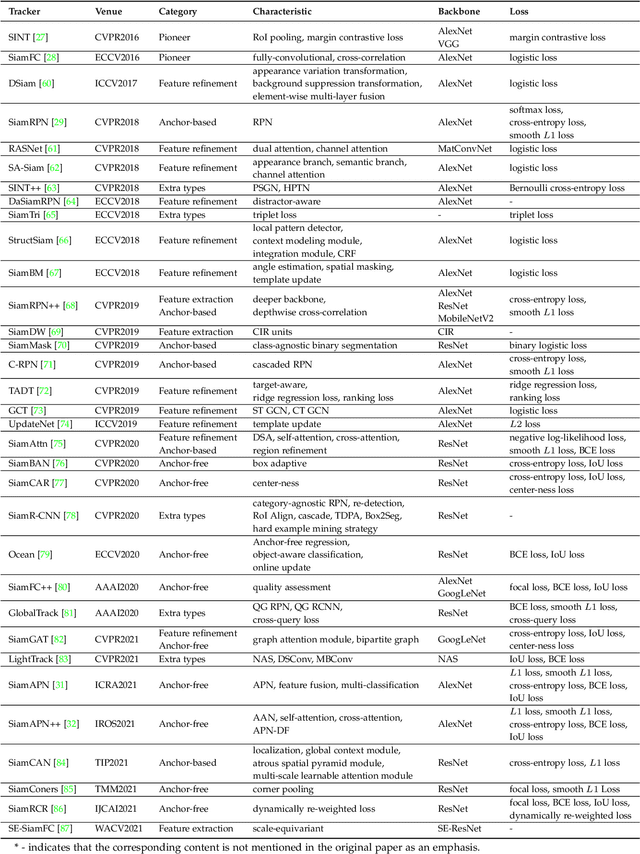

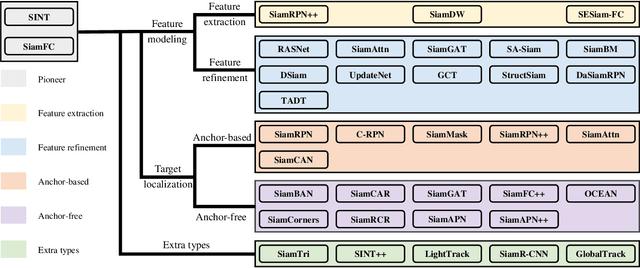

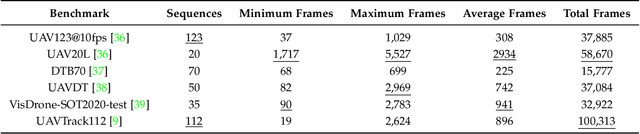

Abstract: Unmanned aerial vehicle (UAV)-based visual object tracking has enabled a wide range of applications and attracted increasing attention in the field of remote sensing because of its versatility and effectiveness. As a new force in the revolutionary trend of deep learning, Siamese networks shine in visual object tracking with their promising balance of accuracy, robustness, and speed. Thanks to the development of embedded processors and the gradual optimization of deep neural networks, Siamese trackers receive extensive research and realize preliminary combinations with UAVs. However, due to the UAV's limited onboard computational resources and the complex real-world circumstances, aerial tracking with Siamese networks still faces severe obstacles in many aspects. To further explore the deployment of Siamese networks in UAV tracking, this work presents a comprehensive review of leading-edge Siamese trackers, along with an exhaustive UAV-specific analysis based on the evaluation using a typical UAV onboard processor. Then, the onboard tests are conducted to validate the feasibility and efficacy of representative Siamese trackers in real-world UAV deployment. Furthermore, to better promote the development of the tracking community, this work analyzes the limitations of existing Siamese trackers and conducts additional experiments represented by low-illumination evaluations. In the end, prospects for the development of Siamese UAV tracking in the remote sensing field are discussed. The unified framework of leading-edge Siamese trackers, i.e., code library, and the results of their experimental evaluations are available at https://github.com/vision4robotics/SiameseTracking4UAV.
