Kuan-Ting Chen

Team NYCU at Defactify4: Robust Detection and Source Identification of AI-Generated Images Using CNN and CLIP-Based Models

Mar 13, 2025

Abstract:With the rapid advancement of generative AI, AI-generated images have become increasingly realistic, raising concerns about creativity, misinformation, and content authenticity. Detecting such images and identifying their source models has become a critical challenge in ensuring the integrity of digital media. This paper tackles both the detection of AI-generated images and the identification of their source models using CNN and CLIP-ViT classifiers. For the CNN-based classifier, we use EfficientNet-B0 as the backbone and feed it RGB channels, frequency features, and reconstruction errors; for CLIP-ViT, we adopt a pretrained CLIP image encoder to extract image features and an SVM to perform classification. Evaluated on the Defactify 4 dataset, our methods demonstrate strong performance in both tasks, with CLIP-ViT showing superior robustness to image perturbations. Compared to baselines such as AEROBLADE and OCC-CLIP, our approach achieves competitive results. Notably, our method ranked in the top 3 overall in the Defactify 4 competition, highlighting its effectiveness and generalizability. All of our implementations can be found at https://github.com/uuugaga/Defactify_4
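The second stage of the CLIP-ViT pipeline described above (an SVM on top of precomputed image features) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the two-dimensional vectors are toy stand-ins for CLIP embeddings, the SVM is linear and trained by plain subgradient descent on the hinge loss, and the names `train_linear_svm` and `predict` are hypothetical. The abstract does not state the kernel, hyperparameters, or library the authors used; their actual code is in the linked repository.

```python
from typing import List, Sequence, Tuple


def train_linear_svm(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    lam: float = 0.01,
    lr: float = 0.1,
    epochs: int = 200,
) -> Tuple[List[float], float]:
    """Full-batch subgradient descent on the L2-regularized hinge loss.

    X holds feature vectors (toy stand-ins for image embeddings);
    y holds labels in {-1, +1}.
    """
    n, dim = len(X), len(X[0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        # Gradient of the regularizer (lam / 2) * ||w||^2.
        grad_w = [lam * wi for wi in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1.0:  # Only margin violators contribute to the hinge term.
                for j in range(dim):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return +1 (treated here as 'AI-generated') or -1 ('real')."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Toy, linearly separable "embeddings": label -1 = real, +1 = AI-generated.
features = [
    [1.0, 0.2], [0.9, 0.1], [0.8, 0.3],
    [0.2, 1.0], [0.1, 0.9], [0.3, 0.8],
]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(features, labels)
print(predict(w, b, [0.95, 0.15]), predict(w, b, [0.15, 0.95]))
```

In a realistic setting the feature vectors would come from a frozen image encoder, and an off-the-shelf SVM implementation would likely replace the hand-written training loop; source identification would additionally need a multi-class scheme such as one-vs-rest.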

TailorGAN: Making User-Defined Fashion Designs

Jan 20, 2020

Abstract:Attribute editing has become an important and emerging topic in computer vision. In this paper, we consider the following task: given a reference garment image A and another image B with a target attribute (collar/sleeve), generate a photo-realistic image that combines the texture from reference A with the new attribute from reference B. The highly convoluted attributes and the lack of paired data are the main challenges of this task. To overcome these limitations, we propose a novel self-supervised model that synthesizes garment images with disentangled attributes (e.g., collar and sleeves) without paired data. Our method consists of a reconstruction learning step and an adversarial learning step. The model learns texture and location information through reconstruction learning, and adversarial learning then generalizes its capability to single-attribute manipulation. We also compose a new dataset, named GarmentSet, with landmark annotations of collars and sleeves on clean garment images. Extensive experiments on this dataset and on real-world samples demonstrate that our method synthesizes much better results than state-of-the-art methods in both quantitative and qualitative comparisons.

* fashion

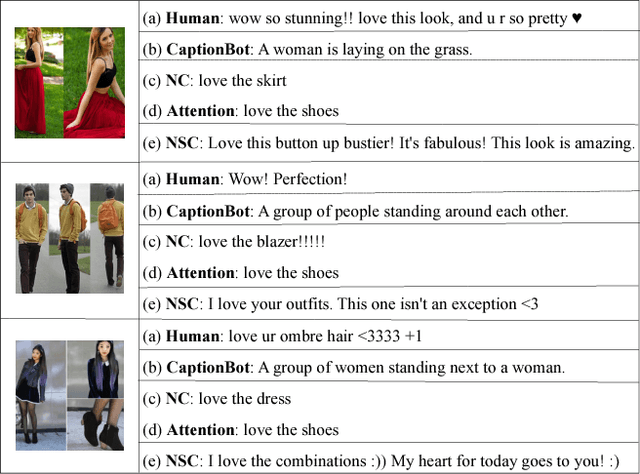

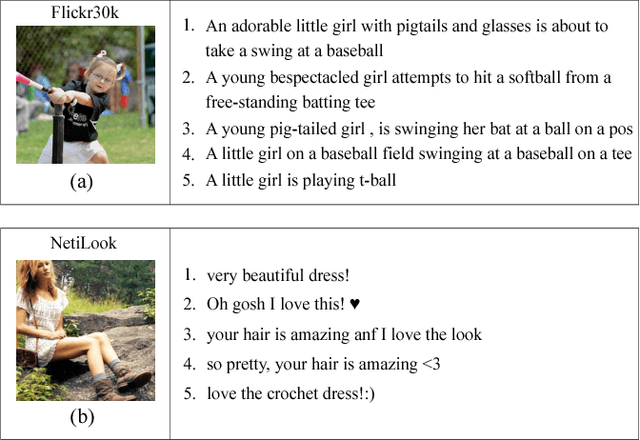

Netizen-Style Commenting on Fashion Photos: Dataset and Diversity Measures

Jan 31, 2018

Abstract:Recently, deep neural network models have achieved promising results in image captioning tasks. Yet the "vanilla" sentences generated by current works, which describe only shallow appearances (e.g., types, colors), do not match netizen style and therefore lack engagement, context, and user intention. To tackle this problem, we propose Netizen Style Commenting (NSC), which automatically generates characteristic comments on a user-contributed fashion photo. We aim to render the comments in a vivid "netizen" style that reflects the culture of a designated social community, in the hope of facilitating more engagement with users. In this work, we design a novel framework that consists of three major components: (1) We construct a large-scale clothing dataset named NetiLook, which contains 300K posts (photos) with 5M comments, to discover netizen-style comments. (2) We propose three unique measures to estimate the diversity of comments. (3) We bring diversity by marrying topic models with neural networks to make up for the insufficiency of conventional image captioning works. Experimenting on Flickr30k and our NetiLook dataset, we demonstrate that our proposed approaches benefit fashion photo commenting and improve image captioning tasks in both accuracy and diversity.

When Fashion Meets Big Data: Discriminative Mining of Best Selling Clothing Features

Feb 22, 2017

Abstract:With the prevalence of e-commerce websites and the ease of online shopping, consumers face a huge number of product options. Undeniably, shopping is one of the most essential activities in our society, and studying consumers' shopping behavior is important for industry as well as for sociology and psychology. Indisputably, one of the most popular e-commerce categories is the clothing business. This creates a need to analyze popular and attractive clothing features, which could further boost many emerging applications, such as clothing recommendation and advertising. In this work, we design a novel system that consists of three major components: 1) exploring and organizing a large-scale clothing dataset from an online shopping website, 2) pruning and extracting images of best-selling products from clothing item data and user transaction history, and 3) utilizing a machine-learning-based approach to discover fine-grained clothing attributes as the representative and discriminative characteristics of popular clothing style elements. Through experiments on a large-scale online clothing shopping dataset, we demonstrate the effectiveness of our proposed system and obtain useful insights into clothing consumption trends and profitable clothing features.
