Kristine Aavild Juhl

Signed Distance Field based Segmentation and Statistical Shape Modelling of the Left Atrial Appendage

Feb 12, 2024

Abstract: Patients with atrial fibrillation have a 5- to 7-fold increased risk of having an ischemic stroke. In these cases, the most common site of thrombus localization is inside the left atrial appendage (LAA), and studies have shown a correlation between the LAA shape and the risk of ischemic stroke. These studies make use of manual measurement and qualitative assessment of shape and are therefore prone to large inter-observer discrepancies, which may explain the contradictions between the conclusions in different studies. We argue that quantitative shape descriptors are necessary to robustly characterize LAA morphology and relate it to other functional parameters and stroke risk. Deep Learning methods are becoming widely available for segmenting cardiovascular structures from high-resolution images such as computed tomography (CT), but only a few have been tested for LAA segmentation. Furthermore, the majority of segmentation algorithms produce non-smooth 3D models that are not ideal for further processing, such as statistical shape analysis or computational fluid modelling. In this paper we present a fully automatic pipeline for image segmentation, mesh model creation and statistical shape modelling of the LAA. The LAA anatomy is implicitly represented as a signed distance field (SDF), which is directly regressed from the CT image using Deep Learning. The SDF is further used to register the LAA shapes to a common template and to build a statistical shape model (SSM). Based on 106 automatically segmented LAAs, the built SSM reveals that the LAA shape can be quantified using approximately 5 PCA modes and allows the identification of two distinct shape clusters corresponding to the so-called chicken-wing and non-chicken-wing morphologies.
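As a rough illustration of what "quantified using approximately 5 PCA modes" means, the sketch below fits a PCA-based shape model to shape vectors that are assumed to be already registered and in point-to-point correspondence. It is not the paper's pipeline: the data are synthetic random vectors rather than LAA meshes, and the names `fit_shape_model`, `synthesize` and the 95% variance threshold are hypothetical choices made for this example.

```python
import numpy as np


def fit_shape_model(shapes, variance_to_keep=0.95):
    """Fit a PCA shape model to an (n_shapes, n_features) array.

    Assumes the shapes are already aligned and in correspondence.
    Returns the mean shape, the retained modes (one per row), their
    variances, and the cumulative explained-variance curve.
    """
    mean_shape = shapes.mean(axis=0)
    centered = shapes - mean_shape
    # SVD of the centered data: rows of vt are the principal modes.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values**2 / (shapes.shape[0] - 1)
    explained = np.cumsum(variances) / variances.sum()
    # Smallest number of modes whose cumulative variance reaches the threshold.
    n_modes = int(np.searchsorted(explained, variance_to_keep)) + 1
    n_modes = min(n_modes, len(variances))
    return mean_shape, vt[:n_modes], variances[:n_modes], explained


def synthesize(mean_shape, modes, variances, coeffs):
    """Generate a shape from mode coefficients given in standard deviations."""
    coeffs = np.asarray(coeffs, dtype=float)
    return mean_shape + (coeffs * np.sqrt(variances)) @ modes


# Synthetic stand-in data: 106 "shapes" driven by 5 latent factors plus noise.
rng = np.random.default_rng(0)
basis, _ = np.linalg.qr(rng.normal(size=(300, 5)))  # orthonormal columns
latent = rng.normal(size=(106, 5)) * np.array([5.0, 4.0, 3.0, 2.0, 1.0])
shapes = latent @ basis.T + 0.01 * rng.normal(size=(106, 300))

mean_shape, modes, variances, explained = fit_shape_model(shapes)
print("modes retained:", modes.shape[0])

# Move two standard deviations along the first mode.
coeffs = np.zeros(modes.shape[0])
coeffs[0] = 2.0
new_shape = synthesize(mean_shape, modes, variances, coeffs)
```

Projecting each shape onto the retained modes gives a low-dimensional coefficient vector per shape, which is the kind of representation on which a clustering into shape groups could then be run.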

EyeLoveGAN: Exploiting domain-shifts to boost network learning with cycleGANs

Mar 10, 2022

Abstract: This paper presents our contribution to the REFUGE challenge 2020. The challenge consisted of three tasks based on a dataset of retinal images: segmentation of optic disc and cup, classification of glaucoma, and localization of fovea. We propose employing convolutional neural networks for all three tasks. Segmentation is performed using a U-Net, classification is performed by a pre-trained InceptionV3 network, and fovea detection is performed by employing a stacked hourglass network for heatmap prediction. The challenge dataset contains images from three different data sources. To enhance performance, cycleGANs were utilized to create a domain shift between the data sources. These cycleGANs move images across domains, thus creating artificial images which can be used for training.

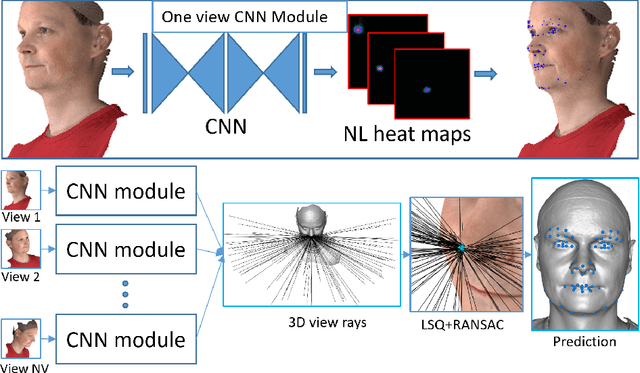

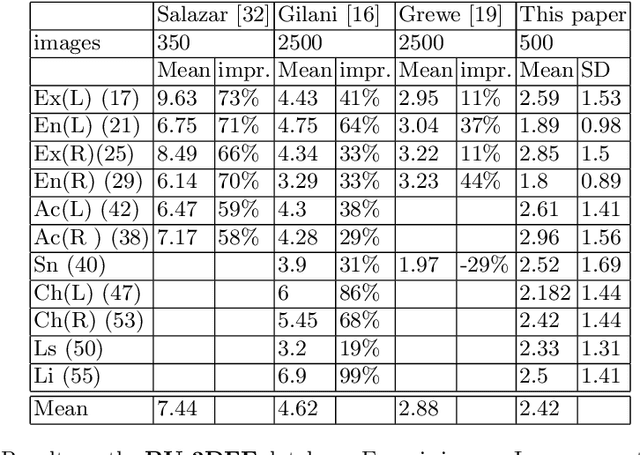

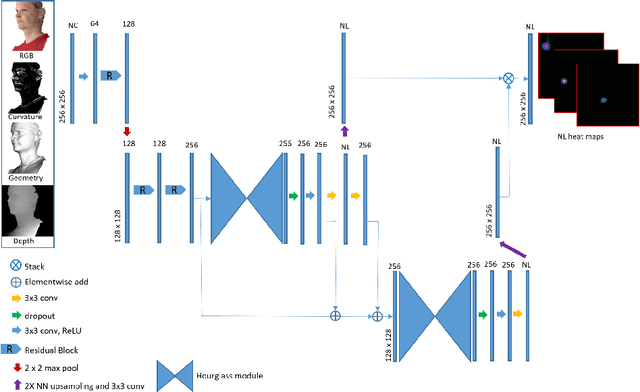

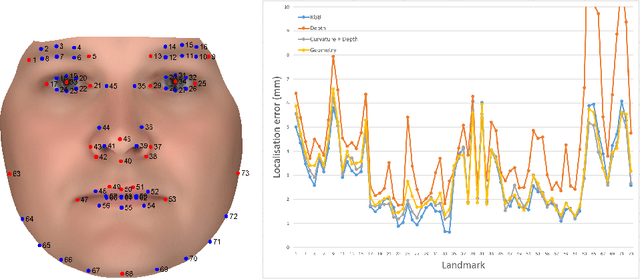

Multi-view consensus CNN for 3D facial landmark placement

Oct 14, 2019

Abstract: The rapid increase in the availability of accurate 3D scanning devices has moved facial recognition and analysis into the 3D domain. 3D facial landmarks are often used as a simple measure of anatomy, and it is crucial to have accurate algorithms for automatic landmark placement. The current state-of-the-art approaches have yet to gain from the dramatic increase in performance reported in human pose tracking and 2D facial landmark placement due to the use of deep convolutional neural networks (CNN). Development of deep learning approaches for 3D meshes has given rise to the new subfield called geometric deep learning, where one topic is the adaptation of meshes for the use of deep CNNs. In this work, we demonstrate how methods derived from geometric deep learning, namely multi-view CNNs, can be combined with recent advances in human pose tracking. The method finds 2D landmark estimates and propagates this information to 3D space, where a consensus method determines the accurate 3D face landmark position. We utilise the method on a standard 3D face dataset and show that it outperforms current methods by a large margin. Further, we demonstrate how models trained on 3D range scans can be used to accurately place anatomical landmarks in magnetic resonance images.

* This is a pre-print of an article published in the proceedings of the Asian Conference on Computer Vision 2018 (LNCS 11361). The final authenticated version is available online at: https://doi.org/10.1007/978-3-030-20887-5_44
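The abstract above describes propagating 2D landmark estimates from several views into 3D, where a consensus step fixes the final position. The abstract does not specify that step, so the sketch below shows one plausible choice under stated assumptions: each view contributes a ray (camera origin plus direction through the 2D estimate), and the landmark is taken as the point minimising the summed squared distance to all rays. The function name `closest_point_to_rays` and the synthetic cameras are hypothetical, and outlier rejection is omitted.

```python
import numpy as np


def closest_point_to_rays(origins, directions):
    """Least-squares 3D point closest to a set of rays (treated as lines).

    origins, directions: (n_rays, 3) arrays. Directions need not be
    normalised. Requires at least two non-parallel rays.
    """
    origins = np.asarray(origins, dtype=float)
    d = np.asarray(directions, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    # For each ray, I - d d^T projects onto the plane orthogonal to d,
    # so ||(I - d d^T)(x - o)|| is the distance from x to that ray.
    projectors = np.eye(3)[None, :, :] - d[:, :, None] * d[:, None, :]
    a_matrix = projectors.sum(axis=0)
    b_vector = np.einsum("nij,nj->i", projectors, origins)
    # Normal equations: (sum P_i) x = sum P_i o_i
    return np.linalg.solve(a_matrix, b_vector)


# Synthetic example: eight views looking at one landmark, with noisy rays.
rng = np.random.default_rng(1)
true_landmark = np.array([0.1, -0.2, 0.5])
camera_origins = 3.0 * rng.normal(size=(8, 3))
ray_directions = (true_landmark - camera_origins) + 0.01 * rng.normal(size=(8, 3))

estimate = closest_point_to_rays(camera_origins, ray_directions)
print("estimated landmark:", estimate)
print("error:", np.linalg.norm(estimate - true_landmark))
```

A plain least-squares solution is sensitive to a single badly wrong view, so a practical system would likely pair it with some form of outlier handling before the final solve.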
