Kory W Mathewson

Over-communicate no more: Situated RL agents learn concise communication protocols

Nov 02, 2022

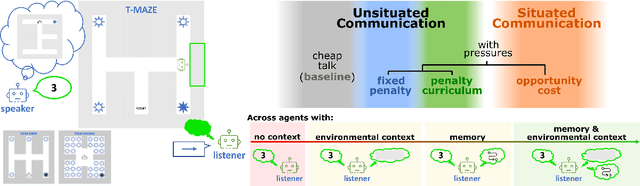

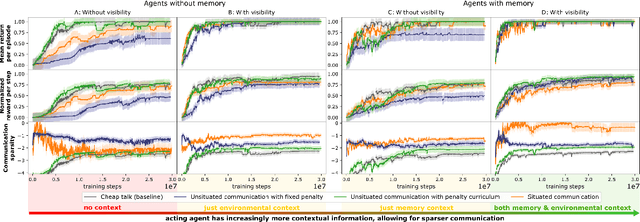

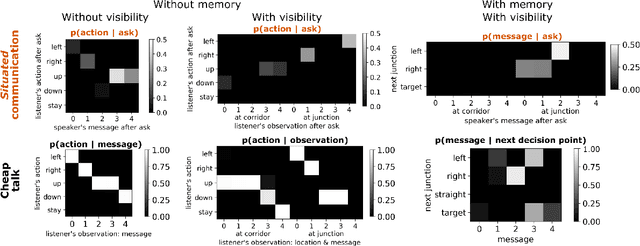

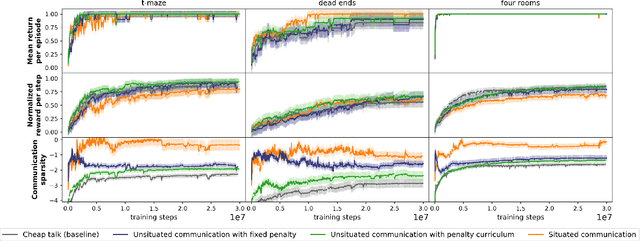

Abstract: While it is known that communication facilitates cooperation in multi-agent settings, it is unclear how to design artificial agents that can learn to communicate with each other effectively and efficiently. Much research on communication emergence uses reinforcement learning (RL) and explores unsituated communication in one-step referential tasks: the tasks are not temporally interactive and lack the time pressures typically present in natural communication. In these settings, agents may successfully learn to communicate, but they do not learn to exchange information concisely; instead, they tend towards over-communication and an inefficient encoding. Here, we explore situated communication in a multi-step task, where the acting agent has to forgo an environmental action to communicate. This imposes an opportunity cost on communication and mimics the real-world pressure of passing time. We compare communication emergence under this pressure against learning to communicate with a cost on articulation effort, implemented as a per-message penalty (fixed and progressively increasing). We find that while all tested pressures can disincentivise over-communication, situated communication does so most effectively and, unlike the cost on effort, does not negatively impact emergence. Implementing an opportunity cost on communication in a temporally extended environment is a step towards embodiment, and might be a precondition for incentivising efficient, human-like communication.
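The difference between the pressures compared above can be illustrated with a toy reward calculation. This is a minimal sketch under our own assumptions, not the paper's environment or training code. `ToyTask`, the `MOVE`/`MESSAGE` action labels, the horizon, the assumption that messages take no time step in the effort-cost setting, and the linear schedule used for the "progressively increasing" penalty are all hypothetical. In particular, the abstract does not say whether the penalty grows over training or within an episode.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical action labels: each step is either an environment move or a message.
MOVE = "move"
MESSAGE = "message"


@dataclass
class ToyTask:
    """Toy stand-in for a multi-step task (an assumption, not the paper's environment)."""
    goal: int = 3             # environment moves needed to finish the task
    horizon: int = 5          # time limit, in steps
    task_reward: float = 1.0


def situated_return(task: ToyTask, actions: List[str]) -> float:
    """Opportunity cost: every message uses up a step that could have been a move."""
    moves = 0
    for action in actions[: task.horizon]:  # steps past the time limit are ignored
        if action == MOVE:
            moves += 1
            if moves >= task.goal:
                return task.task_reward
    # No explicit message penalty; running out of time is the only cost.
    return 0.0


def effort_cost_return(task: ToyTask, actions: List[str], penalty: float) -> float:
    """Cost on articulation effort (assumed here): messages take no time step,
    but each one subtracts `penalty` from the return."""
    moves = sum(1 for a in actions if a == MOVE)
    messages = sum(1 for a in actions if a == MESSAGE)
    solved = min(moves, task.horizon) >= task.goal
    return (task.task_reward if solved else 0.0) - penalty * messages


def progressive_penalty(training_step: int, slope: float, max_penalty: float) -> float:
    """Hypothetical schedule: per-message penalty grows linearly with training
    progress and is capped at `max_penalty`."""
    return min(max_penalty, slope * training_step)
```

For a "chatty" policy `[MESSAGE] * 3 + [MOVE] * 3`, `situated_return(ToyTask(), chatty)` is `0.0`, because the messages consume the time needed to finish the task, while `effort_cost_return(ToyTask(), chatty, 0.1)` is about `0.7`: the task is still solved and only a per-message fee is paid. The sketch only shows where each pressure enters the reward; it says nothing about which one leads to better emergent protocols, which is the question the paper studies empirically.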

#ContextMatters: Advantages and Limitations of Using Machine Learning to Support Women in Politics

Oct 10, 2021

Abstract: The United Nations identified gender equality as a Sustainable Development Goal in 2015, recognizing the underrepresentation of women in politics as a specific barrier to achieving gender equality. Political systems around the world experience gender inequality across all levels of elected government, as fewer women than men run for office. This is due in part to online abuse, particularly on social media platforms like Twitter, where women seeking or holding power tend to be targeted with more toxic maltreatment than their male counterparts. In this paper, we present reflections on ParityBOT, the first natural language processing-based intervention designed to improve online discourse for women in politics at scale. Deployed across elections in Canada, the United States and New Zealand, ParityBOT was used to analyse and classify more than 12 million tweets directed at women candidates and to counter toxic tweets with supportive ones. From these elections we present three case studies highlighting the current limitations of, and future research and application opportunities for, using a natural language processing-based system to detect online toxicity, specifically with regard to contextually important microaggressions. We examine the rate of false negatives, where ParityBOT failed to pick up on insults directed at specific high-profile women that would be obvious to human users. In light of these technological blind spots, we examine the unaddressed harms of microaggressions and the as-yet-unseen damage they may cause for women in these communities and for progress towards gender equality overall. This work concludes with a discussion of the benefits of partnerships between nonprofit social groups and technology experts in developing responsible, socially impactful approaches to addressing online hate.

Women, politics and Twitter: Using machine learning to change the discourse

Nov 25, 2019

Abstract: Including diverse voices in political decision-making strengthens our democratic institutions. Within the Canadian political system, there is gender inequality across all levels of elected government. Online abuse leveled at women engaged in politics, such as hateful tweets, contributes to this inequity, particularly when those tweets focus on their gender. In this paper, we present ParityBOT: a Twitter bot which counters abusive tweets aimed at women in politics by sending supportive tweets about influential female leaders and facts about women in public life. ParityBOT is the first artificial intelligence-based intervention aimed at improving online discourse for women in politics. The goals of this project are to: 1) raise awareness of issues relating to gender inequity in politics, and 2) positively influence public discourse in politics. The main contribution of this paper is a scalable model to classify and respond to hateful tweets, accompanied by quantitative and qualitative assessments. ParityBOT's abuse classification system was validated on public online harassment datasets. We conclude with an analysis of the impact of ParityBOT, drawing on data gathered during interventions in both the 2019 Alberta provincial and 2019 Canadian federal elections.
