Kirill Lukyanov

ISP RAS Research Center for Trusted Artificial Intelligence, Ivannikov Institute for System Programming of the Russian Academy of Sciences, Moscow Institute of Physics and Technology

Framework GNN-AID: Graph Neural Network Analysis Interpretation and Defense

May 06, 2025

Abstract: The growing need for Trusted AI (TAI) highlights the importance of interpretability and robustness in machine learning models. However, many existing tools overlook graph data and rarely combine these two aspects into a single solution. Graph Neural Networks (GNNs) have become a popular approach, achieving top results across various tasks. We introduce GNN-AID (Graph Neural Network Analysis, Interpretation, and Defense), an open-source framework designed for graph data to address this gap. Built as a Python library, GNN-AID supports advanced trust methods and architectural layers, allowing users to analyze graph datasets and GNN behavior using attacks, defenses, and interpretability methods. GNN-AID is built on PyTorch-Geometric, offering preloaded datasets, models, and support for any GNNs through customizable interfaces. It also includes a web interface with tools for graph visualization and no-code features like an interactive model builder, simplifying the exploration and analysis of GNNs. The framework also supports MLOps techniques, ensuring reproducibility and result versioning to track and revisit analyses efficiently. GNN-AID is a flexible tool for developers and researchers. It helps developers create, analyze, and customize graph models, while also providing access to prebuilt datasets and models for quick experimentation. Researchers can use the framework to explore advanced topics on the relationship between interpretability and robustness, test defense strategies, and combine methods to protect against different types of attacks. We also show how defenses against evasion and poisoning attacks can conflict when applied to graph data, highlighting the complex connections between defense strategies. GNN-AID is available at https://github.com/ispras/GNN-AID.

Robustness questions the interpretability of graph neural networks: what to do?

May 05, 2025

Abstract: Graph Neural Networks (GNNs) have become a cornerstone in graph-based data analysis, with applications in diverse domains such as bioinformatics, social networks, and recommendation systems. However, the interplay between model interpretability and robustness remains poorly understood, especially under adversarial scenarios like poisoning and evasion attacks. This paper presents a comprehensive benchmark to systematically analyze the impact of various factors on the interpretability of GNNs, including the influence of robustness-enhancing defense mechanisms. We evaluate six GNN architectures based on GCN, SAGE, GIN, and GAT across five datasets from two distinct domains, employing four interpretability metrics: Fidelity, Stability, Consistency, and Sparsity. Our study examines how defenses against poisoning and evasion attacks, applied before and during model training, affect interpretability and highlights critical trade-offs between robustness and interpretability. The framework will be published as open source. The results reveal significant variations in interpretability depending on the chosen defense methods and model architecture characteristics. By establishing a standardized benchmark, this work provides a foundation for developing GNNs that are both robust to adversarial threats and interpretable, facilitating trust in their deployment in sensitive applications.

Model Mimic Attack: Knowledge Distillation for Provably Transferable Adversarial Examples

Oct 21, 2024

Abstract: The vulnerability of artificial neural networks to adversarial perturbations in the black-box setting is widely studied in the literature. The majority of attack methods to construct these perturbations suffer from an impractically large number of queries required to find an adversarial example. In this work, we focus on knowledge distillation as an approach to conduct transfer-based black-box adversarial attacks and propose an iterative training of the surrogate model on an expanding dataset. This work is the first, to our knowledge, to provide provable guarantees on the success of a knowledge distillation-based attack on classification neural networks: we prove that if the student model has enough learning capabilities, the attack on the teacher model is guaranteed to be found within a finite number of distillation iterations.

Adversarial Attacks and Defenses in Automated Control Systems: A Comprehensive Benchmark

Mar 21, 2024

Abstract: Integrating machine learning into Automated Control Systems (ACS) enhances decision-making in industrial process management. One of the limitations to the widespread adoption of these technologies in industry is the vulnerability of neural networks to adversarial attacks. This study explores the threats in deploying deep learning models for fault diagnosis in ACS using the Tennessee Eastman Process dataset. By evaluating three neural networks with different architectures, we subject them to six types of adversarial attacks and explore five different defense methods. Our results highlight the strong vulnerability of models to adversarial samples and the varying effectiveness of defense strategies. We also propose a novel protection approach by combining multiple defense methods and demonstrate its efficacy. This research contributes several insights into securing machine learning within ACS, ensuring robust fault diagnosis in industrial processes.

Graph Neural Network for Crawling Target Nodes in Social Networks

Mar 20, 2024

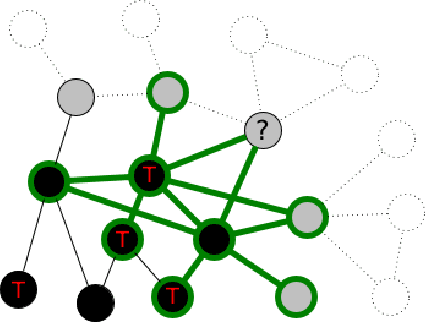

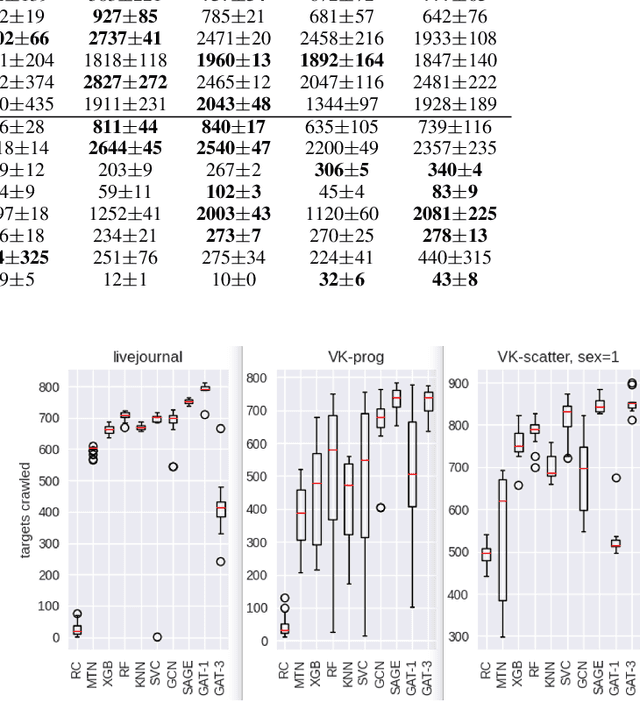

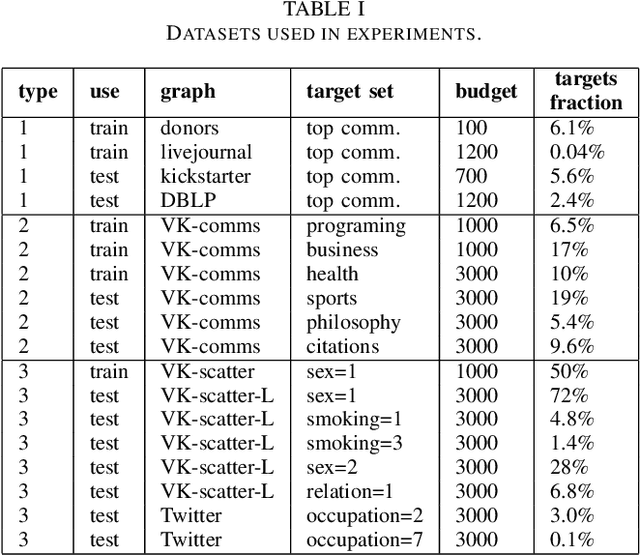

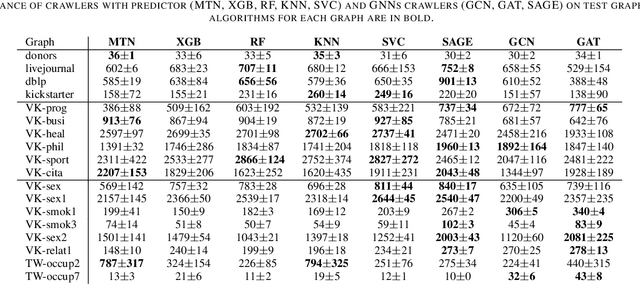

Abstract: Crawling social networks has been a focus of active research in recent years. One of the challenging tasks is to collect target nodes in an initially unknown graph given a budget of crawling steps. Predicting a node property based on its partially known neighbourhood is at the heart of a successful crawler. In this paper, we adopt graph neural networks for this purpose and show that they are competitive with traditional classifiers and outperform them in individual cases. Additionally, we suggest a training sample boosting technique, which helps to diversify the training set at early stages of crawling and thus improves the predictor quality. The experimental study on three types of target set topology indicates that the GNN-based approach has potential in the crawling task, especially in the case of distributed target nodes.
