Kh Tohidul Islam

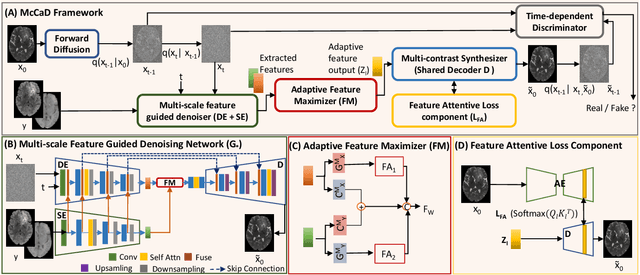

McCaD: Multi-Contrast MRI Conditioned, Adaptive Adversarial Diffusion Model for High-Fidelity MRI Synthesis

Sep 01, 2024

Abstract: Magnetic Resonance Imaging (MRI) is instrumental in clinical diagnosis, offering diverse contrasts that provide comprehensive diagnostic information. However, acquiring multiple MRI contrasts is often constrained by high costs, long scanning durations, and patient discomfort. Current synthesis methods, typically focused on single-image contrasts, fall short in capturing the collective nuances across various contrasts. Moreover, existing methods for multi-contrast MRI synthesis often fail to accurately map feature-level information across multiple imaging contrasts. We introduce McCaD (Multi-Contrast MRI Conditioned Adaptive Adversarial Diffusion), a novel framework leveraging an adversarial diffusion model conditioned on multiple contrasts for high-fidelity MRI synthesis. McCaD significantly enhances synthesis accuracy by employing a multi-scale, feature-guided mechanism that incorporates denoising and semantic encoders. An adaptive feature maximization strategy and a spatial feature-attentive loss are introduced to capture more intrinsic features across multiple contrasts, which facilitates a precise and comprehensive feature-guided denoising process. Extensive experiments on tumor and healthy multi-contrast MRI datasets demonstrate that McCaD outperforms state-of-the-art baselines quantitatively and qualitatively. The code is provided with supplementary materials.
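To make "a diffusion model conditioned on multiple contrasts" concrete, here is a minimal NumPy sketch of the standard forward-noising step used in denoising diffusion models, with the source contrasts stacked alongside the noisy target as extra input channels. This is not McCaD's architecture: the abstract does not say how conditioning is injected, so channel concatenation, the linear noise schedule, and all function names (`make_alpha_bar`, `forward_noise`, `build_denoiser_input`) are assumptions for illustration only.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for a linear beta schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def forward_noise(target, t, alpha_bar, rng):
    """Sample x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps for the target contrast."""
    eps = rng.standard_normal(target.shape)
    noisy = np.sqrt(alpha_bar[t]) * target + np.sqrt(1.0 - alpha_bar[t]) * eps
    return noisy, eps

def build_denoiser_input(noisy_target, source_contrasts):
    """Stack the noisy target with the conditioning contrasts along a channel axis.

    Channel concatenation is an illustrative assumption, not the paper's
    multi-scale, feature-guided mechanism.
    """
    return np.concatenate([noisy_target[None], np.stack(source_contrasts)], axis=0)

# Hypothetical example: synthesize one contrast from two others (random stand-ins).
rng = np.random.default_rng(0)
t1, t2, flair = (rng.random((64, 64)) for _ in range(3))
alpha_bar = make_alpha_bar()
noisy_flair, eps = forward_noise(flair, t=500, alpha_bar=alpha_bar, rng=rng)
denoiser_input = build_denoiser_input(noisy_flair, [t1, t2])  # shape (3, 64, 64)
```

A denoising network would take `denoiser_input` plus the timestep and be trained to recover `eps` or the clean target; in an adversarial diffusion setup a discriminator additionally judges the denoised samples.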

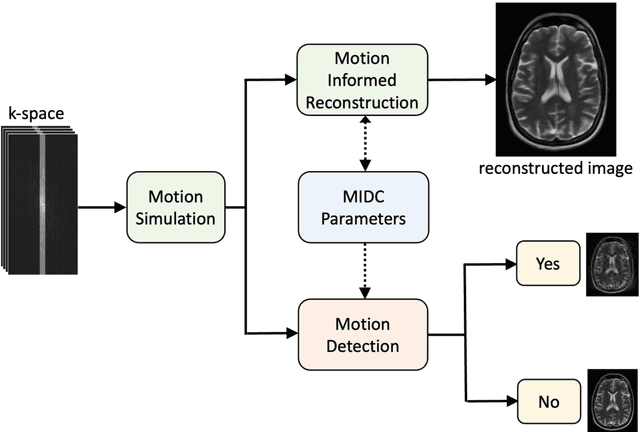

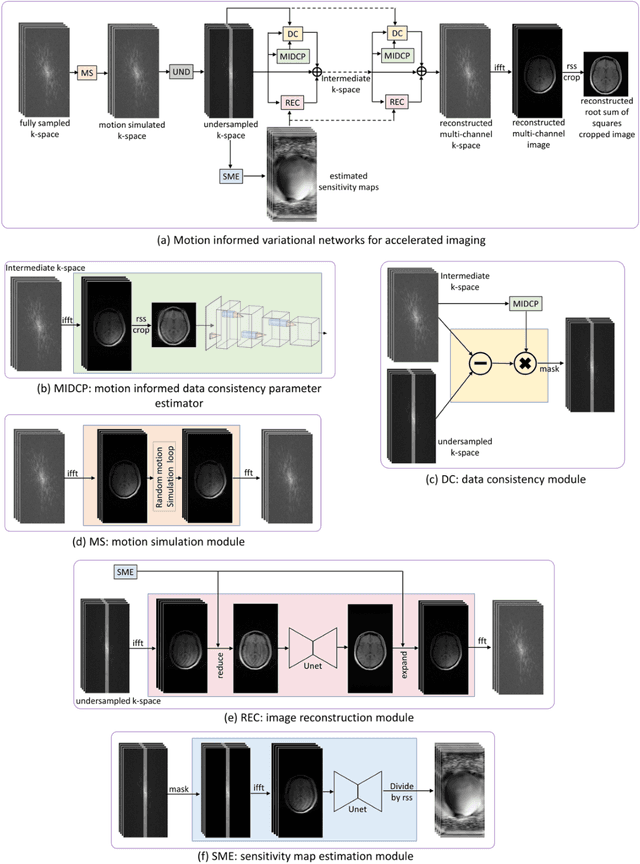

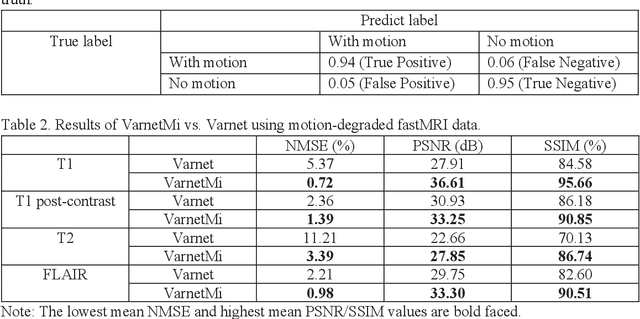

Motion-Informed Deep Learning for Brain MR Image Reconstruction Framework

May 28, 2024

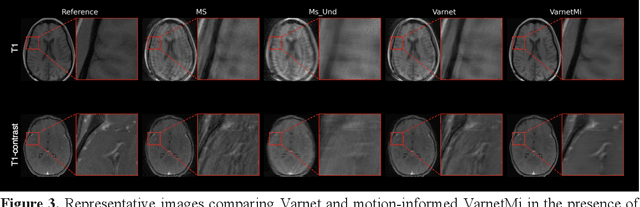

Abstract: Motion artifacts, caused by patient movement during scanning, are among the frequently occurring artifacts in Magnetic Resonance Imaging (MRI). Motion is estimated to be present in approximately 30% of clinical MRI scans; however, motion has not been explicitly modeled within deep learning image reconstruction models. Deep learning (DL) algorithms have been demonstrated to be effective for both the image reconstruction task and the motion correction task, but the two tasks are considered separately. The image reconstruction task involves removing undersampling artifacts such as noise and aliasing, whereas motion correction involves removing artifacts including blurring, ghosting, and ringing. In this work, we propose a novel method to simultaneously accelerate imaging and correct motion. This is achieved by integrating a motion module into the deep learning-based MRI reconstruction process, enabling real-time detection and correction of motion. We model motion as a tightly integrated auxiliary layer in the deep learning model during training, making the deep learning model 'motion-informed'. During inference, image reconstruction is performed from undersampled raw k-space data using the trained motion-informed DL model. Experimental results demonstrate that the proposed motion-informed deep learning image reconstruction network outperforms the conventional image reconstruction network on motion-degraded MRI datasets.
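The two degradations the abstract combines, undersampling and motion, can be simulated in a few lines of NumPy. The sketch below is not the paper's motion module: it assumes 2D Cartesian sampling, rigid in-plane translation only, and motion that begins partway through a row-by-row acquisition. It relies on the Fourier shift theorem (a translation in image space is a linear phase ramp in k-space), and all function names are hypothetical.

```python
import numpy as np

def to_kspace(image):
    """Centered 2D FFT: image -> k-space."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))

def to_image(kspace):
    """Centered 2D inverse FFT: k-space -> image."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace)))

def undersample_mask(shape, accel=4, center_lines=8):
    """Keep every `accel`-th phase-encode row plus a fully sampled center band."""
    rows, _ = shape
    mask = np.zeros(shape, dtype=bool)
    mask[::accel, :] = True
    mid = rows // 2
    mask[mid - center_lines // 2 : mid + center_lines // 2, :] = True
    return mask

def shift_phase(shape, dy, dx):
    """Phase ramp that translates the image by (dy, dx) pixels."""
    rows, cols = shape
    ky = np.fft.fftshift(np.fft.fftfreq(rows))[:, None]
    kx = np.fft.fftshift(np.fft.fftfreq(cols))[None, :]
    return np.exp(-2j * np.pi * (ky * dy + kx * dx))

def simulate_motion(kspace, dy, dx, start_row):
    """Apply the translation only to rows acquired from `start_row` onward."""
    corrupted = kspace.copy()
    phase = shift_phase(kspace.shape, dy, dx)
    corrupted[start_row:, :] *= phase[start_row:, :]
    return corrupted

# Hypothetical example: a square phantom, moved mid-scan, then 4x undersampled.
phantom = np.zeros((64, 64))
phantom[24:40, 24:40] = 1.0
kspace = simulate_motion(to_kspace(phantom), dy=3, dx=-2, start_row=32)
kspace_under = kspace * undersample_mask(kspace.shape)
degraded = np.abs(to_image(kspace_under))  # shows both aliasing and ghosting
```

A zero-filled reconstruction like `degraded` is the kind of input a conventional reconstruction network sees; a motion-informed model would additionally account for the inconsistency between rows acquired before and after the movement.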
