Kenneth Kreutz-Delgado

A Nonparametric Framework for Quantifying Generative Inference on Neuromorphic Systems

Feb 18, 2016

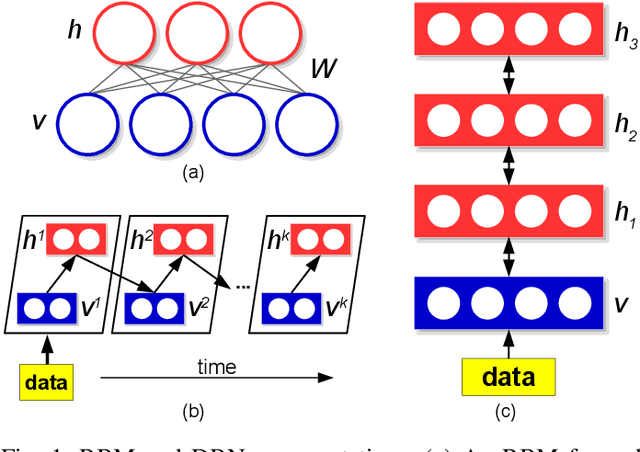

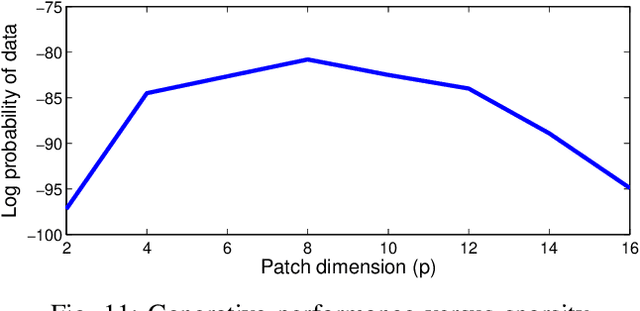

Abstract: Restricted Boltzmann Machines and Deep Belief Networks have been successfully used in probabilistic generative model applications such as image occlusion removal, pattern completion and motion synthesis. Generative inference in such algorithms can be performed very efficiently on hardware using a Markov Chain Monte Carlo procedure called Gibbs sampling, where stochastic samples are drawn from noisy integrate-and-fire neurons implemented on neuromorphic substrates. Currently, no satisfactory metrics exist for evaluating the generative performance of such algorithms implemented on high-dimensional data for neuromorphic platforms. This paper demonstrates the application of nonparametric goodness-of-fit testing both to quantify the generative performance and to provide decision-directed criteria for choosing the parameters of the neuromorphic Gibbs sampler and optimizing the hardware resources used during sampling.
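To make the idea of nonparametric goodness-of-fit testing concrete, the sketch below compares a set of generated binary samples against a set of reference samples with a two-sample permutation test. The abstract does not say which test the paper uses, so everything here is an assumption chosen for illustration: the energy-distance-style statistic, the Hamming distance, and the names `energy_statistic` and `permutation_test`.

```python
import random


def hamming(a, b):
    """Number of positions at which two equal-length binary vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def mean_dist(xs, ys):
    """Average Hamming distance over all pairs (x, y) with x in xs, y in ys."""
    return sum(hamming(x, y) for x in xs for y in ys) / (len(xs) * len(ys))


def energy_statistic(xs, ys):
    """Energy-distance-style statistic: 2 E|X-Y| - E|X-X'| - E|Y-Y'|.

    Illustrative choice only; larger values mean the two sample sets
    look less alike.
    """
    return 2 * mean_dist(xs, ys) - mean_dist(xs, xs) - mean_dist(ys, ys)


def permutation_test(xs, ys, n_perm, rng):
    """Return (observed statistic, permutation p-value).

    Under the null hypothesis that both sets come from the same
    distribution, group labels are exchangeable, so the statistic is
    recomputed on random relabelings of the pooled samples.
    """
    observed = energy_statistic(xs, ys)
    pooled = list(xs) + list(ys)
    n = len(xs)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if energy_statistic(pooled[:n], pooled[n:]) >= observed:
            exceed += 1
    # The +1 terms count the observed labeling as one of the permutations.
    return observed, (exceed + 1) / (n_perm + 1)
```

A small p-value suggests that the generated samples are distinguishable from the reference data. In a setting like the one the abstract describes, such a test could in principle be repeated across candidate sampler parameters, although the specific decision criteria used in the paper are not given here.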

Mapping Generative Models onto a Network of Digital Spiking Neurons

Oct 09, 2015

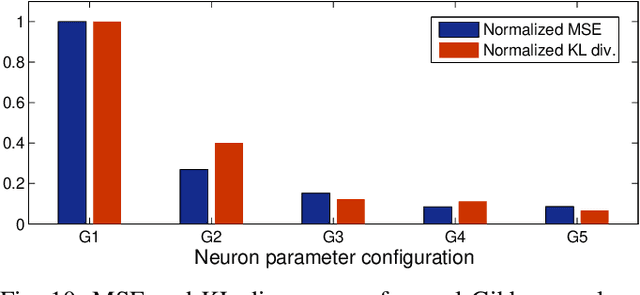

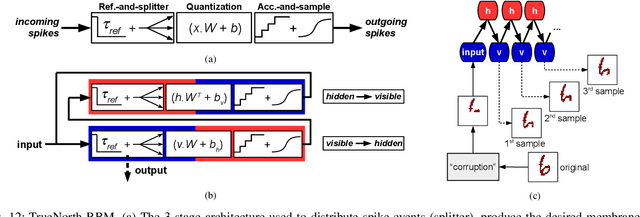

Abstract: Stochastic neural networks such as Restricted Boltzmann Machines (RBMs) have been successfully used in applications ranging from speech recognition to image classification. Inference and learning in these algorithms use a Markov Chain Monte Carlo procedure called Gibbs sampling, where a logistic function forms the kernel of this sampler. On the other side of the spectrum, neuromorphic systems have shown great promise for low-power and parallelized cognitive computing, but lack well-suited applications and automation procedures. In this work, we propose a systematic method for bridging the RBM algorithm and digital neuromorphic systems, with a generative pattern completion task as proof of concept. For this, we first propose a method of producing the Gibbs sampler using bio-inspired digital noisy integrate-and-fire neurons. Next, we describe the process of mapping generative RBMs trained offline onto the IBM TrueNorth neurosynaptic processor -- a low-power digital neuromorphic VLSI substrate. Mapping these algorithms onto neuromorphic hardware presents unique challenges in network connectivity and in weight and bias quantization, which, in turn, require architectural and design strategies for the physical realization. Generative performance metrics are analyzed to validate the neuromorphic requirements and to best select the neuron parameters for the model. Lastly, we describe a design automation procedure which achieves optimal resource usage, accounting for the novel hardware adaptations. This work represents the first implementation of generative RBM inference on a neuromorphic VLSI substrate.
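The logistic-kernel Gibbs sampler mentioned in the abstract can be illustrated in plain software. This is a minimal sketch of conventional alternating Gibbs sampling in a binary RBM, not the spiking-neuron realization or the TrueNorth mapping described in the paper. The function names and the `clamp` argument (used here to mimic pattern completion by fixing known visible units) are assumptions made for illustration.

```python
import math
import random


def logistic(x):
    """Logistic function, the kernel of the RBM Gibbs sampler."""
    return 1.0 / (1.0 + math.exp(-x))


def sample_layer(inputs, weights, biases, rng):
    """Sample binary states for one layer given the other layer's states.

    weights[i][j] connects input unit i to output unit j.
    """
    states = []
    for j, bias in enumerate(biases):
        activation = bias + sum(inputs[i] * weights[i][j]
                                for i in range(len(inputs)))
        states.append(1 if rng.random() < logistic(activation) else 0)
    return states


def transpose(matrix):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*matrix)]


def gibbs_chain(v0, W, b_vis, b_hid, steps, rng, clamp=None):
    """Alternate hidden and visible sampling for `steps` sweeps.

    `clamp` maps a visible index to a fixed value that is re-imposed
    after every sweep (hypothetical helper for pattern completion).
    """
    clamp = clamp or {}
    Wt = transpose(W)
    v = list(v0)
    for i, value in clamp.items():
        v[i] = value
    h = sample_layer(v, W, b_hid, rng)
    for _ in range(steps):
        v = sample_layer(h, Wt, b_vis, rng)
        for i, value in clamp.items():
            v[i] = value
        h = sample_layer(v, W, b_hid, rng)
    return v, h
```

Calling `gibbs_chain` with a trained weight matrix and a partially clamped visible vector returns one sample of the unclamped units. Averaging many such samples would estimate their conditional probabilities.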

Event-Driven Contrastive Divergence for Spiking Neuromorphic Systems

Dec 09, 2013

Abstract: Restricted Boltzmann Machines (RBMs) and Deep Belief Networks have been demonstrated to perform efficiently in a variety of applications, such as dimensionality reduction, feature learning, and classification. Their implementation on neuromorphic hardware platforms emulating large-scale networks of spiking neurons can have significant advantages from the perspectives of scalability, power dissipation and real-time interfacing with the environment. However, the traditional RBM architecture and the commonly used training algorithm known as Contrastive Divergence (CD) are based on discrete updates and exact arithmetic, which do not directly map onto a dynamical neural substrate. Here, we present an event-driven variation of CD to train an RBM constructed with Integrate & Fire (I&F) neurons that is constrained by the limitations of existing and near-future neuromorphic hardware platforms. Our strategy is based on neural sampling, which allows us to synthesize a spiking neural network that samples from a target Boltzmann distribution. The recurrent activity of the network replaces the discrete steps of the CD algorithm, while Spike Time Dependent Plasticity (STDP) carries out the weight updates in an online, asynchronous fashion. We demonstrate our approach by training an RBM composed of leaky I&F neurons with STDP synapses to learn a generative model of the MNIST hand-written digit dataset, and by testing it in recognition, generation and cue integration tasks. Our results contribute to a machine learning-driven approach for synthesizing networks of spiking neurons capable of carrying out practical, high-level functionality.
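For contrast with the event-driven variant, the sketch below shows the conventional, discrete-step form of Contrastive Divergence (CD-1) that the abstract says does not map directly onto a dynamical neural substrate. It is the standard textbook update, not the spiking STDP-based rule proposed in the paper. The function names, learning rate and data layout are assumptions made for illustration.

```python
import math
import random


def logistic(x):
    """Logistic activation used for unit firing probabilities."""
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, b_hid):
    """P(h_j = 1 | v) for every hidden unit; W[i][j] links visible i to hidden j."""
    return [logistic(b_hid[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_hid))]


def visible_probs(h, W, b_vis):
    """P(v_i = 1 | h) for every visible unit."""
    return [logistic(b_vis[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b_vis))]


def bernoulli(probs, rng):
    """Draw one binary sample per probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v_data, W, b_vis, b_hid, lr, rng):
    """Apply one CD-1 update in place for a single training vector."""
    # Positive phase: hidden statistics driven by the data.
    ph_data = hidden_probs(v_data, W, b_hid)
    h_data = bernoulli(ph_data, rng)
    # Negative phase: one discrete reconstruction step.
    v_model = bernoulli(visible_probs(h_data, W, b_vis), rng)
    ph_model = hidden_probs(v_model, W, b_hid)
    # Update: data correlations minus reconstruction correlations.
    for i in range(len(b_vis)):
        for j in range(len(b_hid)):
            W[i][j] += lr * (v_data[i] * ph_data[j] - v_model[i] * ph_model[j])
        b_vis[i] += lr * (v_data[i] - v_model[i])
    for j in range(len(b_hid)):
        b_hid[j] += lr * (ph_data[j] - ph_model[j])
```

Each call performs a synchronous, clocked sequence of sampling steps followed by an exact arithmetic update. These are the two properties the abstract identifies as obstacles, and which the event-driven approach replaces with recurrent network activity and online STDP.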
