Kemal Davaslioglu

Coordinated Anti-Jamming Resilience in Swarm Networks via Multi-Agent Reinforcement Learning

Dec 18, 2025

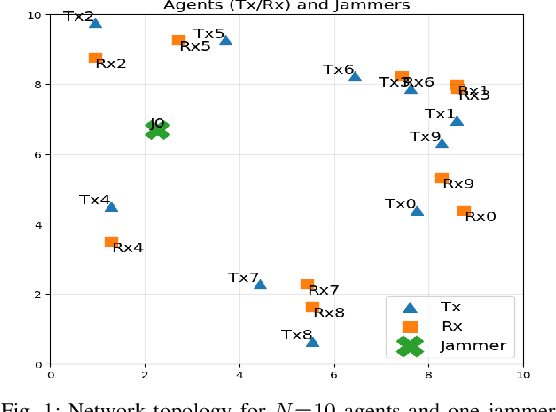

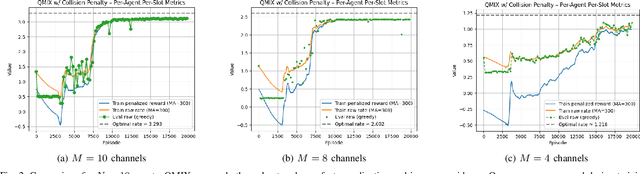

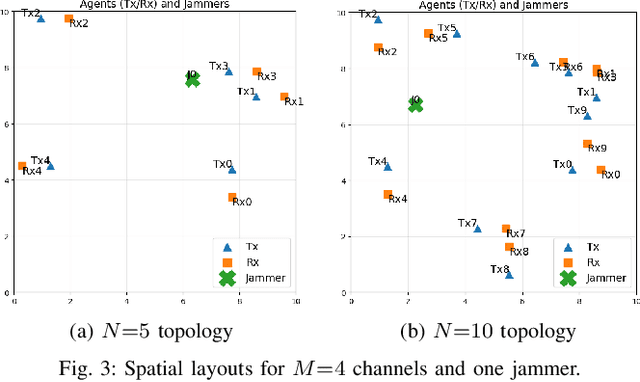

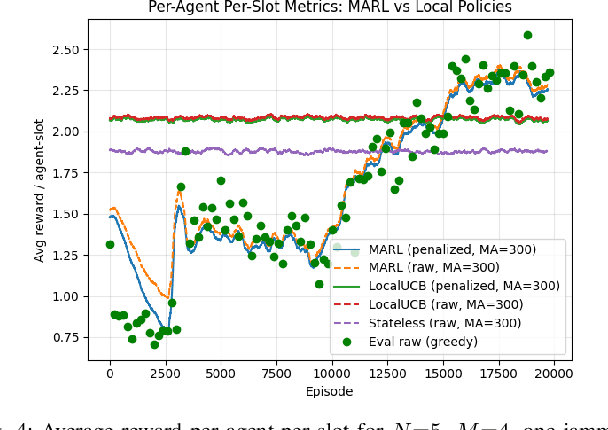

Abstract: Reactive jammers pose a severe security threat to robotic-swarm networks by selectively disrupting inter-agent communications and undermining formation integrity and mission success. Conventional countermeasures such as fixed power control or static channel hopping are largely ineffective against such adaptive adversaries. This paper presents a multi-agent reinforcement learning (MARL) framework based on the QMIX algorithm to improve the resilience of swarm communications under reactive jamming. We consider a network of multiple transmitter-receiver pairs sharing channels while a reactive jammer with Markovian threshold dynamics senses aggregate power and reacts accordingly. Each agent jointly selects transmit frequency (channel) and power, and QMIX learns a centralized but factorizable action-value function that enables coordinated yet decentralized execution. We benchmark QMIX against a genie-aided optimal policy in a no-channel-reuse setting, and against local Upper Confidence Bound (UCB) and a stateless reactive policy in a more general fading regime with channel reuse enabled. Simulation results show that QMIX rapidly converges to cooperative policies that nearly match the genie-aided bound, while achieving higher throughput and lower jamming incidence than the baselines, thereby demonstrating MARL's effectiveness for securing autonomous swarms in contested environments.
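The "centralized but factorizable" property can be illustrated with a small sketch. It is not the paper's implementation: the action-space sizes, the random per-agent Q-values, and the single linear mixing layer are assumptions made for illustration (QMIX itself produces the mixing weights from state-conditioned hypernetworks and uses a nonlinear mixer). The point shown is only that non-negative mixing weights make the joint value monotone in each agent's Q-value, so per-agent greedy choices also maximize the joint value.

```python
import itertools
import random

N_CHANNELS, N_POWERS = 3, 2            # hypothetical action-space sizes
N_ACTIONS = N_CHANNELS * N_POWERS      # each action is a (channel, power) pair


def decode_action(action):
    """Map a flat action index to a (channel, power_level) pair."""
    return divmod(action, N_POWERS)


def monotonic_mix(agent_qs, raw_weights, bias):
    """Q_tot = sum_i |w_i| * Q_i + b.

    abs() keeps every weight non-negative, so Q_tot never decreases
    when any single agent's Q-value increases.
    """
    return sum(abs(w) * q for w, q in zip(raw_weights, agent_qs)) + bias


def greedy_actions(q_tables):
    """Decentralized execution: each agent maximizes only its own Q-values."""
    return [max(range(len(q)), key=q.__getitem__) for q in q_tables]


def best_joint_value(q_tables, raw_weights, bias):
    """Brute-force maximum of Q_tot over all joint actions (for checking)."""
    return max(
        monotonic_mix([q[a] for q, a in zip(q_tables, joint)], raw_weights, bias)
        for joint in itertools.product(range(N_ACTIONS), repeat=len(q_tables))
    )


rng = random.Random(0)
# Random stand-ins for three agents' learned Q-values and the mixer weights.
q_tables = [[rng.uniform(-1, 1) for _ in range(N_ACTIONS)] for _ in range(3)]
raw_weights = [rng.uniform(-1, 1) for _ in range(3)]
greedy = greedy_actions(q_tables)
q_greedy = monotonic_mix(
    [q[a] for q, a in zip(q_tables, greedy)], raw_weights, 0.1
)
```

Here `q_greedy` matches the brute-force maximum over all joint actions, which is why each agent can act on local Q-values at execution time while training still uses a joint value.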

How to Combat Reactive and Dynamic Jamming Attacks with Reinforcement Learning

Oct 02, 2025

Abstract: This paper studies the problem of mitigating reactive jamming, where a jammer adopts a dynamic policy of selecting channels and sensing thresholds to detect and jam ongoing transmissions. The transmitter-receiver pair learns to avoid jamming and optimize throughput over time (without prior knowledge of channel conditions or jamming strategies) by using reinforcement learning (RL) to adapt transmit power, modulation, and channel selection. Q-learning is employed for discrete jamming-event states, while Deep Q-Networks (DQN) are employed for continuous states based on received power. Through different reward functions and action sets, the results show that RL can adapt rapidly to spectrum dynamics and sustain high rates as channels and jamming policies change over time.
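A minimal sketch of tabular Q-learning over a discrete jamming-event state may help make the setup concrete. Everything specific here is an assumption for illustration rather than the paper's model: a two-channel, two-power action set, a fixed toy jammer that senses one channel with one threshold, a Shannon-style rate as the reward, and the learning hyperparameters.

```python
import math
import random

CHANNELS, POWERS = 2, (1.0, 2.0)            # hypothetical: 2 channels, low/high power
N_ACTIONS = CHANNELS * len(POWERS)
JAMMED_CHANNEL, SENSE_THRESHOLD = 0, 1.5    # toy jammer parameters (assumed)


def step(action):
    """Return (reward, next_state); the state is 1 if the slot was jammed, else 0."""
    channel, p_idx = divmod(action, len(POWERS))
    power = POWERS[p_idx]
    # The toy jammer reacts only when it senses enough power on its channel.
    jammed = channel == JAMMED_CHANNEL and power > SENSE_THRESHOLD
    reward = 0.0 if jammed else math.log2(1.0 + power)
    return reward, int(jammed)


def train(steps=20000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Epsilon-greedy tabular Q-learning; returns q[state][action]."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(2)]
    state = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(N_ACTIONS)                          # explore
        else:
            action = max(range(N_ACTIONS), key=q[state].__getitem__)   # exploit
        reward, nxt = step(action)
        # Standard Q-learning temporal-difference update.
        q[state][action] += alpha * (reward + gamma * max(q[nxt]) - q[state][action])
        state = nxt
    return q


q_table = train()
best = max(range(N_ACTIONS), key=q_table[0].__getitem__)
best_channel, best_power_idx = divmod(best, len(POWERS))
```

In this toy environment the learned policy settles on the unsensed channel at high power. A jammer that changes its channel or threshold over time, as in the abstract, would require continued exploration.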

MULTI-SCOUT: Multistatic Integrated Sensing and Communications in 5G and Beyond for Moving Target Detection, Positioning, and Tracking

Jul 03, 2025

Abstract: This paper presents a complete signal-processing chain for multistatic integrated sensing and communications (ISAC) using 5G Positioning Reference Signal (PRS). We consider a distributed architecture in which one gNB transmits a periodic OFDM-PRS waveform while multiple spatially separated receivers exploit the same signal for target detection, parameter estimation and tracking. A coherent cross-ambiguity function (CAF) is evaluated to form a range-Doppler map from which the bistatic delay and radial velocity are extracted for every target. For a single target, the resulting bistatic delays are fused through nonlinear least-squares trilateration, yielding a geometric position estimate, and a regularized linear inversion of the radial-speed equations yields a two-dimensional velocity vector, where speed and heading are obtained. The approach is applied to 2D and 3D settings, extended to account for time synchronization bias, and generalized to multiple targets by resolving target association. The sequence of position-velocity estimates is then fed to standard and extended Kalman filters to obtain smoothed tracks. Our results show high-fidelity moving-target detection, positioning, and tracking using 5G PRS signals for multistatic ISAC.
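The fusion of bistatic delays into a position can be sketched as a small Gauss-Newton least-squares fit. This is an illustrative 2D sketch under stated assumptions, not the paper's solver: the geometry, the initial guess, and the noise-free delays are made up, and synchronization bias, the 3D case, and multi-target association are all ignored.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def bistatic_delays(target, tx, receivers):
    """Delay of the path tx -> target -> rx for each receiver, in seconds."""
    return [(math.dist(target, tx) + math.dist(target, rx)) / C for rx in receivers]


def trilaterate(delays, tx, receivers, guess, iterations=20):
    """Gauss-Newton fit of a 2D position to measured bistatic delays."""
    x, y = guess
    for _ in range(iterations):
        a11 = a12 = a22 = g1 = g2 = 0.0
        for tau, (rx_x, rx_y) in zip(delays, receivers):
            d_tx = math.hypot(x - tx[0], y - tx[1])
            d_rx = math.hypot(x - rx_x, y - rx_y)
            res = d_tx + d_rx - C * tau                  # range residual in meters
            # Gradient of the bistatic range with respect to (x, y).
            jx = (x - tx[0]) / d_tx + (x - rx_x) / d_rx
            jy = (y - tx[1]) / d_tx + (y - rx_y) / d_rx
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            g1 += jx * res
            g2 += jy * res
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:                             # degenerate geometry
            break
        # Solve the 2x2 normal equations (J^T J) step = -J^T r.
        x -= (a22 * g1 - a12 * g2) / det
        y -= (a11 * g2 - a12 * g1) / det
    return x, y


tx = (0.0, 0.0)                                              # hypothetical gNB position
receivers = [(100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]     # hypothetical receivers
true_target = (40.0, 70.0)
delays = bistatic_delays(true_target, tx, receivers)
estimate = trilaterate(delays, tx, receivers, guess=(50.0, 50.0))
```

With noise-free delays the estimate converges to the true position. With noisy delays the same iteration returns the least-squares fit, and convergence then depends on the geometry and the initial guess.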

Augmenting Training Data with Vector-Quantized Variational Autoencoder for Classifying RF Signals

Oct 23, 2024

Abstract: Radio frequency (RF) communication has been an important part of civil and military communication for decades. With the increasing complexity of wireless environments and the growing number of devices sharing the spectrum, it has become critical to efficiently manage and classify the signals that populate these frequencies. In such scenarios, the accurate classification of wireless signals is essential for effective spectrum management, signal interception, and interference mitigation. However, the classification of wireless RF signals often faces challenges due to the limited availability of labeled training data, especially under low signal-to-noise ratio (SNR) conditions. To address these challenges, this paper proposes the use of a Vector-Quantized Variational Autoencoder (VQ-VAE) to augment training data, thereby enhancing the performance of a baseline wireless classifier. The VQ-VAE model generates high-fidelity synthetic RF signals, increasing the diversity and fidelity of the training dataset by capturing the complex variations inherent in RF communication signals. Our experimental results show that incorporating VQ-VAE-generated data significantly improves the classification accuracy of the baseline model, particularly in low SNR conditions. This augmentation leads to better generalization and robustness of the classifier, overcoming the constraints imposed by limited real-world data. By improving RF signal classification, the proposed approach enhances the efficacy of wireless communication in both civil and tactical settings, ensuring reliable and secure operations. This advancement supports critical decision-making and operational readiness in environments where communication fidelity is essential.
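The step that distinguishes a VQ-VAE from a plain autoencoder is the vector-quantization lookup: each encoder output is replaced by its nearest codebook vector before decoding. The sketch below shows only that lookup. The codebook and encoder outputs are hypothetical values, and the encoder, decoder, and training losses are omitted.

```python
def quantize(latent, codebook):
    """Return (index, code vector) of the codebook entry nearest to `latent`."""
    def sq_dist(code):
        # Squared Euclidean distance between the latent and one code vector.
        return sum((z - c) ** 2 for z, c in zip(latent, code))

    index = min(range(len(codebook)), key=lambda k: sq_dist(codebook[k]))
    return index, codebook[index]


# Hypothetical 2-D codebook and encoder outputs, for illustration only.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]
encoder_outputs = [[0.9, 1.2], [-0.8, 0.4], [0.1, -0.2]]
indices = [quantize(z, codebook)[0] for z in encoder_outputs]
# indices == [1, 2, 0]
```

New synthetic samples are typically produced by decoding sequences of such code indices, which is where the augmentation data would come from.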

Continual Deep Reinforcement Learning to Prevent Catastrophic Forgetting in Jamming Mitigation

Oct 14, 2024

Abstract: Deep Reinforcement Learning (DRL) has been highly effective in learning from and adapting to RF environments and thus detecting and mitigating jamming effects to facilitate reliable wireless communications. However, traditional DRL methods are susceptible to catastrophic forgetting (namely forgetting old tasks when learning new ones), especially in dynamic wireless environments where jammer patterns change over time. This paper considers an anti-jamming system and addresses the challenge of catastrophic forgetting in DRL applied to jammer detection and mitigation. First, we demonstrate the impact of catastrophic forgetting in DRL when applied to jammer detection and mitigation tasks, where the network forgets previously learned jammer patterns while adapting to new ones. This catastrophic interference undermines the effectiveness of the system, particularly in scenarios where the environment is non-stationary. We present a method that enables the network to retain knowledge of old jammer patterns while learning to handle new ones. Our approach substantially reduces catastrophic forgetting, allowing the anti-jamming system to learn new tasks without compromising its ability to perform previously learned tasks effectively. Furthermore, we introduce a systematic methodology for sequentially learning tasks in the anti-jamming framework. By leveraging continual DRL techniques based on PackNet, we achieve superior anti-jamming performance compared to standard DRL methods. Our proposed approach not only addresses catastrophic forgetting but also enhances the adaptability and robustness of the system in dynamic jamming environments. We demonstrate the efficacy of our method in preserving knowledge of past jammer patterns, learning new tasks efficiently, and achieving superior anti-jamming performance compared to traditional DRL approaches.
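PackNet-style continual learning rests on per-task weight ownership: after a task is trained, its largest-magnitude free weights are frozen for that task and the rest are pruned and released for later tasks. The sketch below shows only this bookkeeping on a flat weight list. The weight values, the keep fraction, and the function names are hypothetical, and the gradient training and post-pruning retraining steps are omitted.

```python
FREE = 0  # owner id for weights not yet claimed by any task


def pack_task(weights, owner, task_id, keep_fraction):
    """After training `task_id`, keep the largest free weights and release the rest."""
    free = [i for i, o in enumerate(owner) if o == FREE]
    free.sort(key=lambda i: abs(weights[i]), reverse=True)
    n_keep = int(len(free) * keep_fraction)
    for i in free[:n_keep]:
        owner[i] = task_id      # frozen from now on
    for i in free[n_keep:]:
        weights[i] = 0.0        # pruned, still FREE for the next task


def trainable(owner):
    """Only unclaimed weights may be updated while learning a new task."""
    return [o == FREE for o in owner]


def active_for(owner, task_id):
    """Weights used at inference for `task_id`: those owned by tasks up to it."""
    return [FREE < o <= task_id for o in owner]


# Hypothetical weights after training on a first jammer pattern.
weights = [0.9, -0.1, 0.5, 0.05, -0.7, 0.2]
owner = [FREE] * len(weights)
pack_task(weights, owner, task_id=1, keep_fraction=0.5)

# A second pattern trains only the free slots (values here are made up).
weights[1], weights[3], weights[5] = 0.3, -0.6, 0.05
pack_task(weights, owner, task_id=2, keep_fraction=0.5)
# owner == [1, 0, 1, 2, 1, 0]
```

Because weights owned by the first task are never updated again, the policy for the first jammer pattern is preserved exactly when later patterns are learned.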

I-SCOUT: Integrated Sensing and Communications to Uncover Moving Targets in NextG Networks

Oct 11, 2024

Abstract: Integrated Sensing and Communication (ISAC) represents a transformative approach within 5G and beyond, aiming to merge wireless communication and sensing functionalities into a unified network infrastructure. This integration offers enhanced spectrum efficiency, real-time situational awareness, cost and energy reductions, and improved operational performance. ISAC provides simultaneous communication and sensing capabilities, enhancing the ability to detect, track, and respond to spectrum dynamics and potential threats in complex environments. In this paper, we introduce I-SCOUT, an innovative ISAC solution designed to uncover moving targets in NextG networks. We specifically repurpose the Positioning Reference Signal (PRS) of the 5G waveform, exploiting its distinctive autocorrelation characteristics for environment sensing. The reflected signals from moving targets are processed to estimate both the range and velocity of these targets using the cross ambiguity function (CAF). We conduct an in-depth analysis of the tradeoff between sensing and communication functionalities, focusing on the allocation of PRSs for ISAC purposes. Our study reveals that the number of PRSs dedicated to ISAC has a significant impact on the system's performance, necessitating a careful balance to optimize both sensing accuracy and communication efficiency. Our results demonstrate that I-SCOUT effectively leverages ISAC to accurately determine the range and velocity of moving targets. Moreover, I-SCOUT is capable of distinguishing between multiple targets within a group, showcasing its potential for complex scenarios. These findings underscore the viability of ISAC in enhancing the capabilities of NextG networks, for both commercial and tactical applications where precision and reliability are critical.
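A discrete cross ambiguity function correlates the received signal against delayed and Doppler-shifted copies of the reference and looks for the peak. The sketch below is a toy version under stated assumptions: a random unit-modulus sequence stands in for the PRS, delays are circular sample shifts, Doppler is expressed in DFT bins, and there is no noise or clutter.

```python
import cmath
import math
import random


def caf(received, reference, delays, doppler_bins):
    """Return |CAF| on a delay-Doppler grid as a dict keyed by (delay, bin)."""
    n = len(reference)
    surface = {}
    for d in delays:
        for k in doppler_bins:
            acc = 0j
            for i in range(n):
                # Correlate against the reference delayed by d samples and
                # remove a Doppler shift of k DFT bins.
                acc += (received[i]
                        * reference[(i - d) % n].conjugate()
                        * cmath.exp(-2j * math.pi * k * i / n))
            surface[(d, k)] = abs(acc)
    return surface


rng = random.Random(0)
N = 64
# Random-phase stand-in for the reference waveform (assumed, not a real PRS).
reference = [cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi)) for _ in range(N)]

TRUE_DELAY, TRUE_DOPPLER = 5, -2   # hypothetical target parameters
received = [
    reference[(i - TRUE_DELAY) % N] * cmath.exp(2j * math.pi * TRUE_DOPPLER * i / N)
    for i in range(N)
]

surface = caf(received, reference, range(8), range(-4, 5))
peak = max(surface, key=surface.get)
```

The peak cell gives the delay and Doppler estimates, which map to range and radial velocity once the sample rate and carrier frequency are known.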

Deep Reinforcement Learning for Power Control in Next-Generation WiFi Network Systems

Nov 02, 2022

Abstract: This paper presents a deep reinforcement learning (DRL) solution for power control in wireless communications, describes its embedded implementation with WiFi transceivers for a WiFi network system, and evaluates the performance with high-fidelity emulation tests. In a multi-hop wireless network, each mobile node measures its link quality and signal strength, and controls its transmit power. As a model-free solution, reinforcement learning allows nodes to adapt their actions by observing the states and maximize their cumulative rewards over time. For each node, the state consists of transmit power, link quality and signal strength; the action adjusts the transmit power; and the reward combines energy efficiency (throughput normalized by energy consumption) and penalty of changing the transmit power. As the state space is large, Q-learning is hard to implement on embedded platforms with limited memory and processing power. By approximating the Q-values with a DQN, DRL is implemented for the embedded platform of each node combining an ARM processor and a WiFi transceiver for 802.11n. Controllable and repeatable emulation tests are performed by inducing realistic channel effects on RF signals. Performance comparison with benchmark schemes of fixed and myopic power allocations shows that power control with DRL provides major improvements to energy efficiency and throughput in WiFi network systems.
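The reward structure described above can be written down in a few lines. The abstract does not give the exact form, so the linear penalty, its weight, the units, and the function name below are assumptions for illustration only.

```python
def power_control_reward(throughput, energy, power, prev_power, change_penalty=0.1):
    """Energy efficiency minus a penalty for changing the transmit power.

    The linear penalty and its default weight are assumed, not taken from the paper.
    """
    efficiency = throughput / energy                    # throughput per unit energy
    return efficiency - change_penalty * abs(power - prev_power)


# Same throughput and energy; the second call also changes power by 3 units.
steady = power_control_reward(10.0, 2.0, power=15.0, prev_power=15.0)
changed = power_control_reward(10.0, 2.0, power=18.0, prev_power=15.0)
```

The penalty term discourages the agent from oscillating between power levels when the efficiency gain from switching is small.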

* 5 pages, 6 figures, 1 table

Self-Supervised RF Signal Representation Learning for NextG Signal Classification with Deep Learning

Jul 07, 2022

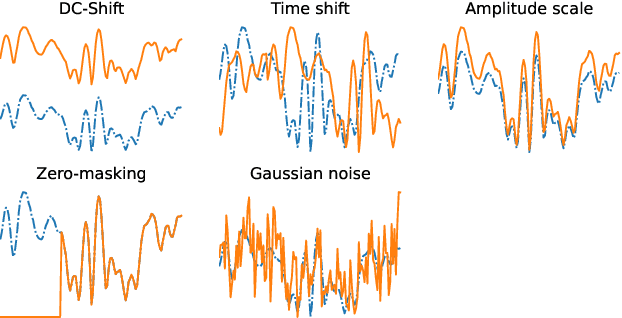

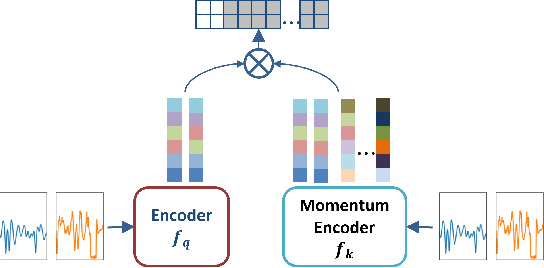

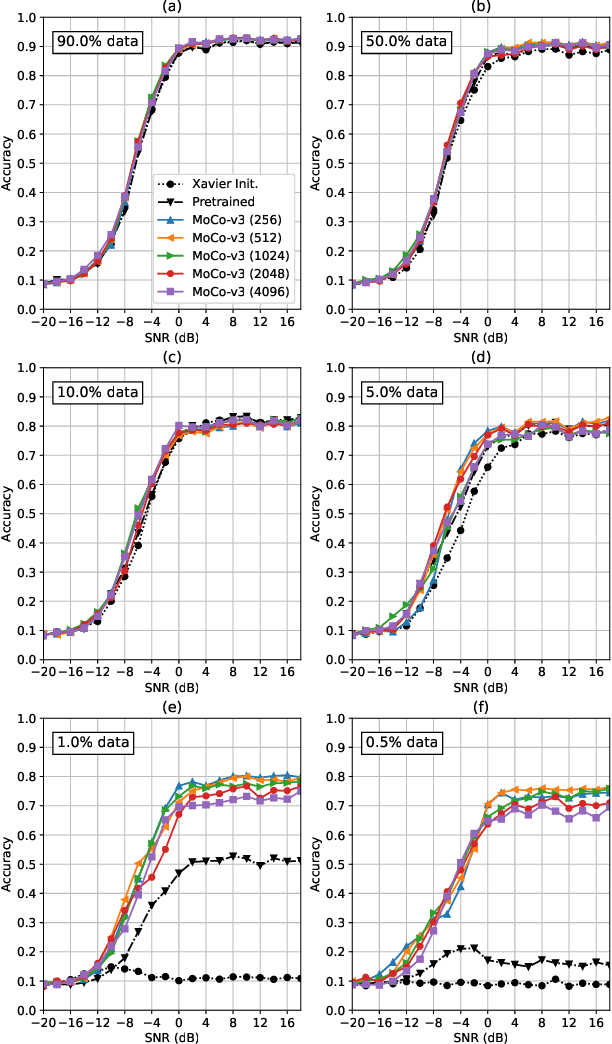

Abstract: Deep learning (DL) finds rich applications in the wireless domain to improve spectrum awareness. Typically, the DL models are either randomly initialized following a statistical distribution or pretrained on tasks from other data domains such as computer vision (in the form of transfer learning) without accounting for the unique characteristics of wireless signals. Self-supervised learning enables the learning of useful representations from Radio Frequency (RF) signals themselves even when only limited training data samples with labels are available. We present the first self-supervised RF signal representation learning model and apply it to the automatic modulation recognition (AMR) task by specifically formulating a set of transformations to capture the wireless signal characteristics. We show that the sample efficiency (the number of labeled samples required to achieve a certain accuracy performance) of AMR can be significantly increased (almost an order of magnitude) by learning signal representations with self-supervised learning. This translates to substantial time and cost savings. Furthermore, self-supervised learning increases the model accuracy compared to the state-of-the-art DL methods and maintains high accuracy even when a small set of training data samples is used.

Machine Learning in NextG Networks via Generative Adversarial Networks

Mar 09, 2022

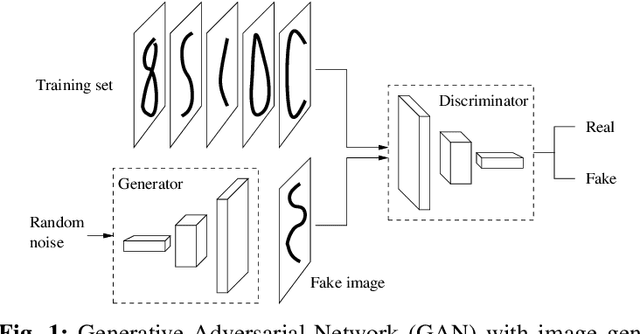

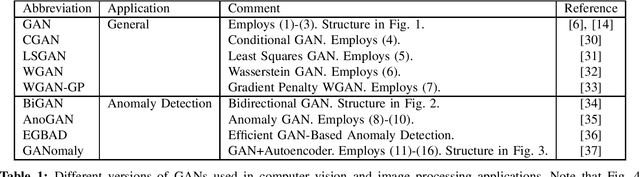

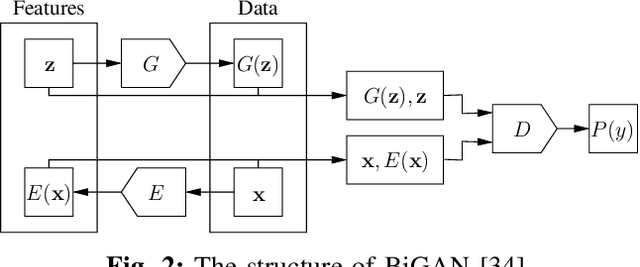

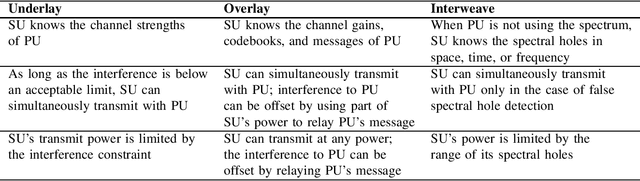

Abstract: Generative Adversarial Networks (GANs) are Machine Learning (ML) algorithms that have the ability to address competitive resource allocation problems together with detection and mitigation of anomalous behavior. In this paper, we investigate their use in next-generation (NextG) communications within the context of cognitive networks to address i) spectrum sharing, ii) detecting anomalies, and iii) mitigating security attacks. GANs have the following advantages. First, they can learn and synthesize field data, which can be costly, time consuming, and nonrepeatable. Second, they enable pre-training classifiers by using semi-supervised data. Third, they facilitate increased resolution. Fourth, they enable the recovery of corrupted bits in the spectrum. The paper provides the basics of GANs, a comparative discussion on different kinds of GANs, performance measures for GANs in computer vision and image processing as well as wireless applications, a number of datasets for wireless applications, performance measures for general classifiers, a survey of the literature on GANs for i)-iii) above, and future research directions. As a use case of GAN for NextG communications, we show that a GAN can be effectively applied for anomaly detection in signal classification (e.g., user authentication) outperforming another state-of-the-art ML technique such as an autoencoder.

End-to-End Autoencoder Communications with Optimized Interference Suppression

Dec 29, 2021

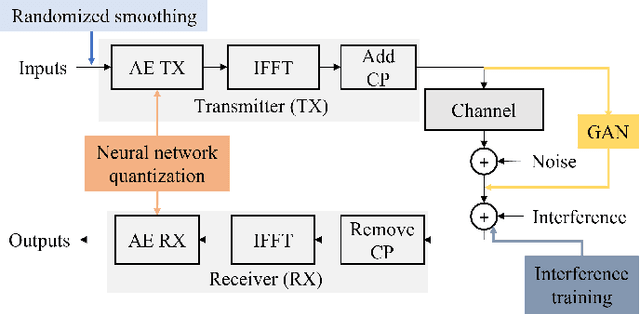

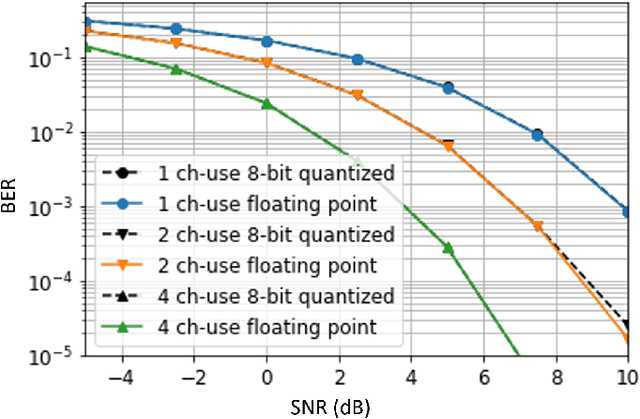

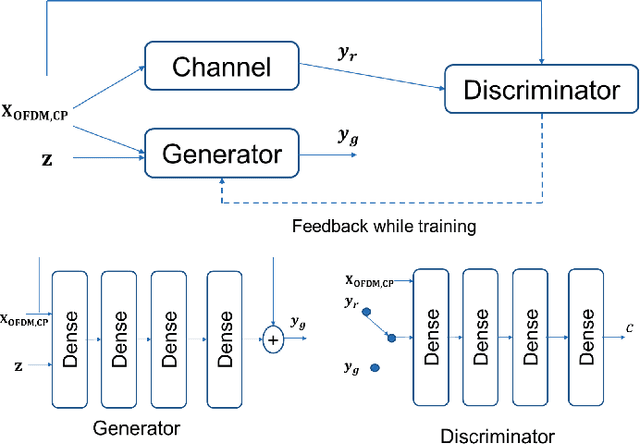

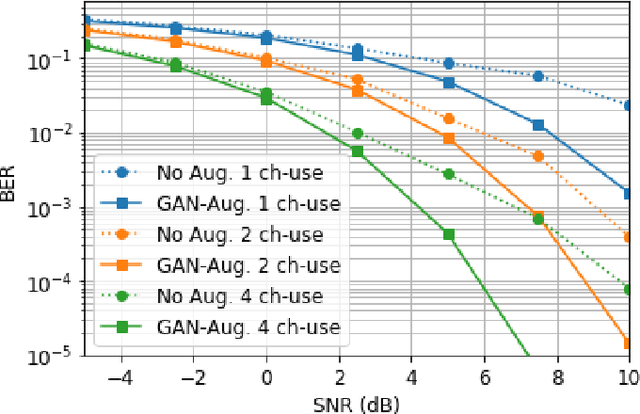

Abstract: An end-to-end communications system based on Orthogonal Frequency Division Multiplexing (OFDM) is modeled as an autoencoder (AE) for which the transmitter (coding and modulation) and receiver (demodulation and decoding) are represented as deep neural networks (DNNs) of the encoder and decoder, respectively. This AE communications approach is shown to outperform conventional communications in terms of bit error rate (BER) under practical scenarios regarding channel and interference effects as well as training data and embedded implementation constraints. A generative adversarial network (GAN) is trained to augment the training data when there is not enough training data available. Also, the performance is evaluated in terms of the DNN model quantization and the corresponding memory requirements for embedded implementation. Then, interference training and randomized smoothing are introduced to train the AE communications to operate under unknown and dynamic interference (jamming) effects on potentially multiple OFDM symbols. Relative to conventional communications, up to 36 dB interference suppression for a channel reuse of four can be achieved by the AE communications with interference training and randomized smoothing. AE communications is also extended to the multiple-input multiple-output (MIMO) case and its BER performance gain with and without interference effects is demonstrated compared to conventional MIMO communications.
