Katrin Schulz

Towards an automated workflow in materials science for combining multi-modal simulative and experimental information using data mining and large language models

Feb 18, 2025

Abstract: To retrieve and compare scientific data from simulations and experiments in materials science, the data must be easily accessible and machine-readable so that materials science phenomena can be qualified and quantified. Recent progress in open science has improved access to data. However, most information remains encoded within scientific documents, which limits the ability to find suitable literature and material properties. This manuscript showcases an automated workflow that extracts the encoded information from scientific literature into a machine-readable data structure of texts, figures, tables, equations and metadata, using natural language processing together with language and vision transformer models to generate a machine-readable database. The database can be enriched with local data, such as unpublished or private material data, leading to knowledge synthesis. The study shows that such an automated workflow accelerates information retrieval, proximate context detection and material property extraction from multi-modal input data, demonstrated here for the research field of microstructural analyses of face-centered cubic single crystals. Ultimately, a Large Language Model (LLM) based on Retrieval-Augmented Generation (RAG) enables a fast and efficient question-answering chatbot.

Deciphering Acoustic Emission with Machine Learning

Nov 25, 2024

Abstract: Acoustic emission signals have been shown to accompany avalanche-like events in materials, such as dislocation avalanches in crystalline solids, collapse of voids in porous matter or domain wall movement in ferroics. The data provided by acoustic emission measurements is tremendously rich, but it is challenging to connect it precisely to the characteristics of the triggering avalanche. In our work we propose a machine learning based method with which one can infer microscopic details of dislocation avalanches in micropillar compression tests from acoustic emission data alone. As demonstrated in the paper, this approach is suitable for predicting the force-time response: it provides outstanding predictions of the temporal location of avalanches and can also predict the magnitude of individual deformation events. Various descriptors (both frequency-dependent and frequency-independent) are used in our machine learning approach, and their importance for the prediction is analysed. The transferability of the method to other specimen sizes is also demonstrated, and its possible application in more generic settings is discussed.

Undesirable biases in NLP: Averting a crisis of measurement

Nov 24, 2022

Abstract: As Natural Language Processing (NLP) technology rapidly develops and spreads into daily life, it becomes crucial to anticipate how its use could harm people. However, our ways of assessing the biases of NLP models have not kept up. While the detection of English gender bias in such models in particular has enjoyed increasing research attention, many of the measures face serious problems, as it is often unclear what they actually measure and how much they are subject to measurement error. In this paper, we provide an interdisciplinary approach to discussing the issue of NLP model bias by adopting the lens of psychometrics, a field specialized in the measurement of concepts like bias that are not directly observable. We pair an introduction of relevant psychometric concepts with a discussion of how they could be used to evaluate and improve bias measures. We also argue that adopting psychometric vocabulary and methodology can make NLP bias research more efficient and transparent.

The Birth of Bias: A case study on the evolution of gender bias in an English language model

Jul 21, 2022

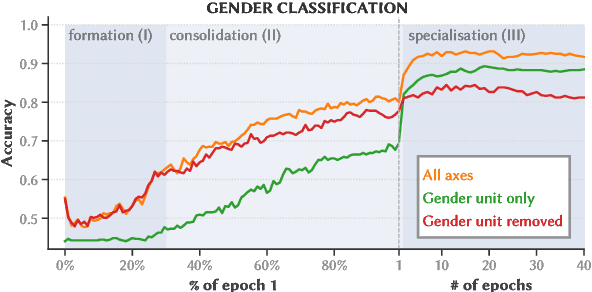

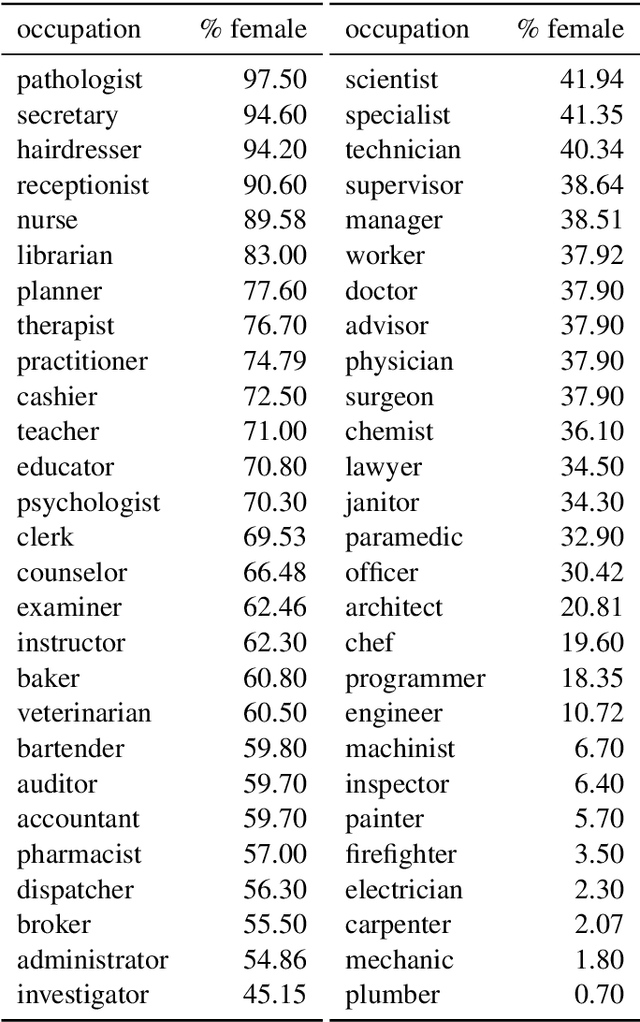

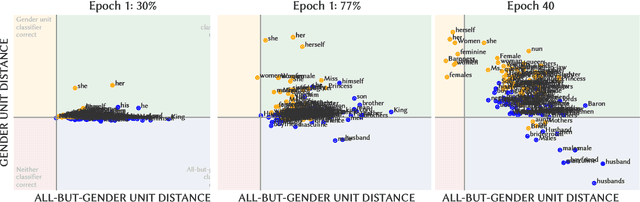

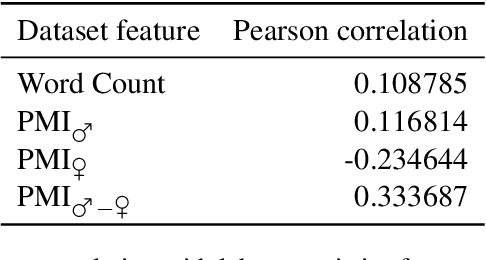

Abstract: Detecting and mitigating harmful biases in modern language models are widely recognized as crucial, open problems. In this paper, we take a step back and investigate how language models come to be biased in the first place. We use a relatively small language model with the LSTM architecture, trained on an English Wikipedia corpus. With full access to the data and to the model parameters as they change during every step of training, we can map in detail how the representation of gender develops, what patterns in the dataset drive this, and how the model's internal state relates to the bias in a downstream task (semantic textual similarity). We find that the representation of gender is dynamic and identify different phases during training. Furthermore, we show that gender information is represented increasingly locally in the input embeddings of the model and that, as a consequence, debiasing these can be effective in reducing the downstream bias. Monitoring the training dynamics allows us to detect an asymmetry in how the female and male gender are represented in the input embeddings. This is important, as it may cause naive mitigation strategies to introduce new undesirable biases. We discuss the relevance of the findings for mitigation strategies more generally and the prospects of generalizing our methods to larger language models, the Transformer architecture, other languages and other undesirable biases.

Observing Interventions: A logic for thinking about experiments

Dec 01, 2021

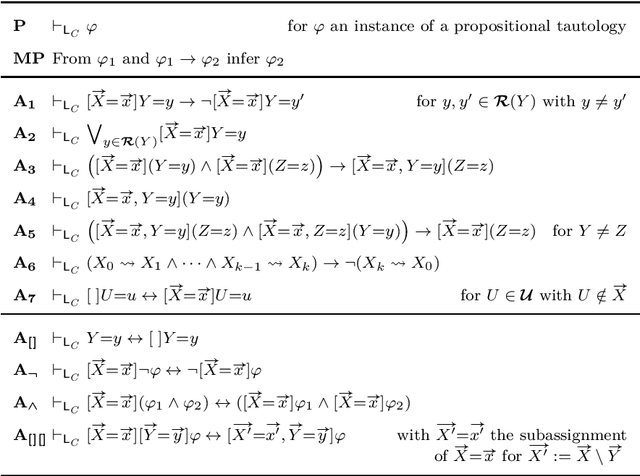

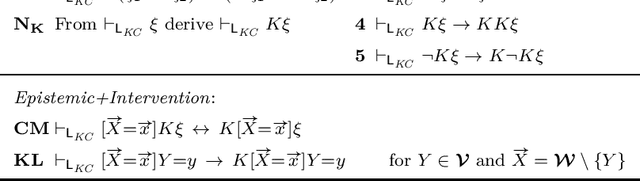

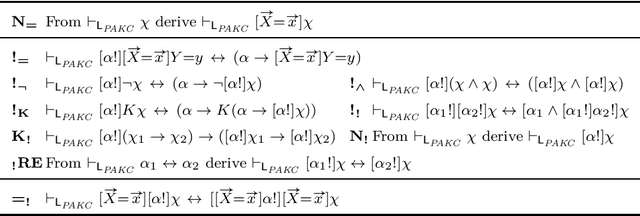

Abstract: This paper makes a first step towards a logic of learning from experiments. For this, we investigate formal frameworks for modeling the interaction of causal and (qualitative) epistemic reasoning. Crucial for our approach is the idea that the notion of an intervention can be used as a formal expression of a (real or hypothetical) experiment. In a first step, we extend the well-known causal models with a simple Hintikka-style representation of the epistemic state of an agent. In the resulting setting, one can talk not only about the knowledge of an agent about the values of variables and how interventions affect them, but also about knowledge update. The resulting logic can model reasoning about thought experiments. However, it is unable to account for learning from experiments, as shown by the fact that it validates the no learning principle for interventions. Therefore, in a second step, we implement a more complex notion of knowledge that allows an agent to observe (measure) certain variables when an experiment is carried out. This extended system does allow for learning from experiments. For all the proposed logical systems, we provide a sound and complete axiomatization.

Thinking About Causation: A Causal Language with Epistemic Operators

Oct 30, 2020

Abstract: This paper proposes a formal framework for modeling the interaction of causal and (qualitative) epistemic reasoning. To this end, we extend the notion of a causal model with a representation of the epistemic state of an agent. On the side of the object language, we add operators to express knowledge and the act of observing new information. We provide a sound and complete axiomatization of the logic, and discuss the relation of this framework to causal team semantics.
