Katie Seaborn

ChatGPT and U(X): A Rapid Review on Measuring the User Experience

Mar 20, 2025

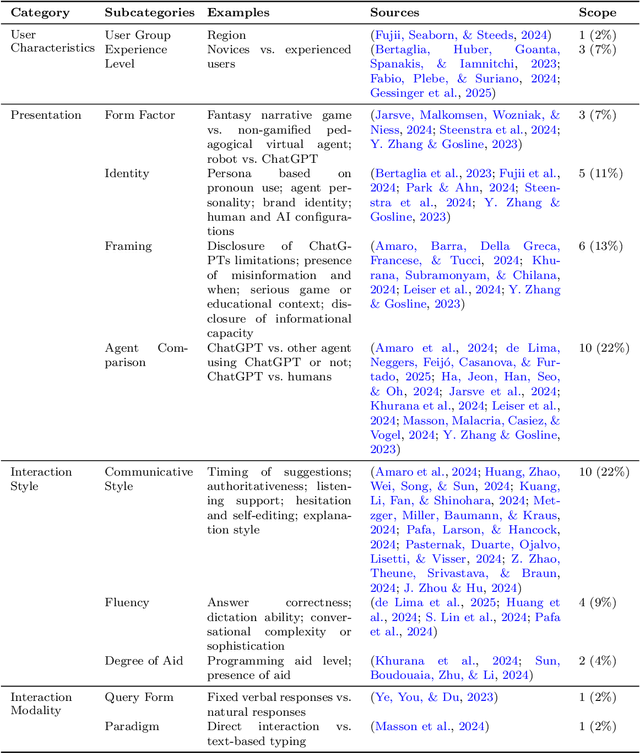

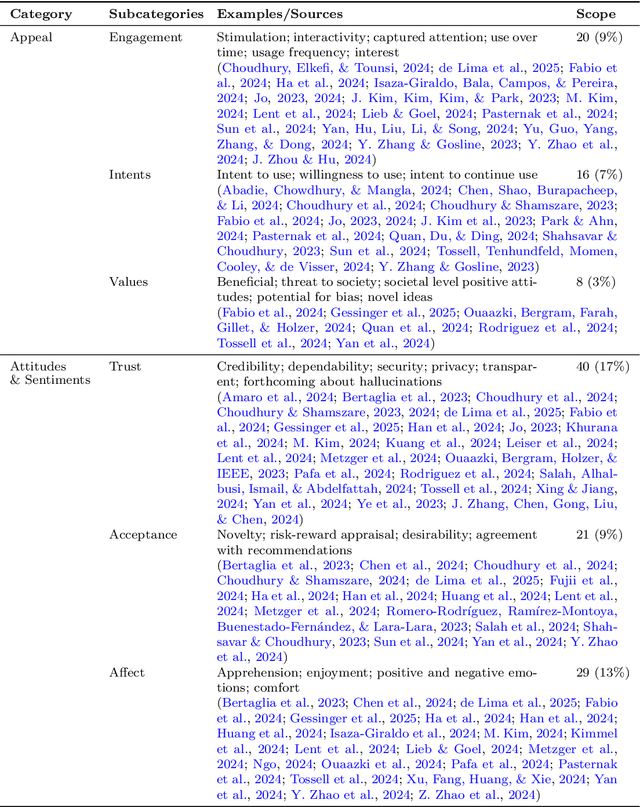

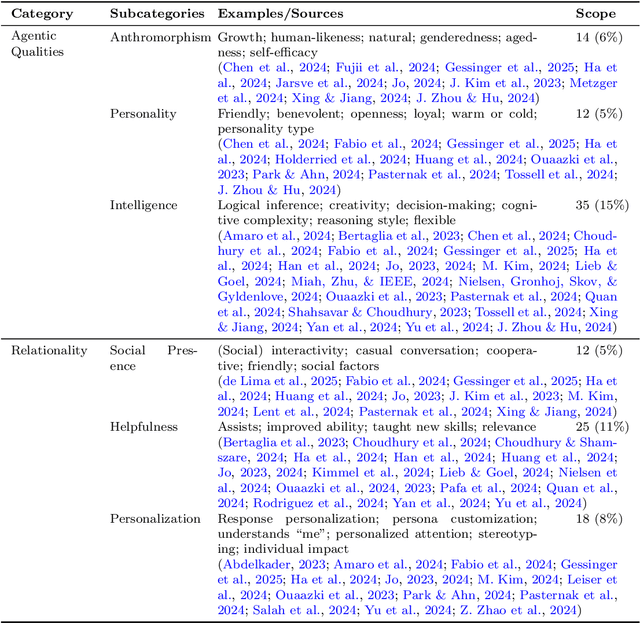

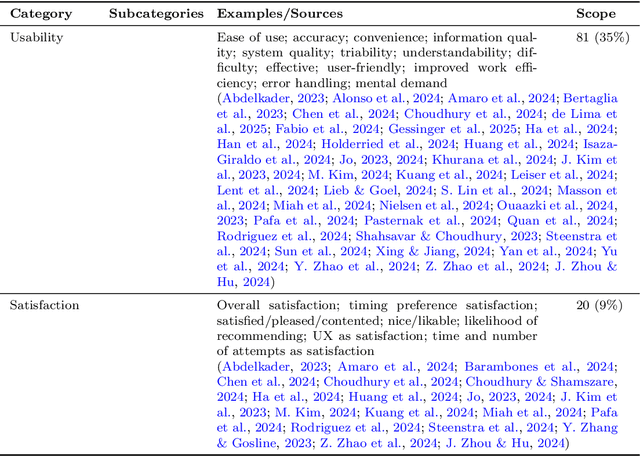

Abstract: ChatGPT, powered by a large language model (LLM), has revolutionized everyday human-computer interaction (HCI) since its 2022 release. While now used by millions around the world, a coherent pathway for evaluating the user experience (UX) ChatGPT offers remains missing. In this rapid review (N = 58), I explored how ChatGPT UX has been approached quantitatively so far. I focused on the independent variables (IVs) manipulated, the dependent variables (DVs) measured, and the methods used for measurement. Findings reveal trends, gaps, and emerging consensus in UX assessments. This work offers a first step towards synthesizing existing approaches to measuring ChatGPT UX, urgent trajectories to advance standardization and breadth, and two preliminary frameworks aimed at guiding future research and tool development. I seek to elevate the field of ChatGPT UX by empowering researchers and practitioners in optimizing user interactions with ChatGPT and similar LLM-based systems.

Bots against Bias: Critical Next Steps for Human-Robot Interaction

Dec 17, 2024

Abstract: We humans are biased - and our robotic creations are biased, too. Bias is a natural phenomenon that drives our perceptions and behavior, including when it comes to socially expressive robots that have humanlike features. Recognizing that we embed bias, knowingly or not, within the design of such robots is crucial to studying its implications for people in modern societies. In this chapter, I consider the multifaceted question of bias in the context of humanoid, AI-enabled, and expressive social robots: Where does bias arise, what does it look like, and what can (or should) we do about it? I offer observations on human-robot interaction (HRI) along two parallel tracks: (1) robots designed in bias-conscious ways and (2) robots that may help us tackle bias in the human world. I outline a curated selection of cases for each track drawn from the latest HRI research and positioned against social, legal, and ethical factors. I also propose a set of critical next steps to tackle the challenges and opportunities on bias within HRI research and practice.

Robotic Backchanneling in Online Conversation Facilitation: A Cross-Generational Study

Sep 25, 2024

Abstract: Japan faces many challenges related to its aging society, including increasing rates of cognitive decline in the population and a shortage of caregivers. Efforts have begun to explore solutions using artificial intelligence (AI), especially socially embodied intelligent agents and robots that can communicate with people. Yet, there has been little research on the compatibility of these agents with older adults in various everyday situations. To this end, we conducted a user study to evaluate a robot that functions as a facilitator for a group conversation protocol designed to prevent cognitive decline. We modified the robot to use backchanneling, a natural human way of speaking, to increase receptiveness of the robot and enjoyment of the group conversation experience. We conducted a cross-generational study with young adults and older adults. Qualitative analyses indicated that younger adults perceived the backchanneling version of the robot as kinder, more trustworthy, and more acceptable than the non-backchanneling robot. Finally, we found that the robot's backchanneling elicited nonverbal backchanneling in older participants.

From "Made In" to Mukokuseki: Exploring the Visual Perception of National Identity in Robots

Aug 30, 2024

Abstract: People read human characteristics into the design of social robots, a visual process with socio-cultural implications. One factor may be nationality, a complex social characteristic that is linked to ethnicity, culture, and other factors of identity that can be embedded in the visual design of robots. Guided by social identity theory (SIT), we explored the notion of "mukokuseki," a visual design characteristic defined by the absence of visual cues to national and ethnic identity in Japanese cultural exports. In a two-phase categorization study (n=212), American (n=110) and Japanese (n=92) participants rated a random selection of nine robot stimuli from America and Japan, plus multinational Pepper. We found evidence of made-in and two kinds of mukokuseki effects. We offer suggestions for the visual design of mukokuseki robots that may interact with people from diverse backgrounds. Our findings have implications for robots and social identity, the viability of robotic exports, and the use of robots internationally.

* 35 pages

Silver-Tongued and Sundry: Exploring Intersectional Pronouns with ChatGPT

May 13, 2024

Abstract: ChatGPT is a conversational agent built on a large language model. Trained on a significant portion of human output, ChatGPT can mimic people to a degree. As such, we need to consider what social identities ChatGPT simulates (or can be designed to simulate). In this study, we explored the case of identity simulation through Japanese first-person pronouns, which are tightly connected to social identities in intersectional ways, i.e., intersectional pronouns. We conducted a controlled online experiment where people from two regions in Japan (Kanto and Kinki) witnessed interactions with ChatGPT using ten sets of first-person pronouns. We discovered that pronouns alone can evoke perceptions of social identities in ChatGPT at the intersections of gender, age, region, and formality, with caveats. This work highlights the importance of pronoun use for social identity simulation, provides a language-based methodology for culturally-sensitive persona development, and advances the potential of intersectional identities in intelligent agents.

* Honorable Mention award (top 5%) at CHI '24

Qualitative Approaches to Voice UX

Apr 23, 2024

Abstract: Voice is a natural mode of expression offered by modern computer-based systems. Qualitative perspectives on voice-based user experiences (voice UX) offer rich descriptions of complex interactions that numbers alone cannot fully represent. We conducted a systematic review of the literature on qualitative approaches to voice UX, capturing the nature of this body of work in a systematic map and offering a qualitative synthesis of findings. We highlight the benefits of qualitative methods for voice UX research, identify opportunities for increasing rigour in methods and outcomes, and distill patterns of experience across a diversity of devices and modes of qualitative praxis.

Coimagining the Future of Voice Assistants with Cultural Sensitivity

Mar 26, 2024

Abstract: Voice assistants (VAs) are becoming a feature of our everyday life. Yet, the user experience (UX) is often limited, leading to underuse, disengagement, and abandonment. Co-designing interactions for VAs with potential end-users can be useful. Crowdsourcing this process online and anonymously may add value. However, most work has been done in the English-speaking West on dialogue data sets. We must be sensitive to cultural differences in language, social interactions, and attitudes towards technology. Our aims were to explore the value of co-designing VAs in the non-Western context of Japan and demonstrate the necessity of cultural sensitivity. We conducted an online elicitation study (N = 135) where Americans (n = 64) and Japanese people (n = 71) imagined dialogues (N = 282) and activities (N = 73) with future VAs. We discuss the implications for coimagining interactions with future VAs, offer design guidelines for the Japanese and English-speaking US contexts, and suggest opportunities for cultural plurality in VA design and scholarship.

* 21 pages

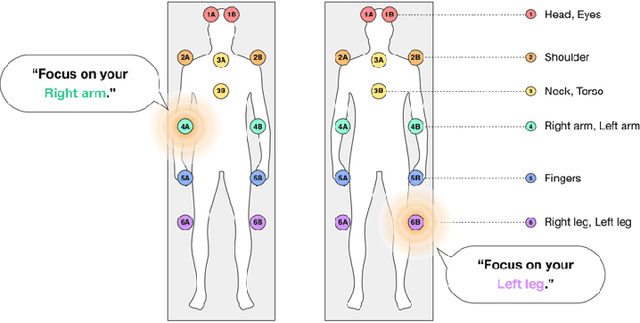

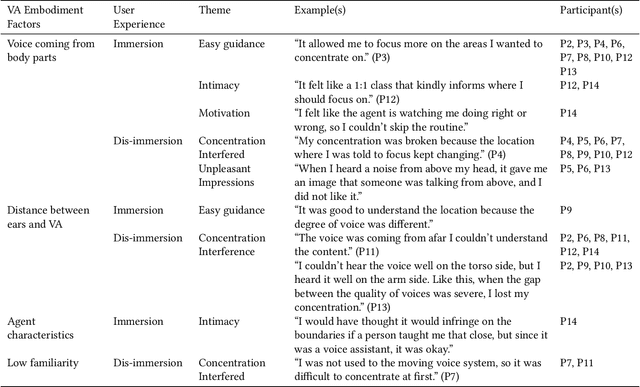

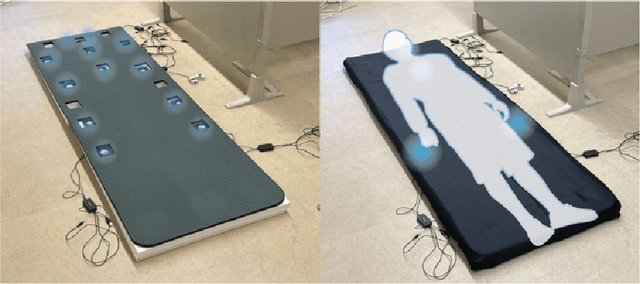

Dis/Immersion in Mindfulness Meditation with a Wandering Voice Assistant

Apr 22, 2023

Abstract: Mindfulness meditation is a validated means of helping people manage stress. Voice-based virtual assistants (VAs) in smart speakers, smartphones, and smart environments can assist people in carrying out mindfulness meditation through guided experiences. However, the common fixed location embodiment of VAs makes it difficult to provide intuitive support. In this work, we explored the novel embodiment of a "wandering voice" that is co-located with the user and "moves" with the task. We developed a multi-speaker VA embedded in a yoga mat that changes location along the body according to the meditation experience. We conducted a qualitative user study in two sessions, comparing a typical fixed smart speaker to the wandering VA embodiment. Thick descriptions from interviews with twelve people revealed sometimes simultaneous experiences of immersion and dis-immersion. We offer design implications for "wandering voices" and a new paradigm for VA embodiment that may extend to guidance tasks in other contexts.

* 6 pages

"I'm" Lost in Translation: Pronoun Missteps in Crowdsourced Data Sets

Apr 22, 2023

Abstract: As virtual assistants continue to be taken up globally, there is an ever-greater need for these speech-based systems to communicate naturally in a variety of languages. Crowdsourcing initiatives have focused on multilingual translation of big, open data sets for use in natural language processing (NLP). Yet, language translation is often not one-to-one, and biases can trickle in. In this late-breaking work, we focus on the case of pronouns translated between English and Japanese in the crowdsourced Tatoeba database. We found that masculine pronoun biases were present overall, even though plurality in language was accounted for in other ways. Importantly, we detected biases in the translation process that reflect nuanced reactions to the presence of feminine, neutral, and/or non-binary pronouns. We raise the issue of translation bias for pronouns and offer a practical solution to embed plurality in NLP data sets.

* 6 pages

Nonverbal Cues in Human-Robot Interaction: A Communication Studies Perspective

Apr 22, 2023

Abstract: Communication between people is characterized by a broad range of nonverbal cues. Transferring these cues into the design of robots and other artificial agents that interact with people may foster more natural, inviting, and accessible experiences. In this position paper, we offer a series of definitive nonverbal codes for human-robot interaction (HRI) that address the five human sensory systems (visual, auditory, haptic, olfactory, gustatory) drawn from the field of communication studies. We discuss how these codes can be translated into design patterns for HRI using a curated sample of the communication studies and HRI literatures. As nonverbal codes are an essential mode in human communication, we argue that integrating robotic nonverbal codes in HRI will afford robots a feeling of "aliveness" or "social agency" that would otherwise be missing. We end with suggestions for research directions to stimulate work on nonverbal communication within the field of HRI and improve communication between humans and robots.

* 21 pages
