Shruti Chandra

Transcending the "Male Code": Implicit Masculine Biases in NLP Contexts

Apr 22, 2023

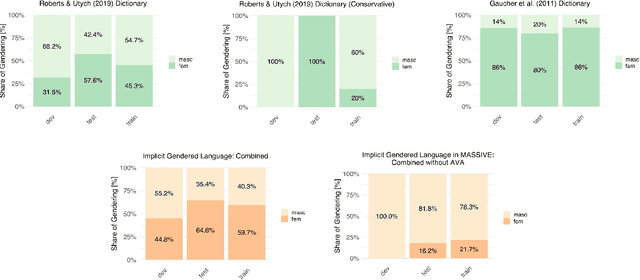

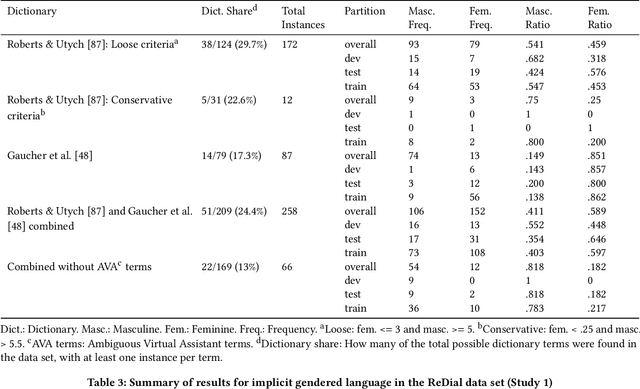

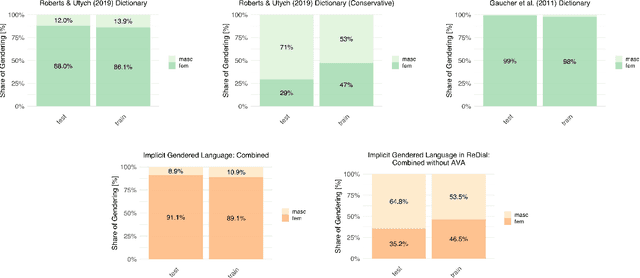

Abstract: Critical scholarship has elevated the problem of gender bias in data sets used to train virtual assistants (VAs). Most work has focused on explicit biases in language, especially against women, girls, femme-identifying people, and genderqueer folk; implicit associations through word embeddings; and limited models of gender and masculinities, especially toxic masculinities, conflation of sex and gender, and a sex/gender binary framing of the masculine as diametric to the feminine. Yet, we must also interrogate how masculinities are "coded" into language and the assumption of "male" as the linguistic default: implicit masculine biases. To this end, we examined two natural language processing (NLP) data sets. We found that when gendered language was present, so were gender biases and especially masculine biases. Moreover, these biases related in nuanced ways to the NLP context. We offer a new dictionary called AVA that covers ambiguous associations between gendered language and the language of VAs.

* 19 pages

Not Only WEIRD but "Uncanny"? A Systematic Review of Diversity in Human-Robot Interaction Research

Apr 18, 2023

Abstract: Critical voices within and beyond the scientific community have pointed to a grave matter of concern regarding who is included in research and who is not. Subsequent investigations have revealed an extensive form of sampling bias across a broad range of disciplines that conduct human subjects research called "WEIRD": Western, Educated, Industrial, Rich, and Democratic. Recent work has indicated that this pattern exists within human-computer interaction (HCI) research, as well. How then does human-robot interaction (HRI) fare? And could there be other patterns of sampling bias at play, perhaps those especially relevant to this field of study? We conducted a systematic review of the premier ACM/IEEE International Conference on Human-Robot Interaction (2006-2022) to discover whether and how WEIRD HRI research is. Importantly, we expanded our purview to other factors of representation highlighted by critical work on inclusion and intersectionality as potentially underreported, overlooked, and even marginalized factors of human diversity. Findings from 827 studies across 749 papers confirm that participants in HRI research also tend to be drawn from WEIRD populations. Moreover, we find evidence of limited, obscured, and possible misrepresentation in participant sampling and reporting along key axes of diversity: sex and gender, race and ethnicity, age, sexuality and family configuration, disability, body type, ideology, and domain expertise. We discuss methodological and ethical implications for recruitment, analysis, and reporting, as well as the significance for HRI as a base of knowledge.
