Karthik Sundaresan

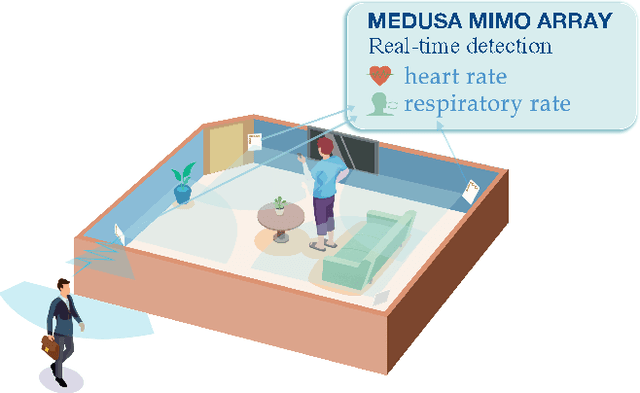

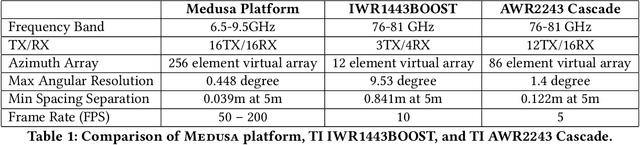

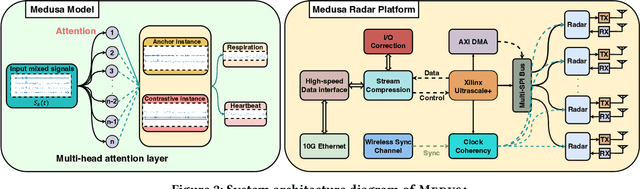

MEDUSA: Scalable Biometric Sensing in the Wild through Distributed MIMO Radars

Oct 10, 2023

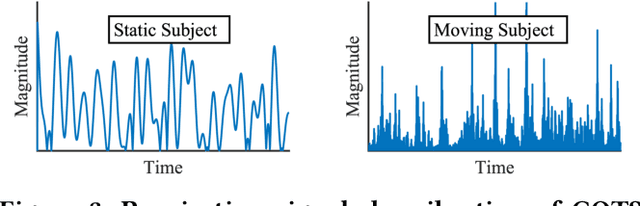

Abstract: Radar-based techniques for detecting vital signs have shown promise for continuous contactless vital sign sensing and healthcare applications. However, real-world indoor environments pose significant challenges for existing vital sign monitoring systems. These include signal blockage in non-line-of-sight (NLOS) situations, movement of human subjects, and changes in location and orientation. Additionally, existing systems fail to address the challenge of tracking multiple targets simultaneously. To overcome these challenges, we present MEDUSA, a novel coherent ultra-wideband (UWB) based distributed multiple-input multiple-output (MIMO) radar system that allows users to customize and disperse its $16 \times 16$ array into sub-arrays. MEDUSA takes advantage of the diversity benefits of distributed yet wirelessly synchronized MIMO arrays to enable robust vital sign monitoring in real-world, daily living environments where human targets are moving and surrounded by obstacles. We have developed a scalable, self-supervised contrastive learning model that integrates seamlessly with our hardware platform. Each attention weight within the model corresponds to a specific Tx-Rx antenna pair. The model recovers accurate vital sign waveforms by decomposing and correlating the mixed received signals, which comprise human motion, mobility, noise, and vital signs. MEDUSA's performance has been validated through extensive evaluations involving 21 participants and over 200 hours of collected data (3.75 TB in total, with 1.89 TB for static subjects and 1.86 TB for moving subjects), showing an average gain of 20% compared to existing systems employing COTS radar sensors. This demonstrates MEDUSA's spatial diversity gain for real-world vital sign monitoring, encompassing target and environmental dynamics in familiar and unfamiliar indoor environments.
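The idea that each attention weight corresponds to one Tx-Rx antenna pair can be illustrated with a minimal sketch. This is not MEDUSA's model: the function names `softmax` and `fuse_pairs`, the per-pair scores, and the simple weighted sum are all assumptions made for illustration, and the actual architecture is not described in the abstract.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_pairs(signals, scores):
    """Weighted sum of per-pair signals (hypothetical helper).

    signals: dict mapping (tx, rx) -> list of samples, all the same length
    scores:  dict mapping (tx, rx) -> unnormalized attention score
    Returns (weights_by_pair, fused_signal).
    """
    pairs = sorted(signals)
    weights = softmax([scores[p] for p in pairs])
    length = len(signals[pairs[0]])
    fused = [0.0] * length
    for weight, pair in zip(weights, pairs):
        for i, sample in enumerate(signals[pair]):
            fused[i] += weight * sample
    return dict(zip(pairs, weights)), fused


# Toy example: two antenna pairs with equal scores get equal weight,
# so the fused signal is the plain average of the two.
toy_signals = {(0, 0): [1.0, 2.0, 3.0], (0, 1): [3.0, 2.0, 1.0]}
toy_scores = {(0, 0): 0.5, (0, 1): 0.5}
toy_weights, toy_fused = fuse_pairs(toy_signals, toy_scores)
```

In a learned model the scores would come from the network rather than being fixed by hand; a pair blocked by an obstacle would then receive a low weight and contribute little to the fused waveform.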

ARES: Accurate, Autonomous, Near Real-time 3D Reconstruction using Drones

Apr 20, 2021

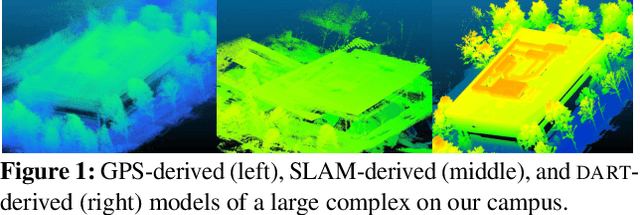

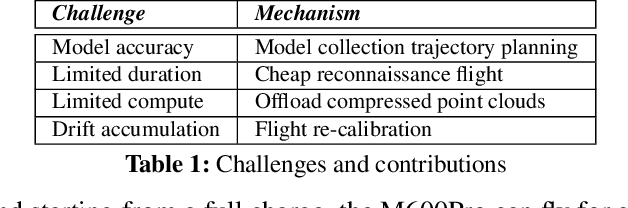

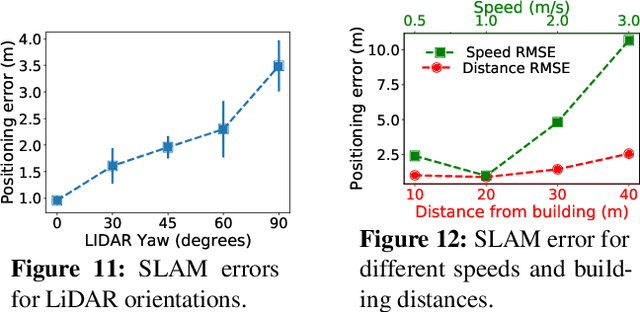

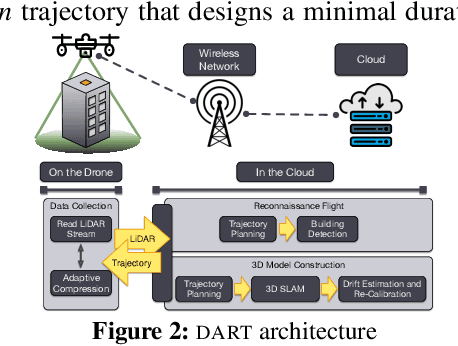

Abstract: Drones will revolutionize 3D modeling. A 3D model represents an accurate reconstruction of an object or structure. This paper explores the design and implementation of ARES, which provides near real-time, accurate reconstruction of 3D models using a drone-mounted LiDAR; such a capability can be useful to document construction or check aircraft integrity between flights. Accurate reconstruction requires high drone positioning accuracy, and, because GPS can be inaccurate, ARES uses SLAM. However, in doing so it must deal with several competing constraints: drone battery and compute resources, SLAM error accumulation, and LiDAR resolution. ARES uses careful trajectory design to find a sweet spot in this constraint space, performs a fast reconnaissance flight to narrow the search area for structures, and offloads expensive computations to the cloud by streaming compressed LiDAR data over LTE. ARES reconstructs large structures to within tens of centimeters and incurs less than 100 ms compute latency.

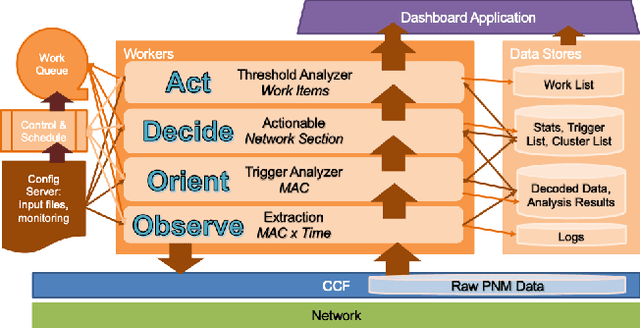

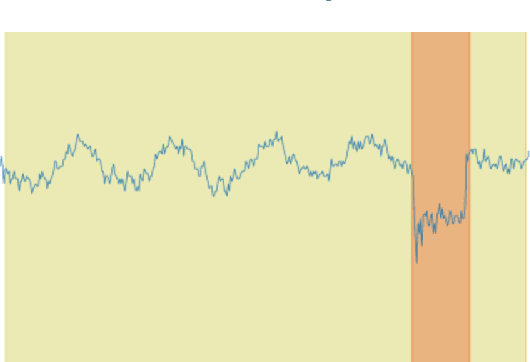

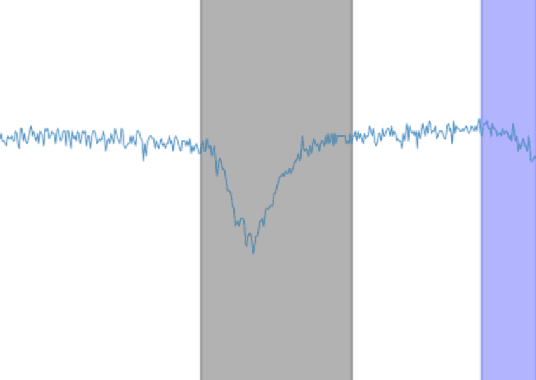

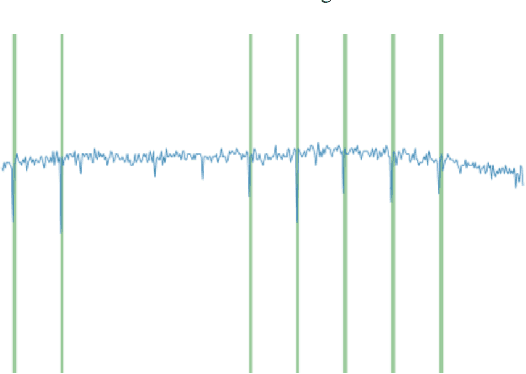

Proactive Network Maintenance using Fast, Accurate Anomaly Localization and Classification on 1-D Data Series

Jul 17, 2020

Abstract: Proactive network maintenance (PNM) is the concept of using data from a network to identify and locate network faults, many or all of which could worsen to become service failures. The separation between the network fault and the service failure affords early detection of problems in the network to allow PNM to take place. Consequently, PNM is a form of prognostics and health management (PHM). The problem of localizing and classifying anomalies on 1-dimensional data series has been under research for years. We introduce a new algorithm that leverages Deep Convolutional Neural Networks to efficiently and accurately detect anomalies and events on data series, and it reaches 97.82% mean average precision (mAP) in our evaluation.

Monitoring Browsing Behavior of Customers in Retail Stores via RFID Imaging

Jul 07, 2020

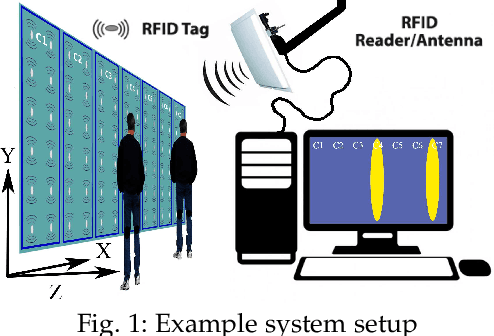

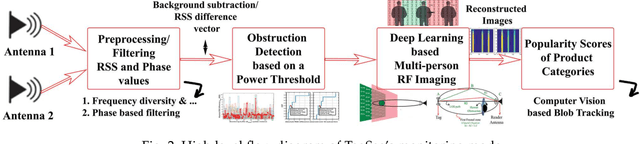

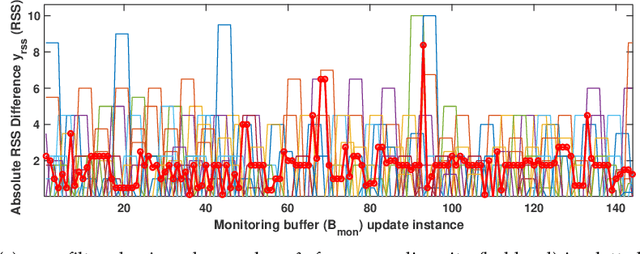

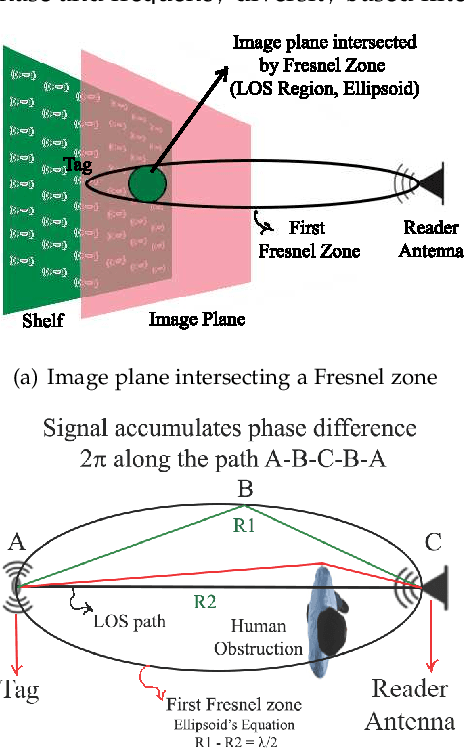

Abstract: In this paper, we propose to use commercial off-the-shelf (COTS) monostatic RFID devices (i.e., devices that use a single antenna at a time for both transmitting and receiving RFID signals to and from the tags) to monitor the browsing activity of customers in front of display items in places such as retail stores. To this end, we propose TagSee, a multi-person imaging system based on monostatic RFID imaging. TagSee is based on the insight that when customers browse the items on a shelf, they stand between the reader and the tags deployed along the boundaries of the shelf. This changes the multipaths along which the RFID signals travel, so both the RSS and phase values of the signals that the reader receives change. Based on these variations observed by the reader, TagSee constructs a coarse-grained image of the customers. Afterwards, TagSee identifies the items that are being browsed by the customers by analyzing the constructed images. The key novelty of this paper lies in monitoring the browsing behavior of multiple customers in front of display items by constructing coarse-grained images via robust RFID imaging based on analytical-model-driven deep learning. To achieve this, we first mathematically formulate the problem of imaging humans using monostatic RFID devices and derive an approximate analytical imaging model that correlates the variations caused by human obstructions in the RFID signals. Based on this model, we then develop a deep learning framework to robustly image customers with high accuracy. We implement TagSee using an Impinj Speedway R420 reader and SMARTRAC DogBone RFID tags. TagSee can achieve a TPR above roughly 90% and an FPR below roughly 10% in multi-person scenarios using training data from just 3-4 users.
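For readers unfamiliar with the two metrics quoted above, the following minimal sketch computes the true positive rate (TPR) and false positive rate (FPR) from binary labels. The function name `tpr_fpr` and the toy labels are hypothetical and are not taken from the TagSee evaluation; they only illustrate the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN).

```python
def tpr_fpr(truth, predicted):
    """Return (TPR, FPR) for two equal-length sequences of 0/1 labels.

    truth:     1 if an item was actually being browsed, else 0
    predicted: 1 if the system reported the item as browsed, else 0
    """
    tp = sum(1 for t, p in zip(truth, predicted) if t and p)
    fn = sum(1 for t, p in zip(truth, predicted) if t and not p)
    fp = sum(1 for t, p in zip(truth, predicted) if not t and p)
    tn = sum(1 for t, p in zip(truth, predicted) if not t and not p)
    # Guard against division by zero when a class is absent.
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Toy labels (made up for illustration): 4 browsed items, 4 not browsed.
example_truth = [1, 1, 1, 0, 0, 0, 0, 1]
example_pred = [1, 1, 0, 0, 1, 0, 0, 1]
example_tpr, example_fpr = tpr_fpr(example_truth, example_pred)
# Here TP = 3, FN = 1, FP = 1, TN = 3, so TPR = 0.75 and FPR = 0.25.
```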
