Kang L. Wang

University of California, Los Angeles

Spintronic Bayesian Hardware Driven by Stochastic Magnetic Domain Wall Dynamics

Jul 23, 2025

Abstract: As artificial intelligence (AI) advances into diverse applications, ensuring the reliability of AI models is increasingly critical. Conventional neural networks offer strong predictive capabilities but produce deterministic outputs without inherent uncertainty estimation, limiting their reliability in safety-critical domains. Probabilistic neural networks (PNNs), which introduce randomness, have emerged as a powerful approach for enabling intrinsic uncertainty quantification. However, traditional CMOS architectures are inherently designed for deterministic operation and actively suppress intrinsic randomness. This poses a fundamental challenge for implementing PNNs, as probabilistic processing introduces significant computational overhead. To address this challenge, we introduce a Magnetic Probabilistic Computing (MPC) platform, an energy-efficient, scalable hardware accelerator that leverages intrinsic magnetic stochasticity for uncertainty-aware computing. This physics-driven strategy utilizes spintronic systems based on magnetic domain walls (DWs) and their dynamics to establish a new paradigm of physical probabilistic computing for AI. The MPC platform integrates three key mechanisms: thermally induced DW stochasticity, voltage-controlled magnetic anisotropy (VCMA), and tunneling magnetoresistance (TMR), enabling fully electrical and tunable probabilistic functionality at the device level. As a representative demonstration, we implement a Bayesian Neural Network (BNN) inference structure and validate its functionality on CIFAR-10 classification tasks. Compared to standard 28 nm CMOS implementations, our approach achieves a seven-orders-of-magnitude improvement in the overall figure of merit, with substantial gains in area efficiency, energy consumption, and speed. These results underscore the MPC platform's potential to enable reliable and trustworthy physical AI systems.
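To make the notion of uncertainty-aware inference concrete, here is a minimal software sketch of Monte Carlo prediction with random weights. It assumes Gaussian weight distributions and a single logistic output; neither is stated in the abstract. Python's pseudo-random generator stands in for the device-level stochasticity, and the names `sample_forward`, `predictive`, `w_mean`, and `w_std` are hypothetical.

```python
import math
import random


def sample_forward(x, w_mean, w_std, rng):
    """One stochastic forward pass: draw weights, then apply a logistic unit."""
    # Assumption: each weight is an independent Gaussian (not from the paper).
    w = [rng.gauss(m, s) for m, s in zip(w_mean, w_std)]
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def predictive(x, w_mean, w_std, n_samples=200, seed=0):
    """Return the mean and standard deviation of the sampled outputs."""
    rng = random.Random(seed)
    probs = [sample_forward(x, w_mean, w_std, rng) for _ in range(n_samples)]
    mean = sum(probs) / n_samples
    var = sum((p - mean) ** 2 for p in probs) / n_samples
    return mean, math.sqrt(var)


x = [1.0, -0.5, 2.0]
w_mean = [0.3, 0.8, -0.1]

# Noisy weights give a spread of outputs; zero-variance weights do not.
mean_noisy, std_noisy = predictive(x, w_mean, [0.5, 0.5, 0.5])
mean_fixed, std_fixed = predictive(x, w_mean, [0.0, 0.0, 0.0])
```

The spread of the sampled outputs (`std_noisy`) serves as a simple uncertainty estimate, which a deterministic network (`std_fixed`, effectively zero) cannot provide.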

Unitary Multi-Margin BERT for Robust Natural Language Processing

Oct 16, 2024

Abstract: Recent developments in adversarial attacks on deep learning leave many mission-critical natural language processing (NLP) systems at risk of exploitation. To address the lack of computationally efficient adversarial defense methods, this paper reports a novel, universal technique that drastically improves the robustness of Bidirectional Encoder Representations from Transformers (BERT) by combining unitary weights with a multi-margin loss. We discover that the marriage of these two simple ideas amplifies the protection against malicious interference. Our model, the unitary multi-margin BERT (UniBERT), boosts post-attack classification accuracies significantly, by 5.3% to 73.8%, while maintaining competitive pre-attack accuracies. Furthermore, the tradeoff between pre-attack and post-attack accuracy can be adjusted via a single scalar parameter to best fit the design requirements of the target application.
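For readers unfamiliar with the multi-margin loss, the sketch below shows the standard multi-class hinge form (the same definition used by common deep learning libraries with exponent 1 and no class weights). Whether UniBERT uses exactly this variant, and whether the "single scalar parameter" in the abstract is the margin, are assumptions here; the function name is hypothetical.

```python
def multi_margin_loss(scores, target, margin=1.0):
    """Multi-class hinge loss for one example.

    Penalizes every non-target class whose score comes within `margin`
    of the target class score, averaged over the number of classes.
    """
    target_score = scores[target]
    total = sum(
        max(0.0, margin - target_score + s)
        for i, s in enumerate(scores)
        if i != target
    )
    return total / len(scores)


scores = [2.0, 0.5, 1.5]  # illustrative class scores, true class is index 0

loss_small_margin = multi_margin_loss(scores, target=0, margin=1.0)
loss_large_margin = multi_margin_loss(scores, target=0, margin=2.0)
```

A larger margin demands a wider gap between the correct class and its competitors, so the same scores incur a larger loss.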

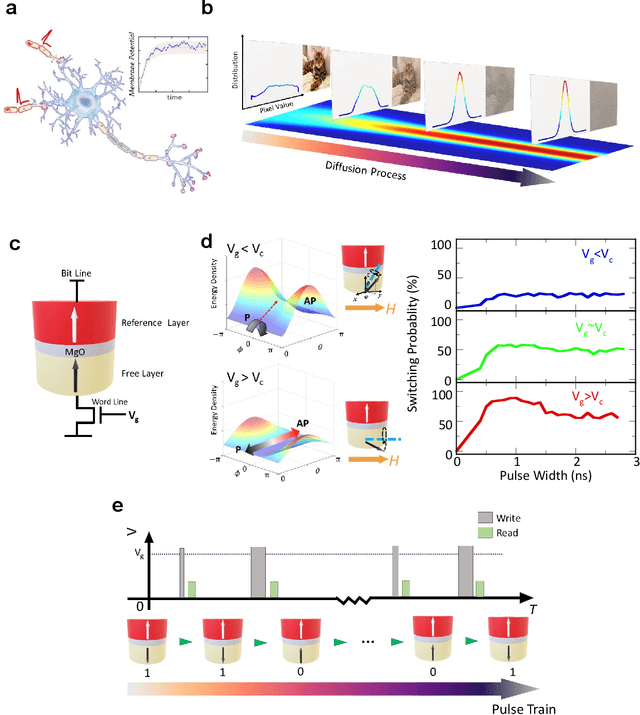

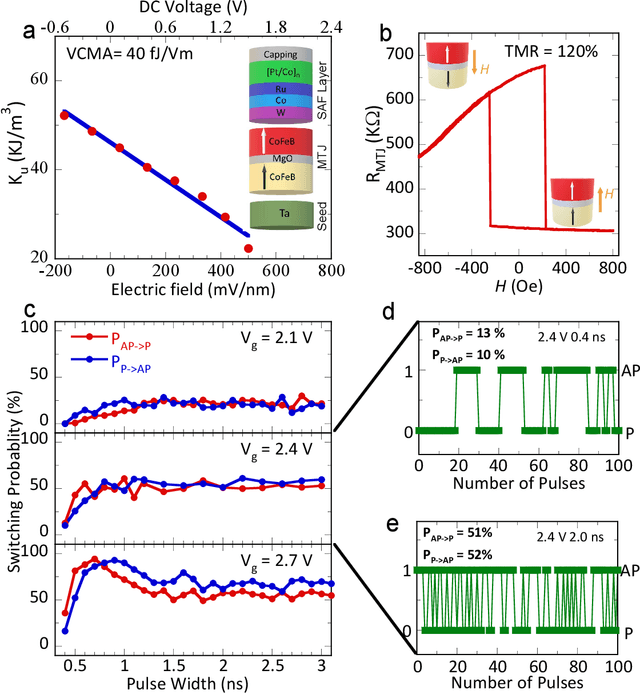

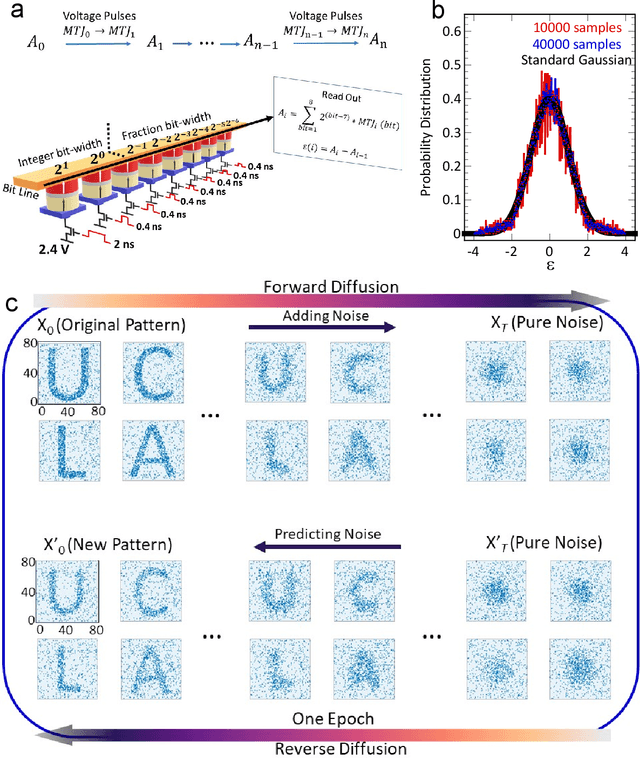

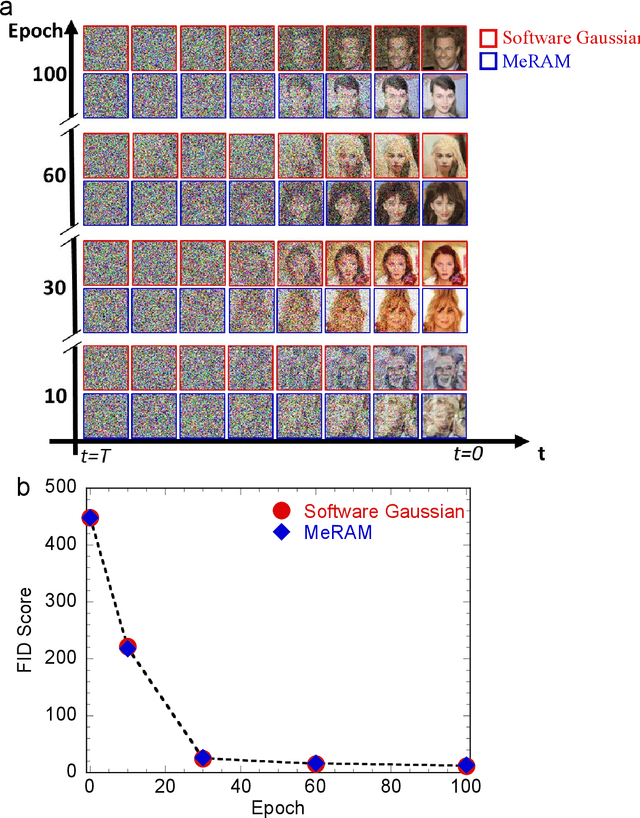

Voltage-Controlled Magnetoelectric Devices for Neuromorphic Diffusion Process

Jul 17, 2024

Abstract: Stochastic diffusion processes are pervasive in nature, from the seemingly erratic Brownian motion to the complex interactions of synaptically coupled spiking neurons. Recently, drawing inspiration from Langevin dynamics, neuromorphic diffusion models were proposed and have become one of the major breakthroughs in the field of generative artificial intelligence. Unlike discriminative models, which are well developed for classification and regression tasks, diffusion models and other generative models such as ChatGPT aim to create content based on learned context. However, the more complex algorithms of these models result in high computational costs on today's technologies, creating an efficiency bottleneck and impeding further development. Here, we develop a spintronic voltage-controlled magnetoelectric memory hardware for the neuromorphic diffusion process. The in-memory computing capability of our spintronic devices goes beyond the current von Neumann architecture, in which memory and computing units are separated. Together with the non-volatility of magnetic memory, we can achieve high-speed and low-cost computing, which is desirable for the increasing scale of generative models in the current era. We experimentally demonstrate that the hardware-based true random diffusion process can be implemented for image generation and achieves image quality comparable to software-based training, as measured by the Fréchet inception distance (FID) score, with roughly 10^3 times better energy-per-bit-per-area than traditional hardware.
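As an illustration of what a diffusion process looks like in software, the sketch below applies a common discrete-time forward noising step, in which a value is repeatedly shrunk and perturbed by Gaussian noise. The abstract does not say which formulation the authors use, so this variance-preserving update, the constant `betas` schedule, and the function name are all assumptions; Python's pseudo-random generator stands in for the hardware random source.

```python
import math
import random


def forward_diffusion(x0, betas, rng):
    """Run the forward noising chain on a scalar and return the final value."""
    x = x0
    for beta in betas:
        noise = rng.gauss(0.0, 1.0)
        # Shrink the signal and inject noise so the variance tends toward 1.
        x = math.sqrt(1.0 - beta) * x + math.sqrt(beta) * noise
    return x


rng = random.Random(0)
betas = [0.05] * 200  # assumed constant noise schedule

# Start every chain at the same value and inspect the final distribution.
samples = [forward_diffusion(3.0, betas, rng) for _ in range(2000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
```

After enough steps the starting value is forgotten and the samples are close to standard normal; a generative model is then trained to reverse this chain.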

Max and Coincidence Neurons in Neural Networks

Oct 04, 2021

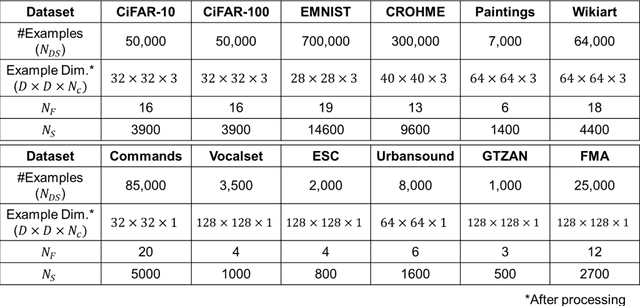

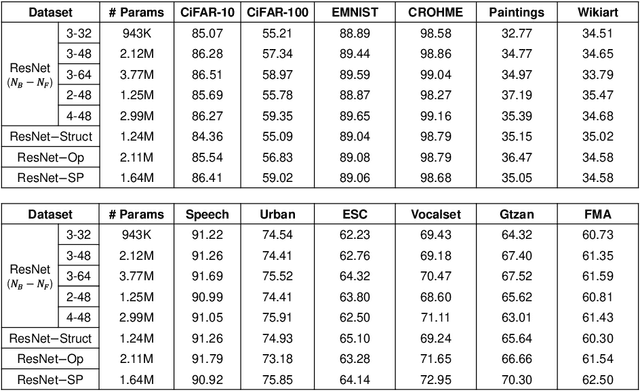

Abstract: Network design has been a central topic in machine learning. Large amounts of effort have been devoted to creating efficient architectures through manual exploration as well as automated neural architecture search. However, today's architectures have yet to consider the diversity of neurons and the existence of neurons with specific processing functions. In this work, we optimize networks containing models of the max and coincidence neurons using neural architecture search, and analyze the structure, operations, and neurons of the optimized networks to develop a signal-processing ResNet. The developed network achieves an average 2% improvement in accuracy and a 25% reduction in network size across a variety of datasets, demonstrating the importance of neuronal functions in creating compact, efficient networks.

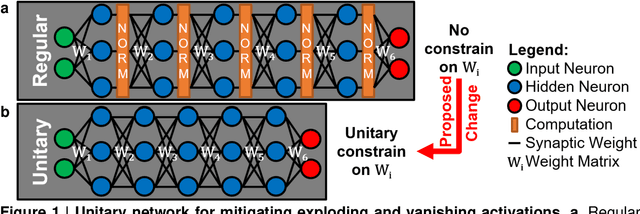

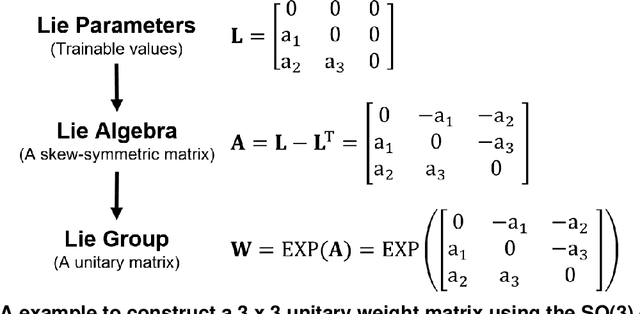

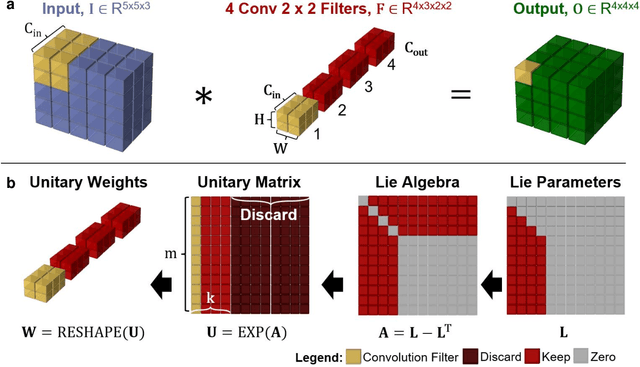

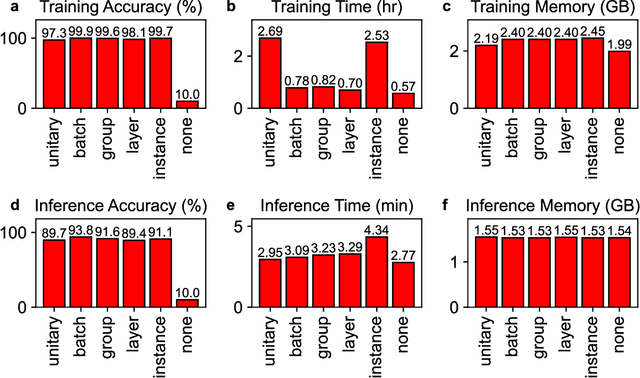

Deep Convolutional Neural Networks with Unitary Weights

Feb 23, 2021

Abstract: While normalizations aim to fix the exploding and vanishing gradient problem in deep neural networks, they have drawbacks in speed or accuracy because of their dependency on dataset statistics. This work is a comprehensive study of a novel method based on unitary synaptic weights derived from Lie groups to construct intrinsically stable neural systems. Here we show that unitary convolutional neural networks deliver up to 32% faster inference speeds while maintaining competitive prediction accuracy. Unlike prior art restricted to square synaptic weights, we expand the unitary networks to weights of any size and dimension.
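One standard way to obtain a norm-preserving weight matrix from a Lie group is to exponentiate an element of its Lie algebra: for real weights, the exponential of a skew-symmetric matrix is orthogonal. The sketch below illustrates this for a small square matrix using a truncated Taylor series. The abstract does not specify the authors' construction (in particular how they handle non-square weights), so this is an assumed illustration and every function name is hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def skew_symmetric(params, n):
    """Build A with A^T = -A from n*(n-1)/2 free parameters."""
    a = [[0.0] * n for _ in range(n)]
    it = iter(params)
    for i in range(n):
        for j in range(i + 1, n):
            v = next(it)
            a[i][j] = v
            a[j][i] = -v
    return a


def expm(a, terms=30):
    """Matrix exponential via a truncated Taylor series (small matrices only)."""
    result = identity(len(a))
    term = identity(len(a))
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, a)]
        result = [[r + t for r, t in zip(rr, tr)]
                  for rr, tr in zip(result, term)]
    return result


rng = random.Random(0)
n = 4
params = [rng.uniform(-1.0, 1.0) for _ in range(n * (n - 1) // 2)]
W = expm(skew_symmetric(params, n))

# Orthogonality check: W^T W should be the identity.
gram = matmul([list(r) for r in zip(*W)], W)
max_dev = max(abs(gram[i][j] - (1.0 if i == j else 0.0))
              for i in range(n) for j in range(n))

# Norm preservation: applying W to a vector keeps its length.
v = [1.0, -2.0, 0.5, 3.0]
Wv = [sum(W[i][j] * v[j] for j in range(n)) for i in range(n)]
norm_in = math.sqrt(sum(x * x for x in v))
norm_out = math.sqrt(sum(x * x for x in Wv))
```

Because such a matrix preserves vector norms, repeated application neither amplifies nor shrinks signals, which is the intuition behind the stability claim without relying on dataset-dependent normalization.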
