Max and Coincidence Neurons in Neural Networks


Oct 04, 2021

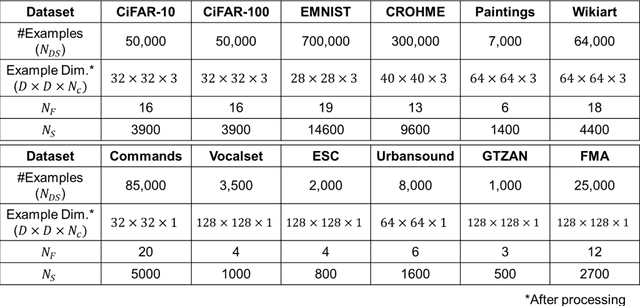

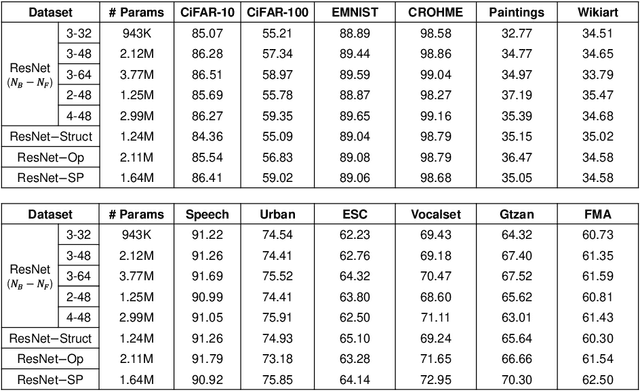

Network design has been a central topic in machine learning. Considerable effort has been devoted to creating efficient architectures, both through manual exploration and through automated neural architecture search. However, today's architectures have yet to consider the diversity of neurons and the existence of neurons with specific processing functions. In this work, we optimize networks containing models of the max and coincidence neurons using neural architecture search, and analyze the structure, operations, and neurons of the optimized networks to develop a signal-processing ResNet. The developed network achieves an average 2% improvement in accuracy and a 25% reduction in network size across a variety of datasets, demonstrating the importance of neuronal functions in creating compact, efficient networks.
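To make the two neuron types more concrete, here is a minimal sketch of one plausible reading of them. The abstract does not give the paper's formulations, so everything here is an assumption for illustration: the function names `max_neuron` and `coincidence_neuron`, the `threshold` parameter, and the choice to model coincidence as a product of rectified inputs.

```python
from typing import Sequence


def max_neuron(inputs: Sequence[float]) -> float:
    """Pass through only the strongest input (assumed max-neuron model)."""
    return max(inputs)


def coincidence_neuron(inputs: Sequence[float], threshold: float = 0.0) -> float:
    """Respond only when all inputs are active together.

    Assumption: coincidence is modeled as the product of inputs rectified
    at `threshold`, so a single inactive input silences the output.
    """
    out = 1.0
    for x in inputs:
        out *= max(x - threshold, 0.0)  # rectify each input before multiplying
    return out


if __name__ == "__main__":
    print(max_neuron([0.2, 0.9, 0.5]))
    print(coincidence_neuron([0.5, 0.5]))
    print(coincidence_neuron([0.5, 0.0]))
```

Under these assumptions, the max neuron selects among its inputs while the coincidence neuron acts like a soft AND gate; the paper's actual models may differ.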
