Kaizhong Deng

Automatic Robotic-Assisted Diffuse Reflectance Spectroscopy Scanning System

Mar 11, 2025

Abstract: Diffuse Reflectance Spectroscopy (DRS) is a well-established optical technique for tissue composition assessment which has been clinically evaluated for tumour detection to ensure the complete removal of cancerous tissue. While point-wise assessment has many potential applications, incorporating automated large-area scanning would enable holistic tissue sampling with higher consistency. We propose a robotic system to facilitate autonomous DRS scanning with hybrid visual servoing control. A specially designed height compensation module enables precise contact condition control. The evaluation results show that the system can accurately execute the scanning command and acquire consistent DRS spectra comparable to those from manual collection, which is the current gold standard protocol. Integrating the proposed system into surgery lays the groundwork for autonomous intra-operative DRS tissue assessment with high reliability and repeatability. This could reduce the need for manual scanning by the surgeon while ensuring complete tumour removal in clinical practice.

Hybrid Deep Reinforcement Learning for Radio Tracer Localisation in Robotic-assisted Radioguided Surgery

Mar 11, 2025

Abstract: Radioguided surgery, such as sentinel lymph node biopsy, relies on the precise localization of radioactive targets by non-imaging gamma/beta detectors. Manual radioactive target detection based on a visual display or audible indication of the gamma level is highly dependent on the ability of the surgeon to track and interpret the spatial information. This paper presents a learning-based method for autonomous radiotracer detection in robot-assisted surgeries by navigating the probe to the radioactive target. We propose a novel hybrid approach that combines deep reinforcement learning (DRL) with adaptive robotic scanning. The adaptive grid-based scanning provides an initial direction estimate, while the DRL-based agent efficiently navigates to the target using historical data. Simulation experiments demonstrate a 95% success rate, as well as improved efficiency and robustness compared to conventional techniques. Real-world evaluation on the da Vinci Research Kit (dVRK) further confirms the feasibility of the approach, achieving an 80% success rate in radiotracer detection. This method has the potential to enhance consistency, reduce operator dependency, and improve procedural accuracy in radioguided surgeries.

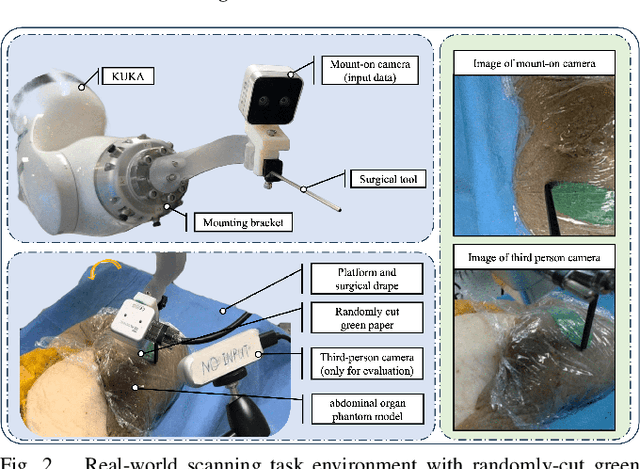

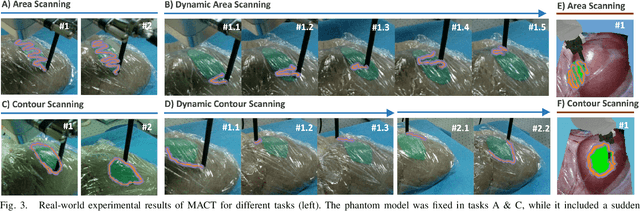

Memorized action chunking with Transformers: Imitation learning for vision-based tissue surface scanning

Nov 06, 2024

Abstract: Optical sensing is an emerging technology used in cancer surgeries to ensure the complete removal of cancerous tissue. While point-wise assessment has many potential applications, incorporating automated large-area scanning would enable holistic tissue sampling. However, such scanning tasks are challenging due to their long-horizon dependency and the requirement for fine-grained motion. To address these issues, we introduce Memorized Action Chunking with Transformers (MACT), an intuitive yet efficient imitation learning method for tissue surface scanning tasks. It uses a sequence of past images as historical information to predict near-future action sequences. In addition, hybrid temporal-spatial positional embeddings are employed to facilitate learning. In various simulation settings, MACT demonstrated significant improvements in contour scanning and area scanning over the baseline model. In real-world testing, with only 50 demonstration trajectories, MACT surpassed the baseline model by achieving a 60-80% success rate on all scanning tasks. Our findings suggest that MACT is a promising model for adaptive scanning in surgical settings.

Deep Imitation Learning for Automated Drop-In Gamma Probe Manipulation

Apr 27, 2023

Abstract: The increasing prevalence of prostate cancer has led to the widespread adoption of Robotic-Assisted Surgery (RAS) as a treatment option. Sentinel lymph node biopsy (SLNB) is a crucial component of prostate cancer surgery and requires accurate diagnostic evidence. This procedure can be improved by using a drop-in gamma probe, the SENSEI system, to distinguish cancerous tissue from normal tissue. However, manual control of the probe using the live gamma level display and audible feedback can be challenging for inexperienced surgeons, leading to potential missed detections. In this study, a deep imitation training workflow is proposed to automate the radioactive node detection procedure. The proposed training workflow uses simulation data to train an end-to-end vision-based gamma probe manipulation agent. The evaluation results show that the proposed approach is capable of predicting the next-step action and holds promise for further improvement and extension to a hardware setup.
