Junzhong Ji

HSENet: Hybrid Spatial Encoding Network for 3D Medical Vision-Language Understanding

Jun 11, 2025

Abstract: Automated 3D CT diagnosis empowers clinicians to make timely, evidence-based decisions by enhancing diagnostic accuracy and workflow efficiency. While multimodal large language models (MLLMs) exhibit promising performance in visual-language understanding, existing methods mainly focus on 2D medical images, which fundamentally limits their ability to capture complex 3D anatomical structures. This limitation often leads to misinterpretation of subtle pathologies and causes diagnostic hallucinations. In this paper, we present Hybrid Spatial Encoding Network (HSENet), a framework that exploits enriched 3D medical visual cues by effective visual perception and projection for accurate and robust vision-language understanding. Specifically, HSENet employs dual-3D vision encoders to perceive both global volumetric contexts and fine-grained anatomical details, which are pre-trained by dual-stage alignment with diagnostic reports. Furthermore, we propose Spatial Packer, an efficient multimodal projector that condenses high-resolution 3D spatial regions into a compact set of informative visual tokens via centroid-based compression. By assigning spatial packers with dual-3D vision encoders, HSENet can seamlessly perceive and transfer hybrid visual representations to LLM's semantic space, facilitating accurate diagnostic text generation. Experimental results demonstrate that our method achieves state-of-the-art performance in 3D language-visual retrieval (39.85% of R@100, +5.96% gain), 3D medical report generation (24.01% of BLEU-4, +8.01% gain), and 3D visual question answering (73.60% of Major Class Accuracy, +1.99% gain), confirming its effectiveness. Our code is available at https://github.com/YanzhaoShi/HSENet.
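The abstract does not spell out how centroid-based compression works, so the following is only a generic illustration of the idea of condensing many tokens into a few centroid tokens. It uses a plain k-means-style loop; the function name `compress_tokens`, the evenly spaced initialization, and the iteration count are all assumptions made for this sketch and are not taken from the HSENet code.

```python
def compress_tokens(tokens, num_centroids, iterations=5):
    """Condense a list of token vectors into `num_centroids` averaged vectors.

    Hypothetical k-means-style sketch; not the paper's Spatial Packer.
    """
    # Assumption: initialize centroids from evenly spaced input tokens.
    step = len(tokens) / num_centroids
    centroids = [list(tokens[int(i * step)]) for i in range(num_centroids)]

    for _ in range(iterations):
        # Assign every token to its nearest centroid (squared Euclidean distance).
        groups = [[] for _ in centroids]
        for tok in tokens:
            nearest = min(
                range(len(centroids)),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(tok, centroids[c])),
            )
            groups[nearest].append(tok)

        # Move each centroid to the mean of its assigned tokens.
        for c, members in enumerate(groups):
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]

    return centroids


# Four 2-D "visual tokens" forming two clear clusters, packed into two tokens.
packed = compress_tokens([[0, 0], [0, 1], [10, 10], [10, 11]], num_centroids=2)
```

In this toy setting the number of tokens passed downstream is fixed by `num_centroids` regardless of how many input tokens there are, which is the property a compact projector needs.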

Brain Effective Connectivity Estimation via Fourier Spatiotemporal Attention

Mar 14, 2025

Abstract: Estimating brain effective connectivity (EC) from functional magnetic resonance imaging (fMRI) data can aid in comprehending the neural mechanisms underlying human behavior and cognition, providing a foundation for disease diagnosis. However, current spatiotemporal attention modules handle temporal and spatial attention separately, extracting temporal and spatial features either sequentially or in parallel. These approaches overlook the inherent spatiotemporal correlations present in real-world fMRI data. Additionally, the presence of noise in fMRI data further limits the performance of existing methods. In this paper, we propose a novel brain effective connectivity estimation method based on Fourier spatiotemporal attention (FSTA-EC), which combines Fourier attention and spatiotemporal attention to simultaneously capture inter-series (spatial) dynamics and intra-series (temporal) dependencies from high-noise fMRI data. Specifically, Fourier attention is designed to convert the high-noise fMRI data to the frequency domain and map the denoised fMRI data back to the physical domain, while spatiotemporal attention is crafted to simultaneously learn spatiotemporal dynamics. Furthermore, through a series of proofs, we demonstrate that incorporating a learnable filter into the fast Fourier transform and inverse fast Fourier transform processes is mathematically equivalent to performing cyclic convolution. The experimental results on simulated and real resting-state fMRI datasets demonstrate that the proposed method exhibits superior performance when compared to state-of-the-art methods.
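The equivalence mentioned in the abstract is an instance of the convolution theorem: multiplying two spectra element-wise and transforming back gives the cyclic convolution of the original sequences. The sketch below checks this numerically on a toy signal. It uses a naive O(n²) DFT instead of an FFT to stay dependency-free, and the function names and the fixed kernel (standing in for a learned filter) are assumptions for illustration, not the FSTA-EC implementation.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (or its inverse) of a sequence."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def filter_in_frequency_domain(signal, kernel):
    """Transform, multiply spectra element-wise, transform back."""
    spectrum = dft(signal)
    filt = dft(kernel)  # plays the role of a learnable frequency-domain filter
    product = [a * b for a, b in zip(spectrum, filt)]
    return [v.real for v in dft(product, inverse=True)]


def cyclic_convolution(signal, kernel):
    """Direct cyclic (circular) convolution in the time domain."""
    n = len(signal)
    return [
        sum(signal[m] * kernel[(t - m) % n] for m in range(n))
        for t in range(n)
    ]


signal = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, 0.25, 0.0, 0.25]  # hypothetical filter values
via_frequency = filter_in_frequency_domain(signal, kernel)
via_time = cyclic_convolution(signal, kernel)
```

The two results agree up to floating-point error. In a trainable model the filter weights would typically be parameterized directly in the frequency domain, which corresponds to a time-domain kernel given by their inverse transform.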

See Detail Say Clear: Towards Brain CT Report Generation via Pathological Clue-driven Representation Learning

Sep 29, 2024

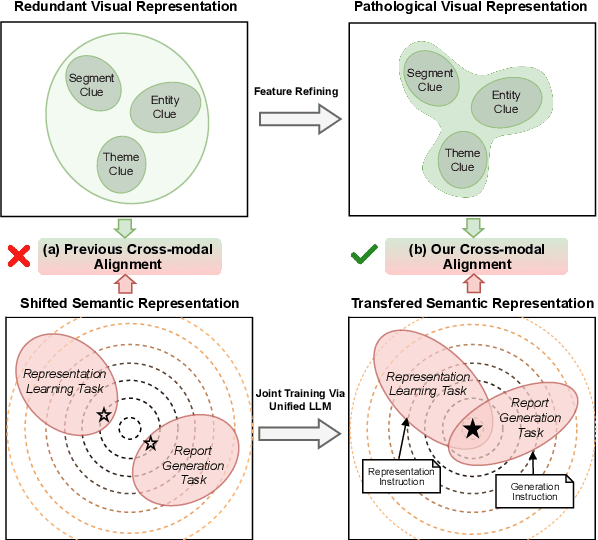

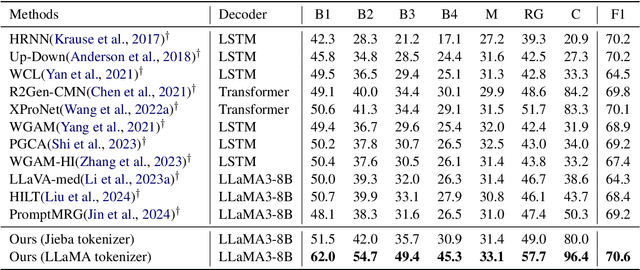

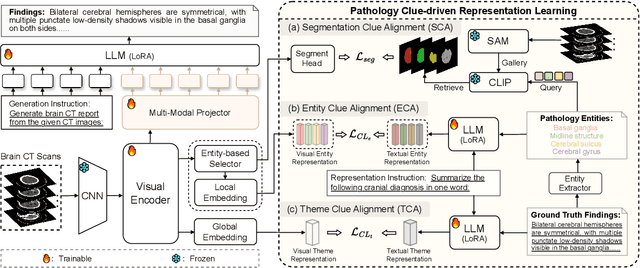

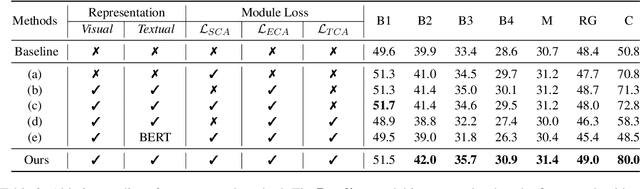

Abstract: Brain CT report generation plays a significant role in aiding physicians in diagnosing cranial diseases. Recent studies concentrate on handling the consistency between visual and textual pathological features to improve the coherence of reports. However, some challenges remain: 1) Redundant visual representing: Massive irrelevant areas in 3D scans distract models from representing salient visual contexts. 2) Shifted semantic representing: Limited medical corpora make it difficult for models to transfer the learned textual representations to generative layers. This study introduces a Pathological Clue-driven Representation Learning (PCRL) model to build cross-modal representations based on pathological clues and naturally adapt them for accurate report generation. Specifically, we construct pathological clues from the perspectives of segmented regions, pathological entities, and report themes, to fully grasp visual pathological patterns and learn cross-modal feature representations. To adapt the representations for the text generation task, we bridge the gap between representation learning and report generation by using a unified large language model (LLM) with task-tailored instructions. These crafted instructions enable the LLM to be flexibly fine-tuned across tasks and smoothly transfer the semantic representation for report generation. Experiments demonstrate that our method outperforms previous methods and achieves SoTA performance. Our code is available at https://github.com/Chauncey-Jheng/PCRL-MRG.

Supervised Contrastive Learning with Structure Inference for Graph Classification

Mar 15, 2022

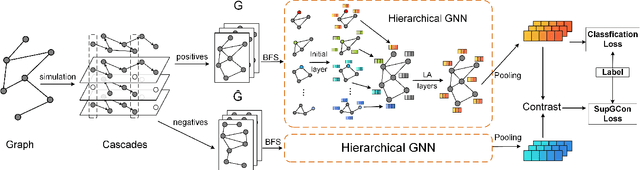

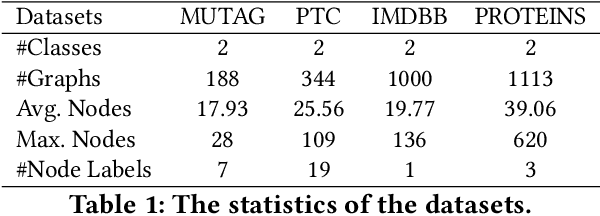

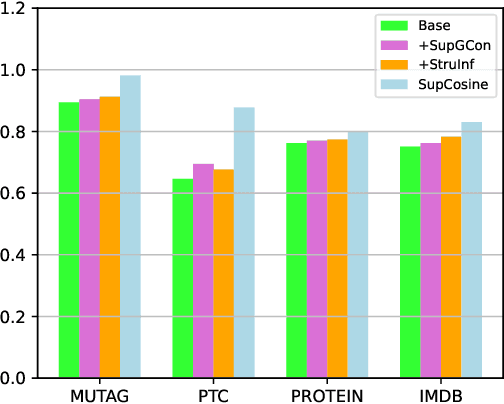

Abstract: Advanced graph neural networks have recently shown great potential in graph classification tasks. Unlike node classification, where node embeddings aggregated from local neighbors can be directly used to learn node labels, graph classification requires a hierarchical accumulation of different levels of topological information to generate discriminative graph embeddings. Still, methods for fully exploring graph structures and formulating an effective graph classification pipeline remain rudimentary. In this paper, we propose a novel graph neural network based on supervised contrastive learning with structure inference for graph classification. First, we propose a data-driven graph augmentation strategy that can discover additional connections to enhance the existing edge set. Concretely, we resort to a structure inference stage based on diffusion cascades to recover possible connections with high node similarities. Second, to improve the contrastive power of graph neural networks, we propose to use a supervised contrastive loss for graph classification. With the integration of label information, the one-vs-many contrastive learning can be extended to a many-vs-many setting, so that the graph-level embeddings with higher topological similarities will be pulled closer. The supervised contrastive loss and structure inference can be naturally incorporated within hierarchical graph neural networks, where the topological patterns can be fully explored to produce discriminative graph embeddings. Experimental results show the effectiveness of the proposed method compared with recent state-of-the-art methods.
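To make the many-vs-many idea concrete, here is a minimal sketch of a supervised contrastive loss over a batch of graph-level embeddings, in which every other sample sharing the anchor's label counts as a positive. It follows the commonly used formulation of this loss; the exact variant, the temperature value, and the function names are assumptions and may differ from what the paper uses.

```python
import math


def normalize(v):
    """Scale a vector to unit length (assumes a nonzero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Average over anchors of the mean negative log-probability of positives."""
    z = [normalize(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        # Positives: all other samples with the same label as the anchor.
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Temperature-scaled cosine similarity to every other sample.
        sims = {
            a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
            for a in range(n)
            if a != i
        }
        # Numerically stable log-sum-exp for the denominator.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


# Toy graph embeddings: two tight clusters.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
aligned = supervised_contrastive_loss(embeddings, [0, 0, 1, 1])
misaligned = supervised_contrastive_loss(embeddings, [0, 1, 0, 1])
```

When labels agree with the cluster structure (`aligned`), the loss is much smaller than when same-label embeddings sit far apart (`misaligned`), which is the pressure that pulls same-class graph embeddings together during training.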

Multi-task Self-distillation for Graph-based Semi-Supervised Learning

Dec 02, 2021

Abstract: Graph convolutional networks have made great progress in graph-based semi-supervised learning. Existing methods mainly assume that nodes connected by graph edges are prone to have similar attributes and labels, so that the features smoothed by local graph structures can reveal the class similarities. However, there often exist mismatches between graph structures and labels in many real-world scenarios, where the structures may propagate misleading features or labels that eventually affect the model performance. In this paper, we propose a multi-task self-distillation framework that injects self-supervised learning and self-distillation into graph convolutional networks to separately address the mismatch problem from the structure side and the label side. First, we formulate a self-supervision pipeline based on pretext tasks to capture different levels of similarities in graphs. The feature extraction process is encouraged to capture more complex proximity by jointly optimizing the pretext task and the target task. Consequently, the local feature aggregations are improved from the structure side. Second, self-distillation uses soft labels of the model itself as additional supervision, which has effects similar to label smoothing. The knowledge from the classification pipeline and the self-supervision pipeline is collectively distilled to improve the generalization ability of the model from the label side. Experimental results show that the proposed method obtains remarkable performance gains under several classic graph convolutional architectures.
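The role of soft labels as additional supervision can be illustrated with a generic distillation objective: a weighted sum of the usual cross-entropy on the hard label and a cross-entropy against temperature-softened predictions from an earlier snapshot of the same model. The weighting `alpha`, the temperature, and all function names below are assumptions for this sketch; the paper's actual multi-task objective is not given in the abstract.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with max-subtraction for stability."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy between a target distribution and a prediction."""
    return -sum(
        t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0
    )


def self_distillation_loss(student_logits, teacher_logits, true_class,
                           alpha=0.5, temperature=2.0):
    """Blend hard-label loss with a soft-label loss from the model's own output.

    `teacher_logits` are assumed to come from an earlier snapshot of the
    same model (hypothetical setup for this sketch).
    """
    hard = [1.0 if i == true_class else 0.0 for i in range(len(student_logits))]
    hard_loss = cross_entropy(hard, softmax(student_logits))
    soft_loss = cross_entropy(
        softmax(teacher_logits, temperature),
        softmax(student_logits, temperature),
    )
    return (1 - alpha) * hard_loss + alpha * soft_loss


# One node with three classes; the true class is 0.
loss = self_distillation_loss([2.0, 0.5, 0.1], [1.8, 0.7, 0.2], true_class=0)
```

Because the softened target spreads some probability mass over non-target classes, the soft term discourages overconfident predictions, which is why its effect resembles label smoothing.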
