Junzhe Xu

TBAC-UniImage: Unified Understanding and Generation by Ladder-Side Diffusion Tuning

Aug 11, 2025

Abstract: This paper introduces TBAC-UniImage, a novel unified model for multimodal understanding and generation. We achieve this by deeply integrating a pre-trained Diffusion Model, acting as a generative ladder, with a Multimodal Large Language Model (MLLM). Previous diffusion-based unified models face two primary limitations. One approach uses only the MLLM's final hidden state as the generative condition. This creates a shallow connection, as the generator is isolated from the rich, hierarchical representations within the MLLM's intermediate layers. The other approach, pretraining a unified generative architecture from scratch, is computationally expensive and prohibitive for many researchers. To overcome these issues, our work explores a new paradigm. Instead of relying on a single output, we use representations from multiple, diverse layers of the MLLM as generative conditions for the diffusion model. This method treats the pre-trained generator as a ladder, receiving guidance from various depths of the MLLM's understanding process. Consequently, TBAC-UniImage achieves a much deeper and more fine-grained unification of understanding and generation.

Simple Semantic-Aided Few-Shot Learning

Nov 30, 2023

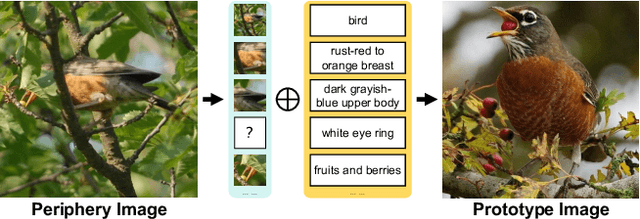

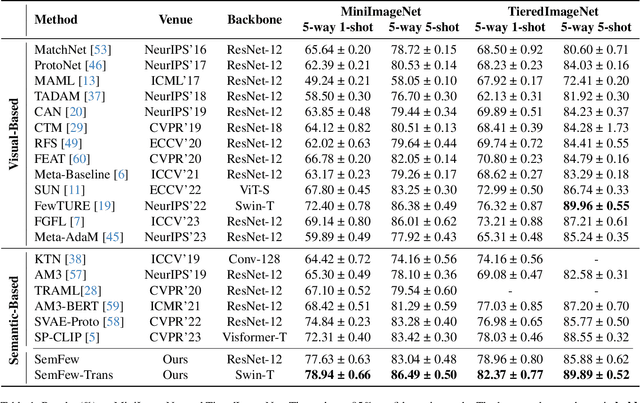

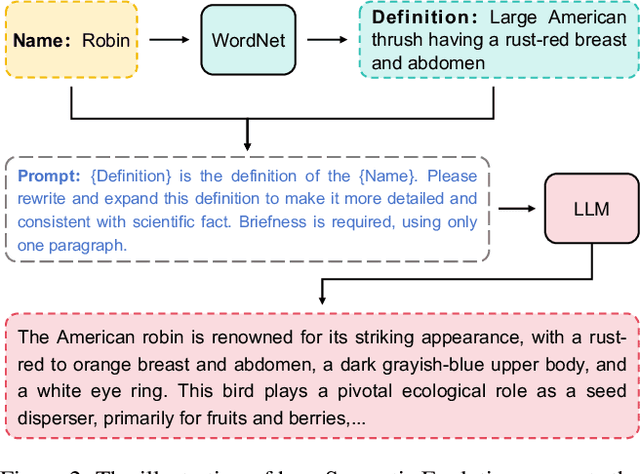

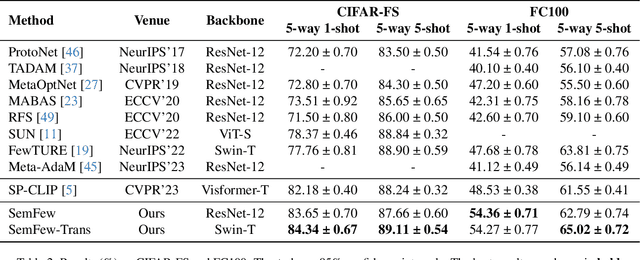

Abstract: Learning from a limited amount of data, namely Few-Shot Learning, stands out as a challenging computer vision task. Several works exploit semantics and design complicated semantic fusion mechanisms to compensate for rare representative features within restricted data. However, relying on naive semantics such as class names introduces biases due to their brevity, while acquiring extensive semantics from external knowledge takes considerable time and effort. This limitation severely constrains the potential of semantics in few-shot learning. In this paper, we design an automatic way called Semantic Evolution to generate high-quality semantics. The incorporation of high-quality semantics alleviates the need for complex network structures and learning algorithms used in previous works. Hence, we employ a simple two-layer network termed Semantic Alignment Network to transform semantics and visual features into robust class prototypes with rich discriminative features for few-shot classification. The experimental results show our framework outperforms all previous methods on five benchmarks, demonstrating that a simple network with high-quality semantics can beat intricate multi-modal modules on few-shot classification tasks.

Attribute Localization and Revision Network for Zero-Shot Learning

Oct 11, 2023

Abstract: Zero-shot learning enables the model to recognize unseen categories with the aid of auxiliary semantic information such as attributes. Current works propose to detect attributes from local image regions and align extracted features with class-level semantics. In this paper, we find that the choice between local and global features is not a zero-sum game: global features can also contribute to the understanding of attributes. In addition, aligning attribute features with class-level semantics ignores potential intra-class attribute variation. To mitigate these disadvantages, we present the Attribute Localization and Revision Network. First, we design an Attribute Localization Module (ALM) to capture both local and global features from image regions; a novel module called the Scale Control Unit is incorporated to fuse global and local representations. Second, we propose an Attribute Revision Module (ARM), which generates image-level semantics by revising the ground-truth value of each attribute, compensating for performance degradation caused by ignoring intra-class variation. Finally, the output of ALM is aligned with the revised semantics produced by ARM to carry out training. Comprehensive experimental results on three widely used benchmarks demonstrate the effectiveness of our model in the zero-shot prediction task.
