Jun Deng

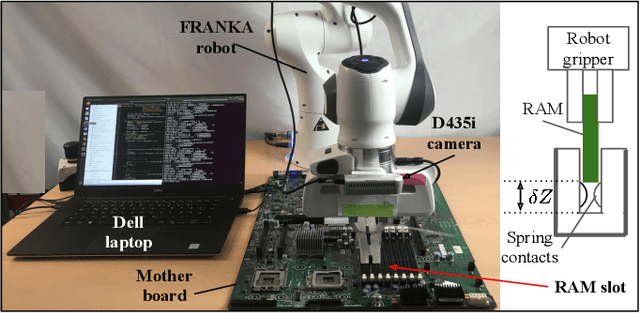

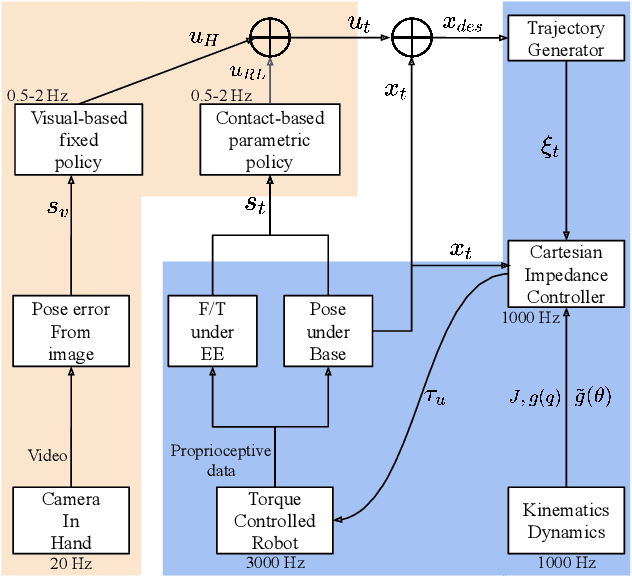

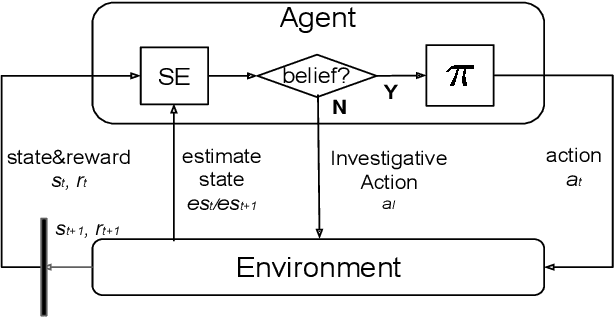

Proactive Action Visual Residual Reinforcement Learning for Contact-Rich Tasks Using a Torque-Controlled Robot

Oct 25, 2020

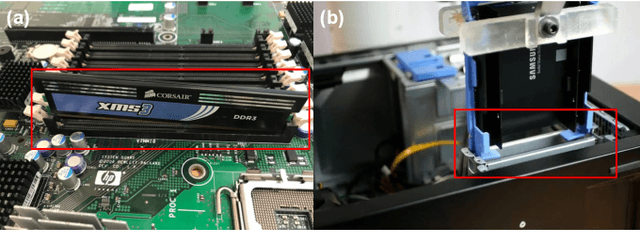

Abstract: Contact-rich manipulation tasks are common in modern manufacturing settings. However, manually designing a robot controller for them is hard with traditional control methods, because the controller must effectively combine modalities with vastly different characteristics. In this paper, we first incorporate operational-space visual and haptic information into reinforcement learning (RL) methods to solve the target uncertainty problem in unstructured environments. Moreover, we propose the novel idea of introducing a proactive action to solve the partially observable Markov decision process problem. With these two ideas combined, our method can both adapt to reasonable variations in unstructured environments and improve the sample efficiency of policy learning. We evaluated our method on a task that involved inserting a random-access memory module using a torque-controlled robot, and we tested the success rates of different baselines based on traditional methods. The results show that our method is robust and tolerates environmental variations well.

Center-of-Mass-based Robust Grasp Planning for Unknown Objects Using Tactile-Visual Sensors

Jun 01, 2020

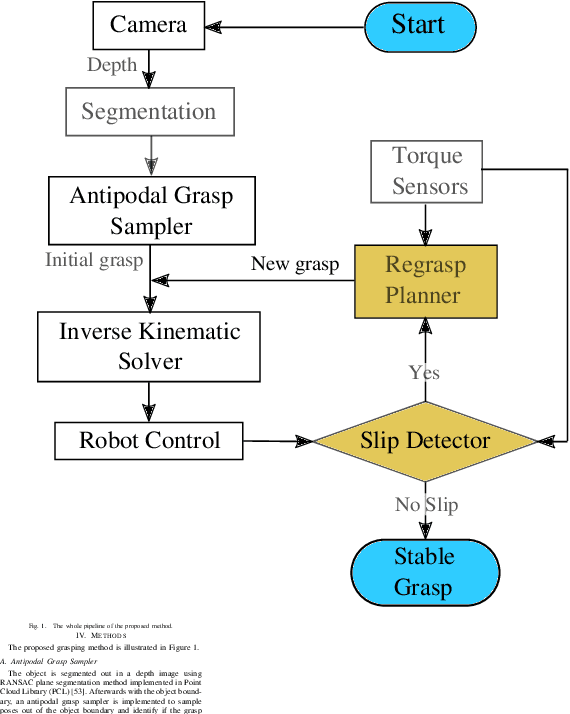

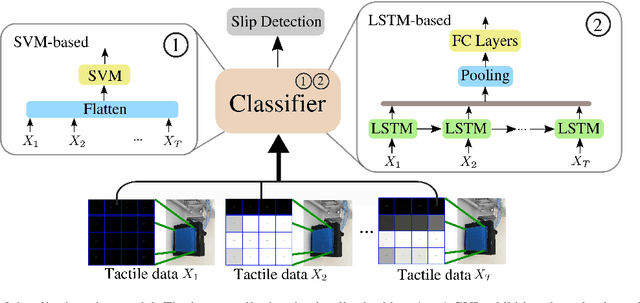

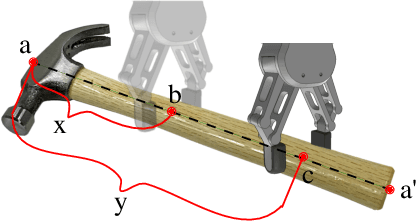

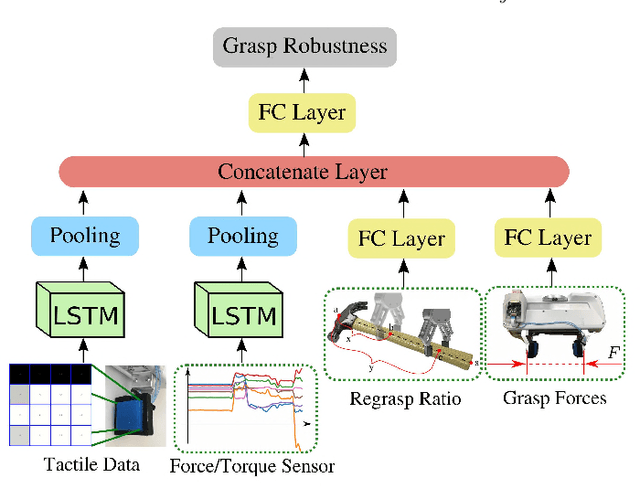

Abstract: An unstable grasp pose can lead to slip, so an unstable grasp pose can be predicted by slip detection. A regrasp is then required to correct the grasp pose and finish the task. In this work, we propose a novel regrasp planner with multi-sensor modules that plans grasp adjustments using feedback from a slip detector. The regrasp planner is trained to estimate the location of the center of mass, which helps the robot find an optimal grasp pose. The dataset in this work consists of 1,025 slip experiments and 1,347 regrasps collected with one pair of tactile sensors, an RGB-D camera, and one Franka Emika robot arm equipped with joint force/torque sensors. We show that our algorithm can successfully detect and classify slip for 5 unknown test objects with an accuracy of 76.88%, and that the regrasp planner increases the grasp success rate by 31.0% compared to the state-of-the-art vision-based grasping algorithm.

* 6 pages + references
