Judith Degen

Expectations over Unspoken Alternatives Predict Pragmatic Inferences

Apr 07, 2023

Abstract: Scalar inferences (SI) are a signature example of how humans interpret language based on unspoken alternatives. While empirical studies have demonstrated that human SI rates are highly variable -- both within instances of a single scale, and across different scales -- there have been few proposals that quantitatively explain both cross- and within-scale variation. Furthermore, while it is generally assumed that SIs arise through reasoning about unspoken alternatives, it remains debated whether humans reason about alternatives as linguistic forms, or at the level of concepts. Here, we test a shared mechanism explaining SI rates within and across scales: context-driven expectations about the unspoken alternatives. Using neural language models to approximate human predictive distributions, we find that SI rates are captured by the expectedness of the strong scalemate as an alternative. Crucially, however, expectedness robustly predicts cross-scale variation only under a meaning-based view of alternatives. Our results suggest that pragmatic inferences arise from context-driven expectations over alternatives, and these expectations operate at the level of concepts.

Harnessing the richness of the linguistic signal in predicting pragmatic inferences

Oct 31, 2019

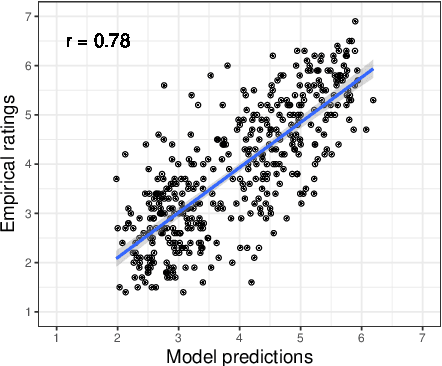

Abstract: The strength of pragmatic inferences systematically depends on linguistic and contextual cues. For example, the presence of a partitive construction increases the strength of a so-called scalar inference: humans perceive the inference that Chris did not eat all of the cookies to be stronger after hearing "Chris ate some of the cookies" than after hearing the same utterance without a partitive, "Chris ate some cookies". In this work, we explore to what extent it is possible to learn associations between linguistic cues and inference strength ratings without direct supervision. We show that an LSTM-based sentence encoder with an attention mechanism trained on a dataset of human inference strength ratings is able to predict ratings with high accuracy (r=0.78). We probe the model's behavior in multiple analyses using corpus data and manually constructed minimal pairs and find that the model learns associations between linguistic cues and scalar inferences, suggesting that these associations are inferable from statistical input.

When redundancy is rational: A Bayesian approach to 'overinformative' referring expressions

Mar 19, 2019

Abstract: Referring is one of the most basic and prevalent uses of language. How do speakers choose from the wealth of referring expressions at their disposal? Rational theories of language use have come under attack for decades for not being able to account for the seemingly irrational overinformativeness ubiquitous in referring expressions. Here we present a novel production model of referring expressions within the Rational Speech Act framework that treats speakers as agents that rationally trade off cost and informativeness of utterances. Crucially, we relax the assumption of deterministic meaning in favor of a graded semantics. This innovation allows us to capture a large number of seemingly disparate phenomena within one unified framework: the basic asymmetry in speakers' propensity to overmodify with color rather than size; the increase in overmodification in complex scenes; the increase in overmodification with atypical features; and the preference for basic level nominal reference. These findings cast a new light on the production of referring expressions: rather than being wastefully overinformative, reference is rationally redundant.
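To make the cost/informativeness trade-off concrete, here is a minimal sketch of a Rational Speech Act speaker with graded word meanings. It is an illustration only, not the paper's implementation: the three-object scene, the fidelity values (0.8 for size words, 0.99 for color words), the rationality parameter `alpha`, the per-word cost, and the exact utility form are all assumptions chosen for the example.

```python
import math

# Hypothetical scene: size alone is enough to single out the target.
OBJECTS = [("small", "blue"), ("big", "blue"), ("big", "red")]
TARGET = ("small", "blue")
UTTERANCES = [("small",), ("blue",), ("small", "blue")]
SIZE_WORDS = {"small", "big"}


def semantic_value(utterance, obj, size_fidelity, color_fidelity):
    """Graded meaning: each word fits an object to a degree in [0, 1]."""
    value = 1.0
    for word in utterance:
        fidelity = size_fidelity if word in SIZE_WORDS else color_fidelity
        value *= fidelity if word in obj else 1.0 - fidelity
    return value


def literal_listener(utterance, size_fidelity, color_fidelity):
    """P(object | utterance), proportional to the graded semantic value."""
    scores = [semantic_value(utterance, o, size_fidelity, color_fidelity)
              for o in OBJECTS]
    total = sum(scores)
    return {o: s / total for o, s in zip(OBJECTS, scores)}


def speaker(target, size_fidelity, color_fidelity, alpha=5.0, cost_per_word=0.1):
    """P(utterance | target): softmax of informativeness minus cost (assumed form)."""
    weights = {}
    for utterance in UTTERANCES:
        p = literal_listener(utterance, size_fidelity, color_fidelity)[target]
        if p == 0.0:
            weights[utterance] = 0.0  # utterance can never pick out the target
            continue
        utility = math.log(p) - cost_per_word * len(utterance)
        weights[utterance] = math.exp(alpha * utility)
    total = sum(weights.values())
    return {u: w / total for u, w in weights.items()}


# Deterministic semantics (fidelity 1.0) versus graded semantics.
deterministic = speaker(TARGET, size_fidelity=1.0, color_fidelity=1.0)
graded = speaker(TARGET, size_fidelity=0.8, color_fidelity=0.99)

for utterance in UTTERANCES:
    print(" ".join(utterance),
          round(deterministic[utterance], 3),
          round(graded[utterance], 3))
```

Under deterministic meanings, "small" already identifies the target, so the extra color word only adds cost and the sketch prefers the shorter utterance. Once size words are assumed to be noisier than color words, the redundant "small blue" raises the literal listener's probability of the target enough to outweigh its cost, which is the intuition behind "rationally redundant" reference.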
