Harnessing the richness of the linguistic signal in predicting pragmatic inferences


Oct 31, 2019

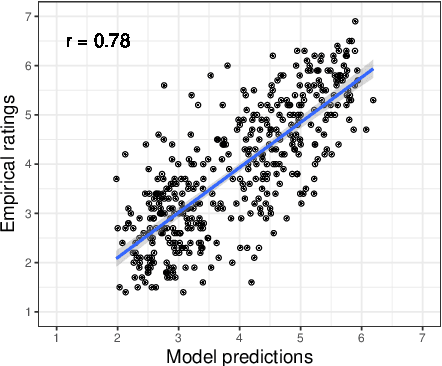

The strength of pragmatic inferences systematically depends on linguistic and contextual cues. For example, the presence of a partitive construction increases the strength of a so-called scalar inference: humans perceive the inference that Chris did not eat all of the cookies to be stronger after hearing "Chris ate some of the cookies" than after hearing the same utterance without a partitive, "Chris ate some cookies". In this work, we explore to what extent it is possible to learn associations between linguistic cues and inference strength ratings without direct supervision. We show that an LSTM-based sentence encoder with an attention mechanism trained on a dataset of human inference strength ratings is able to predict ratings with high accuracy (r=0.78). We probe the model's behavior in multiple analyses using corpus data and manually constructed minimal pairs and find that the model learns associations between linguistic cues and scalar inferences, suggesting that these associations are inferable from statistical input.
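
The reported r=0.78 compares model predictions with human inference strength ratings. As a minimal sketch, assuming r denotes Pearson's correlation coefficient (the abstract does not say so explicitly), such a score could be computed as follows. The function name `pearson_r` and the ratings below are made up for illustration and are not taken from the paper or its code.

```python
from math import sqrt


def pearson_r(predicted, human):
    """Pearson correlation between two equal-length lists of ratings."""
    if len(predicted) != len(human) or len(predicted) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_h = sum(human) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((p - mean_p) * (h - mean_h) for p, h in zip(predicted, human))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_h = sum((h - mean_h) ** 2 for h in human)
    if var_p == 0 or var_h == 0:
        raise ValueError("correlation is undefined for constant ratings")
    return cov / sqrt(var_p * var_h)


# Hypothetical ratings, invented for this example only.
human_ratings = [1.5, 3.0, 4.5, 6.0, 2.0]
model_ratings = [2.0, 3.5, 4.0, 5.5, 2.5]
print(round(pearson_r(model_ratings, human_ratings), 3))
```

A value near 1 means the predictions rise and fall with the human ratings, while a value near 0 means there is no linear relationship between them.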
