Juan Luque

Robust Fair Clustering with Group Membership Uncertainty Sets

Jun 02, 2024

Abstract: We study the canonical fair clustering problem where each cluster is constrained to have close to population-level representation of each group. Despite significant attention, the salient issue of having incomplete knowledge about the group membership of each point has been addressed only superficially. In this paper, we consider a setting where errors exist in the assigned group memberships. We introduce a simple and interpretable family of error models that require a small number of parameters to be given by the decision maker. We then present an algorithm for fair clustering with provable robustness guarantees. Our framework enables the decision maker to trade off between the robustness and the clustering quality. Unlike previous work, our algorithms are backed by worst-case theoretical guarantees. Finally, we empirically verify the performance of our algorithm on real-world datasets and show its superior performance over existing baselines.
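As an illustration of the fairness constraint described in the abstract, the following minimal sketch checks whether each cluster's group fractions stay close to the population-level fractions. It is not the paper's algorithm and does not model membership errors. The function names and the additive tolerance `tol` are assumptions made for this example; the paper may define "close to" differently.

```python
from collections import Counter


def group_fractions(groups):
    """Return the fraction of points belonging to each group."""
    counts = Counter(groups)
    n = len(groups)
    return {g: c / n for g, c in counts.items()}


def fairness_violations(clusters, groups, tol):
    """List (cluster, group, gap) triples whose gap exceeds tol.

    clusters[i] is the cluster id of point i and groups[i] its group label.
    The additive tolerance `tol` is a hypothetical stand-in for the
    paper's notion of "close to population-level representation".
    """
    population = group_fractions(groups)

    # Collect the group labels of the points in each cluster.
    members = {}
    for c, g in zip(clusters, groups):
        members.setdefault(c, []).append(g)

    violations = []
    for c, gs in members.items():
        local = group_fractions(gs)
        for g, p in population.items():
            gap = abs(local.get(g, 0.0) - p)
            if gap > tol:
                violations.append((c, g, gap))
    return violations
```

With two clusters that each hold one point from groups `"a"` and `"b"`, the function reports no violations; if each cluster holds only one group, every (cluster, group) pair is flagged.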

Insta-RS: Instance-wise Randomized Smoothing for Improved Robustness and Accuracy

Mar 21, 2021

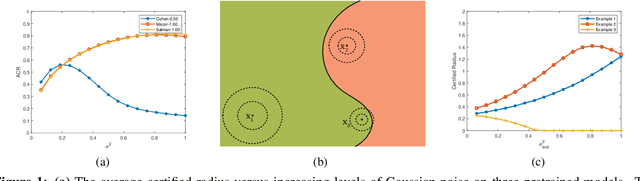

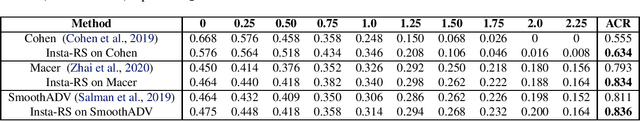

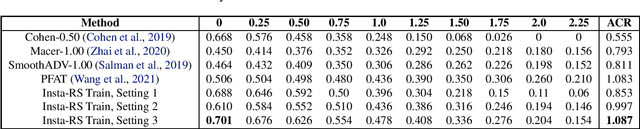

Abstract: Randomized smoothing (RS) is an effective and scalable technique for constructing neural network classifiers that are certifiably robust to adversarial perturbations. Most RS works focus on training a good base model that boosts the certified robustness of the smoothed model. However, existing RS techniques treat every data point the same, i.e., the variance of the Gaussian noise used to form the smoothed model is preset and universal for all training and test data. This preset and universal Gaussian noise variance is suboptimal since different data points have different margins and the local properties of the base model vary across the input examples. In this paper, we examine the impact of customized handling of examples and propose Instance-wise Randomized Smoothing (Insta-RS) -- a multiple-start search algorithm that assigns customized Gaussian variances to test examples. We also design Insta-RS Train -- a novel two-stage training algorithm that adaptively adjusts and customizes the noise level of each training example for training a base model that boosts the certified robustness of the instance-wise Gaussian smoothed model. Through extensive experiments on CIFAR-10 and ImageNet, we show that our method significantly enhances the average certified radius (ACR) as well as the clean data accuracy compared to existing state-of-the-art provably robust classifiers.
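To make the idea of instance-wise noise levels concrete, here is a minimal sketch that picks, for a single example, the Gaussian standard deviation giving the largest radius. It uses the standard randomized-smoothing radius `sigma * Phi^{-1}(p_a)` (the binary-case simplification) and a plain search over a fixed candidate set, not the multiple-start search the abstract describes. The names `certified_radius` and `pick_sigma` and the input dictionary are assumptions for this example, and the `p_a` values are taken as given rather than as statistically valid lower bounds, so the result is not a true certificate.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal, used for the inverse CDF


def certified_radius(p_a, sigma):
    """L2 radius sigma * Phi^{-1}(p_a) for top-class probability p_a.

    Returns 0.0 when p_a <= 0.5, where the smoothed classifier would
    abstain. p_a is clamped just below 1 to keep the inverse CDF finite.
    """
    if p_a <= 0.5:
        return 0.0
    p_a = min(p_a, 1.0 - 1e-9)
    return sigma * _STD_NORMAL.inv_cdf(p_a)


def pick_sigma(p_a_by_sigma):
    """Pick the candidate sigma with the largest radius for one example.

    p_a_by_sigma maps each candidate sigma to the (hypothetical) estimate
    of the top-class probability under noise of that scale.
    """
    best_sigma, best_radius = None, 0.0
    for sigma, p_a in p_a_by_sigma.items():
        radius = certified_radius(p_a, sigma)
        if radius > best_radius:
            best_sigma, best_radius = sigma, radius
    return best_sigma, best_radius
```

For instance, `pick_sigma({0.25: 0.99, 0.5: 0.9, 1.0: 0.6})` selects `0.5`: the smallest noise level keeps the prediction stable but scales the radius down, while the largest erodes the top-class probability.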
