Juan C. Vidal

Improving Cardiac Risk Prediction Using Data Generation Techniques

Dec 19, 2025

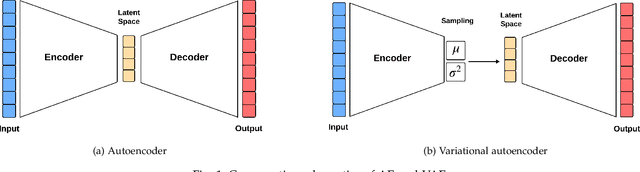

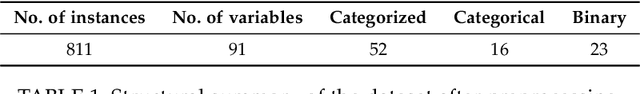

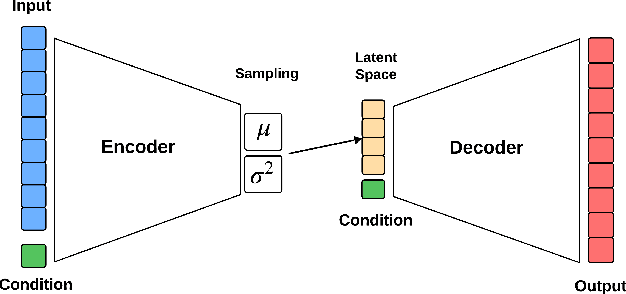

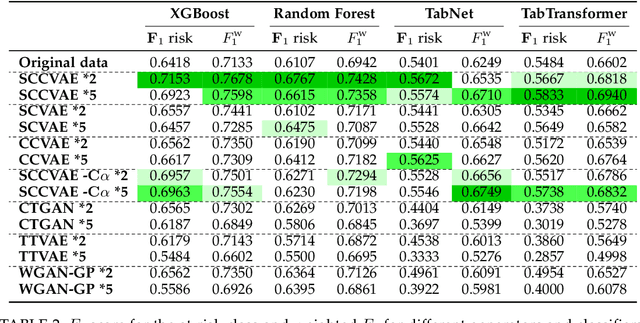

Abstract: Cardiac rehabilitation constitutes a structured clinical process involving multiple interdependent phases, individualized medical decisions, and the coordinated participation of diverse healthcare professionals. This sequential and adaptive nature enables the program to be modeled as a business process, thereby facilitating its analysis. Nevertheless, studies in this context face significant limitations inherent to real-world medical databases: data are often scarce due to both economic costs and the time required for collection; many existing records are not suitable for specific analytical purposes; and, finally, there is a high prevalence of missing values, as not all patients undergo the same diagnostic tests. To address these limitations, this work proposes an architecture based on a Conditional Variational Autoencoder (CVAE) for the synthesis of realistic clinical records that are coherent with real-world observations. The primary objective is to increase the size and diversity of the available datasets in order to enhance the performance of cardiac risk prediction models and to reduce the need for potentially hazardous diagnostic procedures, such as exercise stress testing. The results demonstrate that the proposed architecture is capable of generating coherent and realistic synthetic data, whose use improves the accuracy of the various classifiers employed for cardiac risk detection, outperforming state-of-the-art deep learning approaches for synthetic data generation.

ROC Analysis with Covariate Adjustment Using Neural Network Models: Evaluating the Role of Age in the Physical Activity-Mortality Association

Oct 16, 2025

Abstract: The receiver operating characteristic (ROC) curve and its summary measure, the Area Under the Curve (AUC), are well-established tools for evaluating the efficacy of biomarkers in biomedical studies. Compared to the traditional ROC curve, the covariate-adjusted ROC curve allows for individual evaluation of the biomarker. However, the use of machine learning models has rarely been explored in this context, despite their potential to develop more powerful and sophisticated approaches for biomarker evaluation. The goal of this paper is to propose a framework for neural network-based covariate-adjusted ROC modeling that allows flexible and nonlinear evaluation of the effectiveness of a biomarker to discriminate between two reference populations. The finite-sample performance of our method is investigated through extensive simulation tests under varying dependency structures between biomarkers, covariates, and reference populations. The methodology is further illustrated in a clinical case study that assesses daily physical activity, measured as total activity time (TAC), a proxy for daily step count, as a biomarker to predict mortality at three, five and eight years. Analyses stratified by sex and adjusted for age and BMI reveal distinct covariate effects on mortality outcomes. These results underscore the importance of covariate-adjusted modeling in biomarker evaluation and highlight TAC's potential as a functional capacity biomarker based on specific individual characteristics.
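
As background for the AUC mentioned in this abstract, the following is a minimal sketch of the standard unadjusted empirical AUC (the Mann-Whitney estimate). It is not the paper's neural network-based covariate-adjusted method; the function name `empirical_auc` and the toy marker values are assumptions made for illustration only.

```python
def empirical_auc(diseased, healthy):
    """Mann-Whitney estimate of the AUC.

    Estimates P(marker in diseased > marker in healthy),
    counting ties as one half. Hypothetical helper, not from the paper.
    """
    wins = 0.0
    for d in diseased:
        for h in healthy:
            if d > h:
                wins += 1.0
            elif d == h:
                wins += 0.5
    return wins / (len(diseased) * len(healthy))


# Toy marker values (made up for illustration).
auc_separated = empirical_auc([3.0, 4.0], [1.0, 2.0])
auc_identical = empirical_auc([1.0, 2.0], [1.0, 2.0])
```

A covariate-adjusted analysis would, roughly speaking, evaluate this kind of discrimination conditional on covariates such as age rather than pooling all subjects.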

Deep Learning Framework with Uncertainty Quantification for Survey Data: Assessing and Predicting Diabetes Mellitus Risk in the American Population

Mar 28, 2024

Abstract: Complex survey designs are commonly employed in many medical cohorts. In such scenarios, developing case-specific predictive risk score models that reflect the unique characteristics of the study design is essential. This approach is key to minimizing potential selection biases in results. The objectives of this paper are: (i) To propose a general predictive framework for regression and classification using neural network (NN) modeling, which incorporates survey weights into the estimation process; (ii) To introduce an uncertainty quantification algorithm for model prediction, tailored for data from complex survey designs; (iii) To apply this method in developing robust risk score models to assess the risk of Diabetes Mellitus in the US population, utilizing data from the NHANES 2011-2014 cohort. The theoretical properties of our estimators are designed to ensure minimal bias and statistical consistency, thereby ensuring that our models yield reliable predictions and contribute novel scientific insights in diabetes research. While focused on diabetes, this NN predictive framework is adaptable to create clinical models for a diverse range of diseases and medical cohorts. The software and the data used in this paper are publicly available on GitHub.
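
One common way to incorporate survey weights into estimation is to weight each observation's loss by its sampling weight. The sketch below shows a weighted binary cross-entropy under that assumption; the abstract does not specify the paper's exact loss, and the name `weighted_log_loss` and its parameters are hypothetical.

```python
import math


def weighted_log_loss(labels, probs, weights, eps=1e-12):
    """Survey-weighted binary cross-entropy (illustrative assumption).

    Each observation's loss is multiplied by its survey weight and the
    sum is normalized by the total weight.
    """
    total = 0.0
    for y, p, w in zip(labels, probs, weights):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += w * -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / sum(weights)


# Toy example: the first respondent represents three times as many people.
loss = weighted_log_loss([1, 0], [0.8, 0.8], [3.0, 1.0])
```

With equal weights this reduces to the ordinary average log loss, so the weights only change the result when the sample is not representative of the population.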

kNN Algorithm for Conditional Mean and Variance Estimation with Automated Uncertainty Quantification and Variable Selection

Feb 02, 2024

Abstract: In this paper, we introduce a kNN-based regression method that combines the scalability and adaptability of traditional non-parametric kNN models with a novel variable selection technique. This method focuses on accurately estimating the conditional mean and variance of random response variables, thereby effectively characterizing conditional distributions across diverse scenarios. Our approach incorporates a robust uncertainty quantification mechanism, leveraging our prior estimation work on conditional mean and variance. The use of kNN ensures scalable computational efficiency in predicting intervals and statistical accuracy in line with optimal non-parametric rates. Additionally, we introduce a new kNN semi-parametric algorithm for estimating ROC curves, accounting for covariates. For selecting the smoothing parameter k, we propose an algorithm with theoretical guarantees. Incorporation of variable selection enhances the performance of the method significantly over conventional kNN techniques in various modeling tasks. We validate the approach through simulations in low, moderate, and high-dimensional covariate spaces. The algorithm's effectiveness is particularly notable in biomedical applications as demonstrated in two case studies. Concluding with a theoretical analysis, we highlight the consistency and convergence rate of our method over traditional kNN models, particularly when the underlying regression model takes values in a low-dimensional space.
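
To make the idea of kNN estimation of a conditional mean and variance concrete, here is a minimal sketch for a single covariate. It shows only the plain kNN plug-in estimates; the paper's variable selection, choice of k, and uncertainty quantification are not reproduced, and the name `knn_mean_variance` is hypothetical.

```python
def knn_mean_variance(x_train, y_train, x0, k):
    """Plain kNN estimates of E[Y | X = x0] and Var[Y | X = x0].

    Uses the k training points whose (one-dimensional) covariate is
    closest to x0. Illustrative helper, not the paper's algorithm.
    """
    order = sorted(range(len(x_train)), key=lambda i: abs(x_train[i] - x0))
    neighbors = [y_train[i] for i in order[:k]]
    mean = sum(neighbors) / k
    variance = sum((y - mean) ** 2 for y in neighbors) / k
    return mean, variance


# Toy data (made up): the far-away point at x = 10 is ignored for k = 3.
mean, variance = knn_mean_variance([0.0, 1.0, 2.0, 10.0],
                                   [1.0, 3.0, 5.0, 100.0], x0=1.0, k=3)
```

Under a working assumption such as approximate normality, a mean and variance pair of this kind could be turned into a rough prediction interval; how the paper constructs its intervals is not stated in the abstract.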

Prompting LLMs with content plans to enhance the summarization of scientific articles

Dec 15, 2023

Abstract: This paper presents novel prompting techniques to improve the performance of automatic summarization systems for scientific articles. Scientific article summarization is highly challenging due to the length and complexity of these documents. We conceive, implement, and evaluate prompting techniques that provide additional contextual information to guide summarization systems. Specifically, we feed summarizers with lists of key terms extracted from articles, such as author keywords or automatically generated keywords. Our techniques are tested with various summarization models and input texts. Results show performance gains, especially for smaller models summarizing sections separately. This shows that prompting is a promising approach to overcoming the limitations of less powerful systems. Our findings introduce a new research direction of using prompts to aid smaller models.

Encoder-Decoder Model for Suffix Prediction in Predictive Monitoring

Nov 29, 2022

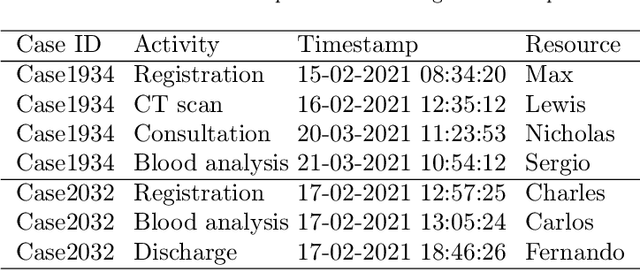

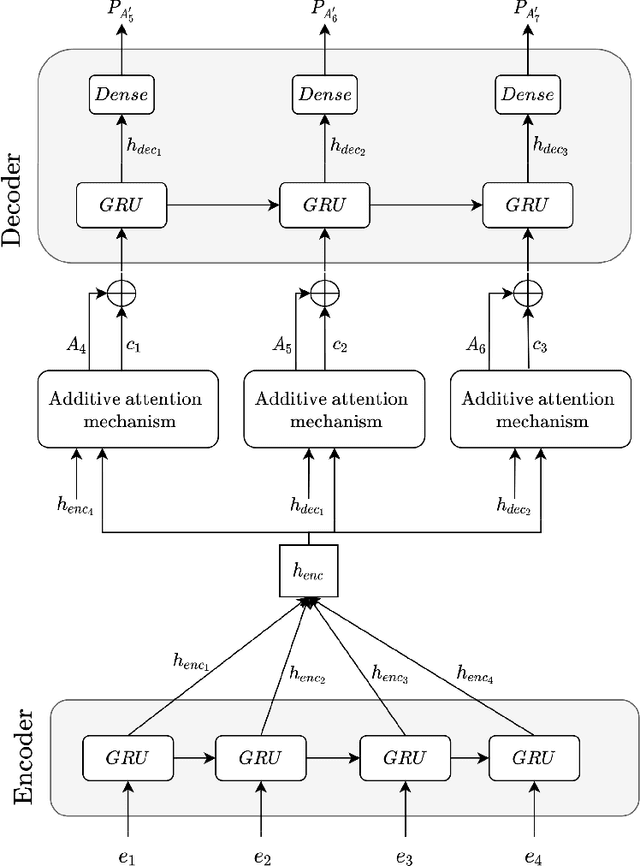

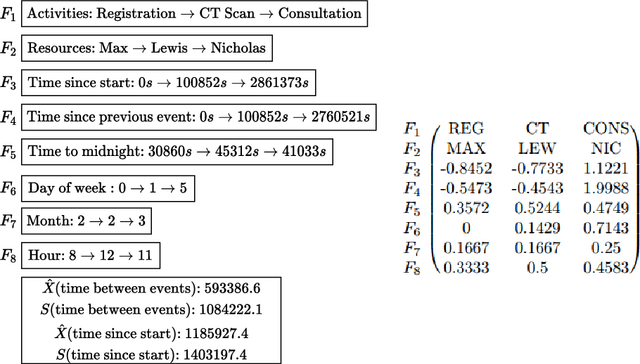

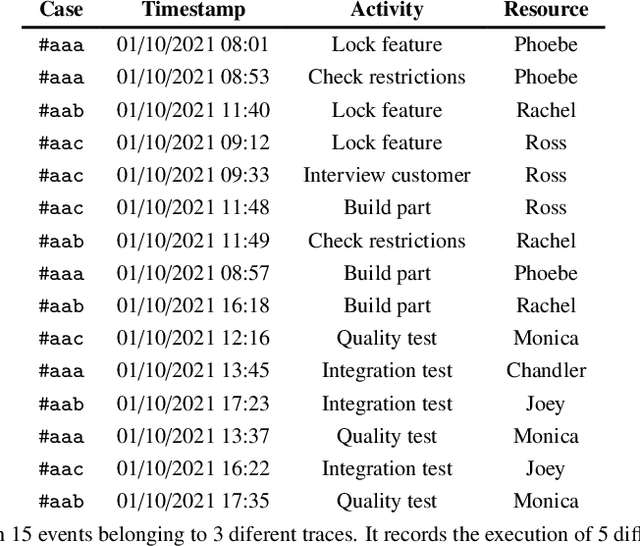

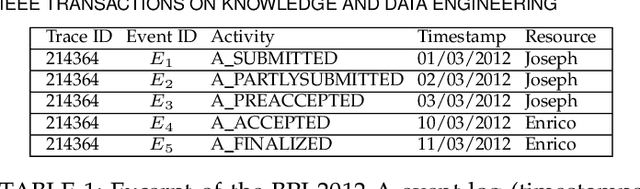

Abstract: Predictive monitoring is a subfield of process mining that aims to predict how a running case will unfold in the future. One of its main challenges is forecasting the sequence of activities that will occur from a given point in time, a task known as suffix prediction. Most approaches to the suffix prediction problem learn to predict the suffix by learning how to predict the next activity only, not learning from the whole suffix during the training phase. This paper proposes a novel architecture based on an encoder-decoder model with an attention mechanism that decouples the representation learning of the prefixes from the inference phase, predicting only the activities of the suffix. During the inference phase, this architecture is extended with a heuristic search algorithm that improves the selection of the activity for each index of the suffix. Our approach has been tested using 12 public event logs against 6 different state-of-the-art proposals, showing that it significantly outperforms these proposals.

Gradual Drift Detection in Process Models Using Conformance Metrics

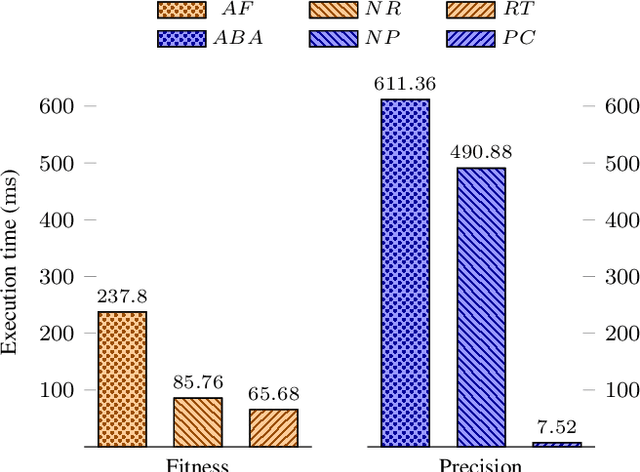

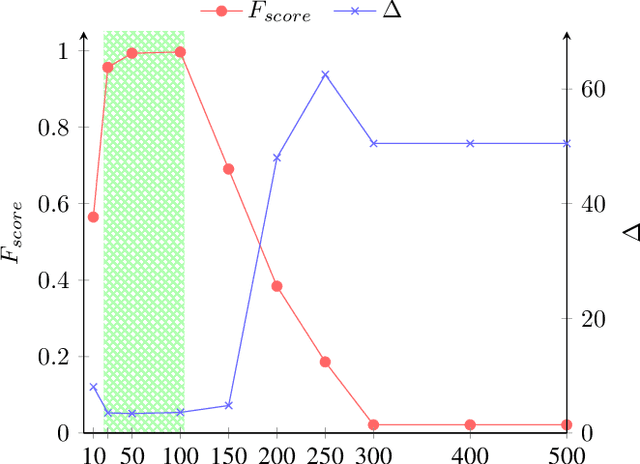

Jul 22, 2022

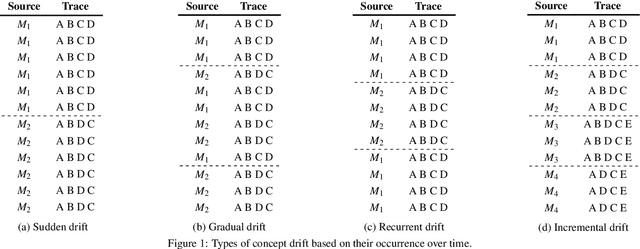

Abstract: Changes, planned or unexpected, are common during the execution of real-life processes. Detecting these changes is a must for optimizing the performance of organizations running such processes. Most state-of-the-art algorithms focus on the detection of sudden changes, leaving aside other types of changes. In this paper, we focus on the automatic detection of gradual drifts, a special type of change in which the cases of two models overlap during a period of time. The proposed algorithm relies on conformance checking metrics to detect the changes automatically, and it also classifies them fully automatically as sudden or gradual. The approach has been validated with a synthetic dataset consisting of 120 logs with different distributions of changes, achieving better results in terms of detection and classification accuracy, delay, and change region overlapping than the main state-of-the-art algorithms.

Embedding Graph Convolutional Networks in Recurrent Neural Networks for Predictive Monitoring

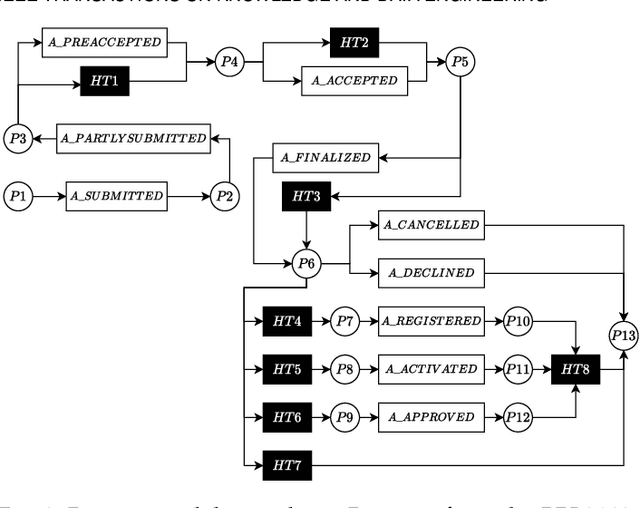

Dec 23, 2021

Abstract: Predictive monitoring of business processes is a subfield of process mining that aims to predict, among other things, the characteristics of the next event or the sequence of next events. Although multiple approaches based on deep learning have been proposed, mainly recurrent neural networks and convolutional neural networks, none of them truly exploits the structural information available in process models. This paper proposes an approach based on graph convolutional networks and recurrent neural networks that uses information directly from the process model. An experimental evaluation on real-life event logs shows that our approach is more consistent and outperforms the current state-of-the-art approaches.

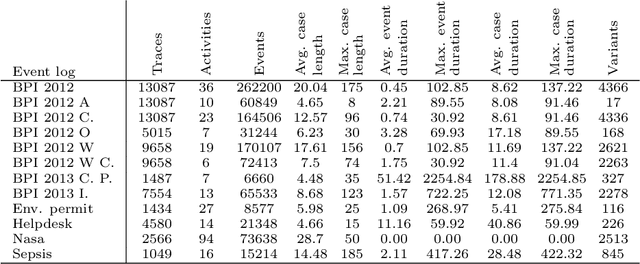

Deep Learning for Predictive Business Process Monitoring: Review and Benchmark

Sep 24, 2020

Abstract: Predictive monitoring of business processes is concerned with the prediction of ongoing cases on a business process. Lately, the popularity of deep learning techniques has led to an ever-growing set of predictive monitoring approaches based on these techniques. However, the high disparity of process logs and experimental setups used to evaluate these approaches makes it especially difficult to make a fair comparison. Furthermore, it also complicates the selection of the most suitable approach to solve a specific problem. In this paper, we provide a systematic literature review of approaches that use deep learning to tackle the predictive monitoring tasks. In addition, we perform an exhaustive experimental evaluation of 10 different approaches over 12 publicly available process logs.

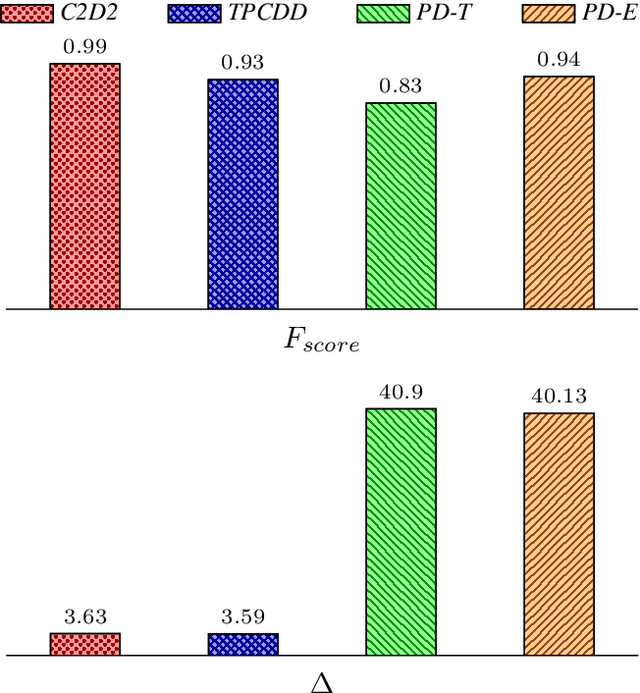

A Conformance Checking-based Approach for Drift Detection in Business Processes

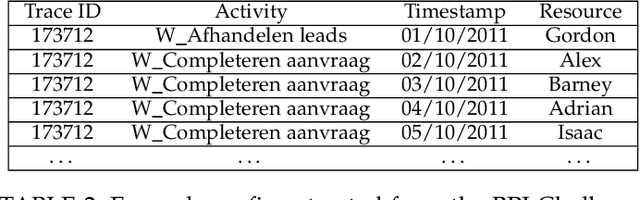

Jul 09, 2019

Abstract: Real-life business processes change over time, in both planned and unexpected ways. The detection of these changes is crucial for organizations to ensure that the expected and the real behavior are as similar as possible. These changes over time are called concept drift, and their detection is a big challenge in process mining, since the inherent complexity of the data makes it difficult to distinguish between a change and an anomalous execution. In this paper, we present C2D2 (Conformance Checking-based Drift Detection), a new approach to detect sudden control-flow changes in the process models from event traces. C2D2 combines discovery techniques with conformance checking methods to perform an offline detection. Our approach has been validated with a synthetic benchmarking dataset formed by 68 logs, showing an improvement in the accuracy while maintaining a minimum delay in the drift detection.
