Joshua S. Bloom

AstroCompress: A benchmark dataset for multi-purpose compression of astronomical data

Jun 10, 2025

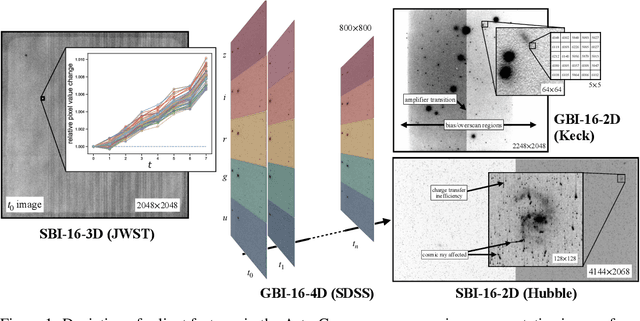

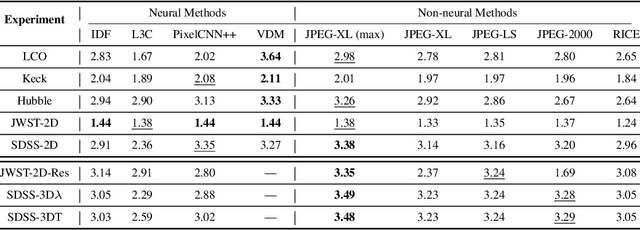

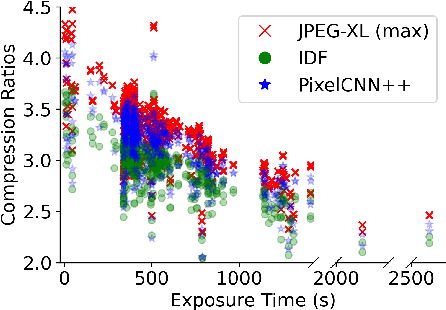

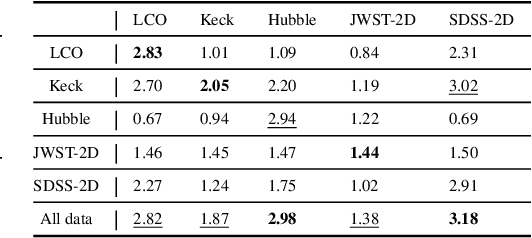

Abstract: The site conditions that make astronomical observatories in space and on the ground so desirable -- cold and dark -- demand a physical remoteness that leads to limited data transmission capabilities. Such transmission limitations directly bottleneck the amount of data acquired, and in an era of costly modern observatories, any improvement in lossless data compression has the potential to scale to billions of dollars' worth of additional science that can be accomplished on the same instrument. Traditional lossless methods for compressing astrophysical data are manually designed. Neural data compression, on the other hand, holds the promise of learning compression algorithms end-to-end from data and outperforming classical techniques by leveraging the unique spatial, temporal, and wavelength structures of astronomical images. This paper introduces AstroCompress: a neural compression challenge for astrophysics data, featuring four new datasets (and one legacy dataset) with 16-bit unsigned integer imaging data in various modes: space-based, ground-based, multi-wavelength, and time-series imaging. We provide code to easily access the data and benchmark seven lossless compression methods (three neural and four non-neural, including all practical state-of-the-art algorithms). Our results on lossless compression indicate that lossless neural compression techniques can enhance data collection at observatories, and provide guidance on the adoption of neural compression in scientific applications. Though the scope of this paper is restricted to lossless compression, we also comment on the potential exploration of lossy compression methods in future studies.
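As a rough illustration of what a lossless benchmark on 16-bit unsigned integer imaging measures, the sketch below computes a compression ratio and checks an exact round trip. It is not the AstroCompress code: the synthetic frame, the helper names `make_synthetic_frame` and `compression_ratio`, and the use of general-purpose `zlib` as the codec are all assumptions made for illustration.

```python
import random
import zlib
from array import array


def make_synthetic_frame(width=64, height=64, seed=0):
    """Hypothetical stand-in for a detector frame: flat sky level plus small noise."""
    rng = random.Random(seed)
    pixels = array("H")  # "H" stores unsigned 16-bit integers
    for _ in range(width * height):
        value = 1000 + int(rng.gauss(0, 5))
        pixels.append(max(0, min(65535, value)))  # clip to the uint16 range
    return pixels


def compression_ratio(pixels, level=9):
    """Compress with zlib, decompress, and return (ratio, restored pixels)."""
    raw = pixels.tobytes()
    packed = zlib.compress(raw, level)
    restored = array("H")
    restored.frombytes(zlib.decompress(packed))
    return len(raw) / len(packed), restored


frame = make_synthetic_frame()
ratio, restored = compression_ratio(frame)
assert restored == frame  # lossless: every pixel value is recovered exactly
print(f"compression ratio: {ratio:.2f}")
```

The ratio (raw bytes divided by compressed bytes) is the quantity a lossless benchmark compares across methods; the round-trip assertion is what distinguishes lossless from lossy compression.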

AstroM$^3$: A self-supervised multimodal model for astronomy

Nov 13, 2024

Abstract: While machine-learned models are now routinely employed to facilitate astronomical inquiry, model inputs tend to be limited to a primary data source (namely images or time series) and, in the more advanced approaches, some metadata. Yet with the growing use of wide-field, multiplexed observational resources, individual sources of interest often have a broad range of observational modes available. Here we construct an astronomical multimodal dataset and propose AstroM$^3$, a self-supervised pre-training approach that enables a model to learn from multiple modalities simultaneously. Specifically, we extend the CLIP (Contrastive Language-Image Pretraining) model to a trimodal setting, allowing the integration of time-series photometry data, spectra, and astrophysical metadata. In a fine-tuning supervised setting, our results demonstrate that CLIP pre-training improves classification performance for time-series photometry, where accuracy increases from 84.6% to 91.5%. Furthermore, CLIP boosts classification accuracy by up to 12.6% when the availability of labeled data is limited, showing the effectiveness of leveraging larger corpora of unlabeled data. In addition to fine-tuned classification, we can use the trained model in other downstream tasks that are not explicitly contemplated during the construction of the self-supervised model. In particular, we show the efficacy of using the learned embeddings for misclassification identification, similarity search, and anomaly detection. One surprising highlight is the "rediscovery" of Mira subtypes and two Rotational variable subclasses using manifold learning and dimension reduction algorithms. To our knowledge this is the first construction of an $n>2$ mode model in astronomy. Extensions to $n>3$ modes are naturally anticipated with this approach.
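One plausible way to extend a CLIP-style objective to three modalities is to sum the symmetric contrastive loss over each pair of modalities. The sketch below assumes that pairwise formulation; the function names, the temperature value, and the toy embeddings are hypothetical and are not taken from the AstroM$^3$ implementation.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def mean_diagonal_cross_entropy(logits):
    """Cross-entropy where row i should assign the highest score to column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)
        log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_loss(batch_a, batch_b, temperature=0.07):
    """Symmetric contrastive loss between two batches of paired embeddings."""
    a = [normalize(v) for v in batch_a]
    b = [normalize(v) for v in batch_b]
    logits = [[sum(x * y for x, y in zip(va, vb)) / temperature for vb in b]
              for va in a]
    transposed = [list(column) for column in zip(*logits)]
    return 0.5 * (mean_diagonal_cross_entropy(logits)
                  + mean_diagonal_cross_entropy(transposed))


def trimodal_loss(photometry, spectra, metadata):
    """Assumed trimodal objective: sum of the three pairwise CLIP losses."""
    return (clip_loss(photometry, spectra)
            + clip_loss(spectra, metadata)
            + clip_loss(photometry, metadata))


# Toy embeddings: three sources, each with one embedding per modality.
aligned = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
shuffled = aligned[1:] + aligned[:1]  # spectra paired with the wrong sources

loss_aligned = trimodal_loss(aligned, aligned, aligned)
loss_shuffled = trimodal_loss(aligned, shuffled, aligned)
print(loss_aligned < loss_shuffled)
```

The loss is small when embeddings of the same source agree across modalities and grows when one modality is paired with the wrong sources, which is the signal that drives the pre-training.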

nbi: the Astronomer's Package for Neural Posterior Estimation

Dec 21, 2023

Abstract: Despite the promise of Neural Posterior Estimation (NPE) methods in astronomy, the adaptation of NPE into the routine inference workflow has been slow. We identify three critical issues: the need for custom featurizer networks tailored to the observed data, the inference inexactness, and the under-specification of physical forward models. To address the first two issues, we introduce a new framework and open-source software nbi (Neural Bayesian Inference), which supports both amortized and sequential NPE. First, nbi provides built-in "featurizer" networks with demonstrated efficacy on sequential data, such as light curves and spectra, thus obviating the need for this customization on the user end. Second, we introduce a modified algorithm SNPE-IS, which facilitates asymptotically exact inference by using the surrogate posterior under NPE only as a proposal distribution for importance sampling. These features allow nbi to be applied off-the-shelf to astronomical inference problems involving light curves and spectra. We discuss how nbi may serve as an effective alternative to existing methods such as Nested Sampling. Our package is at https://github.com/kmzzhang/nbi.
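The idea of using a surrogate posterior only as a proposal for importance sampling can be sketched in one dimension. This is a generic self-normalized importance sampling example, not the SNPE-IS algorithm or the nbi API: the Gaussian target, the deliberately imperfect Gaussian surrogate, and all function names are assumptions chosen for illustration.

```python
import math
import random


def log_normal_pdf(x, mean, std):
    """Log density of a normal distribution."""
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def log_target(theta):
    """Hypothetical unnormalized log posterior (log prior + log likelihood)."""
    return -0.5 * ((theta - 1.0) / 0.5) ** 2


def importance_sample(n=20000, seed=0):
    """Draw from a surrogate posterior and reweight toward the true posterior."""
    rng = random.Random(seed)
    q_mean, q_std = 0.8, 0.7  # assumed imperfect surrogate, wider than the target
    samples = [rng.gauss(q_mean, q_std) for _ in range(n)]
    log_w = [log_target(t) - log_normal_pdf(t, q_mean, q_std) for t in samples]
    shift = max(log_w)  # subtract the maximum for numerical stability
    weights = [math.exp(lw - shift) for lw in log_w]
    total = sum(weights)
    weights = [w / total for w in weights]
    posterior_mean = sum(w * t for w, t in zip(weights, samples))
    effective_sample_size = 1.0 / sum(w * w for w in weights)
    return posterior_mean, effective_sample_size


posterior_mean, ess = importance_sample()
print(f"posterior mean ~ {posterior_mean:.3f}, effective sample size ~ {ess:.0f}")
```

The surrogate is centered at 0.8, yet the reweighted estimate converges on the target mean of 1.0 as the number of samples grows. The effective sample size indicates how well the surrogate matches the target: a poor proposal concentrates weight on a few samples.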

Stellar Spectra Fitting with Amortized Neural Posterior Estimation and nbi

Dec 09, 2023

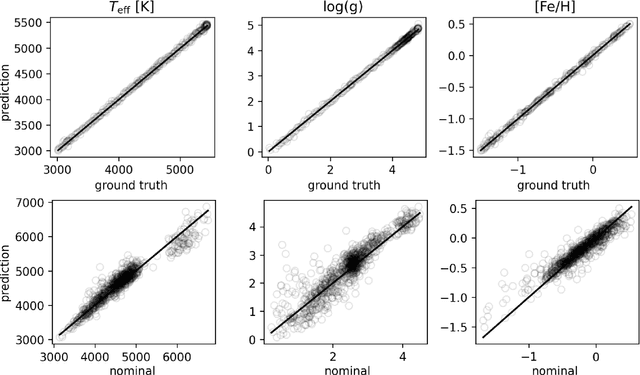

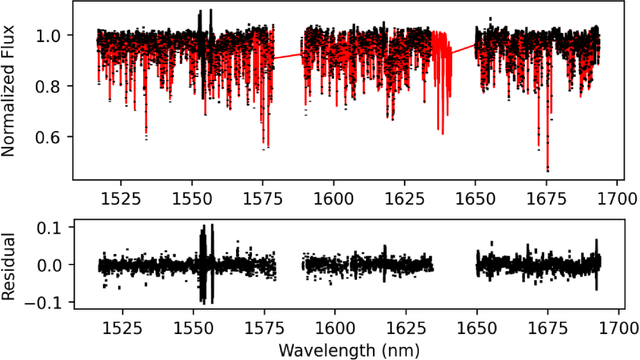

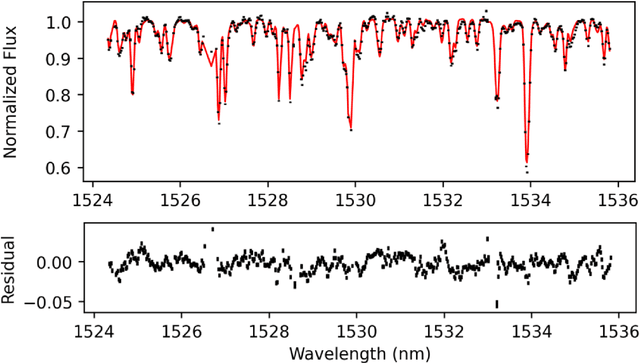

Abstract: Modern surveys often deliver hundreds of thousands of stellar spectra at once, which are fit to spectral models to derive stellar parameters/labels. Therefore, the technique of Amortized Neural Posterior Estimation (ANPE) stands out as a suitable approach, which enables inference for a large number of targets at sub-linear/constant computational cost. Leveraging our new nbi software package, we train an ANPE model for the APOGEE survey and demonstrate its efficacy on both mock and real APOGEE stellar spectra. Unique to the nbi package is its out-of-the-box functionality on astronomical inverse problems with sequential data. As such, we have been able to acquire the trained model with minimal effort. We introduce an effective approach to handling the measurement noise properties inherent in spectral data, which utilizes the actual uncertainties in the observed data. This allows training data to resemble observed data, an aspect that is crucial for ANPE applications. Given the association of spectral data properties with the observing instrument, we discuss the utility of an ANPE "model zoo," where models are trained for specific instruments and distributed under the nbi framework to facilitate real-time stellar parameter inference.

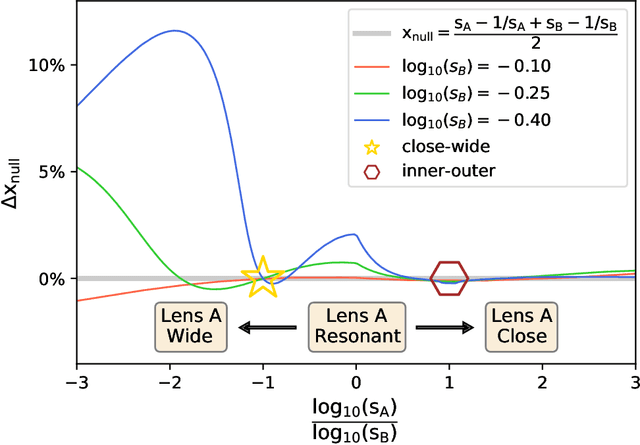

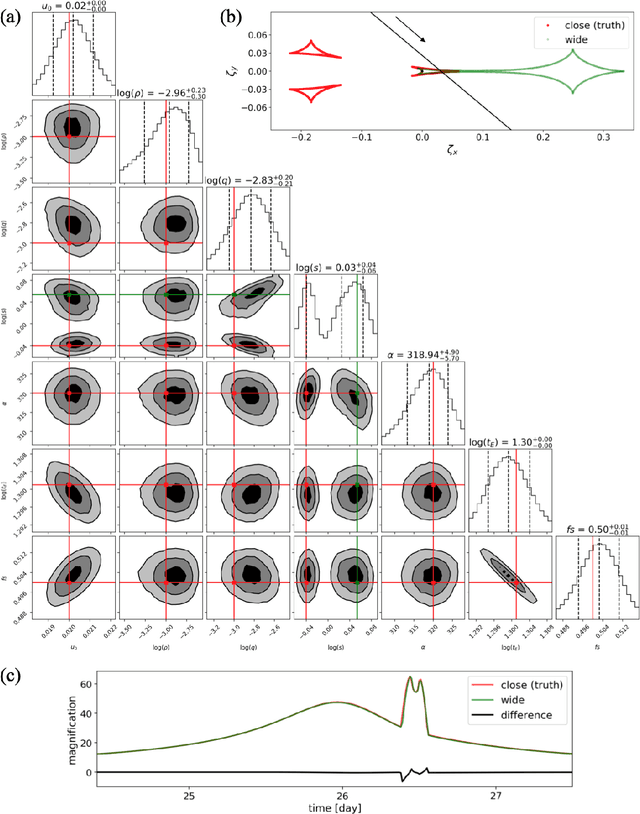

A Ubiquitous Unifying Degeneracy in 2-body Microlensing Systems

Nov 26, 2021

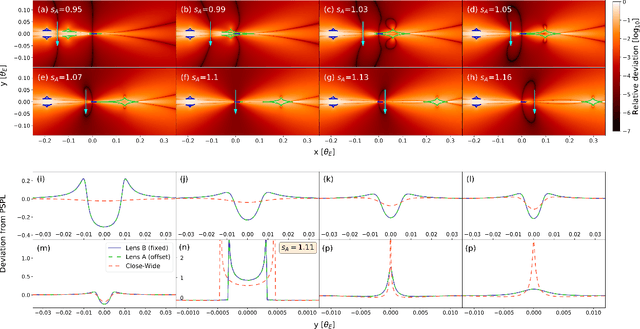

Abstract: While gravitational microlensing by planetary systems can provide unique vistas on the properties of exoplanets, observations of such 2-body microlensing events can often be explained with multiple and distinct physical configurations, so-called model degeneracies. An understanding of the intrinsic and exogenous origins of different classes of degeneracy provides a foundation for phenomenological interpretation. Here, leveraging a fast machine-learning based inference framework, we present the discovery of a new regime of degeneracy--the offset degeneracy--which unifies the previously known close-wide and inner-outer degeneracies, generalises to resonant caustics, and upon reanalysis, is ubiquitous in previously published planetary events with 2-fold degenerate solutions. Importantly, our discovery suggests that the commonly reported close-wide degeneracy essentially never arises in actual events and should, instead, be more suitably viewed as a transition point of the offset degeneracy. While previous studies of microlensing degeneracies are largely studies of degenerate caustics, our discovery demonstrates that degenerate caustics do not necessarily result in degenerate events; for the latter, it is more relevant to study magnifications at the location of the source. This discovery fundamentally changes the way in which degeneracies in planetary microlensing events should be interpreted, suggests a deeper symmetry in the mathematics of 2-body lenses than has previously been recognised, and will increasingly manifest itself in data from new generations of microlensing surveys.

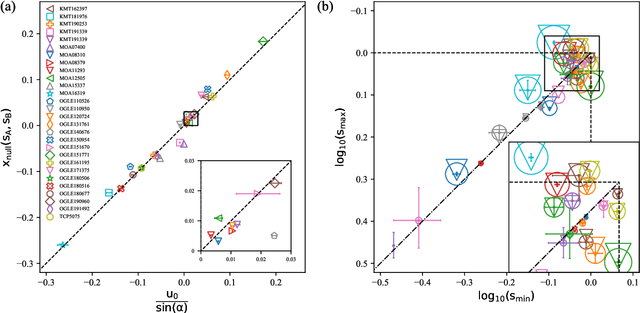

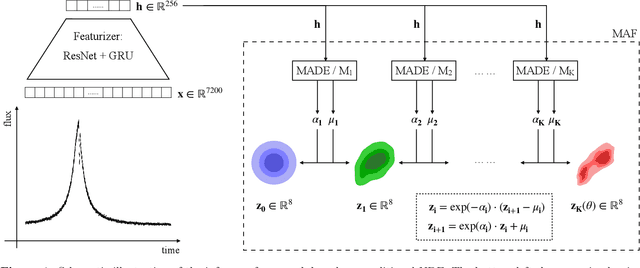

Real-Time Likelihood-Free Inference of Roman Binary Microlensing Events with Amortized Neural Posterior Estimation

Feb 17, 2021

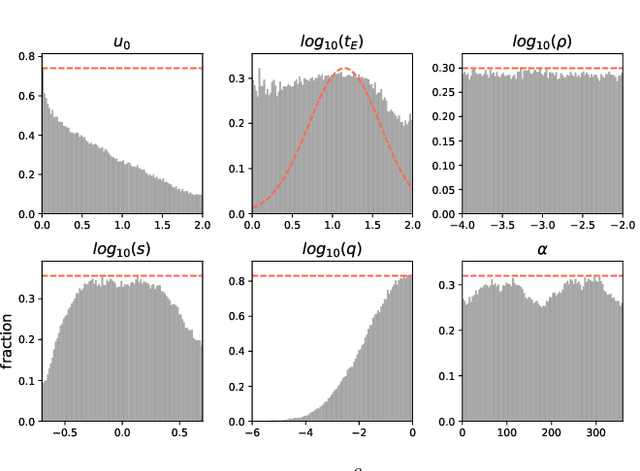

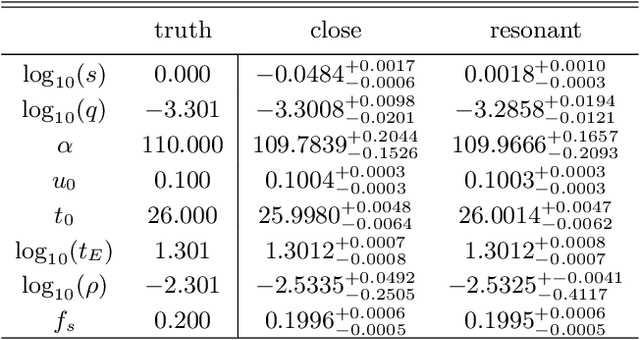

Abstract: Fast and automated inference of binary-lens, single-source (2L1S) microlensing events with sampling-based Bayesian algorithms (e.g., Markov Chain Monte Carlo; MCMC) is challenged on two fronts: high computational cost of likelihood evaluations with microlensing simulation codes, and a pathological parameter space where the negative-log-likelihood surface can contain a multitude of local minima that are narrow and deep. Analysis of 2L1S events usually involves grid searches over some parameters to locate approximate solutions as a prerequisite to posterior sampling, an expensive process that often requires human-in-the-loop domain expertise. As the next-generation, space-based microlensing survey with the Roman Space Telescope is expected to yield thousands of binary microlensing events, a new fast and automated method is desirable. Here, we present a likelihood-free inference (LFI) approach named amortized neural posterior estimation, where a neural density estimator (NDE) learns a surrogate posterior $\hat{p}(\theta|x)$ as an observation-parametrized conditional probability distribution, from pre-computed simulations over the full prior space. Trained on 291,012 Roman-like 2L1S simulations, the NDE produces accurate and precise posteriors within seconds for any observation within the prior support without requiring a domain expert in the loop, thus allowing for real-time and automated inference. We show that the NDE also captures expected posterior degeneracies. The NDE posterior could then be refined into the exact posterior with a downstream MCMC sampler with minimal burn-in steps.

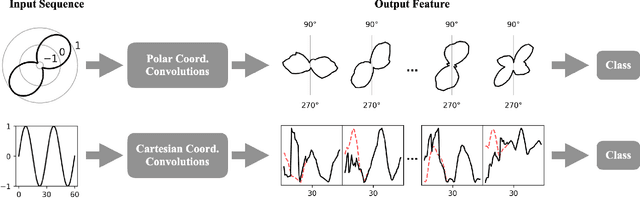

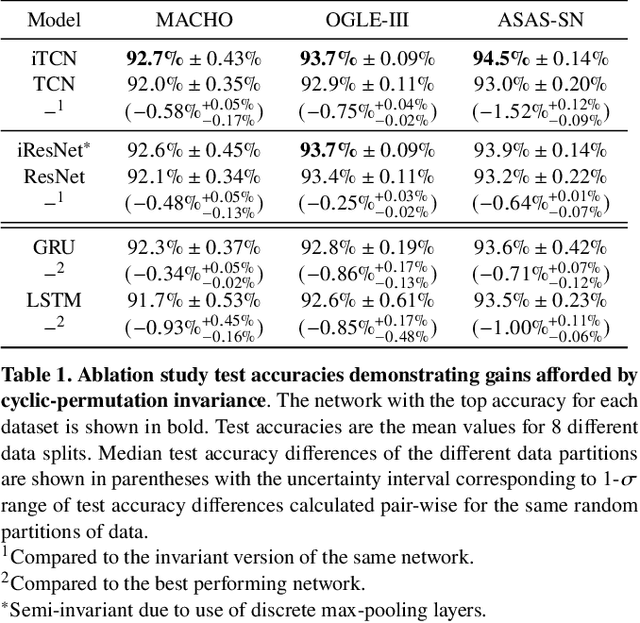

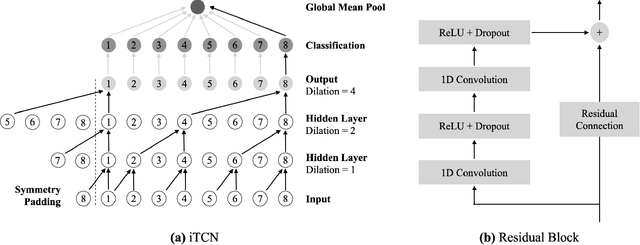

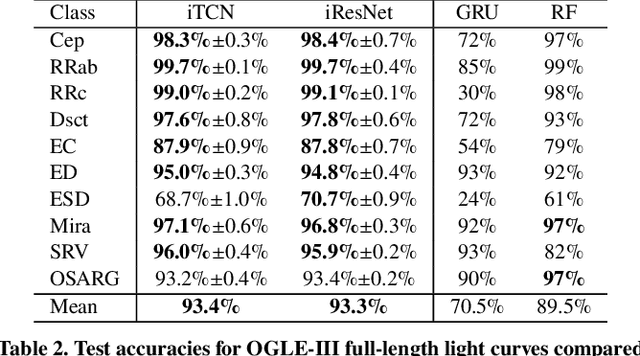

Classification of Periodic Variable Stars with Novel Cyclic-Permutation Invariant Neural Networks

Nov 02, 2020

Abstract: Neural networks (NNs) have been shown to be competitive against state-of-the-art feature engineering and random forest (RF) classification of periodic variable stars. Although previous work utilising NNs has made use of periodicity by period folding multiple-cycle time-series into a single cycle---from time-space to phase-space---no approach to date has taken advantage of the fact that network predictions should be invariant to the initial phase of the period-folded sequence. Initial phase is exogenous to the physical origin of the variability and should thus be factored out. Here, we present cyclic-permutation invariant networks, a novel class of NNs for which invariance to phase shifts is guaranteed through polar coordinate convolutions, which we implement by means of "Symmetry Padding." Across three different datasets of variable star light curves, we show that two implementations of the cyclic-permutation invariant network, the iTCN and the iResNet, consistently outperform non-invariant baselines and reduce overall error rates by between 4% and 22%. Over a 10-class OGLE-III sample, the iTCN/iResNet achieves an average per-class accuracy of 93.4%/93.3%, compared to RNN/RF accuracies of 70.5%/89.5% in a recent study using the same data. Finding improvement on a non-astronomy benchmark, we suggest that the methodology introduced here should also be applicable to a wide range of science domains where periodic data abounds due to physical symmetries.
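The invariance property can be demonstrated with a minimal sketch: a convolution that wraps around the ends of a period-folded sequence, followed by a global pooling step, gives the same output for any initial phase. The single-layer setup, the wrap-around indexing used as a stand-in for the padding scheme, and the function names are assumptions for illustration; this is not the iTCN or iResNet architecture.

```python
def circular_conv1d(signal, kernel):
    """1-D convolution where indices wrap around, treating the sequence as a circle."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
            for i in range(n)]


def invariant_feature(signal, kernel):
    """Global max pooling over a circular convolution removes the phase dependence."""
    return max(circular_conv1d(signal, kernel))


def cyclic_shift(signal, steps):
    """Rotate the sequence, which mimics choosing a different initial phase."""
    steps %= len(signal)
    return signal[steps:] + signal[:steps]


# Toy period-folded light curve and a hypothetical filter.
light_curve = [0.1, 0.5, 1.2, 0.9, 0.3, -0.4, -1.0, -0.6]
kernel = [0.5, -1.0, 0.25]

reference = invariant_feature(light_curve, kernel)
shifted = [invariant_feature(cyclic_shift(light_curve, s), kernel)
           for s in range(len(light_curve))]
print(all(abs(value - reference) < 1e-12 for value in shifted))
```

A cyclic shift of the input only rotates the output of the circular convolution, so any pooling operation that ignores position (max, mean) yields a feature that does not depend on the initial phase.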

Automating Inference of Binary Microlensing Events with Neural Density Estimation

Oct 08, 2020

Abstract: Automated inference of binary microlensing events with traditional sampling-based algorithms such as MCMC has been hampered by the slowness of the physical forward model and the pathological likelihood surface. Current analysis of such events requires both expert knowledge and large-scale grid searches to locate the approximate solution as a prerequisite to MCMC posterior sampling. As the next-generation, space-based microlensing survey with the Roman Space Observatory is expected to yield thousands of binary microlensing events, a new scalable and automated approach is desired. Here, we present an automated inference method based on neural density estimation (NDE). We show that the NDE trained on simulated Roman data not only produces fast, accurate, and precise posteriors but also captures expected posterior degeneracies. A hybrid NDE-MCMC framework can further be applied to produce the exact posterior.

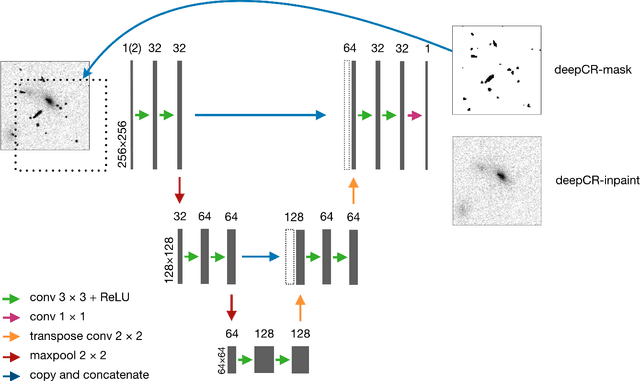

deepCR: Cosmic Ray Rejection with Deep Learning

Aug 29, 2019

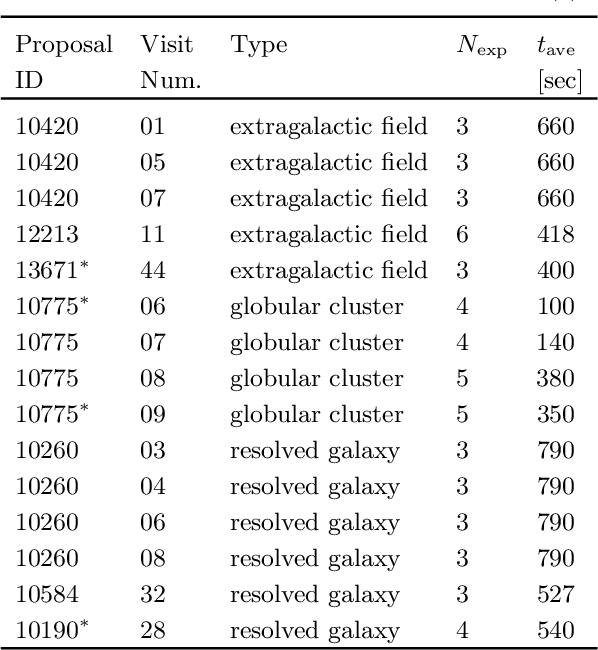

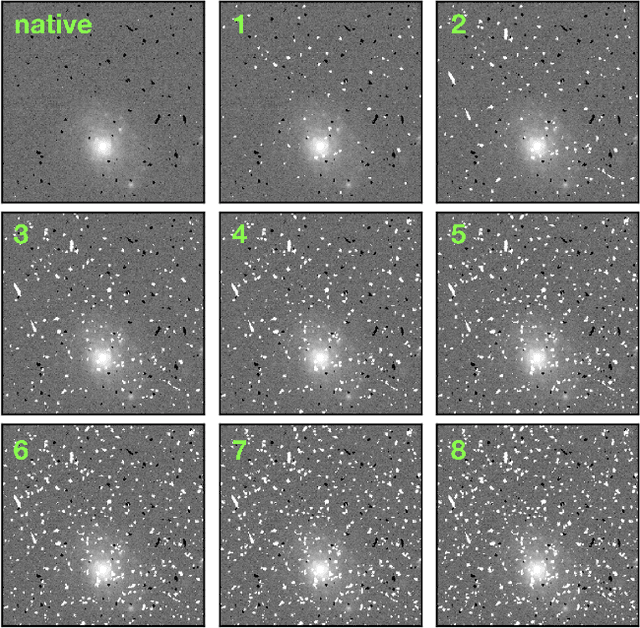

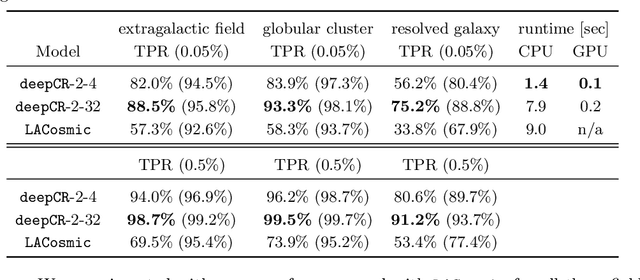

Abstract: Cosmic ray (CR) identification and replacement are critical components of imaging and spectroscopic reduction pipelines involving solid-state detectors. We present deepCR, a deep-learning-based framework for CR identification and subsequent image inpainting based on the predicted CR mask. To demonstrate the effectiveness of this framework, we train and evaluate models on Hubble Space Telescope ACS/WFC images of sparse extragalactic fields, globular clusters, and resolved galaxies. We demonstrate that at a false positive rate of 0.5%, deepCR achieves close to 100% detection rates in both extragalactic and globular cluster fields, and 91% in resolved galaxy fields, which is a significant improvement over the current state-of-the-art method LACosmic. Compared to a multicore CPU implementation of LACosmic, deepCR CR mask predictions run up to 6.5 times faster on CPU and 90 times faster on a single GPU. For image inpainting, the mean squared errors of deepCR predictions are 20 times lower in globular cluster fields, 5 times lower in resolved galaxy fields, and 2.5 times lower in extragalactic fields, compared to the best performing non-neural technique tested. We present our framework and the trained models as an open-source Python project, with a simple-to-use API. To facilitate reproducibility of the results we also provide a benchmarking codebase.
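The "detection rate at a fixed false positive rate" metric quoted above can be computed from per-pixel scores and ground-truth labels. The sketch below shows one way to do so; it does not use the deepCR API, and the function name, the thresholding rule, and the toy scores are assumptions for illustration.

```python
def detection_rate_at_fpr(scores, labels, max_fpr):
    """Return (detection rate, threshold) with the false positive rate capped at max_fpr.

    scores: per-pixel CR probabilities; labels: 1 for true CR pixels, 0 otherwise.
    A pixel is flagged as a CR when its score is strictly above the threshold.
    """
    negatives = sorted((s for s, y in zip(scores, labels) if y == 0), reverse=True)
    positives = [s for s, y in zip(scores, labels) if y == 1]
    allowed = int(max_fpr * len(negatives))  # number of false positives tolerated
    # Setting the threshold at the (allowed + 1)-th highest clean-pixel score
    # guarantees that at most `allowed` clean pixels exceed it.
    threshold = negatives[allowed] if allowed < len(negatives) else float("-inf")
    detected = sum(1 for s in positives if s > threshold)
    return detected / len(positives), threshold


# Toy example: four clean pixels followed by four CR pixels.
scores = [0.1, 0.2, 0.3, 0.9, 0.8, 0.95, 0.4, 0.25]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

rate, threshold = detection_rate_at_fpr(scores, labels, max_fpr=0.25)
print(rate, threshold)
```

Here one of the four clean pixels is allowed to be flagged, which places the threshold at 0.3, and three of the four CR pixels score above it.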
