Joseph Chang

Virtual Avatar Stream: a cost-down approach to the Metaverse experience

Apr 04, 2023

Abstract: The Metaverse through VR headsets is a rapidly growing concept, but the high cost of entry currently limits access for many users. This project aims to provide an accessible entry point to the immersive Metaverse experience by leveraging web technologies. The platform allows users to engage with rendered avatars using only a web browser, microphone, and webcam. Using WebGL and Google's MediaPipe face-tracking model, the application generates real-time 3D face meshes for users. It uses a client-to-client streaming cluster to establish a connection, and clients negotiate the SRTP protocol through WebRTC for direct data streaming. Additionally, the project addresses backend challenges through an architecture that is serverless, distributed, auto-scaling, highly resilient, and secure. The platform offers a scalable, hardware-free solution for users to experience a near-immersive Metaverse, with the potential for future integration with game server clusters. This project is a step toward a more inclusive Metaverse accessible to a wider audience.

A Deep Learning Approach to Unsupervised Ensemble Learning

Feb 06, 2016

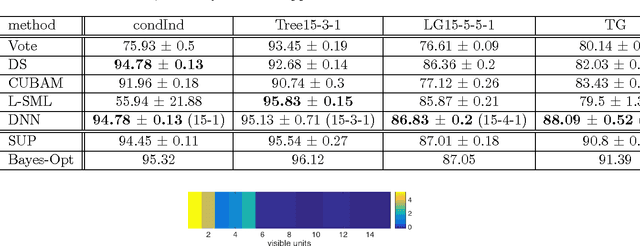

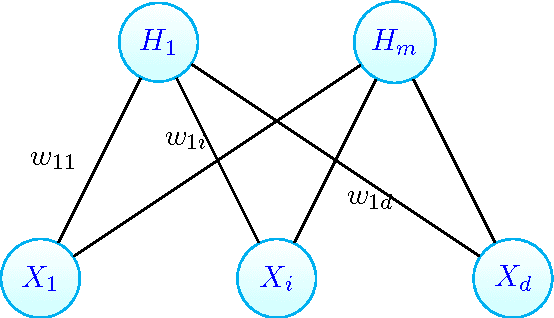

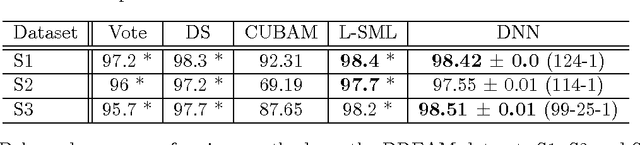

Abstract: We show how deep learning methods can be applied in the context of crowdsourcing and unsupervised ensemble learning. First, we prove that the popular model of Dawid and Skene, which assumes that all classifiers are conditionally independent, is *equivalent* to a Restricted Boltzmann Machine (RBM) with a single hidden node. Hence, under this model, the posterior probabilities of the true labels can instead be estimated via a trained RBM. Next, to address the more general case, where classifiers may strongly violate the conditional independence assumption, we propose to apply an RBM-based Deep Neural Net (DNN). Experimental results on various simulated and real-world datasets demonstrate that our proposed DNN approach outperforms other state-of-the-art methods, in particular when the data violates the conditional independence assumption.
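The equivalence claimed in the abstract can be illustrated for the binary case with a small numerical check. This is a sketch under stated assumptions, not the paper's proof or code: the function names (`ds_posterior`, `rbm_params`, `rbm_posterior`), the 0/1 vote encoding, and the example sensitivities and specificities are all hypothetical. It assumes each classifier is described by a sensitivity P(vote=1 | y=1) and a specificity P(vote=0 | y=0), and shows that the Bayes posterior under conditional independence coincides with the sigmoid activation of a single hidden unit whose weights and bias are log-odds ratios of those quantities.

```python
import itertools
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ds_posterior(votes, prior, sens, spec):
    """P(y=1 | votes) by Bayes' rule, assuming conditionally independent classifiers."""
    p1, p0 = prior, 1.0 - prior
    for x, s, t in zip(votes, sens, spec):
        p1 *= s if x == 1 else 1.0 - s      # likelihood of this vote given y=1
        p0 *= (1.0 - t) if x == 1 else t    # likelihood of this vote given y=0
    return p1 / (p1 + p0)


def rbm_params(prior, sens, spec):
    """Weights and bias of a single hidden unit that reproduce the posterior above."""
    weights = [
        math.log(s / (1.0 - t)) - math.log((1.0 - s) / t)
        for s, t in zip(sens, spec)
    ]
    bias = math.log(prior / (1.0 - prior)) + sum(
        math.log((1.0 - s) / t) for s, t in zip(sens, spec)
    )
    return weights, bias


def rbm_posterior(votes, weights, bias):
    """P(h=1 | visible votes) for one hidden unit with a sigmoid activation."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, votes)))


# Hypothetical classifier qualities, chosen only for illustration.
PRIOR = 0.4
SENS = [0.9, 0.7, 0.6]
SPEC = [0.8, 0.6, 0.7]
WEIGHTS, BIAS = rbm_params(PRIOR, SENS, SPEC)

# Largest disagreement between the two posteriors over all vote patterns.
max_gap = max(
    abs(ds_posterior(v, PRIOR, SENS, SPEC) - rbm_posterior(v, WEIGHTS, BIAS))
    for v in itertools.product([0, 1], repeat=len(SENS))
)
```

In this sketch the parameters are computed from known classifier qualities only to make the correspondence visible; in the unsupervised setting the abstract describes, those qualities are unknown and the RBM would be trained on the votes alone.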
