Josef Kitler

Rethinking Positive Pairs in Contrastive Learning

Oct 23, 2024

Abstract: Contrastive learning, a prominent approach to representation learning, traditionally assumes that positive pairs are closely related samples (the same image or the same class) and that negative pairs are distinct samples. We challenge this assumption by proposing to learn from arbitrary pairs, allowing any pair of samples to be positive within our framework. The primary challenge of this approach lies in applying contrastive learning to disparate pairs that are semantically distant. Motivated by the discovery that SimCLR can separate given arbitrary pairs (e.g., garter snake and table lamp) in a subspace, we propose a feature filter, conditioned on class pairs, that creates the requisite subspaces through gate vectors that selectively activate or deactivate feature dimensions. This filter can be optimized through gradient descent within a conventional contrastive learning mechanism. We present Hydra, a universal contrastive learning framework for visual representations that extends conventional contrastive learning to accommodate arbitrary pairs. Our approach is validated on IN1K, where 1K diverse classes form 500,500 pairs, most of them distinct. Surprisingly, Hydra achieves superior performance in this challenging setting. Additional benefits include the prevention of dimensional collapse and the discovery of class relationships. Our work highlights the value of learning common features of arbitrary pairs and potentially broadens the applicability of contrastive learning techniques to sample pairs with weak relationships.
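
The gating idea can be illustrated with a minimal sketch. It is not Hydra's implementation: storing each gate as a vector of logits, turning it into a soft mask with a sigmoid, the masked cosine similarity, and the InfoNCE-style loss with temperature `tau=0.1` are all assumptions chosen for illustration, and the helper names (`num_class_pairs`, `pair_key`, `gated_cosine`, `info_nce`) are hypothetical. The sketch also checks the pair count: with k classes there are k(k+1)/2 unordered class pairs when same-class pairs are included, which gives 500,500 for k = 1000.

```python
import math


def num_class_pairs(k):
    """Unordered class pairs, same-class pairs included: k * (k + 1) / 2."""
    return k * (k + 1) // 2


def pair_key(i, j):
    """Hypothetical canonical key so (i, j) and (j, i) share one gate vector."""
    return (i, j) if i <= j else (j, i)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_cosine(a, b, gate_logits, eps=1e-12):
    """Cosine similarity after soft-masking each dimension by sigmoid(logit).

    A logit far above 0 keeps a dimension (gate near 1); far below 0 switches
    it off (gate near 0), so only the selected subspace drives the similarity.
    """
    gates = [sigmoid(w) for w in gate_logits]
    fa = [x * g for x, g in zip(a, gates)]
    fb = [x * g for x, g in zip(b, gates)]
    dot = sum(x * y for x, y in zip(fa, fb))
    norm_a = math.sqrt(sum(x * x for x in fa))
    norm_b = math.sqrt(sum(x * x for x in fb))
    return dot / (norm_a * norm_b + eps)


def info_nce(anchor, positive, negatives, gate_logits, tau=0.1):
    """InfoNCE-style loss on gated similarities (assumed form, not Hydra's)."""
    logits = [gated_cosine(anchor, positive, gate_logits) / tau]
    logits += [gated_cosine(anchor, n, gate_logits) / tau for n in negatives]
    m = max(logits)  # log-sum-exp with max subtraction for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


# Two "semantically distant" features that agree only on dimension 0.
snake, lamp, other = [1.0, 2.0, -1.0], [1.0, -2.0, 1.0], [-1.0, 2.0, -1.0]
gates = {pair_key(7, 3): [10.0, -10.0, -10.0]}  # open dim 0, close dims 1-2
print(gated_cosine(snake, lamp, [10.0, 10.0, 10.0]))     # all open: about -0.67
print(gated_cosine(snake, lamp, gates[pair_key(3, 7)]))  # subspace: near 1
print(info_nce(snake, lamp, [other], [10.0, 10.0, 10.0]))
print(info_nce(snake, lamp, [other], gates[pair_key(3, 7)]))
```

In this sketch a gate is looked up per unordered class pair, so the gate table needs `num_class_pairs(k)` entries. In a trainable version the logits would be parameters updated by gradient descent together with the encoder; this stdlib-only sketch only shows the forward computation.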

SS-CXR: Multitask Representation Learning using Self Supervised Pre-training from Chest X-Rays

Nov 23, 2022

Abstract: Chest X-rays (CXRs) are a widely used imaging modality for the diagnosis and prognosis of lung disease, and the associated image analysis tasks vary, for example pathology detection and lung segmentation. There is a large body of work in which machine learning algorithms are developed for specific tasks; a significant recent example is coronavirus disease (COVID-19) detection from CXR data. However, traditional diagnostic tool design methods based on supervised learning are burdened by the need for annotated training data, which must be of good quality for better clinical outcomes. Here, we propose an alternative solution, a new self-supervised paradigm in which a general representation of CXRs is learned using a group-masked self-supervised framework. The pre-trained model is then fine-tuned for domain-specific tasks such as COVID-19 detection, pneumonia detection, and general health screening. We show that the same pre-training can be used for the lung segmentation task. Our proposed paradigm shows robust performance in multiple downstream tasks, which demonstrates the success of the pre-training. Moreover, the performance of the pre-trained models on data with significant drift at test time demonstrates that a better generic representation has been learned. The methods are further validated by COVID-19 detection on a unique small-scale pediatric data set. The accuracy gain (~25%) over a supervised transformer-based method is significant. This adds credence to the strength and reliability of our proposed framework and pre-training strategy.
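
The abstract does not define "group-masked" pre-training. Purely as an assumption for illustration, the sketch below reads it as block-wise masking in a masked-image-modeling setup: the CXR is cut into a grid of patches and whole groups of neighbouring patches (here square `group x group` blocks) are hidden together. The usual motivation for this kind of masking is that a masked patch cannot then be recovered simply by copying an adjacent visible one. The function name `group_mask` and its parameters are hypothetical.

```python
import random


def group_mask(grid_h, grid_w, group, mask_ratio, seed=None):
    """Return a grid_h x grid_w mask (True = masked patch).

    The patch grid is tiled into non-overlapping group x group blocks and a
    random subset of round(mask_ratio * n_blocks) blocks is masked as a whole.
    """
    if grid_h % group or grid_w % group:
        raise ValueError("grid size must be divisible by the group size")
    if not 0.0 <= mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in [0, 1]")
    rng = random.Random(seed)
    blocks_h, blocks_w = grid_h // group, grid_w // group
    n_blocks = blocks_h * blocks_w
    chosen = rng.sample(range(n_blocks), round(mask_ratio * n_blocks))

    mask = [[False] * grid_w for _ in range(grid_h)]
    for b in chosen:
        # Top-left patch of block b in row-major block order.
        top, left = (b // blocks_w) * group, (b % blocks_w) * group
        for r in range(top, top + group):
            for c in range(left, left + group):
                mask[r][c] = True
    return mask


# Example: a 224 x 224 CXR with 14 x 14-pixel patches gives a 16 x 16 grid.
m = group_mask(16, 16, group=4, mask_ratio=0.5, seed=0)
print(sum(map(sum, m)), "of", 16 * 16, "patches masked")
print("\n".join("".join("#" if v else "." for v in row) for row in m))
```

Whether SS-CXR masks square blocks, random patch groups, or something else is not stated in the abstract; only the idea of masking patches in groups rather than individually is illustrated here.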

SB-SSL: Slice-Based Self-Supervised Transformers for Knee Abnormality Classification from MRI

Aug 29, 2022

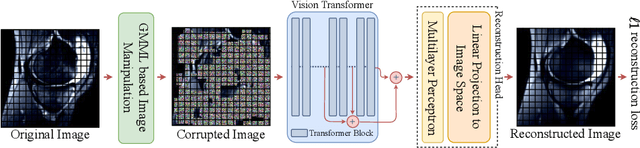

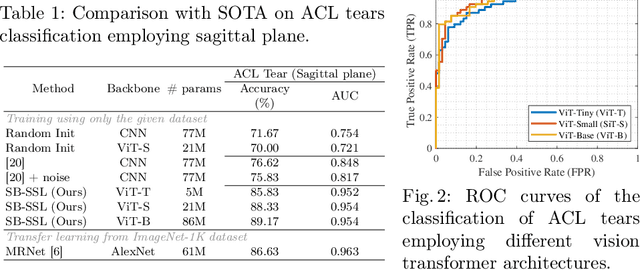

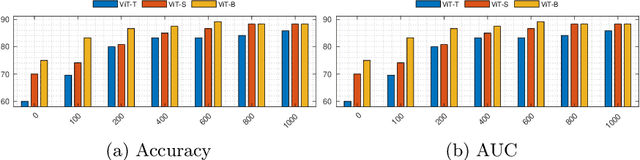

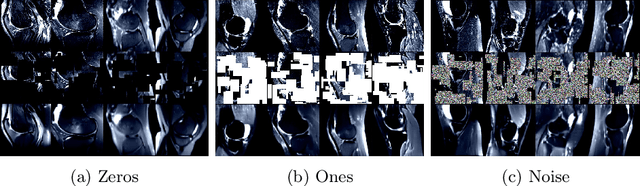

Abstract: The availability of large-scale data with high-quality ground truth labels is a challenge when developing supervised machine learning solutions for the healthcare domain. Although the amount of digital data in clinical workflows is increasing, most of this data is distributed across clinical sites and protected to ensure patient privacy. Radiological reading and the handling of large-scale clinical data put a significant burden on available resources, and this is where machine learning and artificial intelligence play a pivotal role. Magnetic Resonance Imaging (MRI) for musculoskeletal (MSK) diagnosis is one example: the scans contain a wealth of information but require a significant amount of time for reading and labeling. Self-supervised learning (SSL) can address the lack of ground truth labels, but it generally requires a large amount of training data during the pretraining stage. Herein, we propose a slice-based self-supervised deep learning framework (SB-SSL), a novel paradigm for classifying abnormalities from knee MRI scans. We show that with a limited number of cases (<1000), our proposed framework identifies anterior cruciate ligament (ACL) tears with an accuracy of 89.17% and an AUC of 0.954, outperforming the state of the art without using external data during pretraining. This demonstrates that our proposed framework is suited for SSL in the limited-data regime.
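
The slice-based idea can be sketched as follows, under assumptions the abstract does not confirm: a knee MRI volume is treated as a stack of 2D slices along its first axis, each slice is scored independently by some slice-level model (a placeholder callable here, standing in for the self-supervised transformer), and the exam-level score for a finding such as an ACL tear is the mean or max of the slice scores. The AUC helper shows how a figure like the reported 0.954 is defined: the probability that a randomly chosen positive exam is scored above a randomly chosen negative one, with ties counted as one half. All function names are hypothetical.

```python
from statistics import mean


def exam_score(volume, slice_model, how="mean"):
    """Score a volume (a sequence of 2D slices) by aggregating per-slice scores."""
    scores = [slice_model(s) for s in volume]
    if not scores:
        raise ValueError("volume has no slices")
    if how == "mean":
        return mean(scores)
    if how == "max":
        return max(scores)
    raise ValueError(f"unknown aggregation: {how}")


def roc_auc(scores, labels):
    """Rank-based AUC: P(score of a positive > score of a negative), ties = 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def mean_intensity(slice_2d):
    """Placeholder slice model: mean pixel value (a real model would be learned)."""
    return mean(v for row in slice_2d for v in row)


# Toy 3-slice volume of 2 x 2 images; only the last slice is bright.
volume = [[[0.0, 0.0], [0.0, 0.0]],
          [[0.2, 0.2], [0.2, 0.2]],
          [[0.9, 0.9], [0.9, 0.9]]]
print(exam_score(volume, mean_intensity, "mean"), exam_score(volume, mean_intensity, "max"))
print(roc_auc([0.9, 0.2, 0.6, 0.1], [1, 1, 0, 0]))  # 0.75: 3 of 4 pairs ranked correctly
```

Mean pooling favours findings visible across many slices, while max pooling reacts to a single abnormal slice; which aggregation SB-SSL uses is not stated in the abstract.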
