José V. Manjon

RegQCNET: Deep Quality Control for Image-to-template Brain MRI Registration

May 14, 2020

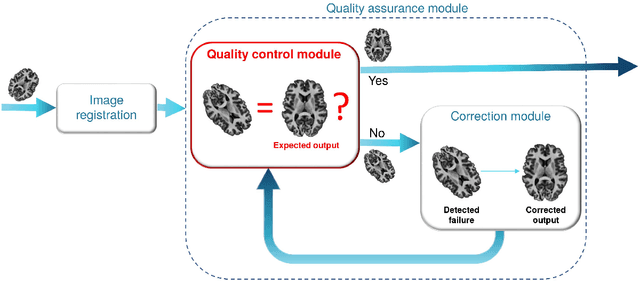

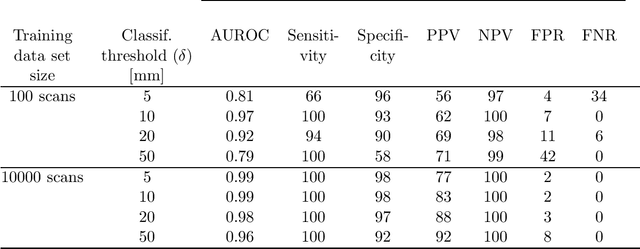

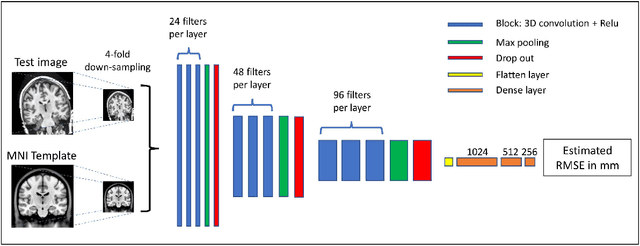

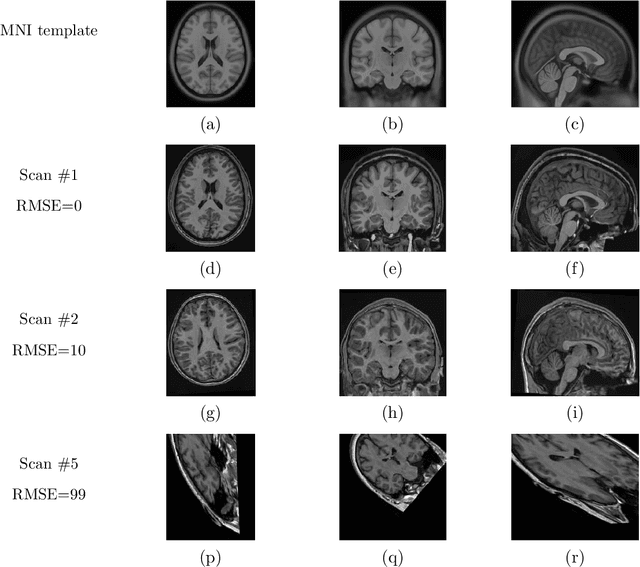

Abstract: Registration of one or several brain images onto a common reference space defined by a template is a necessary prerequisite for many image processing tasks, such as brain structure segmentation or functional MRI studies. Manual assessment of registration quality is tedious and time-consuming, especially when a large amount of data is involved. Automated and reliable quality control (QC) is thus mandatory. Moreover, the computation time of the QC must also be compatible with the processing of massive datasets. Therefore, deep neural network approaches appear as a method of choice to automatically assess registration quality. In the current study, a compact 3D CNN, referred to as RegQCNET, is introduced to quantitatively predict the amplitude of a registration mismatch between the registered image and the reference template. This quantitative estimate of registration error is expressed in metric units. Therefore, a meaningful task-specific threshold can be manually or automatically defined in order to distinguish usable from non-usable images. The robustness of the proposed RegQCNET is first analyzed on lifespan brain images undergoing various simulated spatial transformations and intensity variations between training and testing. Second, the potential of RegQCNET to classify images as usable or non-usable is evaluated using both manual and automatic thresholds. The latter were estimated using several computer-assisted classification models through cross-validation. To this end, we used expert visual quality control performed on a lifespan cohort of 3953 brains. Finally, the accuracy of RegQCNET is compared to usual image features such as the image correlation coefficient and mutual information. Results show that the proposed deep learning QC is robust, fast and accurate at estimating registration error in a processing pipeline.
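For readers unfamiliar with the baseline features named in the abstract, the following is a minimal NumPy sketch of an image correlation coefficient, a histogram-based mutual information estimate, and a threshold test on a predicted registration error. It is not the authors' implementation: the function names, the 32-bin histogram, and the use of millimetres as the error unit are all assumptions made for illustration.

```python
import numpy as np


def correlation_coefficient(image, template):
    """Pearson correlation between two images of identical shape."""
    a = np.asarray(image, dtype=np.float64).ravel()
    b = np.asarray(template, dtype=np.float64).ravel()
    return float(np.corrcoef(a, b)[0, 1])


def mutual_information(image, template, bins=32):
    """Mutual information (in nats) from a joint intensity histogram.

    The bin count is an illustrative assumption, not a value from the paper.
    """
    a = np.asarray(image, dtype=np.float64).ravel()
    b = np.asarray(template, dtype=np.float64).ravel()
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    pxy = joint / joint.sum()               # joint probability
    px = pxy.sum(axis=1, keepdims=True)     # marginal of image, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)     # marginal of template, shape (1, bins)
    nonzero = pxy > 0                       # skip empty bins to avoid log(0)
    return float(np.sum(pxy[nonzero] * np.log(pxy[nonzero] / (px @ py)[nonzero])))


def is_usable(predicted_error_mm, threshold_mm):
    """Hypothetical QC decision: accept when the predicted error is within the threshold."""
    return predicted_error_mm <= threshold_mm
```

Because the predicted error is a physical distance rather than a unitless similarity score, a threshold such as `threshold_mm` can be chosen per task, which is the property the abstract highlights over correlation or mutual information.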

AssemblyNet: A large ensemble of CNNs for 3D Whole Brain MRI Segmentation

Nov 20, 2019

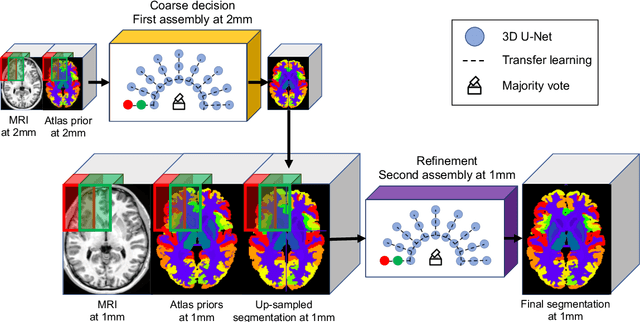

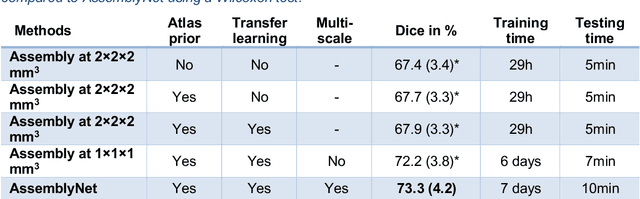

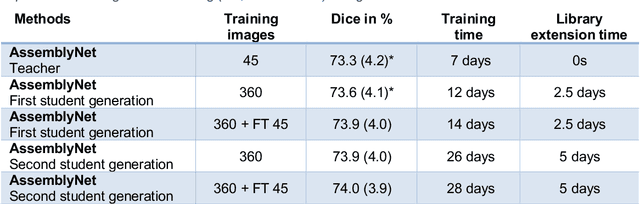

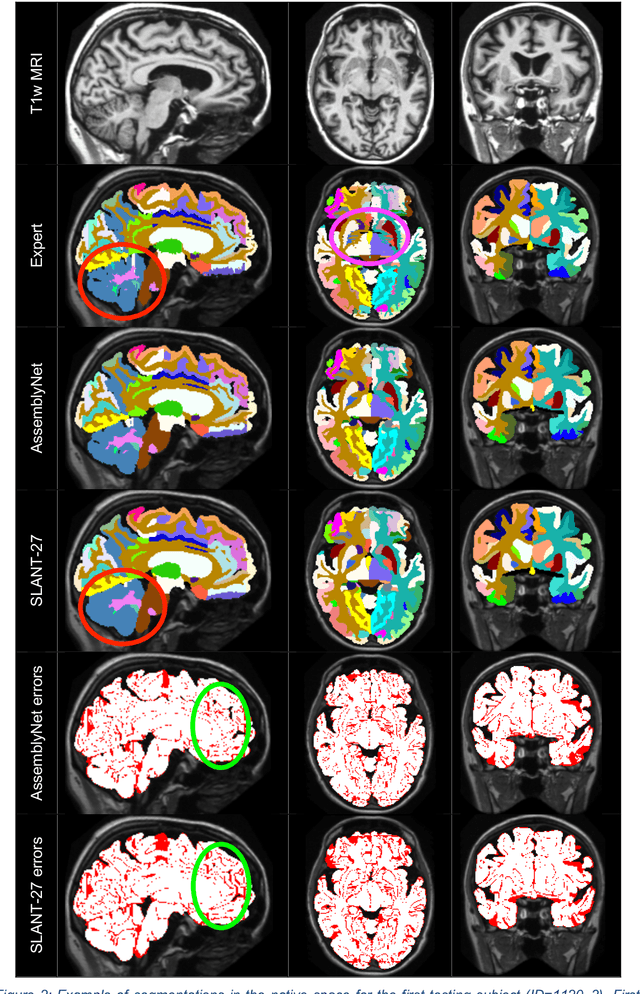

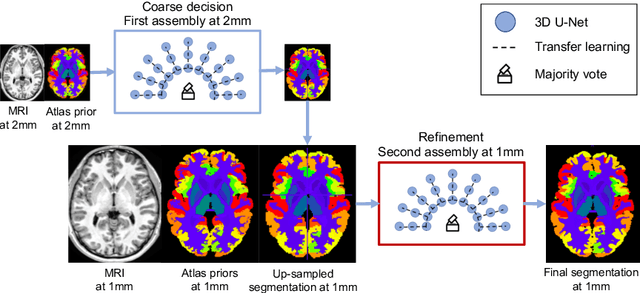

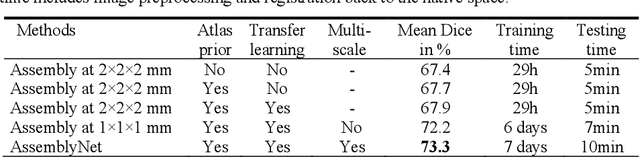

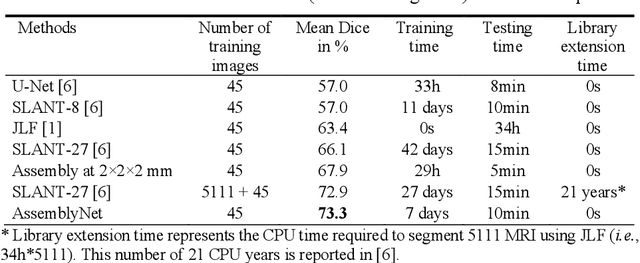

Abstract: Whole brain segmentation using deep learning (DL) is a very challenging task since the number of anatomical labels is very high compared to the number of available training images. To address this problem, previous DL methods have used a single convolutional neural network (CNN) or a few independent CNNs. In this paper, we present a novel ensemble method based on a large number of CNNs processing different overlapping brain areas. Inspired by parliamentary decision-making systems, we propose a framework called AssemblyNet, made of two "assemblies" of U-Nets. Such a parliamentary system is capable of dealing with complex decisions and unseen problems, and of reaching a consensus quickly. AssemblyNet introduces sharing of knowledge among neighboring U-Nets, an "amendment" procedure made by the second assembly at higher resolution to refine the decision taken by the first one, and a final decision obtained by majority voting. During our validation, AssemblyNet showed competitive performance compared to state-of-the-art methods such as U-Net, joint label fusion and SLANT. Moreover, we investigated the scan-rescan consistency of our method and its robustness to disease effects. These experiments demonstrated the reliability of AssemblyNet. Finally, we showed the benefit of using semi-supervised learning to improve the performance of our method.
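The final majority-voting step mentioned in the abstract can be illustrated with a small NumPy sketch. It makes a simplifying assumption that the paper does not: every model is taken to output a label map over the same voxel grid, whereas AssemblyNet's U-Nets process different overlapping brain areas. The function name and the tie-breaking rule (lowest label index wins) are also illustrative choices.

```python
import numpy as np


def majority_vote(label_maps, num_labels):
    """Per-voxel majority vote over integer label maps of identical shape.

    Ties are resolved in favor of the lowest label index (an arbitrary choice).
    """
    stacked = np.stack(label_maps, axis=0)  # shape: (n_models, ...)
    # Count, for each label, how many models voted for it at each voxel.
    votes = np.stack(
        [(stacked == label).sum(axis=0) for label in range(num_labels)],
        axis=0,
    )
    return votes.argmax(axis=0)
```

For example, three models voting `[0, 1, 2]`, `[0, 1, 1]` and `[1, 1, 2]` on three voxels produce the consensus `[0, 1, 2]`.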

AssemblyNet: A Novel Deep Decision-Making Process for Whole Brain MRI Segmentation

Jun 05, 2019

Abstract: Whole brain segmentation using deep learning (DL) is a very challenging task since the number of anatomical labels is very high compared to the number of available training images. To address this problem, previous DL methods have used a global convolutional neural network (CNN) or a few independent CNNs. In this paper, we present a novel ensemble method based on a large number of CNNs processing different overlapping brain areas. Inspired by parliamentary decision-making systems, we propose a framework called AssemblyNet, made of two "assemblies" of U-Nets. Such a parliamentary system is capable of dealing with complex decisions and reaching a consensus quickly. AssemblyNet introduces sharing of knowledge among neighboring U-Nets, an "amendment" procedure made by the second assembly at higher resolution to refine the decision taken by the first one, and a final decision obtained by majority voting. When using the same 45 training images, AssemblyNet outperforms global U-Net by 28% in terms of the Dice metric, patch-based joint label fusion by 15% and SLANT-27 by 10%. Finally, AssemblyNet demonstrates a high capacity to deal with limited training data and achieves whole brain segmentation in practical training and testing times.
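The Dice metric used for the comparison above measures the overlap between a predicted and a reference segmentation for a given label: twice the number of voxels shared by both, divided by the total number of voxels assigned to that label in each. The sketch below is a generic NumPy version, not the authors' evaluation code; the function name and the convention of returning 1.0 when the label is absent from both maps are assumptions.

```python
import numpy as np


def dice_score(prediction, reference, label):
    """Dice overlap for one label between two integer label maps."""
    pred_mask = np.asarray(prediction) == label
    ref_mask = np.asarray(reference) == label
    total = pred_mask.sum() + ref_mask.sum()
    if total == 0:
        # Label absent from both maps: treated here as perfect agreement.
        return 1.0
    overlap = np.logical_and(pred_mask, ref_mask).sum()
    return float(2.0 * overlap / total)
```

A whole brain score is then commonly obtained by averaging `dice_score` over all anatomical labels, although the abstract does not state which averaging scheme was used.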
