Jorg Conradt

The Importance of Balanced Data Sets: Analyzing a Vehicle Trajectory Prediction Model based on Neural Networks and Distributed Representations

Sep 30, 2020

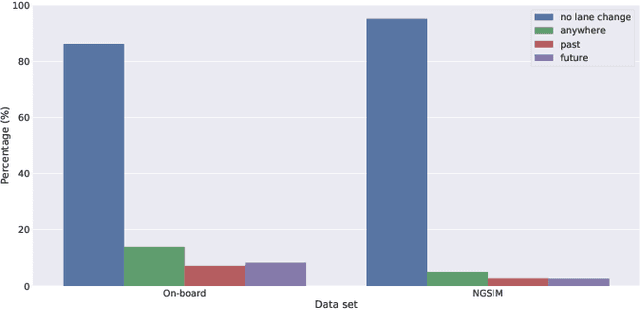

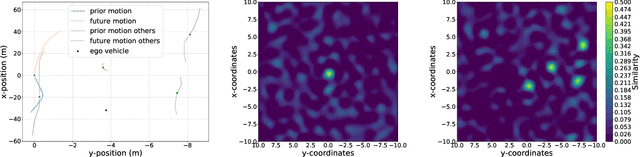

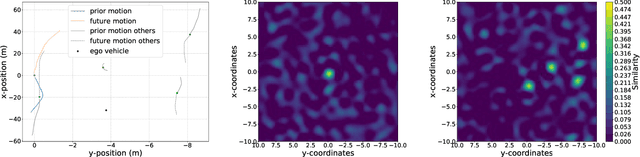

Abstract: Predicting the future behavior of other traffic participants is an essential task that automated vehicles and human drivers alike must solve to achieve safe and situation-aware driving. Modern approaches to vehicle trajectory prediction typically rely on data-driven models such as neural networks, in particular LSTMs (Long Short-Term Memory networks), and achieve promising results. However, the question of the optimal composition of the underlying training data has received less attention. In this paper, we expand on previous work on vehicle trajectory prediction based on neural network models employing distributed representations to encode automotive scenes in a semantic vector substrate. We analyze the influence of variations in the training data on the performance of our prediction models. We show that the models employing our semantic vector representation outperform the numerical model when trained on an adequate data set, and hence that the composition of training data in vehicle trajectory prediction is crucial for successful training. We conduct our analysis on challenging real-world driving data.

Analyzing the Capacity of Distributed Vector Representations to Encode Spatial Information

Sep 30, 2020

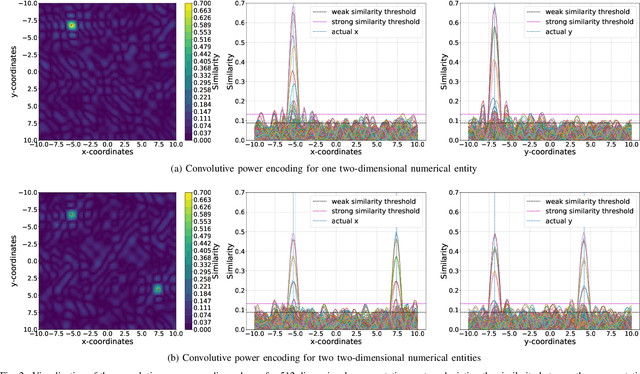

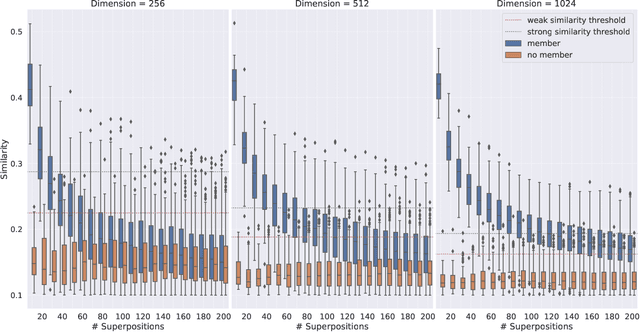

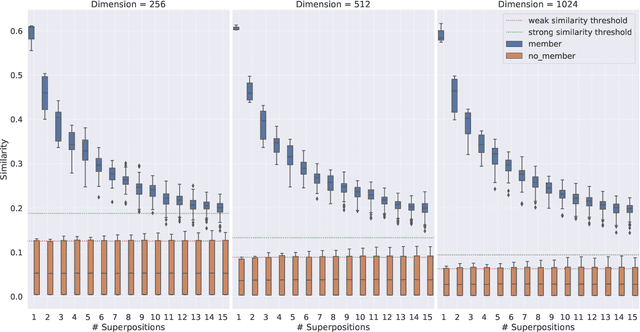

Abstract: Vector Symbolic Architectures belong to a family of related cognitive modeling approaches that encode symbols and structures in high-dimensional vectors. Similar to human subjects, whose capacity to process and store information or concepts in short-term memory is subject to numerical restrictions, the amount of information that can be encoded in such vector representations is limited, which offers one way of modeling the numerical restrictions on cognition. In this paper, we analyze these limits on the information capacity of distributed representations. We focus our analysis on simple superposition and on more complex, structured representations involving convolutive powers to encode spatial information. In two experiments, we find upper bounds for the number of concepts that can effectively be stored in a single vector.
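The capacity limit of simple superposition described in the abstract above can be illustrated with a small experiment. The following is a minimal sketch under assumed settings, not the paper's experimental setup: the function names, the vector dimension (512), the vocabulary size (1000), and the top-k retrieval criterion are all hypothetical choices made for illustration. It sums k random unit vectors into one memory vector and checks how many of the stored items are still among the k vocabulary entries most similar to that memory. Structured representations based on convolutive powers are not covered here.

```python
import numpy as np


def random_unit_vectors(n, dim, rng):
    """Draw n random vectors of the given dimension and normalize each to unit length."""
    v = rng.standard_normal((n, dim))
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def retrieval_accuracy(k, dim=512, vocab_size=1000, trials=20, seed=0):
    """Fraction of k superposed items recovered by a top-k similarity lookup.

    All defaults are illustrative assumptions, not values from the paper.
    """
    rng = np.random.default_rng(seed)
    recovered = 0
    for _ in range(trials):
        vocab = random_unit_vectors(vocab_size, dim, rng)
        stored = rng.choice(vocab_size, size=k, replace=False)
        # Superposition: the memory is the plain sum of the stored vectors.
        memory = vocab[stored].sum(axis=0)
        # Dot-product similarity of every vocabulary item to the memory.
        sims = vocab @ memory
        # Indices of the k most similar vocabulary items.
        top_k = np.argpartition(sims, -k)[-k:]
        recovered += len(set(top_k.tolist()) & set(stored.tolist()))
    return recovered / (trials * k)


if __name__ == "__main__":
    for k in (2, 10, 50, 200):
        print(k, retrieval_accuracy(k))
```

With few stored items, the stored vectors stand out clearly against the cross-talk noise; as k grows, the noise from the other superposed vectors increases and retrieval degrades, which is the qualitative effect a capacity bound captures.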

Event Based, Near Eye Gaze Tracking Beyond 10,000Hz

Apr 07, 2020

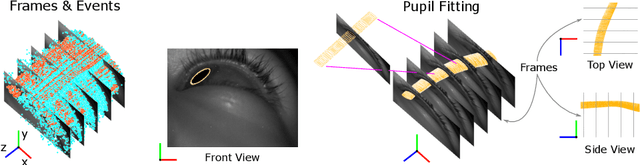

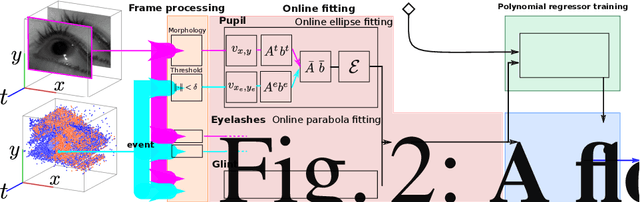

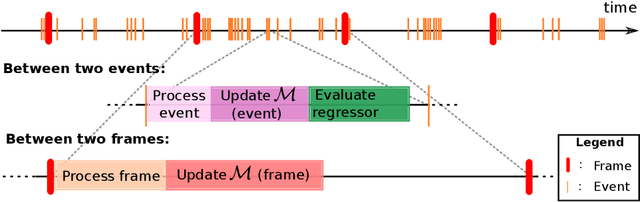

Abstract: Fast and accurate eye tracking is crucial for many applications. Current camera-based eye tracking systems, however, are fundamentally limited by their bandwidth, forcing a tradeoff between image resolution and framerate, i.e., between latency and update rate. Here, we propose a hybrid frame-event-based near-eye gaze tracking system offering update rates beyond 10,000 Hz with an accuracy that matches that of high-end desktop-mounted commercial eye trackers when evaluated in the same conditions. Our system builds on emerging event cameras that simultaneously acquire regularly sampled frames and adaptively sampled events. We develop an online 2D pupil fitting method that updates a parametric model with every one or few events. Moreover, we propose a polynomial regressor for estimating the gaze vector from the parametric pupil model in real time. Using the first hybrid frame-event gaze dataset, which will be made public, we demonstrate that our system achieves accuracies of 0.45 degrees to 1.75 degrees for fields of view ranging from 45 degrees to 98 degrees.
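The idea of a polynomial regressor that maps pupil parameters to gaze, mentioned in the abstract above, can be sketched as an ordinary least-squares fit. This is an illustration under strong assumptions, not the paper's method: it uses only a 2D pupil center as input (the paper's parametric pupil model may contain more parameters), a second-order polynomial, and two gaze angles as output. The function names `poly_features`, `fit_gaze_regressor`, and `predict_gaze` are hypothetical.

```python
import numpy as np


def poly_features(centers):
    """Second-order polynomial features of 2D pupil centers (array of shape [n, 2])."""
    centers = np.asarray(centers, dtype=float)
    x, y = centers[:, 0], centers[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])


def fit_gaze_regressor(centers, gaze):
    """Least-squares fit of polynomial coefficients from calibration samples.

    centers: [n, 2] pupil centers; gaze: [n, 2] gaze angles (assumed output format).
    Returns a [6, 2] coefficient matrix.
    """
    coeffs, *_ = np.linalg.lstsq(poly_features(centers), np.asarray(gaze, dtype=float), rcond=None)
    return coeffs


def predict_gaze(coeffs, centers):
    """Evaluate the fitted polynomial; a single matrix product per query."""
    return poly_features(centers) @ coeffs
```

After calibration, prediction costs one small matrix product per update, which is the property that makes a regressor of this kind attractive at very high update rates.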
