Joost Prins

An optimal Petrov-Galerkin framework for operator networks

Mar 06, 2025Abstract:The optimal Petrov-Galerkin formulation to solve partial differential equations (PDEs) recovers the best approximation in a specified finite-dimensional (trial) space with respect to a suitable norm. However, the recovery of this optimal solution is contingent on being able to construct the optimal weighting functions associated with the trial basis. While explicit constructions are available for simple one- and two-dimensional problems, such constructions for a general multidimensional problem remain elusive. In the present work, we revisit the optimal Petrov-Galerkin formulation through the lens of deep learning. We propose an operator network framework called Petrov-Galerkin Variationally Mimetic Operator Network (PG-VarMiON), which emulates the optimal Petrov-Galerkin weak form of the underlying PDE. The PG-VarMiON is trained in a supervised manner using a labeled dataset comprising the PDE data and the corresponding PDE solution, with the training loss depending on the choice of the optimal norm. The special architecture of the PG-VarMiON allows it to implicitly learn the optimal weighting functions, thus endowing the proposed operator network with the ability to generalize well beyond the training set. We derive approximation error estimates for PG-VarMiON, highlighting the contributions of various error sources, particularly the error in learning the true weighting functions. Several numerical results are presented for the advection-diffusion equation to demonstrate the efficacy of the proposed method. By embedding the Petrov-Galerkin structure into the network architecture, PG-VarMiON exhibits greater robustness and improved generalization compared to other popular deep operator frameworks, particularly when the training data is limited.

Neural Green's Operators for Parametric Partial Differential Equations

Jun 04, 2024

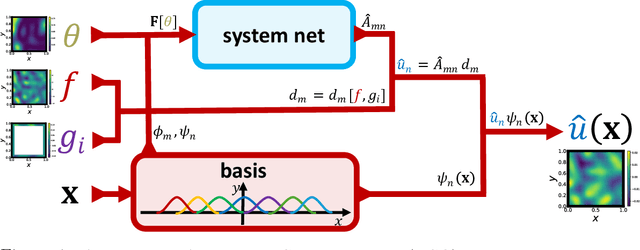

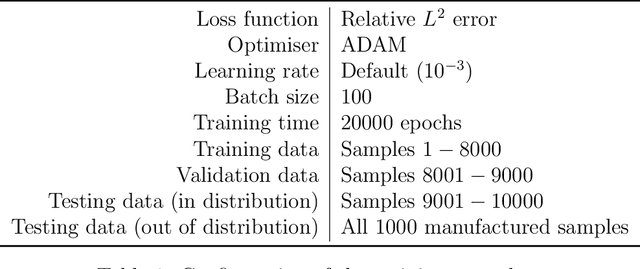

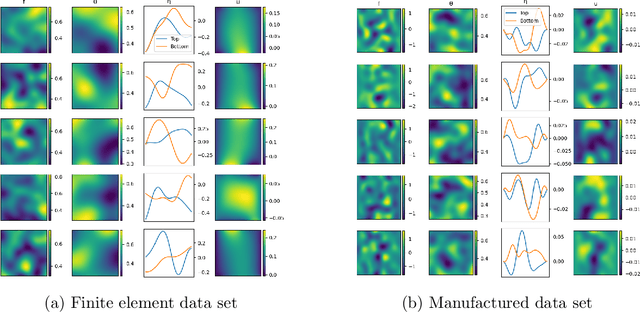

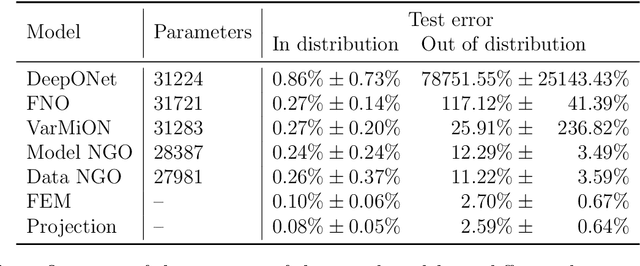

Abstract:This work introduces neural Green's operators (NGOs), a novel neural operator network architecture that learns the solution operator for a parametric family of linear partial differential equations (PDEs). Our construction of NGOs is derived directly from the Green's formulation of such a solution operator. Similar to deep operator networks (DeepONets) and variationally mimetic operator networks (VarMiONs), NGOs constitutes an expansion of the solution to the PDE in terms of basis functions, that is returned from a sub-network, contracted with coefficients, that are returned from another sub-network. However, in accordance with the Green's formulation, NGOs accept weighted averages of the input functions, rather than sampled values thereof, as is the case in DeepONets and VarMiONs. Application of NGOs to canonical linear parametric PDEs shows that, while they remain competitive with DeepONets, VarMiONs and Fourier neural operators when testing on data that lie within the training distribution, they robustly generalize when testing on finer-scale data generated outside of the training distribution. Furthermore, we show that the explicit representation of the Green's function that is returned by NGOs enables the construction of effective preconditioners for numerical solvers for PDEs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge