Jonathan Vacher

MAP5 - UMR 8145

Deep Neural Networks as Iterated Function Systems and a Generalization Bound

Jan 27, 2026

Abstract:Deep neural networks (DNNs) achieve remarkable performance on a wide range of tasks, yet their mathematical analysis remains fragmented: stability and generalization are typically studied in disparate frameworks and on a case-by-case basis. Architecturally, DNNs rely on the recursive application of parametrized functions, a mechanism that can be unstable and difficult to train, making stability a primary concern. Even when training succeeds, there are few rigorous results on how well such models generalize beyond the observed data, especially in the generative setting. In this work, we leverage the theory of stochastic Iterated Function Systems (IFS) and show that two important deep architectures can be viewed as, or canonically associated with, place-dependent IFS. This connection allows us to import results from random dynamical systems to (i) establish the existence and uniqueness of invariant measures under suitable contractivity assumptions, and (ii) derive a Wasserstein generalization bound for generative modeling. The bound naturally leads to a new training objective that directly controls the collage-type approximation error between the data distribution and its image under the learned transfer operator. We illustrate the theory on a controlled 2D example and empirically evaluate the proposed objective on standard image datasets (MNIST, CelebA, CIFAR-10).
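As a rough illustration of what a place-dependent IFS is, the following minimal sketch iterates two contractive maps on the unit interval, choosing between them with a probability that depends on the current point. The maps `f1` and `f2`, the probability `prob_f1`, and the helper `orbit_mean` are hypothetical choices made for this example and are not taken from the paper. With contractive maps and probabilities that vary smoothly and stay away from zero, one expects long-run averages to agree regardless of the starting point, which is the practical signature of a unique invariant measure.

```python
import random


def f1(x):
    # Contraction of ratio 1/2 toward 0 (hypothetical map).
    return 0.5 * x


def f2(x):
    # Contraction of ratio 1/2 toward 1 (hypothetical map).
    return 0.5 * x + 0.5


def prob_f1(x):
    # Place-dependent probability of applying f1; stays in [0.25, 0.75] on [0, 1].
    return 0.25 + 0.5 * x


def orbit_mean(x0, n_steps, seed):
    """Average of the orbit x_{k+1} = f_i(x_k), with i drawn according to prob_f1(x_k)."""
    rng = random.Random(seed)
    x = x0
    total = 0.0
    for _ in range(n_steps):
        x = f1(x) if rng.random() < prob_f1(x) else f2(x)
        total += x
    return total / n_steps


# Two runs with different starting points and different random draws.
mean_a = orbit_mean(0.0, 20000, seed=0)
mean_b = orbit_mean(1.0, 20000, seed=1)
print(mean_a, mean_b)
```

The two printed averages should be close to each other, since both orbits sample the same limiting distribution.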

Perceptual Measurements, Distances and Metrics

Oct 18, 2023

Abstract:Perception is often viewed as a process that transforms physical variables, external to an observer, into internal psychological variables. Such a process can be modeled by a function coined perceptual scale. The perceptual scale can be deduced from psychophysical measurements that consist in comparing the relative differences between stimuli (i.e. difference scaling experiments). However, this approach is often overlooked by the modeling and experimentation communities. Here, we demonstrate the value of measuring the perceptual scale of classical (spatial frequency, orientation) and less classical physical variables (interpolation between textures) by embedding it in recent probabilistic modeling of perception. First, we show that the assumption that an observer has an internal representation of univariate parameters such as spatial frequency or orientation while stimuli are high-dimensional does not lead to contradictory predictions when following the theoretical framework. Second, we show that the measured perceptual scale corresponds to the transduction function hypothesized in this framework. In particular, we demonstrate that it is related to the Fisher information of the generative model that underlies perception and we test the predictions given by the generative model of different stimuli in a set of difference scaling experiments. Our main conclusion is that the perceptual scale is mostly driven by the stimulus power spectrum. Finally, we propose that this measure of perceptual scale is a way to push further the notion of perceptual distances by estimating the perceptual geometry of images, i.e. the path between images instead of simply the distance between them.

Measuring uncertainty in human visual segmentation

Jan 18, 2023

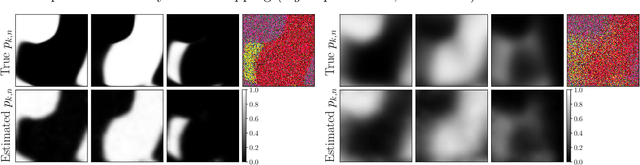

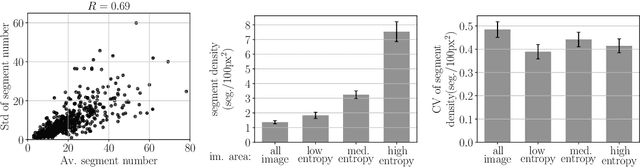

Abstract:Segmenting visual stimuli into distinct groups of features and visual objects is central to visual function. Classical psychophysical methods have helped uncover many rules of human perceptual segmentation, and recent progress in machine learning has produced successful algorithms. Yet, the computational logic of human segmentation remains unclear, partially because we lack well-controlled paradigms to measure perceptual segmentation maps and compare models quantitatively. Here we propose a new, integrated approach: given an image, we measure multiple pixel-based same-different judgments and perform model-based reconstruction of the underlying segmentation map. The reconstruction is robust to several experimental manipulations and captures the variability of individual participants. We demonstrate the validity of the approach on human segmentation of natural images and composite textures. We show that image uncertainty affects measured human variability, and it influences how participants weigh different visual features. Because any putative segmentation algorithm can be inserted to perform the reconstruction, our paradigm affords quantitative tests of theories of perception as well as new benchmarks for segmentation algorithms.

Texture Interpolation for Probing Visual Perception

Jun 05, 2020

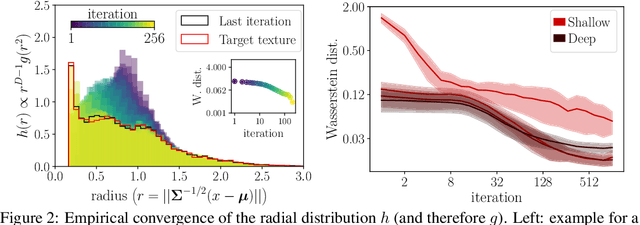

Abstract:Texture synthesis models are important to understand visual processing. In particular, statistical approaches based on neurally relevant features have been instrumental to understanding aspects of visual perception and of neural coding. New deep learning-based approaches further improve the quality of synthetic textures. Yet, it is still unclear why deep texture synthesis performs so well, and applications of this new framework to probe visual perception are scarce. Here, we show that distributions of deep convolutional neural network (CNN) activations of a texture are well described by elliptical distributions and therefore, following optimal transport theory, constraining their mean and covariance is sufficient to generate new texture samples. Then, we propose the natural geodesics (i.e. the shortest path between two points) arising with the optimal transport metric to interpolate between arbitrary textures. The comparison to alternative interpolation methods suggests that ours matches more closely the geometry of texture perception, and is better suited to study its statistical nature. We demonstrate our method by measuring the perceptual scale associated to the interpolation parameter in human observers, and the neural sensitivity of different areas of visual cortex in macaque monkeys.
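The optimal transport geodesic mentioned in this abstract has a simple closed form in the Gaussian case. The sketch below is a simplified illustration rather than the paper's implementation: it assumes diagonal covariances, so each distribution is described by a mean vector and a vector of per-dimension standard deviations, and in that case the 2-Wasserstein geodesic interpolates both linearly. The function names `w2_distance` and `w2_geodesic` and the numerical values are hypothetical.

```python
import math


def w2_distance(mean0, std0, mean1, std1):
    """2-Wasserstein distance between two Gaussians with diagonal covariances."""
    sq_means = sum((a - b) ** 2 for a, b in zip(mean0, mean1))
    sq_stds = sum((a - b) ** 2 for a, b in zip(std0, std1))
    return math.sqrt(sq_means + sq_stds)


def w2_geodesic(mean0, std0, mean1, std1, t):
    """Point at time t in [0, 1] on the geodesic: means and standard deviations
    are interpolated linearly (valid here because the covariances are diagonal)."""
    mean_t = [(1 - t) * a + t * b for a, b in zip(mean0, mean1)]
    std_t = [(1 - t) * a + t * b for a, b in zip(std0, std1)]
    return mean_t, std_t


# Hypothetical statistics of two textures in a 2D feature space.
mean_a, std_a = [0.0, 0.0], [1.0, 2.0]
mean_b, std_b = [4.0, 0.0], [3.0, 2.0]

mean_mid, std_mid = w2_geodesic(mean_a, std_a, mean_b, std_b, t=0.5)
total = w2_distance(mean_a, std_a, mean_b, std_b)
half = w2_distance(mean_a, std_a, mean_mid, std_mid)
print(mean_mid, std_mid, total, half)
```

The midpoint lies at half the total distance from either endpoint, which is what distinguishes a geodesic from an arbitrary interpolation path. For full covariance matrices the interpolation involves matrix square roots and is not covered by this sketch.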

Combining mixture models with linear mixing updates: multilayer image segmentation and synthesis

May 25, 2019

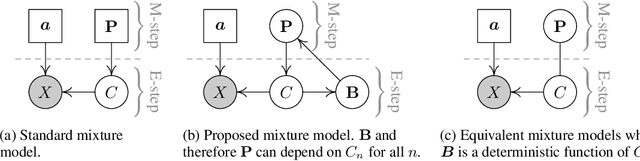

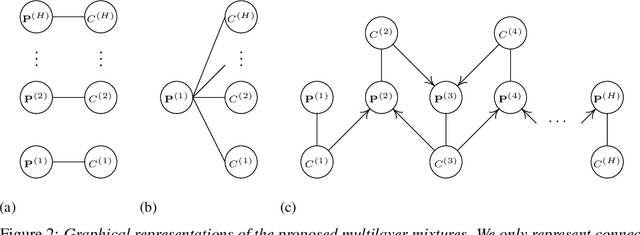

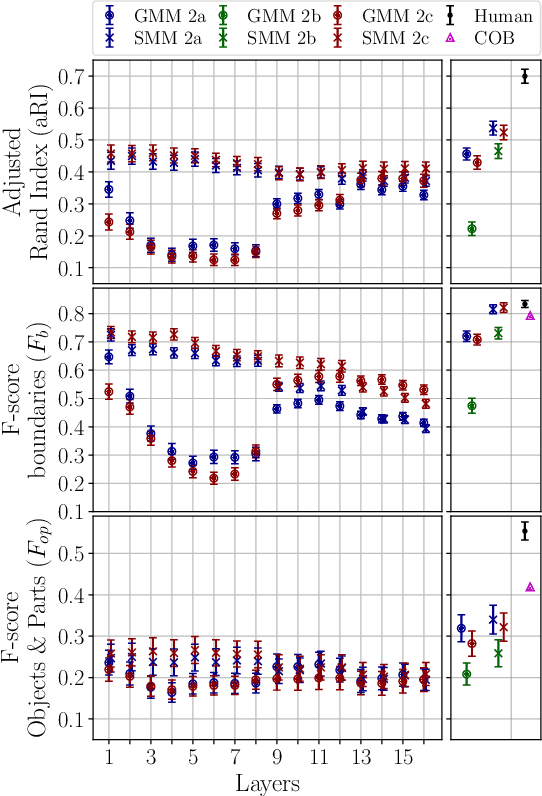

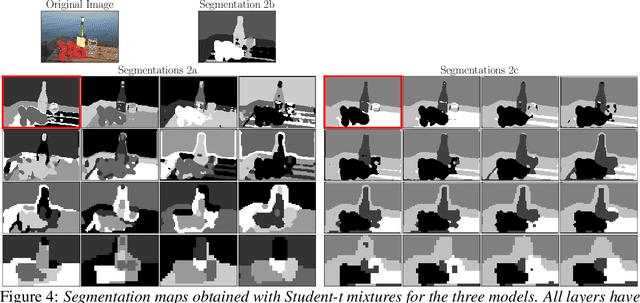

Abstract:Finite mixture models for clustering can often be improved by adding a regularization that is specific to the topology of the data. For instance, mixtures are common in unsupervised image segmentation, and typically rely on averaging the posterior mixing probabilities of spatially adjacent data points (i.e. smoothing). However, this approach has had limited success with natural images. Here we make three contributions. First, we show that, within the Expectation-Maximization approach, a Dirichlet prior with an appropriate choice of parameters makes it possible to define any linear update rule for the mixing probabilities, including many smoothing regularizations as special cases. Second, we demonstrate how to use this flexible design of the update rule to propagate segmentation information across layers of a deep network, and to train mixtures jointly across layers. Third, we compare the standard Gaussian mixture and the Student-t mixture, which is known to better capture the statistics of low-level visual features. We show that our models achieve competitive performance in natural image segmentation, with the Student-t mixtures reaching state-of-the-art on boundary scores. We also demonstrate how to exploit the resulting multilayer probabilistic generative model to synthesize naturalistic images beyond uniform textures.

Bayesian Modeling of Motion Perception using Dynamical Stochastic Textures

Aug 21, 2018

Abstract:A common practice to account for psychophysical biases in vision is to frame them as consequences of a dynamic process relying on optimal inference with respect to a generative model. The present study details the complete formulation of such a generative model intended to probe visual motion perception with a dynamic texture model. It is first derived in a set of axiomatic steps constrained by biological plausibility. We extend previous contributions by detailing three equivalent formulations of this texture model. First, the composite dynamic textures are constructed by the random aggregation of warped patterns, which can be viewed as 3D Gaussian fields. Secondly, these textures are cast as solutions to a stochastic partial differential equation (sPDE). This essential step enables real time, on-the-fly texture synthesis using time-discretized auto-regressive processes. It also allows for the derivation of a local motion-energy model, which corresponds to the log-likelihood of the probability density. The log-likelihoods are essential for the construction of a Bayesian inference framework. We use the dynamic texture model to psychophysically probe speed perception in humans using zoom-like changes in the spatial frequency content of the stimulus. The human data replicates previous findings showing perceived speed to be positively biased by spatial frequency increments. A Bayesian observer who combines a Gaussian likelihood centered at the true speed and a spatial frequency dependent width with a "slow speed prior" successfully accounts for the perceptual bias. More precisely, the bias arises from a decrease in the observer's likelihood width estimated from the experiments as the spatial frequency increases. Such a trend is compatible with the trend of the dynamic texture likelihood width.
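The mechanism described at the end of this abstract can be made concrete with a small worked example. The sketch below assumes, purely for illustration, that the "slow speed prior" is a zero-mean Gaussian; the abstract does not state the prior's form, and the function name `perceived_speed` and all numerical widths are hypothetical. Under that assumption the posterior is Gaussian and its mean is the true speed shrunk toward zero by a factor that depends on the ratio of the likelihood and prior widths.

```python
def perceived_speed(true_speed, likelihood_width, prior_width):
    """Posterior mean for a Gaussian likelihood centered at true_speed
    combined with a zero-mean Gaussian prior (assumed form of the slow speed prior)."""
    shrinkage = prior_width ** 2 / (prior_width ** 2 + likelihood_width ** 2)
    return shrinkage * true_speed


# Hypothetical likelihood widths, decreasing as spatial frequency increases.
likelihood_widths = [2.0, 1.0, 0.5]
estimates = [perceived_speed(10.0, w, prior_width=4.0) for w in likelihood_widths]
print(estimates)
```

As the likelihood narrows, the prior pulls the estimate less strongly toward zero, so the perceived speed increases with spatial frequency while always remaining below the true speed.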

An Ideal Observer Model to Probe Human Visual Segmentation of Natural Images

Jun 04, 2018

Abstract:Visual segmentation is a key perceptual function that partitions visual space and allows for detection, recognition and discrimination of objects in complex environments. The processes underlying human segmentation of natural images are still poorly understood. Existing datasets rely on manual labeling that conflates perceptual, motor, and cognitive factors. In part, this is because we lack an ideal observer model of segmentation to guide constrained experiments. On the other hand, despite recent progress in machine learning, modern algorithms still fall short of human segmentation performance. Our goal here is two-fold: (i) to propose a model to probe human visual segmentation mechanisms and (ii) to develop an efficient algorithm for image segmentation. To this end, we propose a novel probabilistic generative model of visual segmentation that for the first time combines 1) knowledge about the sensitivity of neurons in the visual cortex to statistical regularities in natural images; and 2) non-parametric Bayesian priors over segmentation maps (i.e. partitions of the visual space). We provide an algorithm for learning and inference, validate it on synthetic data, and illustrate how the two components of our model improve segmentation of natural images. We then show that the posterior distribution over segmentations captures well the variability across human subjects, indicating that our model provides a viable approach to probe human visual segmentation.

Biologically Inspired Dynamic Textures for Probing Motion Perception

Nov 09, 2015

Abstract:Perception is often described as a predictive process based on an optimal inference with respect to a generative model. We study here the principled construction of a generative model specifically crafted to probe motion perception. In that context, we first provide an axiomatic, biologically-driven derivation of the model. This model synthesizes random dynamic textures which are defined by stationary Gaussian distributions obtained by the random aggregation of warped patterns. Importantly, we show that this model can equivalently be described as a stochastic partial differential equation. This characterization of motion in images allows us to recast motion-energy models into a principled Bayesian inference framework. Finally, we apply these textures in order to psychophysically probe speed perception in humans. In this framework, while the likelihood is derived from the generative model, the prior is estimated from the observed results and accounts for the perceptual bias in a principled fashion.
