Pascal Mamassian

Perceptual Measurements, Distances and Metrics

Oct 18, 2023

Abstract: Perception is often viewed as a process that transforms physical variables, external to an observer, into internal psychological variables. Such a process can be modeled by a function termed the perceptual scale. The perceptual scale can be deduced from psychophysical measurements that consist in comparing the relative differences between stimuli (i.e., difference scaling experiments). However, this approach is often overlooked by the modeling and experimentation communities. Here, we demonstrate the value of measuring the perceptual scale of classical (spatial frequency, orientation) and less classical (interpolation between textures) physical variables by embedding it in recent probabilistic modeling of perception. First, we show that the assumption that an observer has an internal representation of univariate parameters such as spatial frequency or orientation, while stimuli are high-dimensional, does not lead to contradictory predictions when following the theoretical framework. Second, we show that the measured perceptual scale corresponds to the transduction function hypothesized in this framework. In particular, we demonstrate that it is related to the Fisher information of the generative model that underlies perception, and we test the predictions given by the generative model of different stimuli in a set of difference scaling experiments. Our main conclusion is that the perceptual scale is mostly driven by the stimulus power spectrum. Finally, we propose that this measure of perceptual scale is a way to push further the notion of perceptual distances by estimating the perceptual geometry of images, i.e., the path between images instead of simply the distance between them.

Measuring uncertainty in human visual segmentation

Jan 18, 2023

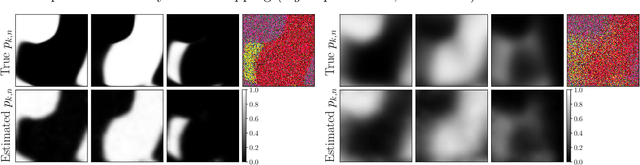

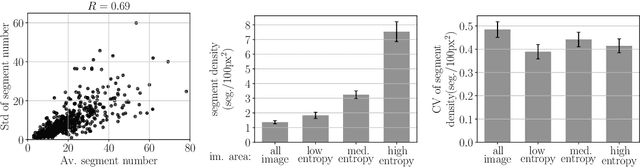

Abstract: Segmenting visual stimuli into distinct groups of features and visual objects is central to visual function. Classical psychophysical methods have helped uncover many rules of human perceptual segmentation, and recent progress in machine learning has produced successful algorithms. Yet, the computational logic of human segmentation remains unclear, partially because we lack well-controlled paradigms to measure perceptual segmentation maps and compare models quantitatively. Here we propose a new, integrated approach: given an image, we measure multiple pixel-based same-different judgments and perform model-based reconstruction of the underlying segmentation map. The reconstruction is robust to several experimental manipulations and captures the variability of individual participants. We demonstrate the validity of the approach on human segmentation of natural images and composite textures. We show that image uncertainty affects measured human variability, and it influences how participants weigh different visual features. Because any putative segmentation algorithm can be inserted to perform the reconstruction, our paradigm affords quantitative tests of theories of perception as well as new benchmarks for segmentation algorithms.

An Ideal Observer Model to Probe Human Visual Segmentation of Natural Images

Jun 04, 2018

Abstract: Visual segmentation is a key perceptual function that partitions visual space and allows for detection, recognition and discrimination of objects in complex environments. The processes underlying human segmentation of natural images are still poorly understood. Existing datasets rely on manual labeling that conflates perceptual, motor, and cognitive factors. In part, this is because we lack an ideal observer model of segmentation to guide constrained experiments. On the other hand, despite recent progress in machine learning, modern algorithms still fall short of human segmentation performance. Our goal here is two-fold: (i) propose a model to probe human visual segmentation mechanisms and (ii) develop an efficient algorithm for image segmentation. To this end, we propose a novel probabilistic generative model of visual segmentation that for the first time combines 1) knowledge about the sensitivity of neurons in the visual cortex to statistical regularities in natural images; and 2) non-parametric Bayesian priors over segmentation maps (i.e., partitions of the visual space). We provide an algorithm for learning and inference, validate it on synthetic data, and illustrate how the two components of our model improve segmentation of natural images. We then show that the posterior distribution over segmentations captures well the variability across human subjects, indicating that our model provides a viable approach to probe human visual segmentation.
