An Ideal Observer Model to Probe Human Visual Segmentation of Natural Images


Jun 04, 2018

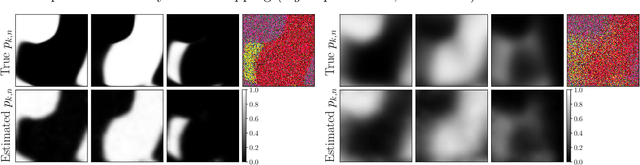

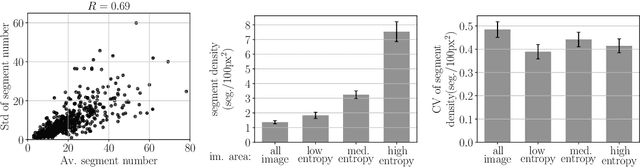

Visual segmentation is a key perceptual function that partitions visual space and allows for detection, recognition, and discrimination of objects in complex environments. The processes underlying human segmentation of natural images are still poorly understood. Existing datasets rely on manual labeling that conflates perceptual, motor, and cognitive factors. In part, this is because we lack an ideal observer model of segmentation to guide constrained experiments. On the other hand, despite recent progress in machine learning, modern algorithms still fall short of human segmentation performance. Our goal here is two-fold: (i) to propose a model to probe human visual segmentation mechanisms and (ii) to develop an efficient algorithm for image segmentation. To this end, we propose a novel probabilistic generative model of visual segmentation that for the first time combines (1) knowledge about the sensitivity of neurons in the visual cortex to statistical regularities in natural images and (2) non-parametric Bayesian priors over segmentation maps (i.e., partitions of the visual space). We provide an algorithm for learning and inference, validate it on synthetic data, and illustrate how the two components of our model improve segmentation of natural images. We then show that the posterior distribution over segmentations captures the variability across human subjects well, indicating that our model provides a viable approach to probe human visual segmentation.
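To make the idea of a non-parametric prior over partitions concrete, here is a minimal sketch that draws one partition from a Chinese restaurant process (CRP). The abstract does not say which prior the authors use, so the CRP, the function name `sample_crp_partition`, and the concentration parameter `alpha` are illustrative assumptions, not the paper's model.

```python
import random


def sample_crp_partition(n_items, alpha, seed=None):
    """Draw one partition of n_items from a Chinese restaurant process.

    Illustrative assumption: the CRP stands in for a generic
    non-parametric prior over partitions; it is not taken from the paper.
    """
    rng = random.Random(seed)
    labels = []  # labels[i] = segment index assigned to item i
    counts = []  # counts[k] = number of items currently in segment k
    for _ in range(n_items):
        # An existing segment k is chosen with weight counts[k];
        # a brand-new segment is opened with weight alpha.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)  # open a new segment
        else:
            counts[k] += 1  # join an existing segment
        labels.append(k)
    return labels


# Hypothetical usage: 20 items (e.g. image patches), concentration 1.0.
labels = sample_crp_partition(n_items=20, alpha=1.0, seed=0)
```

The number of segments is not fixed in advance: it grows with the data, and a larger `alpha` tends to favor more segments. This is the property that makes such priors a natural fit for segmentation maps, where the number of regions is unknown.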
