Ruben Coen-Cagli

Measuring uncertainty in human visual segmentation

Jan 18, 2023

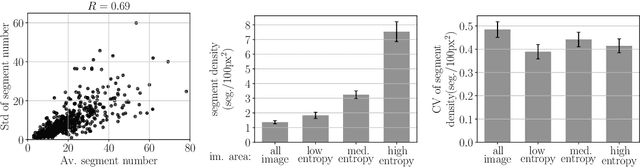

Abstract: Segmenting visual stimuli into distinct groups of features and visual objects is central to visual function. Classical psychophysical methods have helped uncover many rules of human perceptual segmentation, and recent progress in machine learning has produced successful algorithms. Yet the computational logic of human segmentation remains unclear, partly because we lack well-controlled paradigms to measure perceptual segmentation maps and compare models quantitatively. Here we propose a new, integrated approach: given an image, we measure multiple pixel-based same-different judgments and perform model-based reconstruction of the underlying segmentation map. The reconstruction is robust to several experimental manipulations and captures the variability of individual participants. We demonstrate the validity of the approach on human segmentation of natural images and composite textures. We show that image uncertainty affects measured human variability and influences how participants weigh different visual features. Because any putative segmentation algorithm can be inserted to perform the reconstruction, our paradigm affords quantitative tests of theories of perception as well as new benchmarks for segmentation algorithms.
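To make the idea of scoring a candidate segmentation map against same-different judgments concrete, here is a minimal sketch. It is not the reconstruction procedure from the paper: it assumes (as an illustration only) that each pixel has a probability vector over segments and that the two pixels of a pair are labeled independently, so the probability of a "same" response is the dot product of the two vectors. The names `prob_same` and `log_likelihood` are hypothetical.

```python
import numpy as np

def prob_same(seg_probs, pairs):
    """Probability that each pixel pair falls in the same segment.

    seg_probs: (n_pixels, n_segments) array, rows sum to 1 (assumed format).
    pairs:     (n_pairs, 2) integer array of pixel indices.
    Assumes the two labels are drawn independently (illustrative assumption).
    """
    p_i = seg_probs[pairs[:, 0]]
    p_j = seg_probs[pairs[:, 1]]
    return np.sum(p_i * p_j, axis=1)

def log_likelihood(seg_probs, pairs, said_same):
    """Bernoulli log-likelihood of the observed same/different responses."""
    p = np.clip(prob_same(seg_probs, pairs), 1e-9, 1.0 - 1e-9)
    return np.sum(np.where(said_same, np.log(p), np.log1p(-p)))
```

Under these assumptions, any algorithm that outputs per-pixel segment probabilities can be compared on the same judgments by its log-likelihood.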

Texture Interpolation for Probing Visual Perception

Jun 05, 2020

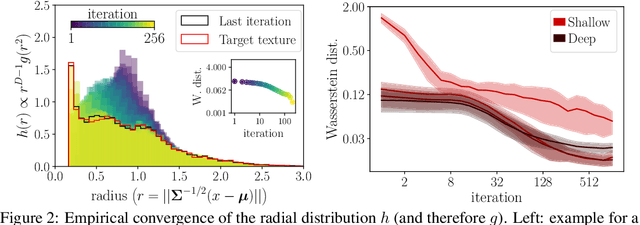

Abstract: Texture synthesis models are important tools for understanding visual processing. In particular, statistical approaches based on neurally relevant features have been instrumental to understanding aspects of visual perception and of neural coding. New deep learning-based approaches further improve the quality of synthetic textures. Yet it is still unclear why deep texture synthesis performs so well, and applications of this new framework to probe visual perception are scarce. Here, we show that distributions of deep convolutional neural network (CNN) activations of a texture are well described by elliptical distributions and therefore, following optimal transport theory, constraining their mean and covariance is sufficient to generate new texture samples. Then, we propose the natural geodesics (i.e., the shortest paths between two points) arising with the optimal transport metric to interpolate between arbitrary textures. The comparison to alternative interpolation methods suggests that ours matches the geometry of texture perception more closely and is better suited to studying its statistical nature. We demonstrate our method by measuring the perceptual scale associated with the interpolation parameter in human observers, and the neural sensitivity of different areas of visual cortex in macaque monkeys.
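The geodesic mentioned above has a closed form when the two distributions are Gaussian (a standard result for the 2-Wasserstein metric). The sketch below interpolates a mean and covariance along that path. It is only an illustration: it works on generic mean/covariance pairs rather than CNN activations, it assumes the starting covariance is positive definite, and the function names are hypothetical.

```python
import numpy as np

def sqrtm_psd(a):
    """Symmetric square root of a symmetric positive semi-definite matrix."""
    w, v = np.linalg.eigh(a)
    w = np.clip(w, 0.0, None)  # guard against tiny negative eigenvalues
    return (v * np.sqrt(w)) @ v.T

def gaussian_w2_geodesic(m0, s0, m1, s1, t):
    """Point at t in [0, 1] on the 2-Wasserstein geodesic between
    N(m0, s0) and N(m1, s1). Requires s0 to be positive definite."""
    r0 = sqrtm_psd(s0)
    r0_inv = np.linalg.inv(r0)
    # Linear optimal transport map T, which satisfies T s0 T = s1.
    transport = r0_inv @ sqrtm_psd(r0 @ s1 @ r0) @ r0_inv
    a = (1.0 - t) * np.eye(len(m0)) + t * transport
    mean_t = (1.0 - t) * m0 + t * m1
    cov_t = a @ s0 @ a
    return mean_t, cov_t
```

Unlike a linear blend of the two covariances, this path moves mass along the transport map, so intermediate points are themselves Gaussians reached by displacing the starting one.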

Conditional Finite Mixtures of Poisson Distributions for Context-Dependent Neural Correlations

Aug 16, 2019

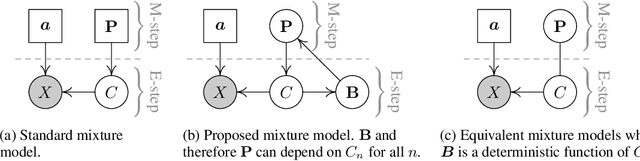

Abstract: Parallel recordings of neural spike counts have revealed the existence of context-dependent noise correlations in neural populations. Theories of population coding have also shown that such correlations can impact the information encoded by neural populations about external stimuli. Although studies have shown that these correlations often have a low-dimensional structure, it has proven difficult to capture this structure in a model that is compatible with theories of rate coding in correlated populations. To address this difficulty, we develop a novel model based on conditional finite mixtures of independent Poisson distributions. The model can be conditioned on context variables (e.g., stimuli or task variables), and the number of mixture components in the model can be cross-validated to estimate the dimensionality of the target correlations. We derive an expectation-maximization algorithm to efficiently fit the model to realistic amounts of data from large neural populations. We then demonstrate that the model successfully captures stimulus-dependent correlations in the responses of macaque V1 neurons to oriented gratings. Our model incorporates arbitrary nonlinear context-dependence, and can thus be applied to improve predictions of neural activity based on deep neural networks.
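As a rough illustration of the building block described above, the following sketch fits a plain (unconditional) finite mixture of independent Poisson distributions with expectation-maximization and computes the covariance the mixture implies. The conditioning on context variables is omitted, so this is not the paper's full model; the function names, initialization, and small stabilizing constants are assumptions made for the example.

```python
import numpy as np

def fit_poisson_mixture(counts, n_components, n_iter=100, seed=0):
    """EM for a mixture of independent Poissons.

    counts: (n_trials, n_neurons) array of spike counts.
    Returns mixture weights (K,), rates (K, n_neurons), responsibilities.
    """
    rng = np.random.default_rng(seed)
    n_trials, n_neurons = counts.shape
    # Initialize each component's rates as a random rescaling of the mean.
    scale = rng.uniform(0.5, 1.5, size=(n_components, n_neurons))
    rates = counts.mean(axis=0) * scale + 1e-3
    weights = np.full(n_components, 1.0 / n_components)
    for _ in range(n_iter):
        # E-step: log posterior up to a per-trial constant
        # (the log-factorial term is shared by all components and cancels).
        log_post = np.log(weights) + counts @ np.log(rates).T - rates.sum(axis=1)
        log_post -= log_post.max(axis=1, keepdims=True)
        resp = np.exp(log_post)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: weighted averages of the counts.
        mass = resp.sum(axis=0) + 1e-12
        weights = mass / mass.sum()
        rates = (resp.T @ counts) / mass[:, None] + 1e-6
    return weights, rates, resp

def mixture_mean_and_covariance(weights, rates):
    """Mean and covariance of the counts implied by the mixture."""
    mean = weights @ rates
    centered = rates - mean
    # Poisson variance on the diagonal plus covariance of component means.
    cov = np.diag(mean) + (centered * weights[:, None]).T @ centered
    return mean, cov
```

Although each component is an independent Poisson, the second term of the covariance is generally non-diagonal, which is how a mixture of this kind can express correlations across neurons.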

Combining mixture models with linear mixing updates: multilayer image segmentation and synthesis

May 25, 2019

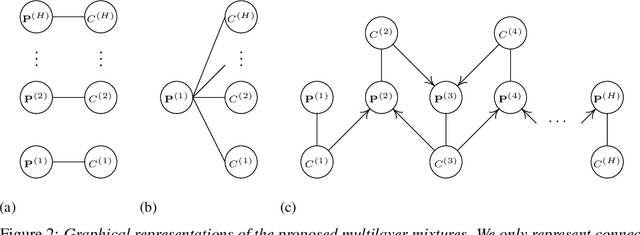

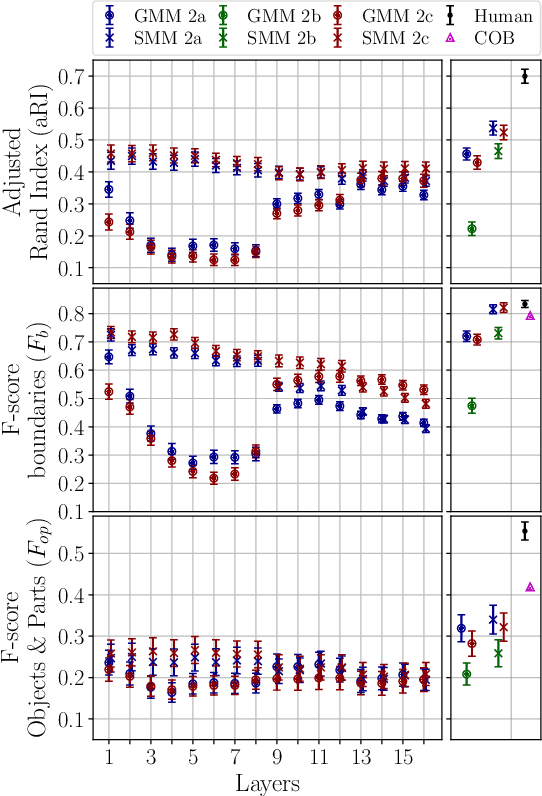

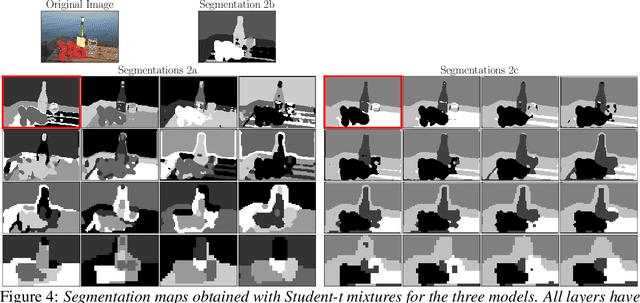

Abstract: Finite mixture models for clustering can often be improved by adding a regularization that is specific to the topology of the data. For instance, mixtures are common in unsupervised image segmentation, and typically rely on averaging the posterior mixing probabilities of spatially adjacent data points (i.e., smoothing). However, this approach has had limited success with natural images. Here we make three contributions. First, we show that a Dirichlet prior with an appropriate choice of parameters makes it possible, within the Expectation-Maximization approach, to define any linear update rule for the mixing probabilities, including many smoothing regularizations as special cases. Second, we demonstrate how to use this flexible design of the update rule to propagate segmentation information across layers of a deep network, and to train mixtures jointly across layers. Third, we compare the standard Gaussian mixture and the Student-t mixture, which is known to better capture the statistics of low-level visual features. We show that our models achieve competitive performance in natural image segmentation, with the Student-t mixtures reaching state-of-the-art boundary scores. We also demonstrate how to exploit the resulting multilayer probabilistic generative model to synthesize naturalistic images beyond uniform textures.
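The conventional smoothing step that the abstract refers to can be sketched as a linear update on a pixel grid: each pixel's mixing probabilities become the average of the posterior probabilities in its neighborhood. The example below uses a 4-neighborhood plus the pixel itself with replicated borders. These choices, and the name `smooth_mixing_probabilities`, are assumptions for illustration and do not reproduce the paper's Dirichlet-prior derivation.

```python
import numpy as np

def smooth_mixing_probabilities(resp):
    """One linear smoothing update of per-pixel mixing probabilities.

    resp: (height, width, n_components) posterior probabilities from the
          E-step, with the last axis summing to 1.
    Returns an array of the same shape, renormalized over components.
    """
    # Replicate border pixels so every pixel has four neighbors.
    padded = np.pad(resp, ((1, 1), (1, 1), (0, 0)), mode="edge")
    total = (
        padded[1:-1, 1:-1]    # the pixel itself
        + padded[:-2, 1:-1]   # neighbor above
        + padded[2:, 1:-1]    # neighbor below
        + padded[1:-1, :-2]   # neighbor to the left
        + padded[1:-1, 2:]    # neighbor to the right
    )
    return total / total.sum(axis=-1, keepdims=True)
```

In an EM loop for a spatial mixture, a step like this would replace the usual global update of the mixing weights, so that neighboring pixels are encouraged to share a component.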

An Ideal Observer Model to Probe Human Visual Segmentation of Natural Images

Jun 04, 2018

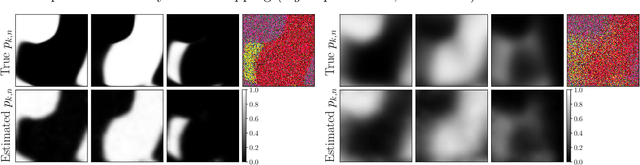

Abstract: Visual segmentation is a key perceptual function that partitions visual space and allows for detection, recognition, and discrimination of objects in complex environments. The processes underlying human segmentation of natural images are still poorly understood. Existing datasets rely on manual labeling that conflates perceptual, motor, and cognitive factors. In part, this is because we lack an ideal observer model of segmentation to guide constrained experiments. On the other hand, despite recent progress in machine learning, modern algorithms still fall short of human segmentation performance. Our goal here is two-fold: (i) to propose a model to probe human visual segmentation mechanisms and (ii) to develop an efficient algorithm for image segmentation. To this end, we propose a novel probabilistic generative model of visual segmentation that for the first time combines (1) knowledge about the sensitivity of neurons in the visual cortex to statistical regularities in natural images and (2) non-parametric Bayesian priors over segmentation maps (i.e., partitions of the visual space). We provide an algorithm for learning and inference, validate it on synthetic data, and illustrate how the two components of our model improve segmentation of natural images. We then show that the posterior distribution over segmentations captures the variability across human subjects well, indicating that our model provides a viable approach to probe human visual segmentation.