Jonathan R. Brennan

Decoding Probing: Revealing Internal Linguistic Structures in Neural Language Models using Minimal Pairs

Mar 26, 2024Abstract:Inspired by cognitive neuroscience studies, we introduce a novel `decoding probing' method that uses minimal pairs benchmark (BLiMP) to probe internal linguistic characteristics in neural language models layer by layer. By treating the language model as the `brain' and its representations as `neural activations', we decode grammaticality labels of minimal pairs from the intermediate layers' representations. This approach reveals: 1) Self-supervised language models capture abstract linguistic structures in intermediate layers that GloVe and RNN language models cannot learn. 2) Information about syntactic grammaticality is robustly captured through the first third layers of GPT-2 and also distributed in later layers. As sentence complexity increases, more layers are required for learning grammatical capabilities. 3) Morphological and semantics/syntax interface-related features are harder to capture than syntax. 4) For Transformer-based models, both embeddings and attentions capture grammatical features but show distinct patterns. Different attention heads exhibit similar tendencies toward various linguistic phenomena, but with varied contributions.

Modeling structure-building in the brain with CCG parsing and large language models

Nov 01, 2022Abstract:To model behavioral and neural correlates of language comprehension in naturalistic environments, researchers have turned to broad-coverage tools from natural-language processing and machine learning. Where syntactic structure is explicitly modeled, prior work has relied predominantly on context-free grammars (CFG), yet such formalisms are not sufficiently expressive for human languages. Combinatory Categorial Grammars (CCGs) are sufficiently expressive directly compositional models of grammar with flexible constituency that affords incremental interpretation. In this work we evaluate whether a more expressive CCG provides a better model than a CFG for human neural signals collected with fMRI while participants listen to an audiobook story. We further test between variants of CCG that differ in how they handle optional adjuncts. These evaluations are carried out against a baseline that includes estimates of next-word predictability from a Transformer neural network language model. Such a comparison reveals unique contributions of CCG structure-building predominantly in the left posterior temporal lobe: CCG-derived measures offer a superior fit to neural signals compared to those derived from a CFG. These effects are spatially distinct from bilateral superior temporal effects that are unique to predictability. Neural effects for structure-building are thus separable from predictability during naturalistic listening, and those effects are best characterized by a grammar whose expressive power is motivated on independent linguistic grounds.

Finding Syntax in Human Encephalography with Beam Search

Jun 11, 2018

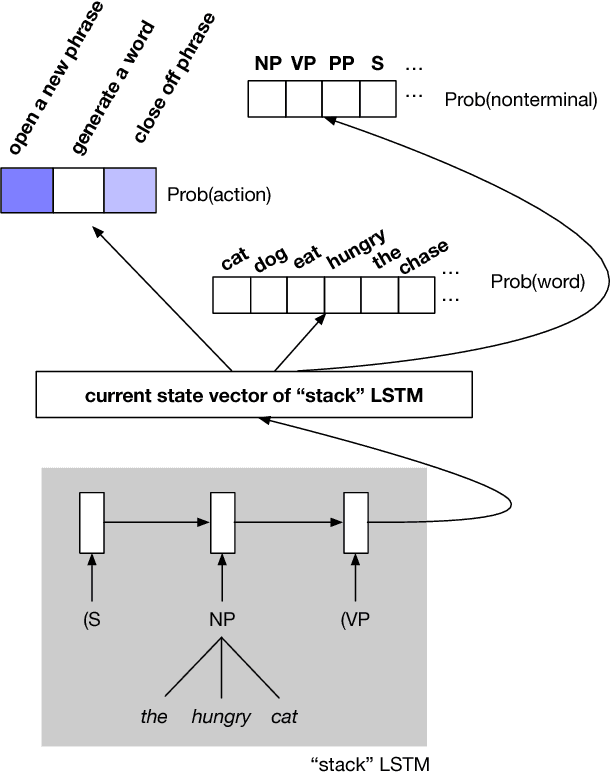

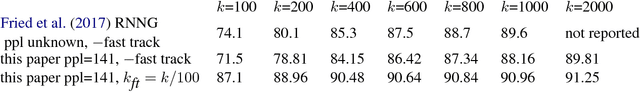

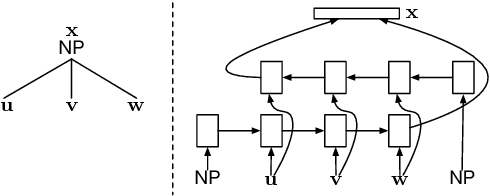

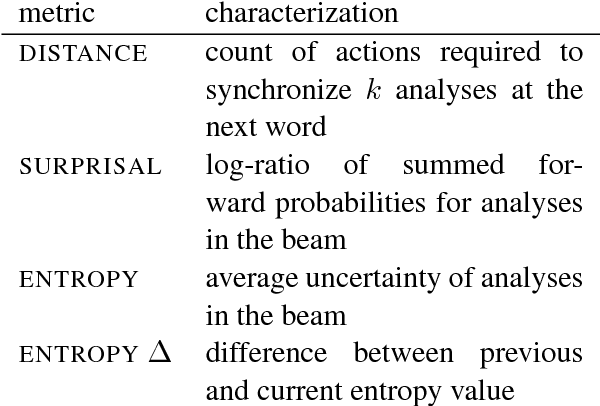

Abstract:Recurrent neural network grammars (RNNGs) are generative models of (tree,string) pairs that rely on neural networks to evaluate derivational choices. Parsing with them using beam search yields a variety of incremental complexity metrics such as word surprisal and parser action count. When used as regressors against human electrophysiological responses to naturalistic text, they derive two amplitude effects: an early peak and a P600-like later peak. By contrast, a non-syntactic neural language model yields no reliable effects. Model comparisons attribute the early peak to syntactic composition within the RNNG. This pattern of results recommends the RNNG+beam search combination as a mechanistic model of the syntactic processing that occurs during normal human language comprehension.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge