Jonathan P. Chang

Thread With Caution: Proactively Helping Users Assess and Deescalate Tension in Their Online Discussions

Dec 02, 2022

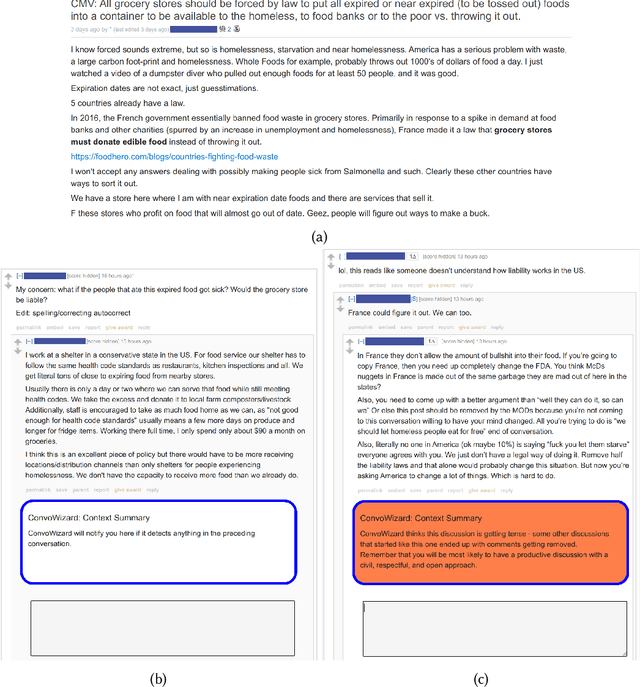

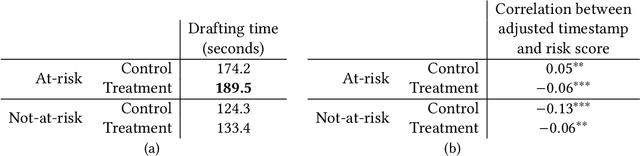

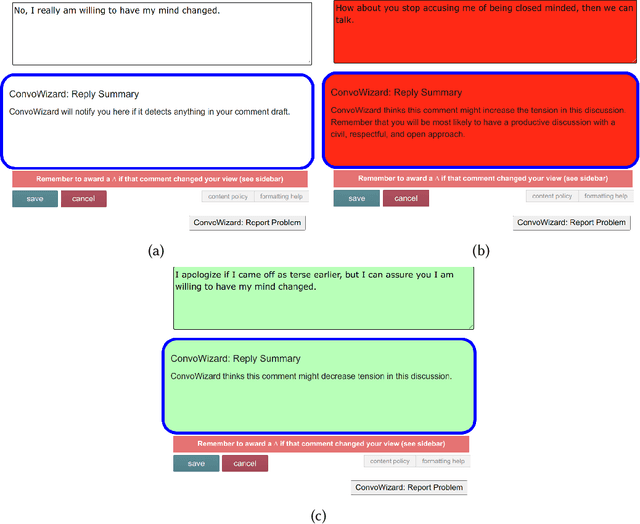

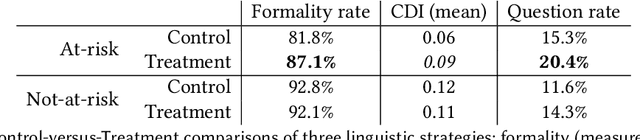

Abstract: Incivility remains a major challenge for online discussion platforms, to such an extent that even conversations between well-intentioned users can often derail into uncivil behavior. Traditionally, platforms have relied on moderators to -- with or without algorithmic assistance -- take corrective actions such as removing comments or banning users. In this work we propose a complementary paradigm that directly empowers users by proactively enhancing their awareness about existing tension in the conversation they are engaging in and actively guides them as they are drafting their replies to avoid further escalation. As a proof of concept for this paradigm, we design an algorithmic tool that provides such proactive information directly to users, and conduct a user study in a popular discussion platform. Through a mixed methods approach combining surveys with a randomized controlled experiment, we uncover qualitative and quantitative insights regarding how the participants utilize and react to this information. Most participants report finding this proactive paradigm valuable, noting that it helps them to identify tension that they may have otherwise missed and prompts them to further reflect on their own replies and to revise them. These effects are corroborated by a comparison of how the participants draft their reply when our tool warns them that their conversation is at risk of derailing into uncivil behavior versus in a control condition where the tool is disabled. These preliminary findings highlight the potential of this user-centered paradigm and point to concrete directions for future implementations.

* 37 pages, 2 figures. More information at https://www.cs.cornell.edu/~cristian/Thread_With_Caution.html

Proactive Moderation of Online Discussions: Existing Practices and the Potential for Algorithmic Support

Nov 29, 2022

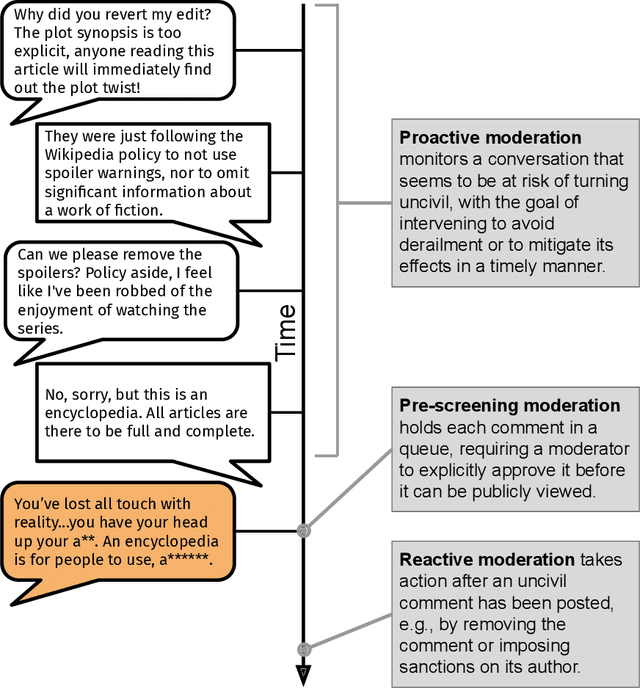

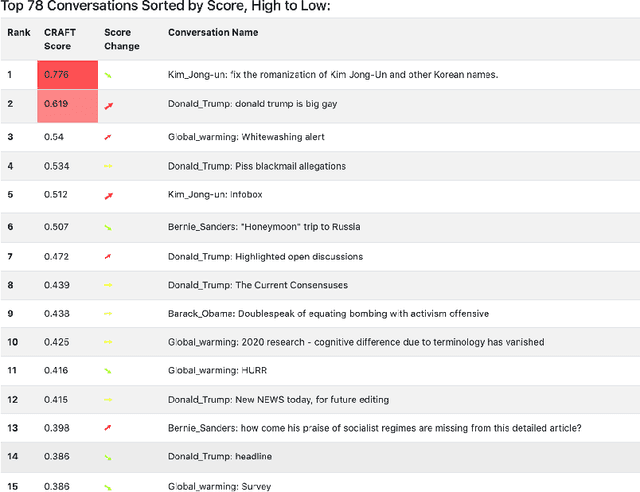

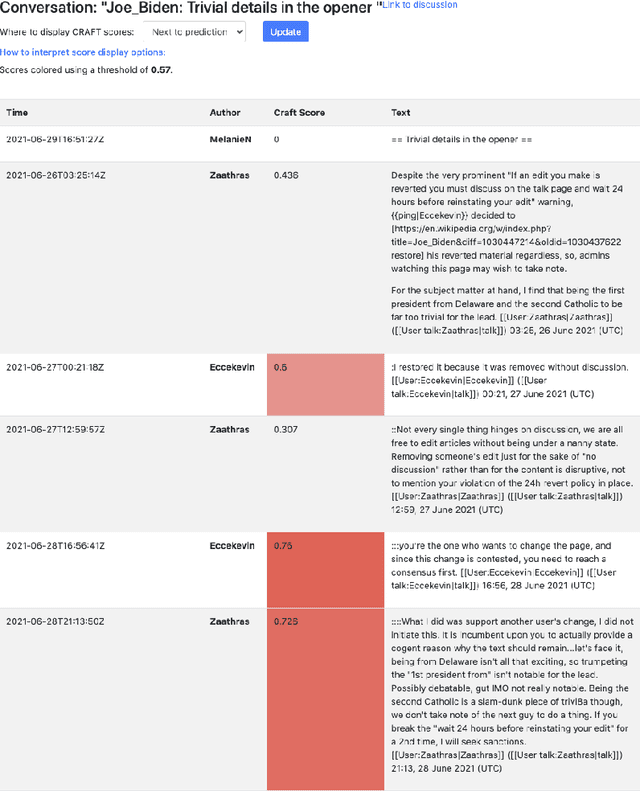

Abstract: To address the widespread problem of uncivil behavior, many online discussion platforms employ human moderators to take action against objectionable content, such as removing it or placing sanctions on its authors. This reactive paradigm of taking action against already-posted antisocial content is currently the most common form of moderation, and has accordingly underpinned many recent efforts at introducing automation into the moderation process. Comparatively less work has been done to understand other moderation paradigms -- such as proactively discouraging the emergence of antisocial behavior rather than reacting to it -- and the role algorithmic support can play in these paradigms. In this work, we investigate such a proactive framework for moderation in a case study of a collaborative setting: Wikipedia Talk Pages. We employ a mixed methods approach, combining qualitative and design components for a holistic analysis. Through interviews with moderators, we find that despite a lack of technical and social support, moderators already engage in a number of proactive moderation behaviors, such as preemptively intervening in conversations to keep them on track. Further, we explore how automation could assist with this existing proactive moderation workflow by building a prototype tool, presenting it to moderators, and examining how the assistance it provides might fit into their workflow. The resulting feedback uncovers both strengths and drawbacks of the prototype tool and suggests concrete steps towards further developing such assisting technology so it can most effectively support moderators in their existing proactive moderation workflow.

* 27 pages, 3 figures. More info at https://www.cs.cornell.edu/~cristian/Proactive_Moderation.html

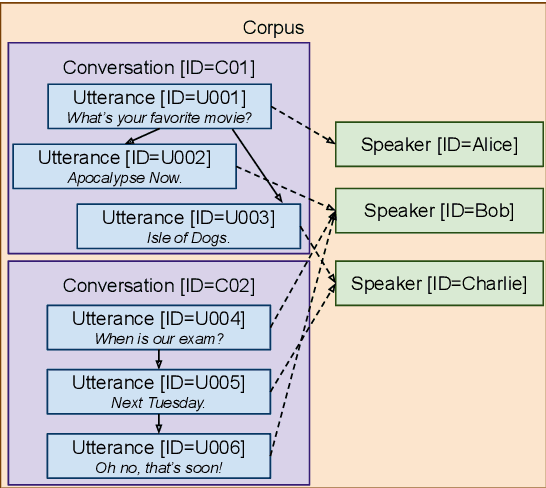

ConvoKit: A Toolkit for the Analysis of Conversations

May 08, 2020

Abstract: This paper describes the design and functionality of ConvoKit, an open-source toolkit for analyzing conversations and the social interactions embedded within. ConvoKit provides a unified framework for representing and manipulating conversational data, as well as a large and diverse collection of conversational datasets. By providing an intuitive interface for exploring and interacting with conversational data, this toolkit lowers the technical barriers for the broad adoption of computational methods for conversational analysis.

Don't Let Me Be Misunderstood: Comparing Intentions and Perceptions in Online Discussions

Apr 28, 2020

Abstract: Discourse involves two perspectives: a person's intention in making an utterance and others' perception of that utterance. The misalignment between these perspectives can lead to undesirable outcomes, such as misunderstandings, low productivity and even overt strife. In this work, we present a computational framework for exploring and comparing both perspectives in online public discussions. We combine logged data about public comments on Facebook with a survey of over 16,000 people about their intentions in writing these comments or about their perceptions of comments that others had written. Unlike previous studies of online discussions that have largely relied on third-party labels to quantify properties such as sentiment and subjectivity, our approach also directly captures what the speakers actually intended when writing their comments. In particular, our analysis focuses on judgments of whether a comment is stating a fact or an opinion, since these concepts were shown to be often confused. We show that intentions and perceptions diverge in consequential ways. People are more likely to perceive opinions than to intend them, and linguistic cues that signal how an utterance is intended can differ from those that signal how it will be perceived. Further, this misalignment between intentions and perceptions can be linked to the future health of a conversation: when a comment whose author intended to share a fact is misperceived as sharing an opinion, the subsequent conversation is more likely to derail into uncivil behavior than when the comment is perceived as intended. Altogether, these findings may inform the design of discussion platforms that better promote positive interactions.

Trouble on the Horizon: Forecasting the Derailment of Online Conversations as they Develop

Sep 03, 2019

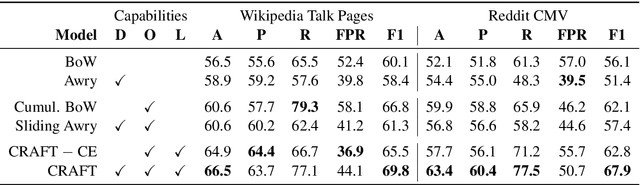

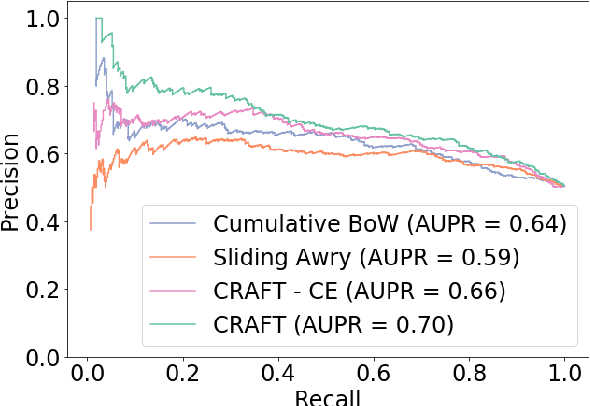

Abstract: Online discussions often derail into toxic exchanges between participants. Recent efforts mostly focused on detecting antisocial behavior after the fact, by analyzing single comments in isolation. To provide more timely notice to human moderators, a system needs to preemptively detect that a conversation is heading towards derailment before it actually turns toxic. This means modeling derailment as an emerging property of a conversation rather than as an isolated utterance-level event. Forecasting emerging conversational properties, however, poses several inherent modeling challenges. First, since conversations are dynamic, a forecasting model needs to capture the flow of the discussion, rather than properties of individual comments. Second, real conversations have an unknown horizon: they can end or derail at any time; thus a practical forecasting model needs to assess the risk in an online fashion, as the conversation develops. In this work we introduce a conversational forecasting model that learns an unsupervised representation of conversational dynamics and exploits it to predict future derailment as the conversation develops. By applying this model to two new diverse datasets of online conversations with labels for antisocial events, we show that it outperforms state-of-the-art systems at forecasting derailment.

Asking the Right Question: Inferring Advice-Seeking Intentions from Personal Narratives

Apr 02, 2019

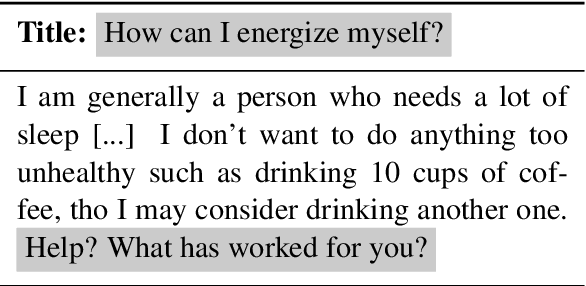

Abstract: People often share personal narratives in order to seek advice from others. To properly infer the narrator's intention, one needs to apply a certain degree of common sense and social intuition. To test the capabilities of NLP systems to recover such intuition, we introduce the new task of inferring the advice-seeking goal behind a personal narrative. We formulate this as a cloze test, where the goal is to identify which of two advice-seeking questions was removed from a given narrative. The main challenge in constructing this task is finding pairs of semantically plausible advice-seeking questions for given narratives. To address this challenge, we devise a method that exploits commonalities in experiences people share online to automatically extract pairs of questions that are appropriate candidates for the cloze task. This results in a dataset of over 20,000 personal narratives, each matched with a pair of related advice-seeking questions: one actually intended by the narrator, and the other one not. The dataset covers a very broad array of human experiences, from dating, to career options, to stolen iPads. We use human annotation to determine the degree to which the task relies on common sense and social intuition in addition to a semantic understanding of the narrative. By introducing several baselines for this new task we demonstrate its feasibility and identify avenues for better modeling the intention of the narrator.

Trajectories of Blocked Community Members: Redemption, Recidivism and Departure

Feb 22, 2019

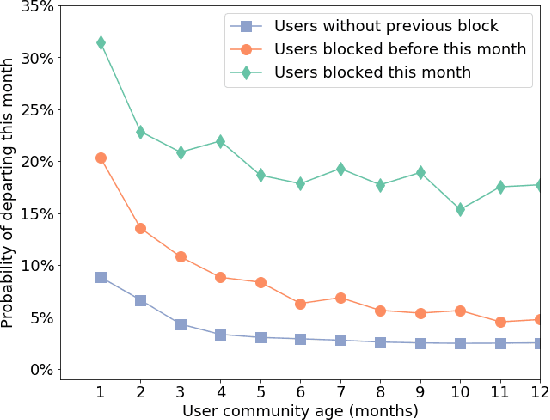

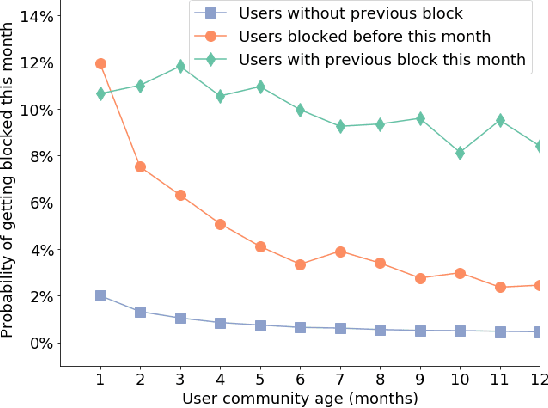

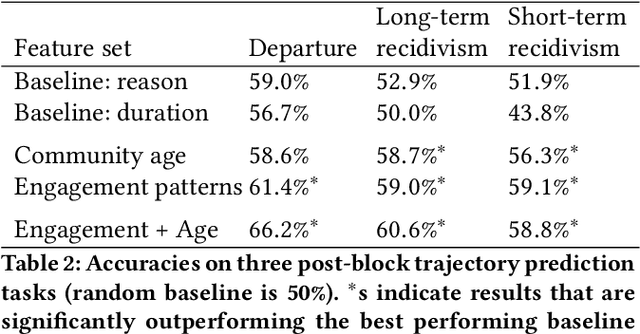

Abstract: Community norm violations can impair constructive communication and collaboration online. As a defense mechanism, community moderators often address such transgressions by temporarily blocking the perpetrator. Such actions, however, come with the cost of potentially alienating community members. Given this tradeoff, it is essential to understand to what extent, and in which situations, this common moderation practice is effective in reinforcing community rules. In this work, we introduce a computational framework for studying the future behavior of blocked users on Wikipedia. After their block expires, they can take several distinct paths: they can reform and adhere to the rules, but they can also recidivate, or straight-out abandon the community. We reveal that these trajectories are tied to factors rooted both in the characteristics of the blocked individual and in whether they perceived the block to be fair and justified. Based on these insights, we formulate a series of prediction tasks aiming to determine which of these paths a user is likely to take after being blocked for their first offense, and demonstrate the feasibility of these new tasks. Overall, this work builds towards a more nuanced approach to moderation by highlighting the tradeoffs that are in play.

Conversations Gone Awry: Detecting Early Signs of Conversational Failure

May 14, 2018

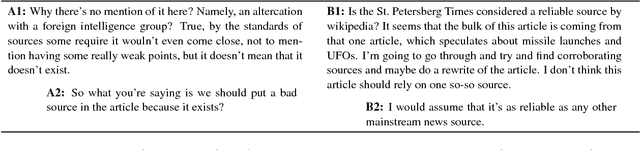

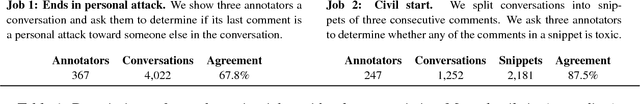

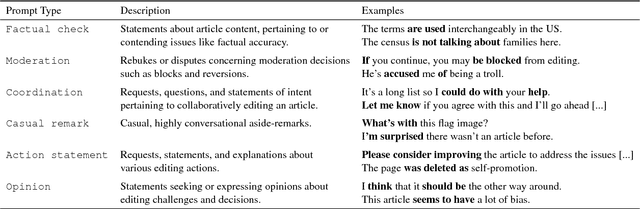

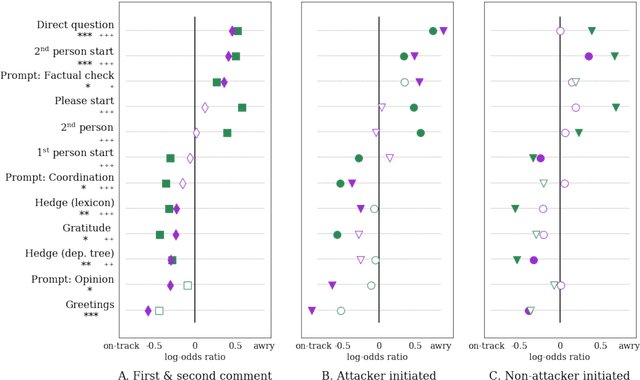

Abstract: One of the main challenges online social systems face is the prevalence of antisocial behavior, such as harassment and personal attacks. In this work, we introduce the task of predicting from the very start of a conversation whether it will get out of hand. As opposed to detecting undesirable behavior after the fact, this task aims to enable early, actionable prediction at a time when the conversation might still be salvaged. To this end, we develop a framework for capturing pragmatic devices -- such as politeness strategies and rhetorical prompts -- used to start a conversation, and analyze their relation to its future trajectory. Applying this framework in a controlled setting, we demonstrate the feasibility of detecting early warning signs of antisocial behavior in online discussions.
