Jonathan G. Richens

First do no harm: counterfactual objective functions for safe & ethical AI

Apr 27, 2022

Abstract: To act safely and ethically in the real world, agents must be able to reason about harm and avoid harmful actions. In this paper we develop the first statistical definition of harm and a framework for factoring harm into algorithmic decisions. We argue that harm is fundamentally a counterfactual quantity, and show that standard machine learning algorithms are guaranteed to pursue harmful policies in certain environments. To resolve this, we derive a family of counterfactual objective functions that robustly mitigate harm. We demonstrate our approach with a statistical model for identifying optimal drug doses. While identifying optimal doses using the causal treatment effect results in harmful treatment decisions, our counterfactual algorithm identifies doses that are far less harmful without sacrificing efficacy. Our results show that counterfactual reasoning is a key ingredient for safe and ethical AI.

Leveraging directed causal discovery to detect latent common causes

Nov 11, 2019

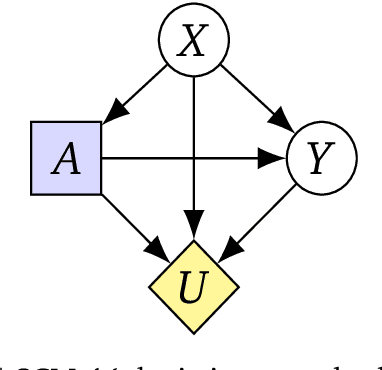

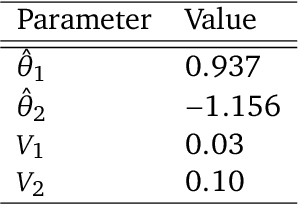

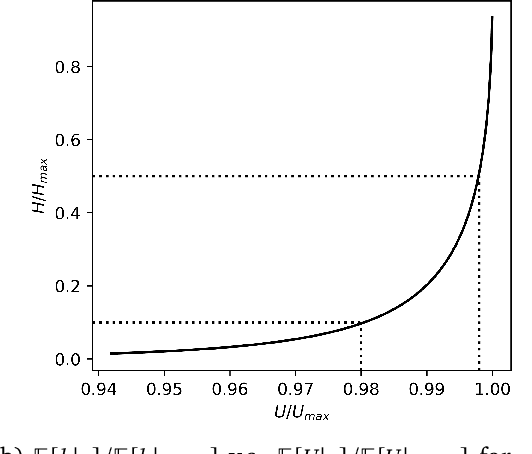

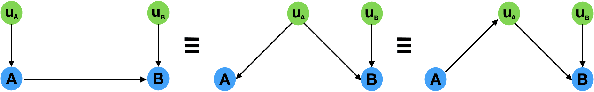

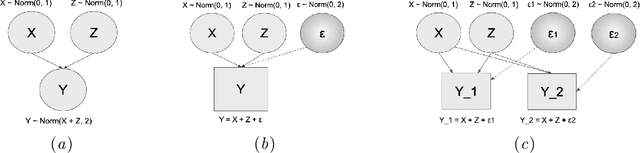

Abstract: The discovery of causal relationships is a fundamental problem in science and medicine. In recent years, many elegant approaches to discovering causal relationships between two variables from uncontrolled data have been proposed. However, most of these deal only with purely directed causal relationships and cannot detect latent common causes. Here, we devise a general method which takes a purely directed causal discovery algorithm and modifies it so that it can also detect latent common causes. The identifiability of the modified algorithm depends on the identifiability of the original, as well as on the assumption that the noise is relatively weak. We apply our method to two directed causal discovery algorithms, the Information Geometric Causal Inference of Daniusis et al. (2010) and the Kernel Conditional Deviance for Causal Inference of Mitrovic, Sejdinovic, and Teh (2018). We test extensively on synthetic data, detecting latent common causes in additive, multiplicative and complex noise regimes, and on real data, where we are able to detect known common causes. In addition to detecting latent common causes, our experiments demonstrate that both modified algorithms preserve the performance of the original directed algorithm in distinguishing directed causal relations.

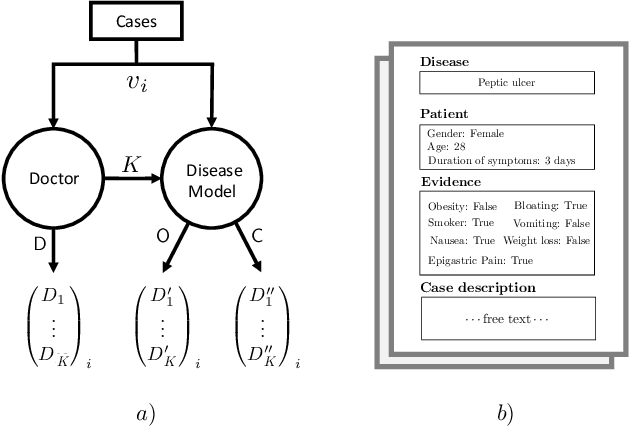

Counterfactual diagnosis

Oct 28, 2019

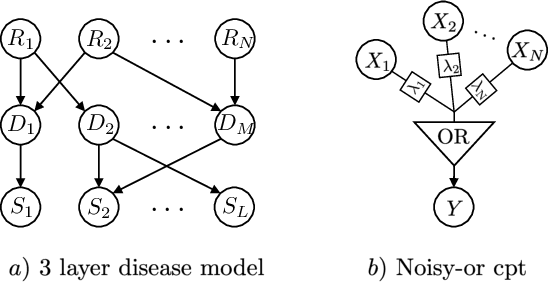

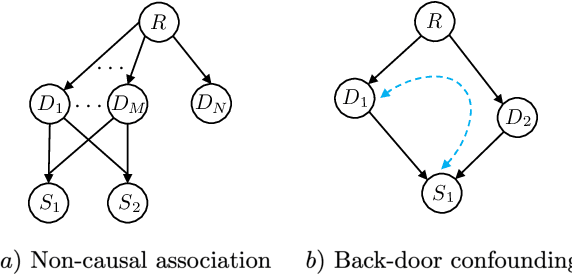

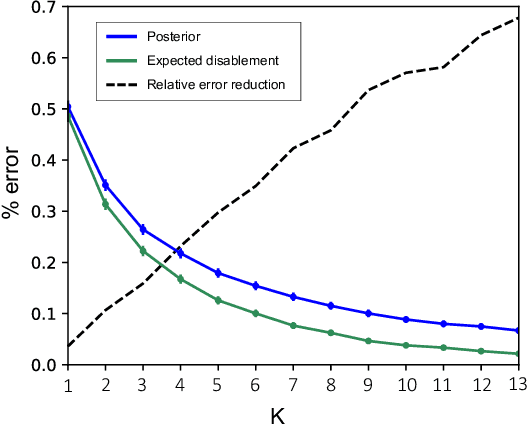

Abstract: Causal knowledge is vital for effective reasoning in science and medicine. In medical diagnosis, for example, a doctor aims to explain a patient's symptoms by determining the diseases *causing* them. However, all previous approaches to machine-learning-assisted diagnosis, including deep learning and model-based Bayesian approaches, learn by association and do not distinguish correlation from causation. Here, we propose a new diagnostic algorithm based on counterfactual inference which captures the causal aspect of diagnosis overlooked by previous approaches. Using a statistical disease model, which describes the relations between hundreds of diseases, symptoms and risk factors, we compare our counterfactual algorithm to the standard Bayesian diagnostic algorithm, and test these against a cohort of 44 doctors. We use 1671 clinical vignettes created by a separate panel of doctors to benchmark performance. Each vignette provides a non-exhaustive list of symptoms and medical history simulating a single presentation of a disease. The algorithms and doctors are tasked with determining the underlying disease for each vignette from symptom and medical history information alone. While the Bayesian algorithm achieves an accuracy comparable to the average doctor, placing in the top 48% of doctors in our cohort, our counterfactual algorithm places in the top 25% of doctors, achieving expert clinical accuracy. Our results demonstrate the advantage of counterfactual over associative reasoning in a complex real-world task, and show that counterfactual reasoning is a vital missing ingredient for applying machine learning to medical diagnosis.

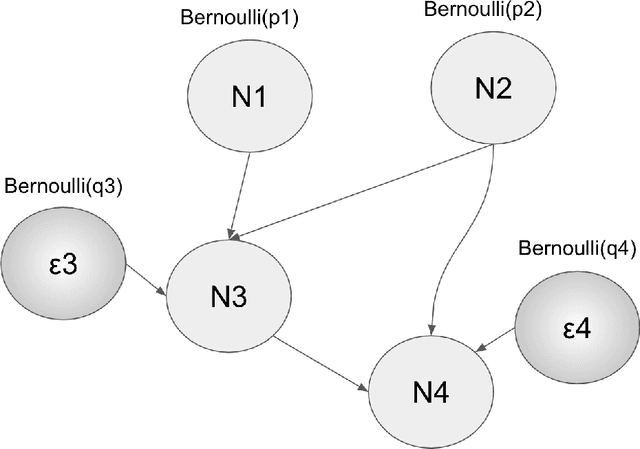

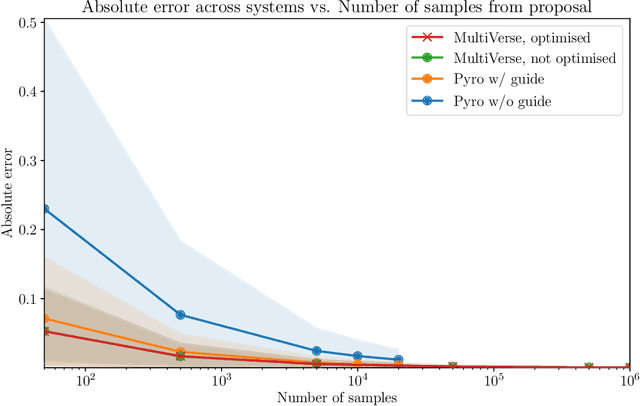

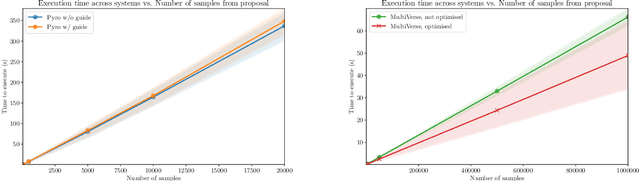

MultiVerse: Causal Reasoning using Importance Sampling in Probabilistic Programming

Oct 17, 2019

Abstract: We elaborate on using importance sampling for causal reasoning, in particular for counterfactual inference. We show how this can be implemented natively in probabilistic programming. By considering the structure of the counterfactual query, one can significantly optimise the inference process. We also consider design choices to enable further optimisations. We introduce MultiVerse, a probabilistic programming prototype engine for approximate causal reasoning. We provide experimental results and compare with Pyro, an existing probabilistic programming framework that offers some causal reasoning tools.
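As a rough illustration of the general idea (not MultiVerse's API or the authors' implementation), counterfactual inference by importance sampling can be sketched on a toy structural model. Everything here is an assumption made for the example: the model `Y = 2*X + U` with `U ~ N(0, 1)`, the Gaussian observation noise `obs_sigma`, and the function name `counterfactual_mean`. Noise samples are drawn from the prior, weighted by how well they explain the observed outcome (abduction), and then reused under the intervention (action and prediction).

```python
import math
import random


def counterfactual_mean(x_obs, y_obs, x_cf, n_samples=100_000,
                        obs_sigma=0.5, seed=0):
    """Estimate E[Y under do(X = x_cf) | X = x_obs, Y = y_obs].

    Toy structural model (an assumption for this sketch):
        U ~ Normal(0, 1)            exogenous noise
        Y = 2 * X + U               structural equation
        y_obs ~ Normal(Y, obs_sigma)  noisy observation of Y
    """
    rng = random.Random(seed)
    total_weight = 0.0
    weighted_sum = 0.0
    for _ in range(n_samples):
        # Abduction: propose the exogenous noise from its prior ...
        u = rng.gauss(0.0, 1.0)
        # ... and weight it by the likelihood of the factual observation.
        y_factual = 2.0 * x_obs + u
        weight = math.exp(-0.5 * ((y_obs - y_factual) / obs_sigma) ** 2)
        # Action + prediction: reuse the same noise under do(X = x_cf).
        y_counterfactual = 2.0 * x_cf + u
        total_weight += weight
        weighted_sum += weight * y_counterfactual
    # Self-normalised importance sampling estimate.
    return weighted_sum / total_weight


if __name__ == "__main__":
    estimate = counterfactual_mean(x_obs=1.0, y_obs=3.0, x_cf=0.0)
    print(f"Estimated counterfactual mean of Y: {estimate:.3f}")
```

For this linear-Gaussian toy model the posterior mean of `U` is available in closed form, `(y_obs - 2*x_obs) / (1 + obs_sigma**2)`, so the estimate can be checked against it. Sampling the noise once and reusing it in both the factual and counterfactual worlds is what distinguishes a counterfactual query from a plain interventional one.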
