John-John Cabibihan

Safety experiments for small robots investigating the potential of soft materials in mitigating the harm to the head due to impacts

Apr 21, 2019

Abstract: There is a growing interest in using social robots in the therapy of children with autism due to their effectiveness in improving outcomes. However, children on the spectrum exhibit challenging behaviors that need to be considered when designing robots for them. A child could involuntarily throw a small social robot during a meltdown, and the robot could hit another person's head and cause harm (e.g., concussion). In this paper, the application of soft materials is investigated for its potential in attenuating the head's linear acceleration upon impact. The thickness and storage modulus of three different soft materials were considered as the control factors, while the noise factor was the impact velocity. The design of experiments was based on the Taguchi method. A total of 27 experiments were conducted on a purpose-built dummy head setup that reports the linear acceleration of the head. ANOVA tests were performed to analyze the data. The findings showed that the control factors are not statistically significant in attenuating the response. The optimal values of the control factors were identified using the signal-to-noise (S/N) ratio optimization technique. Confirmation runs at the optimal parameters (i.e., thickness of 3 mm and 5 mm) showed a better response compared to other conditions. Designers of social robots should consider applying soft materials to their designs, as it helps reduce the potential harm to the head.
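As a rough illustration of the S/N ratio optimization mentioned in the abstract, the sketch below computes the standard Taguchi "smaller-the-better" ratio, S/N = -10 log10(mean(y²)), and picks the factor level with the highest mean ratio. The abstract does not state which S/N formulation the authors used, so the smaller-the-better form is an assumption (it is the usual choice when the response, here head acceleration, should be minimized). The function names and all numeric values are hypothetical and are not data from the paper.

```python
import math


def sn_smaller_the_better(responses):
    """Taguchi smaller-the-better S/N ratio in dB: -10 * log10(mean(y^2))."""
    if not responses:
        raise ValueError("at least one response is required")
    mean_square = sum(y * y for y in responses) / len(responses)
    return -10.0 * math.log10(mean_square)


def best_level(responses_by_level):
    """Return the factor level whose responses give the highest S/N ratio."""
    return max(
        responses_by_level,
        key=lambda level: sn_smaller_the_better(responses_by_level[level]),
    )


# Hypothetical peak accelerations (in g) per thickness level, one value per
# impact velocity (the noise factor). These numbers are illustrative only.
example = {
    "1 mm": [80.0, 95.0, 110.0],
    "3 mm": [60.0, 70.0, 85.0],
    "5 mm": [55.0, 68.0, 80.0],
}
chosen = best_level(example)
```

A higher S/N ratio means the response is both smaller and less sensitive to the noise factor, which is why the level with the maximum ratio is selected.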

Illusory Sense of Human Touch from a Warm and Soft Artificial Hand

Feb 27, 2015

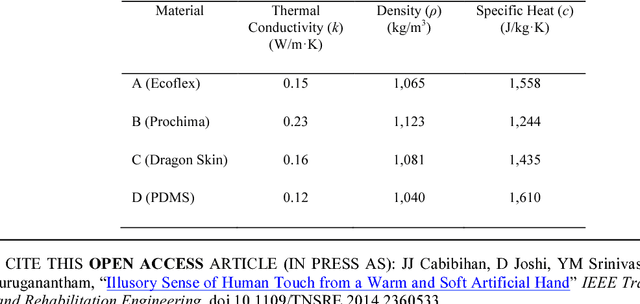

Abstract: To touch and be touched are vital to human development, well-being, and relationships. However, to those who have lost their arms and hands due to accident or war, touching becomes a serious concern that often leads to psychosocial issues and social stigma. In this paper, we demonstrate that the touch from a warm and soft rubber hand can be perceived by another person as if the touch were coming from a human hand. We describe a three-step process toward this goal. First, we had participants select artificial skin samples according to their preferred warmth and softness characteristics. At room temperature, the preferred warmth was found to be 28.4 °C at the skin surface of a soft silicone rubber material that has a Shore durometer value of 30 on the OO scale. Second, we developed a process to create a rubber hand replica of a human hand. To compare the skin softness of a human hand and artificial hands, a robotic indenter was employed to produce a softness map by recording the displacement data when a constant indentation force of 1 N was applied to 780 data points on the palmar side of the hand. Results showed that an artificial hand with a skeletal structure is as soft as a human hand. Lastly, the participants' arms were touched with human and artificial hands, but they were prevented from seeing the hand that touched them. Receiver operating characteristic curve analysis suggests that a warm and soft artificial hand can create an illusion that the touch is from a human hand. These findings open up possibilities for prosthetic and robotic hands that are lifelike and more socially acceptable.
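To make the receiver operating characteristic (ROC) analysis mentioned above more concrete, here is a minimal sketch of how the area under the ROC curve (AUC) can be computed from participant ratings. The abstract does not describe the exact procedure, so the rating scale, the function name, and the sample values are all assumptions made for illustration. The sketch uses the rank-based identity: AUC is the probability that a randomly chosen "human touch" trial is rated higher than a randomly chosen "artificial touch" trial, with ties counted as one half.

```python
def roc_auc(human_ratings, artificial_ratings):
    """Area under the ROC curve via pairwise comparison (ties count 0.5)."""
    if not human_ratings or not artificial_ratings:
        raise ValueError("both groups need at least one rating")
    wins = 0.0
    for h in human_ratings:
        for a in artificial_ratings:
            if h > a:
                wins += 1.0
            elif h == a:
                wins += 0.5
    return wins / (len(human_ratings) * len(artificial_ratings))


# Hypothetical "how human did the touch feel" ratings on a 1-5 scale.
# These values are illustrative only and are not data from the paper.
human = [4, 5, 3, 4]
artificial = [4, 3, 5, 4]
auc = roc_auc(human, artificial)
```

Under this reading, an AUC close to 0.5 would indicate that participants could not reliably tell the artificial hand from the human hand, while an AUC close to 1.0 would indicate that the two were easy to tell apart.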

Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism

Nov 02, 2013

Abstract: This paper reviews the use of socially interactive robots to assist in the therapy of children with autism. The extent to which the robots were successful in helping the children with their social, emotional, and communication deficits was investigated. Child-robot interactions were scrutinized with respect to the different target behaviors that are to be elicited from a child during therapy. These behaviors were thoroughly examined with respect to a child's developmental needs. Most importantly, experimental data from the surveyed works were extracted and analyzed in terms of the target behaviors and how each robot was used during a therapy session to achieve these behaviors. The study concludes by categorizing the different therapeutic roles that these robots were observed to play, and highlights the important design features that enable them to achieve high levels of effectiveness in autism therapy.

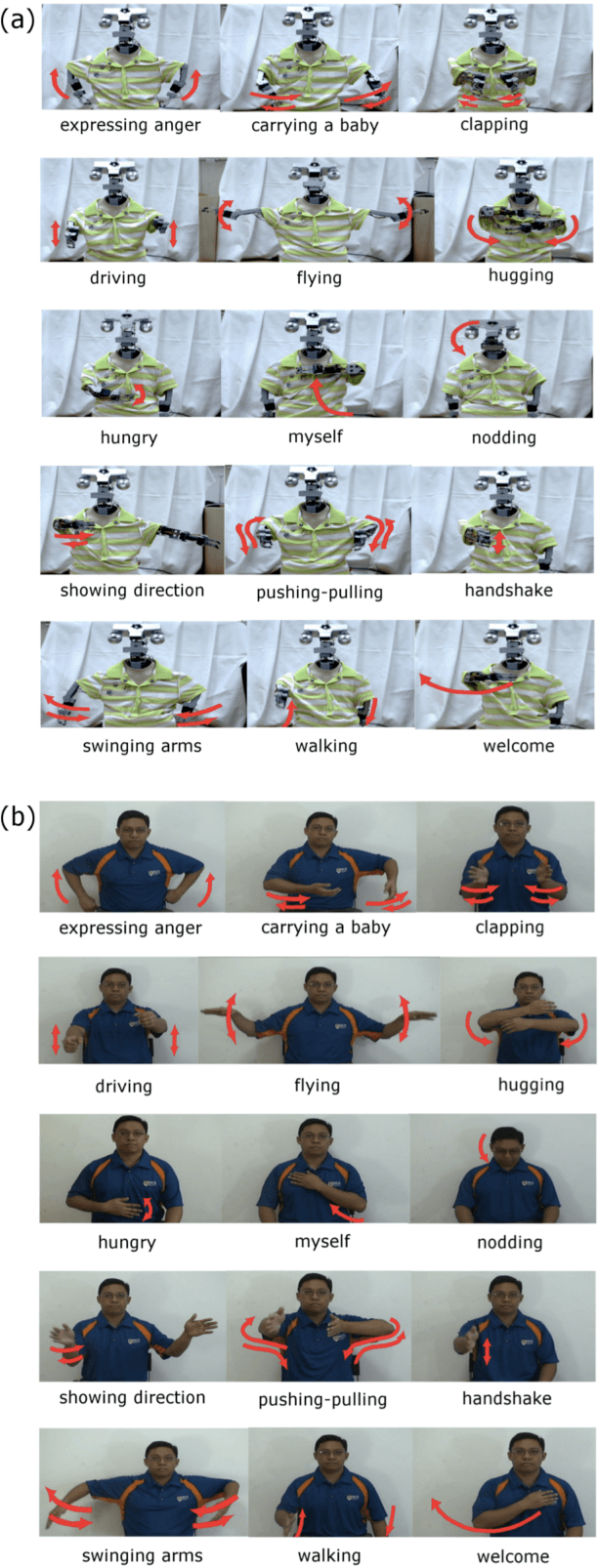

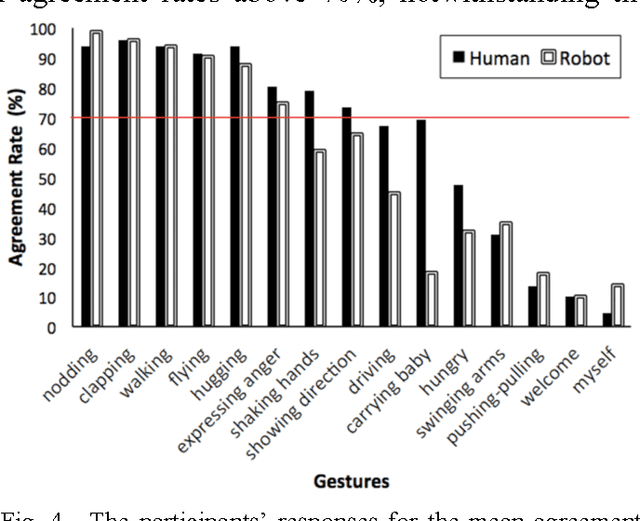

Human-Recognizable Robotic Gestures

Dec 26, 2012

Abstract: For robots to be accommodated in human spaces and in humans' daily activities, robots should be able to understand messages from the human conversation partner. In the same light, humans must also understand the messages that are being communicated by robots, including the non-verbal ones. We conducted a web-based video study wherein participants gave interpretations of the iconic gestures and emblems that were produced by an anthropomorphic robot. Out of the 15 gestures presented, we found 6 robotic gestures that can be accurately recognized by the human observer. These were nodding, clapping, hugging, expressing anger, walking, and flying. We reviewed these gestures for their meaning in the literature on human and animal behavior. We conclude by discussing the possible implications of these gestures for the design of social robots that are intended to have engaging interactions with humans.

* 21 pages, 5 figures

Telerobotic Pointing Gestures Shape Human Spatial Cognition

Jul 08, 2012

Abstract: This paper aimed to explore whether human beings can understand gestures produced by telepresence robots. If so, they could derive the meaning conveyed in telerobotic gestures when processing spatial information. We conducted two experiments over Skype in the present study. Participants were presented with a robotic interface that had arms, which were teleoperated by an experimenter. The robot could point to virtual locations that represented certain entities. In Experiment 1, the experimenter described spatial locations of fictitious objects sequentially in two conditions: speech condition (SO, verbal descriptions clearly indicated the spatial layout) and speech and gesture condition (SR, verbal descriptions were ambiguous but accompanied by robotic pointing gestures). Participants were then asked to recall the objects' spatial locations. We found that the number of spatial locations recalled in the SR condition was on par with that in the SO condition, suggesting that telerobotic pointing gestures compensated for ambiguous speech during the processing of spatial information. In Experiment 2, the experimenter described spatial locations non-sequentially in the SR and SO conditions. Surprisingly, the number of spatial locations recalled in the SR condition was even higher than that in the SO condition, suggesting that telerobotic pointing gestures were more powerful than speech in conveying spatial information when information was presented in an unpredictable order. The findings provide evidence that human beings are able to comprehend telerobotic gestures and, importantly, integrate these gestures with co-occurring speech. This work promotes engaging remote collaboration among humans through a robot intermediary.

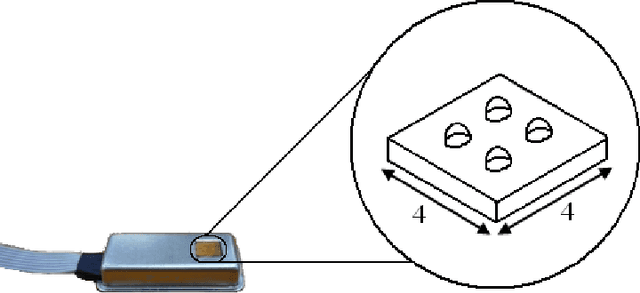

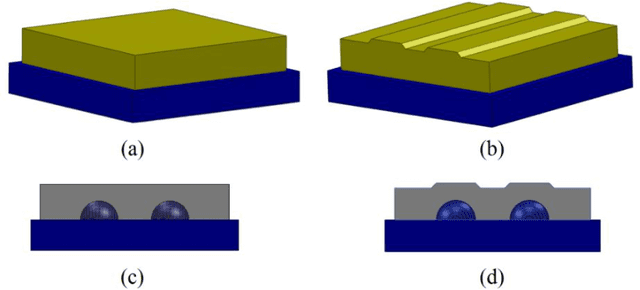

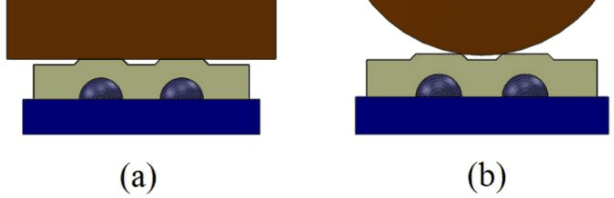

Artificial Skin Ridges Enhance Local Tactile Shape Discrimination

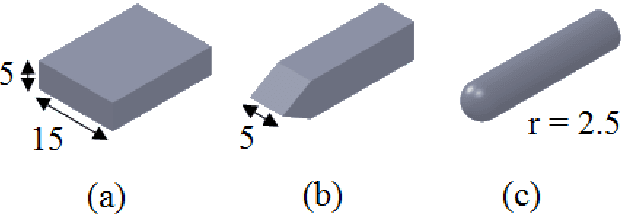

Sep 16, 2011

Abstract: One of the fundamental requirements for an artificial hand to successfully grasp and manipulate an object is to be able to distinguish different objects' shapes and, more specifically, the objects' surface curvatures. In this study, we investigate the possibility of enhancing the curvature detection of embedded tactile sensors by proposing a ridged fingertip structure, simulating human fingerprints. In addition, a curvature detection approach based on machine learning methods is proposed to provide the embedded sensors with the ability to discriminate the surface curvature of different objects. For this purpose, a set of experiments was carried out to collect tactile signals from a 2 × 2 tactile sensor array; the signals were then processed and used to train the learning algorithms. To achieve the best possible performance for our machine learning approach, three different learning algorithms, Naïve Bayes (NB), Artificial Neural Networks (ANN), and Support Vector Machines (SVM), were implemented and compared for various parameters. Finally, the most accurate method was selected to evaluate the proposed skin structure in the recognition of three different curvatures. The results showed an accuracy rate of 97.5% in surface curvature discrimination.

* 10 figures

Patient-Specific Prosthetic Fingers by Remote Collaboration - A Case Study

May 05, 2011

Abstract: The concealment of amputation through prosthesis usage can shield an amputee from social stigma and help improve the emotional healing process, especially at the early stages of hand or finger loss. However, the traditional techniques in prosthesis fabrication work against this, as patients need numerous visits to the clinic for measurements, fitting, and follow-ups. This paper presents a method for constructing a prosthetic finger through online collaboration with the designer. The main input from the amputee comes from the Computed Tomography (CT) data in the region of the affected and the non-affected fingers. These data are sent over the internet, and the prosthesis is constructed using visualization, computer-aided design, and manufacturing tools. The finished product is then shipped to the patient. A case study with a single patient having an amputated ring finger at the proximal interphalangeal joint shows that the proposed method has the potential to address the patient's psychosocial concerns and minimize the exposure of the finger loss to the public.

* Open Access article
