Johann Prankl

High Dynamic Range SLAM with Map-Aware Exposure Time Control

Apr 20, 2018

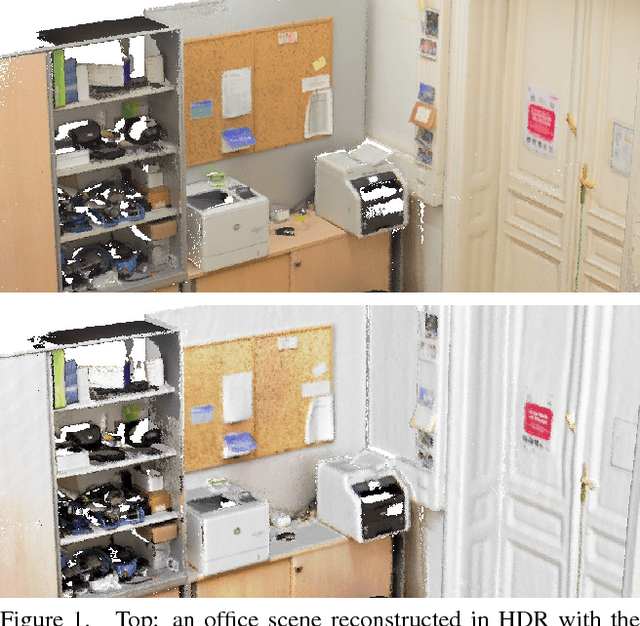

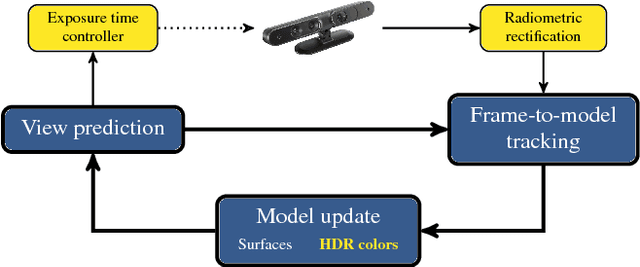

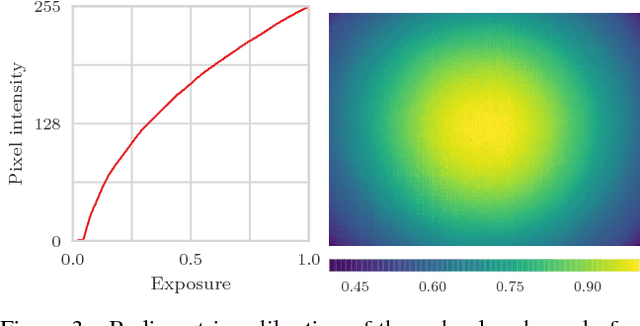

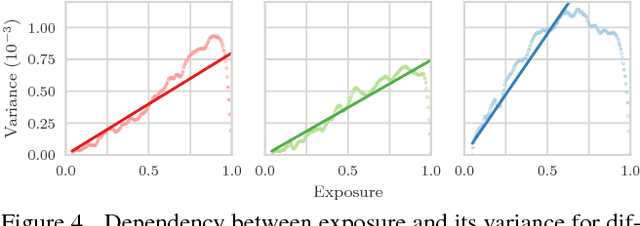

Abstract: Research in dense online 3D mapping has mostly focused on the geometric accuracy and spatial extent of the reconstructions. Their color appearance is often neglected, leading to inconsistent colors and noticeable artifacts. We address this by extending a state-of-the-art SLAM system to accumulate colors in HDR space. We replace the simplistic pixel-intensity averaging scheme with HDR color fusion rules tailored to the incremental nature of SLAM and with a noise model suitable for off-the-shelf RGB-D cameras. Our main contribution is a map-aware exposure time controller. It makes decisions based on the global state of the map and the predicted camera motion, attempting to maximize the information gain of each observation. We report a set of experiments demonstrating the improved texture quality and the advantages of using a custom controller that is tightly integrated into the mapping loop.
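The two ideas in this abstract, incremental HDR color fusion and an exposure controller driven by the map state, can be illustrated with a minimal sketch. It is not the authors' method: it assumes a linear camera response (radiance estimated as intensity divided by exposure time), a hypothetical hat-shaped weight in place of the paper's RGB-D noise model, and a hypothetical gain function that favors exposures which would well-expose map elements that are still poorly observed. `HdrCell`, `observation_weight`, `fuse` and `choose_exposure` are illustrative names only.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class HdrCell:
    """Running HDR estimate for one color channel of one map element (e.g. a voxel)."""
    radiance: float = 0.0  # fused radiance estimate (intensity per unit exposure time)
    weight: float = 0.0    # accumulated confidence from all fused observations

def observation_weight(intensity: float, low: float = 5.0, high: float = 250.0) -> float:
    """Hypothetical hat-shaped weight: trust mid-range pixels, ignore under- and over-exposed ones."""
    if intensity <= low or intensity >= high:
        return 0.0
    mid = 0.5 * (low + high)
    return 1.0 - abs(intensity - mid) / (mid - low)

def fuse(cell: HdrCell, intensity: float, exposure_time: float) -> HdrCell:
    """Fold one pixel observation into the cell as a running weighted average,
    assuming a linear camera response."""
    w = observation_weight(intensity)
    if w == 0.0:
        return cell  # saturated or too dark: carries no usable radiance information
    e = intensity / exposure_time  # linear response: intensity ~ radiance * exposure_time
    total = cell.weight + w
    return HdrCell((cell.weight * cell.radiance + w * e) / total, total)

def choose_exposure(visible: list[HdrCell], candidates: list[float]) -> float:
    """Pick the candidate exposure time whose predicted observations add the most
    weight, favoring map elements that are still poorly observed (hypothetical gain)."""
    def gain(t: float) -> float:
        total = 0.0
        for cell in visible:
            predicted = min(cell.radiance * t, 255.0)  # clip at the sensor's saturation level
            total += observation_weight(predicted) / (1.0 + cell.weight)
        return total
    return max(candidates, key=gain)

# Usage: the same surface seen at two exposures yields one consistent radiance estimate.
cell = HdrCell()
cell = fuse(cell, 100.0, 0.01)  # mid-range pixel at 10 ms
cell = fuse(cell, 200.0, 0.02)  # brighter image of the same surface at 20 ms
cell = fuse(cell, 255.0, 0.04)  # saturated pixel: ignored
```

Dividing by the exposure time is what lets observations taken at different exposures be averaged in a common radiance space; the gain term divides by `1 + weight` so that, under these assumptions, the controller prefers exposures that improve map elements with little accumulated confidence.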

Where to look first? Behaviour control for fetch-and-carry missions of service robots

Oct 06, 2015

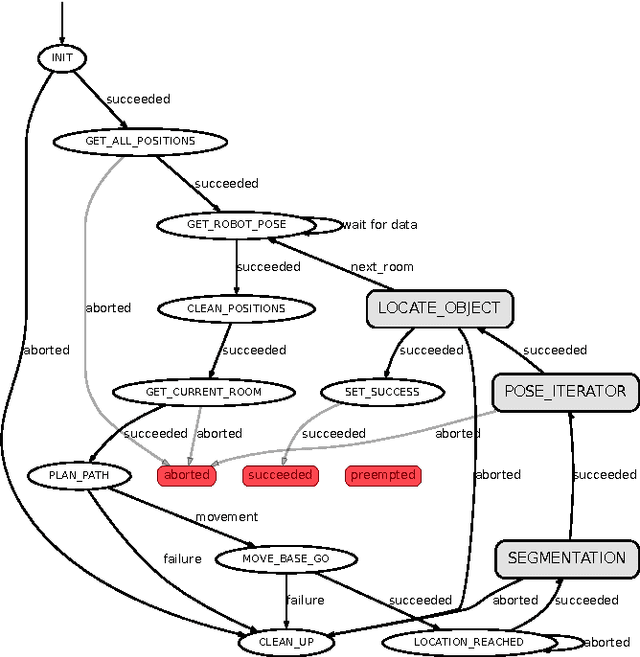

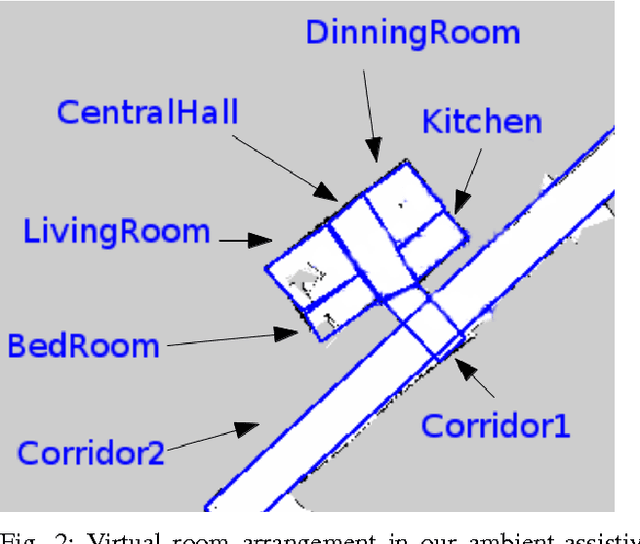

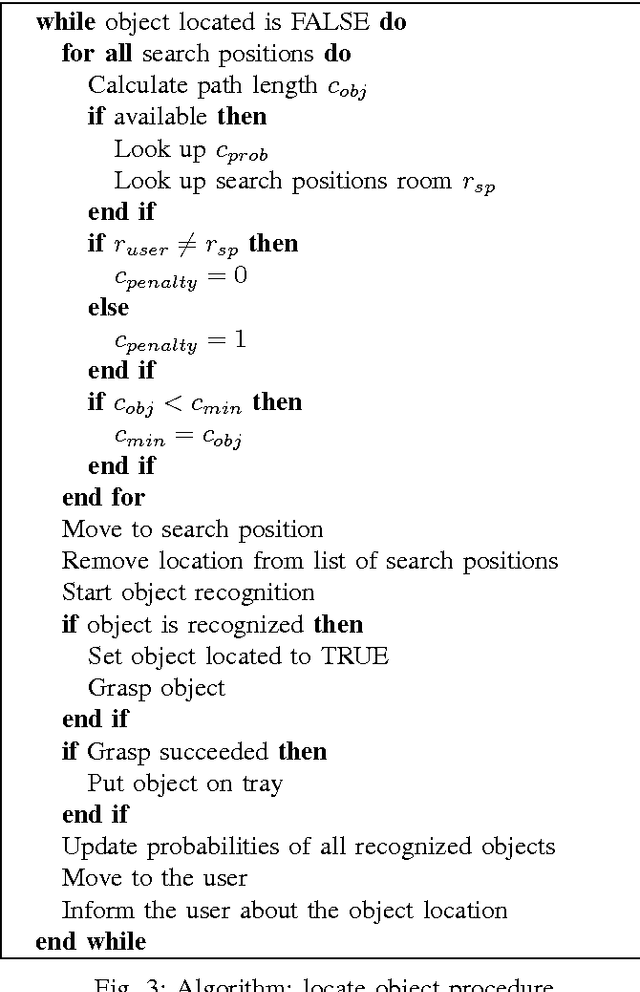

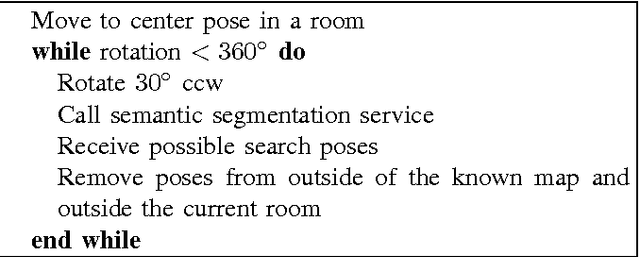

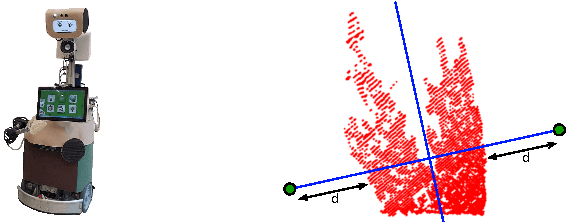

Abstract: This paper presents the behaviour control of a service robot for intelligent object search in a domestic environment. A major challenge in service robotics is to enable fetch-and-carry missions that satisfy the user in terms of both efficiency and human-oriented perception. The proposed behaviour controller provides an informed search based on a semantic segmentation framework for indoor scenes and integrates it with object recognition and grasping. Instead of relying on manually annotated search positions, the framework automatically suggests likely locations to search for an object based on contextual information, e.g. next to tables and shelves. In a preliminary set of experiments we demonstrate that this behaviour control is as efficient as using manually annotated locations. Moreover, we argue that our approach will reduce the labour involved in programming fetch-and-carry tasks for service robots and that it will be perceived as more human-oriented.
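To make "suggests likely locations based on contextual information" concrete, here is a minimal sketch, not the authors' controller. It assumes the semantic segmentation output has been projected to 2D points `(x, y, label)` in metres, and it ranks grid cells by how many points carry a support-surface label. The label set `SUPPORT_LABELS`, the function `suggest_search_locations` and its parameters are hypothetical; a real system would additionally turn each returned cell into a reachable viewing pose next to it.

```python
from __future__ import annotations
from collections import Counter

# Hypothetical labels for surfaces on which objects are typically placed.
SUPPORT_LABELS = {"table", "shelf", "counter"}

def suggest_search_locations(points: list[tuple[float, float, str]],
                             cell_size: float = 0.5,
                             top_k: int = 3,
                             min_points: int = 20) -> list[tuple[float, float]]:
    """Rank 2D grid cells by the number of support-surface points they contain.

    points: (x, y, label) tuples in metres, e.g. from a semantic segmentation.
    Returns up to top_k cell centres (x, y), densest first; cells with fewer
    than min_points labelled points are treated as noise and skipped.
    """
    counts: Counter[tuple[int, int]] = Counter()
    for x, y, label in points:
        if label in SUPPORT_LABELS:
            counts[(int(x // cell_size), int(y // cell_size))] += 1
    ranked = [cell for cell, n in counts.most_common() if n >= min_points][:top_k]
    return [((i + 0.5) * cell_size, (j + 0.5) * cell_size) for i, j in ranked]
```

Because the ranking uses only the semantic labels, no search positions have to be annotated by hand, which is the property the abstract emphasizes; the grid resolution and point threshold are tuning assumptions.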

Object Modelling with a Handheld RGB-D Camera

May 21, 2015

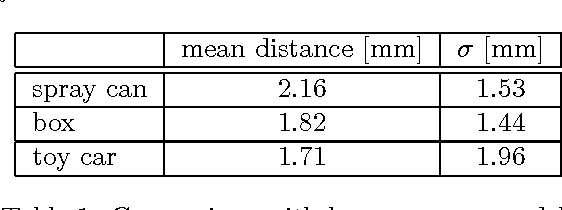

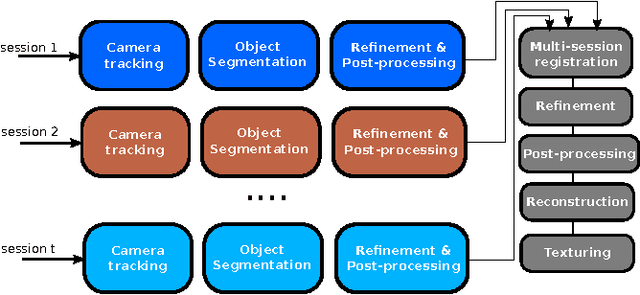

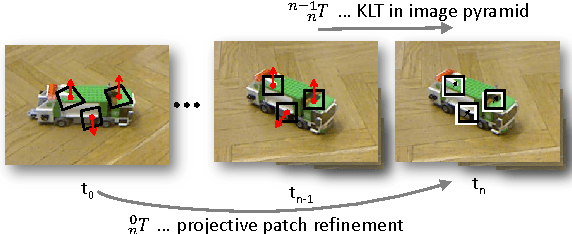

Abstract: This work presents a flexible system to reconstruct 3D models of objects captured with an RGB-D sensor. A major advantage of the method is that the reconstruction pipeline allows the user to acquire a full 3D model of the object. This is achieved by acquiring several partial 3D models in different sessions, which are automatically merged into a full model. In addition, the 3D models acquired by our system can be used directly by state-of-the-art object instance recognition and object tracking modules, providing object-perception capabilities for applications such as human-object interaction analysis or robot grasping. The system imposes no constraints on the appearance of objects (textured or untextured) or on the modelling setup (moving camera with a static object, or a turntable setup). The proposed reconstruction system has been used to model a large number of objects, resulting in metrically accurate and visually appealing 3D models.

Find my mug: Efficient object search with a mobile robot using semantic segmentation

Apr 23, 2014

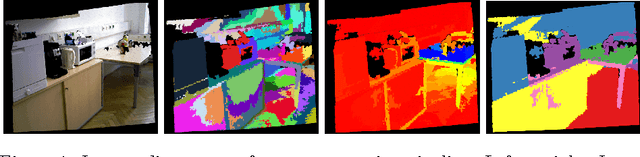

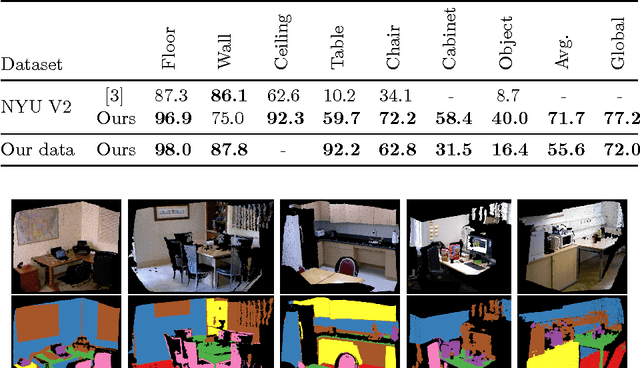

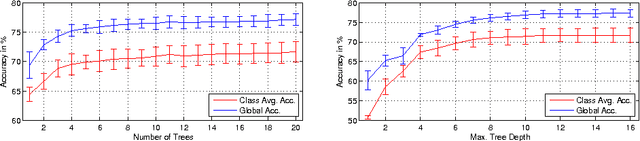

Abstract: In this paper, we propose an efficient semantic segmentation framework for indoor scenes, tailored for use on a mobile robot. Semantic segmentation can help robots gain a reasonable understanding of their environment, but to reach this goal the algorithms need to be not only accurate but also fast and robust. We therefore developed an optimized 3D point cloud processing framework based on a Randomized Decision Forest that achieves competitive results at sufficiently high frame rates. We evaluate our method on the popular NYU Depth dataset and on our own data, and demonstrate its feasibility by deploying it on a mobile service robot, where our results allowed us to optimize an object search procedure.

Visual Room-Awareness for Humanoid Robot Self-Localization

Apr 22, 2013

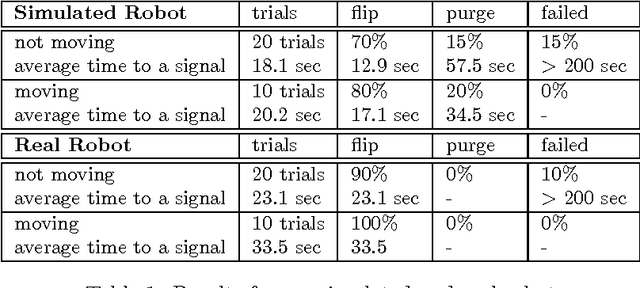

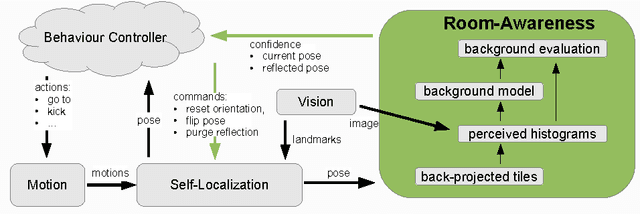

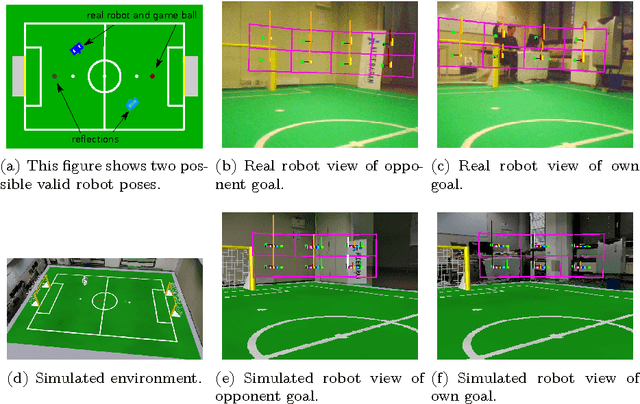

Abstract: Humanoid robots without internal sensors such as a compass tend to lose their orientation after a fall. Furthermore, re-initialisation is often ambiguous due to symmetric man-made environments. The room-awareness module proposed here is inspired by the results of psychological experiments and improves existing self-localization strategies by mapping and matching the visual background with colour histograms. The matching algorithm uses a particle filter to generate hypotheses of the viewing direction independently of the self-localization algorithm and generates confidence values for various possible poses. The robot's behaviour controller uses these confidence values to steer the self-localization algorithm towards the most likely pose and to prevent it from getting stuck in local minima. Experiments on a symmetric Standard Platform League RoboCup playing field with a simulated and a real humanoid NAO robot show that the system significantly improves self-localization.
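The matching step described above, a particle filter over viewing directions scored by colour-histogram similarity, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the background map is assumed to hold one colour histogram per direction bin, the Bhattacharyya coefficient is a swapped-in similarity measure, and the exponential measurement model with sharpness `sigma` is hypothetical. On a symmetric field the pitch looks the same in two directions, so it is the background histogram that separates the hypotheses; `confidences()` returns the per-direction values a behaviour controller could act on.

```python
from __future__ import annotations
import math
import random

def normalize(hist: list[float]) -> list[float]:
    """Scale a histogram so its bins sum to one."""
    s = sum(hist)
    return [h / s for h in hist] if s > 0 else list(hist)

def bhattacharyya(p: list[float], q: list[float]) -> float:
    """Similarity of two normalized histograms, 1.0 for identical ones."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))

class HeadingFilter:
    """Particle filter over the viewing direction (degrees), scoring each particle by how
    well the observed colour histogram matches the mapped background in that direction."""

    def __init__(self, background_map: list[list[float]], n_particles: int = 500,
                 sigma: float = 0.05, seed: int = 0) -> None:
        self.map = [normalize(h) for h in background_map]  # one histogram per direction bin
        self.bin_width = 360.0 / len(self.map)
        self.sigma = sigma  # hypothetical sharpness of the measurement model
        self.rng = random.Random(seed)
        self.particles = [self.rng.uniform(0.0, 360.0) for _ in range(n_particles)]

    def _bin(self, heading: float) -> int:
        # The modulo also guards against headings that round to exactly 360.0.
        return int(heading // self.bin_width) % len(self.map)

    def update(self, observed_hist: list[float], turn_deg: float = 0.0,
               noise_deg: float = 5.0) -> None:
        obs = normalize(observed_hist)
        # Motion update: apply the odometry turn plus Gaussian noise.
        self.particles = [(p + turn_deg + self.rng.gauss(0.0, noise_deg)) % 360.0
                          for p in self.particles]
        # Measurement update: likelihood grows with histogram similarity.
        weights = [math.exp(-(1.0 - bhattacharyya(obs, self.map[self._bin(p)])) / self.sigma)
                   for p in self.particles]
        if sum(weights) == 0.0:
            return  # no informative match; keep the current particle set
        # Resample particles in proportion to their weights.
        self.particles = self.rng.choices(self.particles, weights=weights, k=len(self.particles))

    def confidences(self) -> list[float]:
        """Fraction of particles per direction bin, usable as a confidence per heading."""
        counts = [0] * len(self.map)
        for p in self.particles:
            counts[self._bin(p)] += 1
        return [c / len(self.particles) for c in counts]
```

Keeping this filter separate from the main self-localization, as the abstract describes, means its confidence values can be used to re-seed or steer the localizer rather than replace it.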
