Joachim Hertzberg

Uncertainty-Resilient Active Intention Recognition for Robotic Assistants

Aug 26, 2025

Abstract:Purposeful behavior in robotic assistants requires the integration of multiple components and technological advances. Often, the problem is reduced to recognizing explicit prompts, which limits autonomy, or is oversimplified through assumptions such as near-perfect information. We argue that a critical gap remains unaddressed -- specifically, the challenge of reasoning about the uncertain outcomes and perception errors inherent to human intention recognition. In response, we present a framework designed to be resilient to uncertainty and sensor noise, integrating real-time sensor data with a combination of planners. Centered around an intention-recognition POMDP, our approach addresses cooperative planning and acting under uncertainty. Our integrated framework has been successfully tested on a physical robot with promising results.

Acting and Planning with Hierarchical Operational Models on a Mobile Robot: A Study with RAE+UPOM

Jul 15, 2025

Abstract:Robotic task execution faces challenges due to the inconsistency between symbolic planner models and the rich control structures actually running on the robot. In this paper, we present the first physical deployment of an integrated actor-planner system that shares hierarchical operational models for both acting and planning, interleaving the Reactive Acting Engine (RAE) with an anytime UCT-like Monte Carlo planner (UPOM). We implement RAE+UPOM on a mobile manipulator in a real-world deployment for an object collection task. Our experiments demonstrate robust task execution under action failures and sensor noise, and provide empirical insights into the interleaved acting-and-planning decision making process.

Online Knowledge Integration for 3D Semantic Mapping: A Survey

Nov 27, 2024

Abstract:Semantic mapping is a key component of robots operating in and interacting with objects in structured environments. Traditionally, geometric and knowledge representations within a semantic map have only been loosely integrated. However, recent advances in deep learning now allow full integration of prior knowledge, represented as knowledge graphs or language concepts, into sensor data processing and semantic mapping pipelines. Semantic scene graphs and language models enable modern semantic mapping approaches to incorporate graph-based prior knowledge or to leverage the rich information in human language both during and after the mapping process. This has sparked substantial advances in semantic mapping, leading to previously impossible novel applications. This survey reviews these recent developments comprehensively, with a focus on online integration of knowledge into semantic mapping. We specifically focus on methods that use semantic scene graphs to integrate symbolic prior knowledge and language models to capture implicit common-sense knowledge and natural language concepts.

Towards Intention Recognition for Robotic Assistants Through Online POMDP Planning

Nov 26, 2024

Abstract:Intention recognition, or the ability to anticipate the actions of another agent, plays a vital role in the design and development of automated assistants that can support humans in their daily tasks. In particular, industrial settings pose interesting challenges that include potential distractions for a decision-maker as well as noisy or incomplete observations. In such a setting, a robotic assistant tasked with helping and supporting a human worker must interleave information gathering actions with proactive tasks of its own, an approach that has been referred to as active goal recognition. In this paper we describe a partially observable model for online intention recognition, show some preliminary experimental results and discuss some of the challenges present in this family of problems.

Mesh-based Object Tracking for Dynamic Semantic 3D Scene Graphs via Ray Tracing

Aug 09, 2024

Abstract:In this paper, we present a novel method for 3D geometric scene graph generation using range sensors and RGB cameras. We initially detect instance-wise keypoints with a YOLOv8s model to compute 6D pose estimates of known objects by solving PnP. We use a ray tracing approach to track a geometric scene graph consisting of mesh models of object instances. In contrast to classical point-to-point matching, this leads to more robust results, especially under occlusions between object instances. We show that using this hybrid strategy leads to robust self-localization, pre-segmentation of the range sensor data and accurate pose tracking of objects using the same environmental representation. All detected objects are integrated into a semantic scene graph. This scene graph then serves as a front end to a semantic mapping framework to allow spatial reasoning.

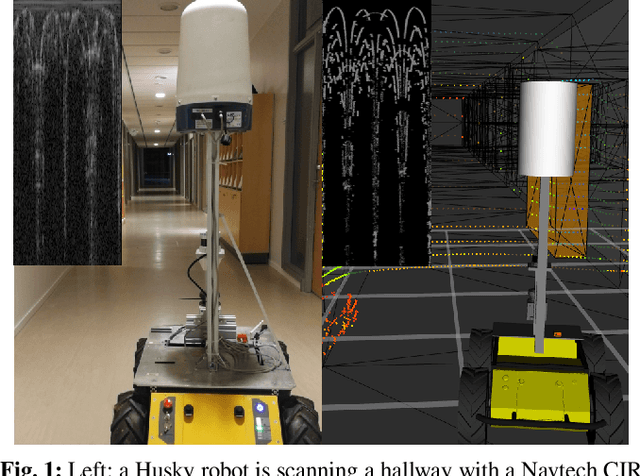

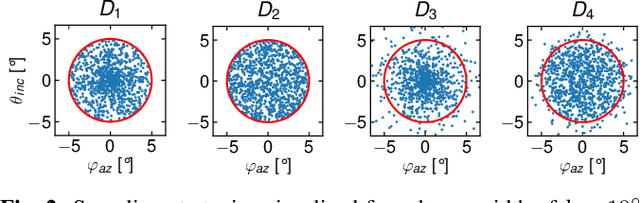

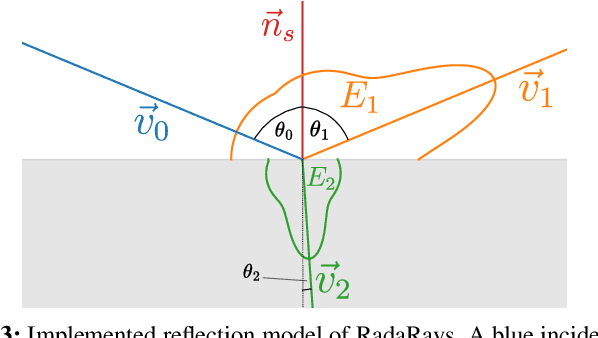

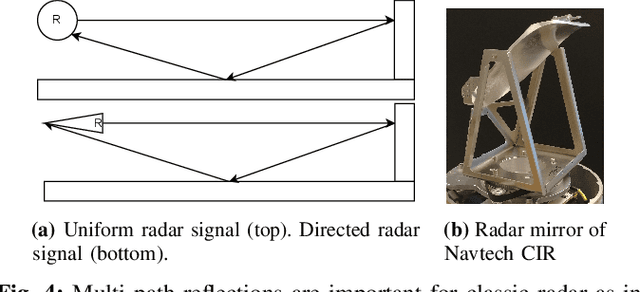

RadaRays: Real-time Simulation of Rotating FMCW Radar for Mobile Robotics via Hardware-accelerated Ray Tracing

Oct 05, 2023

Abstract:RadaRays allows for the accurate modeling and simulation of rotating FMCW radar sensors in complex environments, including the simulation of reflection, refraction, and scattering of radar waves. Our software is able to handle large numbers of objects and materials, making it suitable for use in a variety of mobile robotics applications. We demonstrate the effectiveness of RadaRays through a series of experiments and show that it can more accurately reproduce the behavior of FMCW radar sensors in a variety of environments, compared to the ray casting-based lidar-like simulations that are commonly used in simulators for autonomous driving such as CARLA. Our experiments additionally serve as a valuable reference point for researchers to evaluate their own radar simulations. By using RadaRays, developers can significantly reduce the time and cost associated with prototyping and testing FMCW radar-based algorithms. We also provide a Gazebo plugin that makes our work accessible to the mobile robotics community.

MICP-L: Fast parallel simulative Range Sensor to Mesh registration for Robot Localization

Oct 25, 2022

Abstract:Triangle mesh-based maps have proven to be a powerful 3D representation of the environment, allowing robots to navigate using universal methods, indoors as well as in challenging outdoor environments with tunnels, hills and varying slopes. However, any robot that navigates autonomously necessarily requires stable, accurate, and continuous localization in such a mesh map where it plans its paths and missions. We present MICP-L, a novel and very fast Mesh ICP Localization method that can register one or more range sensors directly on a triangle mesh map to continuously localize a robot, determining its 6D pose in the map. Correspondences between a range sensor and the mesh are found through simulations accelerated with the latest RTX hardware. With MICP-L, a correction can be performed quickly and in parallel even with combined data from different range sensor models. With this work, we aim to significantly advance the development in the field of mesh-based environment representation for autonomous robotic applications. MICP-L is open source and fully integrated with ROS and tf.

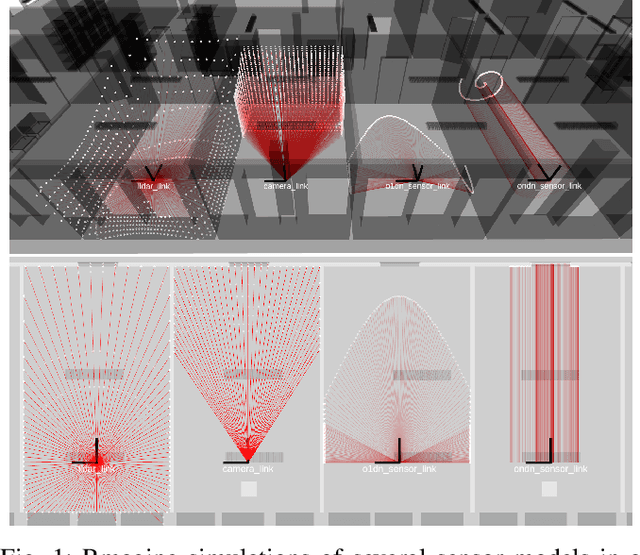

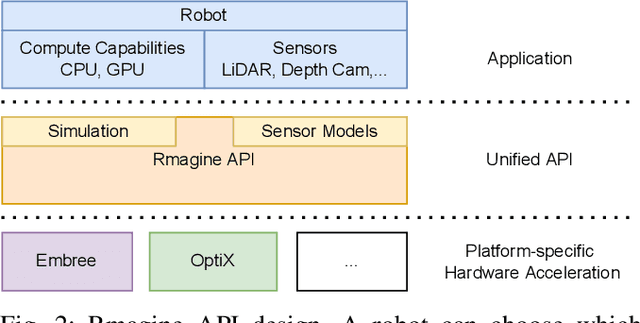

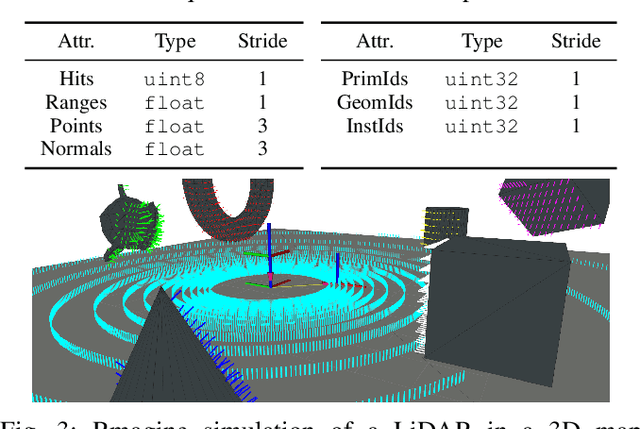

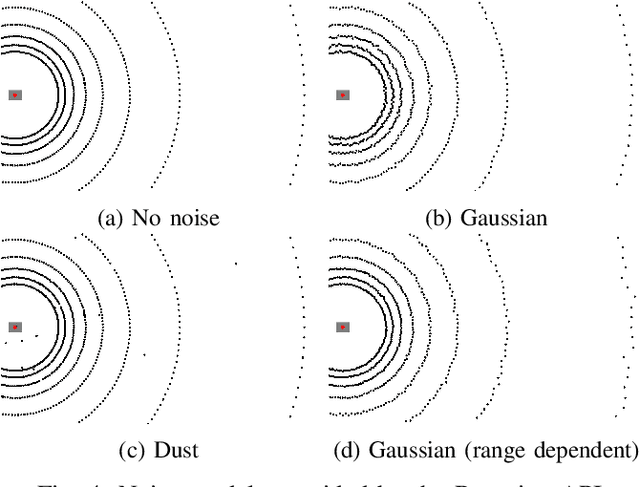

Rmagine: 3D Range Sensor Simulation in Polygonal Maps via Raytracing for Embedded Hardware on Mobile Robots

Sep 27, 2022

Abstract:Sensor simulation has emerged as a promising and powerful technique to find solutions to many real-world robotic tasks like localization and pose tracking. However, commonly used simulators have high hardware requirements and are therefore used mostly on high-end computers. In this paper, we present an approach to simulate range sensors directly on the embedded hardware of mobile robots that use triangle meshes as their environment map. This library, called Rmagine, allows a robot to simulate sensor data for arbitrary range sensors directly on board via raytracing. Since robots typically have only limited computational resources, Rmagine aims to be flexible and lightweight while scaling well even to large environment maps. It runs on several platforms, such as laptops or embedded computing boards like the Nvidia Jetson, by putting a unified API over the specific proprietary libraries provided by the hardware manufacturers. This work is designed to support the future development of robotic applications that depend on the simulation of range data that could previously not be computed in reasonable time on mobile systems.

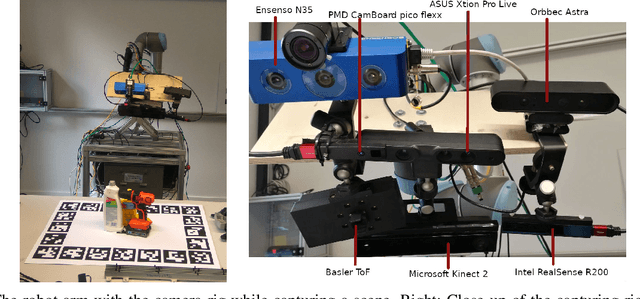

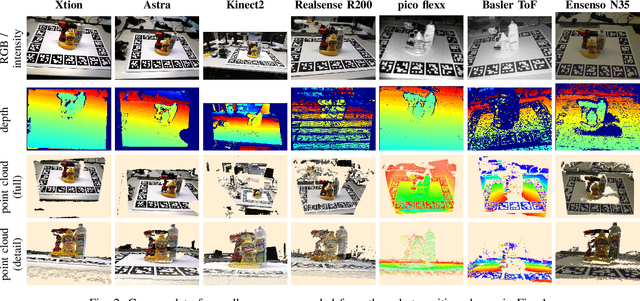

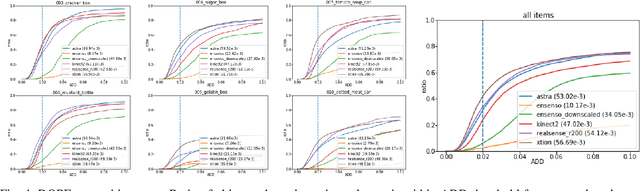

YCB-M: A Multi-Camera RGB-D Dataset for Object Recognition and 6DoF Pose Estimation

Apr 24, 2020

Abstract:While a great variety of 3D cameras have been introduced in recent years, most publicly available datasets for object recognition and pose estimation focus on one single camera. In this work, we present a dataset of 32 scenes that have been captured by 7 different 3D cameras, totaling 49,294 frames. This allows evaluating the sensitivity of pose estimation algorithms to the specifics of the camera used and developing more robust algorithms that are less dependent on the camera model. Conversely, our dataset enables researchers to perform a quantitative comparison of the data from several different cameras and depth sensing technologies and evaluate their algorithms before selecting a camera for their specific task. The scenes in our dataset contain 20 different objects from the common benchmark YCB object and model set [1], [2]. We provide full ground truth 6DoF poses for each object, per-pixel segmentation, 2D and 3D bounding boxes and a measure of the amount of occlusion of each object. We have also performed an initial evaluation of the cameras using our dataset on a state-of-the-art object recognition and pose estimation system [3].

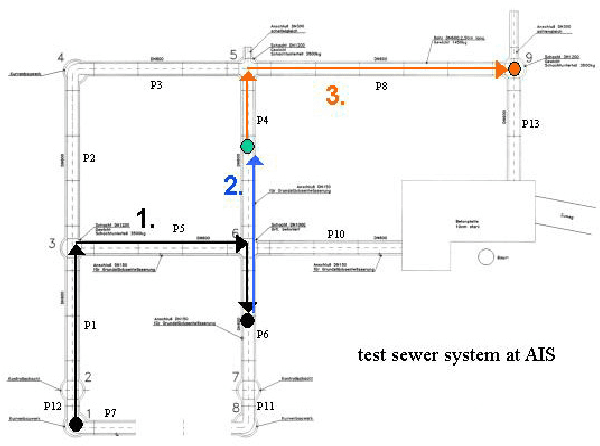

Dynamic replanning in uncertain environments for a sewer inspection robot

Dec 11, 2004

Abstract:The sewer inspection robot MAKRO is an autonomous, multi-segment, worm-shaped robot driven by wheels. It is currently under development in the project MAKRO-PLUS. The robot has to navigate autonomously within sewer systems. Its first tasks will be to take water samples, analyze them onboard, and measure the positions of manholes and pipes in order to detect pollutant-loaded sewage and to improve current maps of sewer systems. One of the challenging problems is the controller software, which should enable the robot to navigate in the sewer system and perform the inspection tasks autonomously without damaging itself. This paper focuses on the route planning and replanning aspect of the robot. The robot's software has four different levels, of which the planning system is the highest level, and the remaining three are controller levels, each with a different degree of abstraction. The planner coordinates the sequence of actions that are to be successively executed by the robot.
