Martin Atzmueller

A Scene Graph Backed Approach to Open Set Semantic Mapping

Feb 03, 2026

Abstract: While Open Set Semantic Mapping and 3D Semantic Scene Graphs (3DSSGs) are established paradigms in robotic perception, deploying them effectively to support high-level reasoning in large-scale, real-world environments remains a significant challenge. Most existing approaches decouple perception from representation, treating the scene graph as a derivative layer generated post hoc. This limits both consistency and scalability. In contrast, we propose a mapping architecture where the 3DSSG serves as the foundational backend, acting as the primary knowledge representation for the entire mapping process. Our approach leverages prior work on incremental scene graph prediction to infer and update the graph structure in real time as the environment is explored. This ensures that the map remains topologically consistent and computationally efficient, even during extended operations in large-scale settings. By maintaining an explicit, spatially grounded representation that supports both flat and hierarchical topologies, we bridge the gap between sub-symbolic raw sensor data and high-level symbolic reasoning. Consequently, this provides a stable, verifiable structure that knowledge-driven frameworks, ranging from knowledge graphs and ontologies to Large Language Models (LLMs), can directly exploit, enabling agents to operate with enhanced interpretability, trustworthiness, and alignment to human concepts.

LIEREx: Language-Image Embeddings for Robotic Exploration

Feb 02, 2026

Abstract: Semantic maps allow a robot to reason about its surroundings to fulfill tasks such as navigating known environments, finding specific objects, and exploring unmapped areas. Traditional mapping approaches provide accurate geometric representations but are often constrained by pre-designed symbolic vocabularies. The reliance on fixed object classes makes it impractical to handle out-of-distribution knowledge not defined at design time. Recent advances in Vision-Language Foundation Models (VLFMs), such as CLIP, enable open-set mapping, where objects are encoded as high-dimensional embeddings rather than fixed labels. In LIEREx, we integrate these VLFMs with established 3D Semantic Scene Graphs to enable target-directed exploration by an autonomous agent in partially unknown environments.

* This preprint has not undergone peer review or any post-submission improvements or corrections. The Version of Record of this article is published in KI - Künstliche Intelligenz, and is available online at https://doi.org/10.1007/s13218-026-00902-6
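
The open-set idea in the LIEREx abstract, objects stored as embeddings rather than fixed labels, can be illustrated with a small ranking sketch. This is not the LIEREx implementation: the node names, the three-dimensional toy vectors, and the helper functions `cosine_similarity` and `rank_objects` are hypothetical. In practice the vectors would come from a vision-language model such as CLIP and have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_objects(query_embedding, object_embeddings):
    """Return object ids sorted from most to least similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, emb), obj_id)
        for obj_id, emb in object_embeddings.items()
    ]
    return [obj_id for _, obj_id in sorted(scored, reverse=True)]


# Toy embeddings standing in for scene-graph nodes (assumed values).
nodes = {
    "node_1": [0.9, 0.1, 0.0],
    "node_2": [0.0, 1.0, 0.2],
    "node_3": [0.7, 0.6, 0.1],
}
# Toy embedding standing in for an encoded text query (assumed values).
query = [1.0, 0.0, 0.0]
ranking = rank_objects(query, nodes)
```

Because the query is compared against embeddings instead of a closed label set, a target that was never named at design time can still be ranked against the mapped objects.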

ExPrIS: Knowledge-Level Expectations as Priors for Object Interpretation from Sensor Data

Jan 21, 2026

Abstract: While deep learning has significantly advanced robotic object recognition, purely data-driven approaches often lack semantic consistency and fail to leverage valuable, pre-existing knowledge about the environment. This report presents the ExPrIS project, which addresses this challenge by investigating how knowledge-level expectations can serve as priors to improve object interpretation from sensor data. Our approach is based on the incremental construction of a 3D Semantic Scene Graph (3DSSG). We integrate expectations from two sources: contextual priors from past observations and semantic knowledge from external graphs like ConceptNet. These are embedded into a heterogeneous Graph Neural Network (GNN) to create an expectation-biased inference process. This method moves beyond static, frame-by-frame analysis to enhance the robustness and consistency of scene understanding over time. The report details this architecture and its evaluation, and outlines its planned integration on a mobile robotic platform.

* This preprint has not undergone peer review or any post-submission improvements or corrections. The Version of Record of this article is published in KI - Künstliche Intelligenz, and is available online at https://doi.org/10.1007/s13218-026-00901-7

Saliency Map-Guided Knowledge Discovery for Subclass Identification with LLM-Based Symbolic Approximations

Nov 10, 2025

Abstract: This paper proposes a novel neuro-symbolic approach for sensor signal-based knowledge discovery, focusing on identifying latent subclasses in time series classification tasks. The approach leverages gradient-based saliency maps derived from trained neural networks to guide the discovery process. Multiclass time series classification problems are transformed into binary classification problems through label subsumption, and classifiers are trained for each of these to yield saliency maps. The input signals, grouped by predicted class, are clustered under three distinct configurations. The centroids of the final set of clusters are provided as input to an LLM for symbolic approximation and fuzzy knowledge graph matching to discover the underlying subclasses of the original multiclass problem. Experimental results on well-established time series classification datasets demonstrate the effectiveness of our saliency map-driven method for knowledge discovery, outperforming signal-only baselines in both clustering and subclass identification.
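
The label subsumption step mentioned in this abstract can be sketched as follows. The abstract does not say how classes are grouped, so the grouping here is an assumption, and `subsume_labels` and `one_vs_rest_tasks` are hypothetical helper names, not the authors' code.

```python
def subsume_labels(labels, positive_group):
    """Map each multiclass label to 1 if it is in positive_group, else 0."""
    positive = set(positive_group)
    return [1 if label in positive else 0 for label in labels]


def one_vs_rest_tasks(labels):
    """Build one binary task per original class (one simple grouping choice)."""
    return {cls: subsume_labels(labels, [cls]) for cls in sorted(set(labels))}


# Example: three activity classes, two of them subsumed under "moving".
activity = ["walk", "run", "sit", "walk"]
moving_vs_rest = subsume_labels(activity, ["walk", "run"])
```

A binary classifier trained on each such task would then be the source of the saliency maps that guide the clustering.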

ProtoMask: Segmentation-Guided Prototype Learning

Oct 01, 2025

Abstract: Explainable AI (XAI) has gained considerable importance in recent years. Methods based on prototypical case-based reasoning have shown a promising improvement in explainability. However, these methods typically rely on additional post-hoc saliency techniques to explain the semantics of learned prototypes. Multiple critiques have been raised about the reliability and quality of such techniques. For this reason, we study the use of prominent image segmentation foundation models to improve the truthfulness of the mapping between embedding and input space. We aim to restrict the computation area of the saliency map to a predefined semantic image patch to reduce the uncertainty of such visualizations. To perceive the information of an entire image, we use the bounding box from each generated segmentation mask to crop the image. Each mask results in an individual input in our novel model architecture named ProtoMask. We conduct experiments on three popular fine-grained classification datasets with a wide set of metrics, providing a detailed overview of explainability characteristics. The comparison with other popular models demonstrates competitive performance and unique explainability features of our model. https://github.com/uos-sis/quanproto

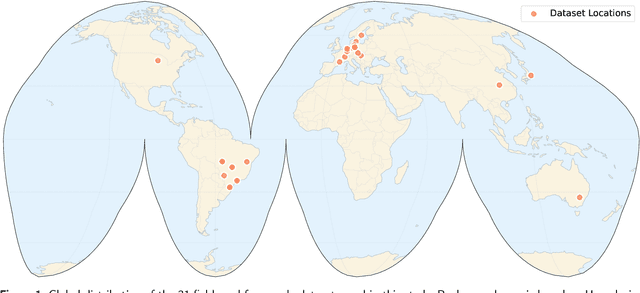

Kriging prior Regression: A Case for Kriging-Based Spatial Features with TabPFN in Soil Mapping

Sep 11, 2025

Abstract: Machine learning and geostatistics are two fundamentally different frameworks for predicting and spatially mapping soil properties. Geostatistics leverages the spatial structure of soil properties, while machine learning captures the relationship between available environmental features and soil properties. We propose a hybrid framework that enriches ML with spatial context through engineering of 'spatial lag' features from ordinary kriging. We call this approach 'kriging prior regression' (KpR), as it follows the inverse logic of regression kriging. To evaluate this approach, we assessed both the point and probabilistic prediction performance of KpR, using the TabPFN model across six field-scale datasets from LimeSoDa. These datasets included soil organic carbon, clay content, and pH, along with features derived from remote sensing and in-situ proximal soil sensing. KpR with TabPFN demonstrated reliable uncertainty estimates and more accurate predictions in comparison to several other spatial techniques (e.g., regression/residual kriging with TabPFN), as well as to established non-spatial machine learning algorithms (e.g., random forest). Most notably, it significantly improved the average R² by around 30% compared to machine learning algorithms without spatial context. This improvement was due to the strong prediction performance of the TabPFN algorithm itself and the complementary spatial information provided by KpR features. TabPFN is particularly effective for prediction tasks with small sample sizes, common in precision agriculture, whereas KpR can compensate for weak relationships between sensing features and soil properties when proximal soil sensing data are limited. Hence, we conclude that KpR with TabPFN is a very robust and versatile modelling framework for digital soil mapping in precision agriculture.
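
To make the idea of a kriging-derived spatial feature concrete, here is a minimal NumPy sketch of ordinary kriging and of one possible way to turn it into a per-sample feature. It rests on several assumptions that are not taken from the abstract: the exponential variogram with fixed `sill` and `rng` (no nugget, no variogram fitting), the leave-one-out construction in `loo_kriging_feature`, and all function names. It should not be read as the authors' KpR procedure.

```python
import numpy as np


def exp_variogram(h, sill=1.0, rng=10.0):
    """Exponential semivariogram without nugget (illustrative parameters)."""
    return sill * (1.0 - np.exp(-h / rng))


def ordinary_kriging(coords, values, target, variogram=exp_variogram):
    """Ordinary kriging prediction at a single target location."""
    n = len(values)
    # Pairwise distances between the observed locations.
    dists = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    # Kriging system: semivariances bordered by the unbiasedness constraint.
    A = np.ones((n + 1, n + 1))
    A[:n, :n] = variogram(dists)
    A[n, n] = 0.0
    b = np.ones(n + 1)
    b[:n] = variogram(np.linalg.norm(coords - target, axis=1))
    weights = np.linalg.solve(A, b)[:n]  # drop the Lagrange multiplier
    return float(weights @ values)


def loo_kriging_feature(coords, values):
    """For each sample, krige its value from all other samples (assumed scheme)."""
    n = len(values)
    feature = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        feature[i] = ordinary_kriging(coords[keep], values[keep], coords[i])
    return feature


coords = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
values = np.array([2.0, 3.0, 4.0, 5.0])
spatial_feature = loo_kriging_feature(coords, values)
```

The resulting column could be appended to the sensing features before fitting a tabular model. Leaving each sample out of its own prediction avoids leaking the target into the feature, which matters because kriging without a nugget reproduces observed values exactly.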

Modern Neural Networks for Small Tabular Datasets: The New Default for Field-Scale Digital Soil Mapping?

Aug 13, 2025

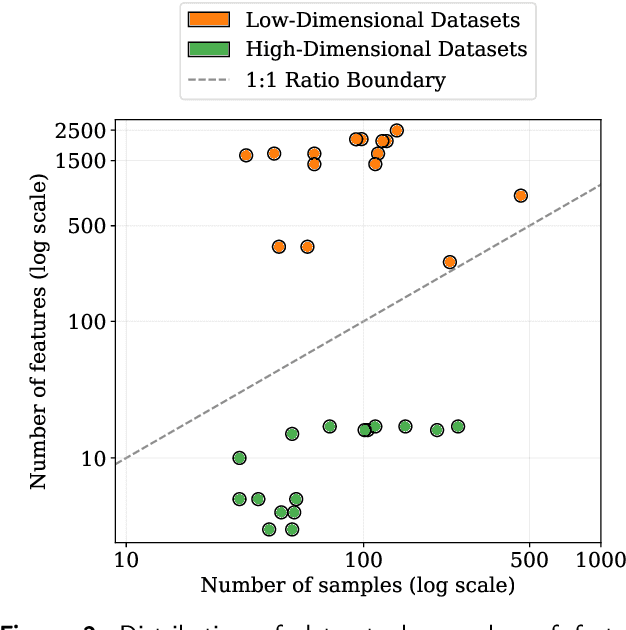

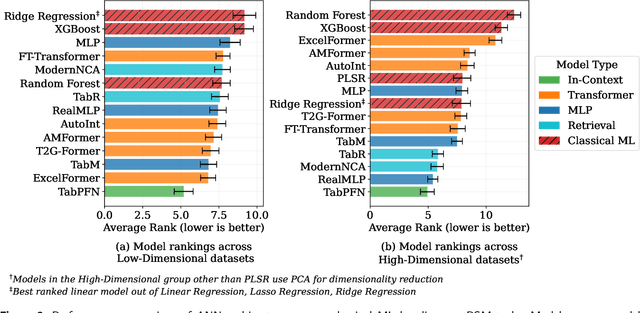

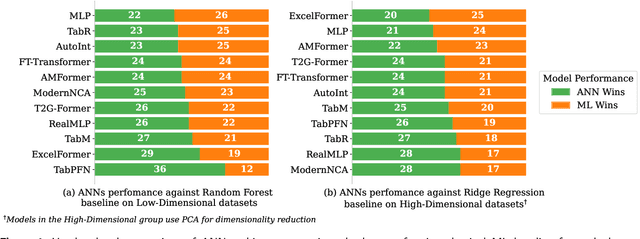

Abstract: In the field of pedometrics, tabular machine learning is the predominant method for predicting soil properties from remote and proximal soil sensing data, forming a central component of digital soil mapping. At the field-scale, this predictive soil modeling (PSM) task is typically constrained by small training sample sizes and high feature-to-sample ratios in soil spectroscopy. Traditionally, these conditions have proven challenging for conventional deep learning methods. Classical machine learning algorithms, particularly tree-based models like Random Forest and linear models such as Partial Least Squares Regression, have long been the default choice for field-scale PSM. Recent advances in artificial neural networks (ANN) for tabular data challenge this view, yet their suitability for field-scale PSM has not been proven. We introduce a comprehensive benchmark that evaluates state-of-the-art ANN architectures, including the latest multilayer perceptron (MLP)-based models (TabM, RealMLP), attention-based transformer variants (FT-Transformer, ExcelFormer, T2G-Former, AMFormer), retrieval-augmented approaches (TabR, ModernNCA), and an in-context learning foundation model (TabPFN). Our evaluation encompasses 31 field- and farm-scale datasets containing 30 to 460 samples and three critical soil properties: soil organic matter or soil organic carbon, pH, and clay content. Our results reveal that modern ANNs consistently outperform classical methods on the majority of tasks, demonstrating that deep learning has matured sufficiently to overcome the long-standing dominance of classical machine learning for PSM. Notably, TabPFN delivers the strongest overall performance, showing robustness across varying conditions. We therefore recommend the adoption of modern ANNs for field-scale PSM and propose TabPFN as the new default choice in the toolkit of every pedometrician.

Acting and Planning with Hierarchical Operational Models on a Mobile Robot: A Study with RAE+UPOM

Jul 15, 2025

Abstract: Robotic task execution faces challenges due to the inconsistency between symbolic planner models and the rich control structures actually running on the robot. In this paper, we present the first physical deployment of an integrated actor-planner system that shares hierarchical operational models for both acting and planning, interleaving the Reactive Acting Engine (RAE) with an anytime UCT-like Monte Carlo planner (UPOM). We implement RAE+UPOM on a mobile manipulator in a real-world deployment for an object collection task. Our experiments demonstrate robust task execution under action failures and sensor noise, and provide empirical insights into the interleaved acting-and-planning decision-making process.
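
The abstract describes UPOM as a UCT-like planner. As background, the generic UCB1 selection rule that UCT-style search is built on can be sketched as below. This is the textbook rule, not UPOM's actual procedure; the function name `ucb1_select`, the option names, and the statistics are hypothetical.

```python
import math


def ucb1_select(stats, c=math.sqrt(2)):
    """Pick the option with the best mean reward plus exploration bonus.

    stats maps an option name to (visit_count, total_reward).
    """
    total_visits = sum(visits for visits, _ in stats.values())
    best, best_score = None, -math.inf
    for name, (visits, reward) in stats.items():
        if visits == 0:
            return name  # untried options are sampled first
        bonus = c * math.sqrt(math.log(total_visits) / visits)
        score = reward / visits + bonus
        if score > best_score:
            best, best_score = name, score
    return best


# Hypothetical statistics for two ways of refining a "fetch object" task.
choice = ucb1_select({"grasp_from_front": (10, 9.0), "grasp_from_top": (10, 2.0)})
```

With equal visit counts the bonus terms cancel and the option with the higher mean reward wins. A rarely tried option receives a larger bonus, which lets an anytime planner keep exploring alternatives for as long as it is given time.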

Comprehensive Evaluation of Prototype Neural Networks

Jul 09, 2025

Abstract: Prototype models are an important method for explainable artificial intelligence (XAI) and interpretable machine learning. In this paper, we perform an in-depth analysis of a set of prominent prototype models including ProtoPNet, ProtoPool and PIPNet. For their assessment, we apply a comprehensive set of metrics. In addition to applying standard metrics from literature, we propose several new metrics to further complement the analysis of model interpretability. In our experimentation, we apply the set of prototype models on a diverse set of datasets including fine-grained classification, non-IID settings and multi-label classification to further contrast the performance. Furthermore, we also provide our code as an open-source library, which facilitates simple application of the metrics themselves, as well as extensibility, providing the option for easily adding new metrics and models. https://github.com/uos-sis/quanproto

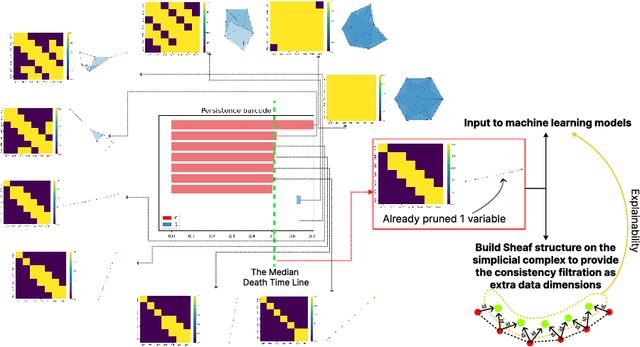

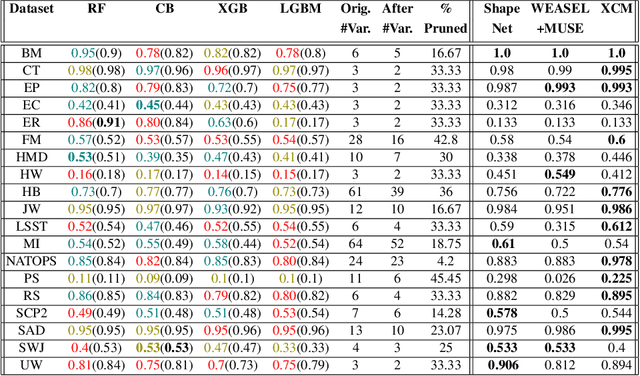

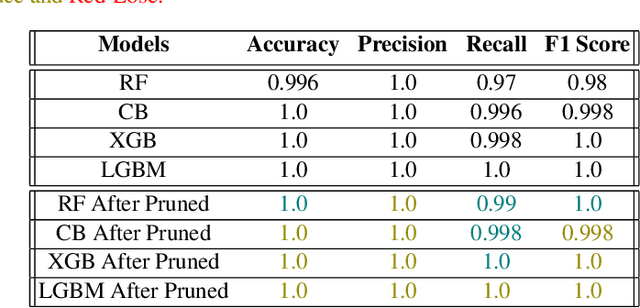

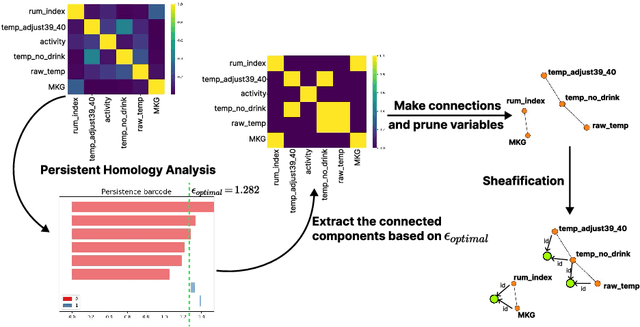

PHEATPRUNER: Interpretable Data-centric Feature Selection for Multivariate Time Series Classification through Persistent Homology

Apr 25, 2025

Abstract: Balancing performance and interpretability in multivariate time series classification is a significant challenge due to data complexity and high dimensionality. This paper introduces PHeatPruner, a method integrating persistent homology and sheaf theory to address these challenges. Persistent homology facilitates the pruning of up to 45% of the applied variables while maintaining or enhancing the accuracy of models such as Random Forest, CatBoost, XGBoost, and LightGBM, all without depending on posterior probabilities or supervised optimization algorithms. Concurrently, sheaf theory contributes explanatory vectors that provide deeper insights into the data's structural nuances. The approach was validated using the UEA Archive and a mastitis detection dataset for dairy cows. The results demonstrate that PHeatPruner effectively preserves model accuracy. Furthermore, our results highlight PHeatPruner's key features, i.e., simplifying complex data and offering actionable insights without increasing processing time or complexity. This method bridges the gap between complexity reduction and interpretability, suggesting promising applications in various fields.
