Dario Jozinović

Intra-domain and cross-domain transfer learning for time series data -- How transferable are the features?

Jan 12, 2022

Abstract: In practice, it is very demanding and sometimes impossible to collect datasets of tagged data large enough to successfully train a machine learning model, and one possible solution to this problem is transfer learning. This study aims to assess how transferable the features are between different domains of time series data, and under which conditions. The effects of transfer learning are observed in terms of the predictive performance of the models and their convergence rate during training. In our experiment, we use reduced datasets of 1,500 and 9,000 data instances to mimic real-world conditions. Using the same scaled-down datasets, we trained two sets of machine learning models: those trained with transfer learning and those trained from scratch. Four machine learning models were used for the experiment. Transfer of knowledge was performed within the same domain of application (seismology), as well as between mutually different domains of application (seismology, speech, medicine, finance). To confirm the validity of the obtained results, we repeated the experiments seven times and applied statistical tests to confirm their significance. The general conclusion of our study is that transfer learning is very likely to either improve, or at least not negatively affect, the predictive performance of the model or its convergence rate. The collected data is analysed in more detail to determine which source and target domains are compatible for transfer of knowledge. We also analyse how the target dataset size and the selection of the model and its hyperparameters influence the effects of transfer learning.

Multivariate Time Series Regression with Graph Neural Networks

Jan 10, 2022

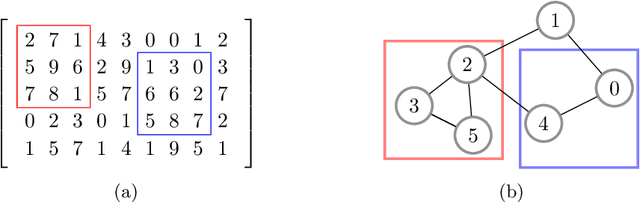

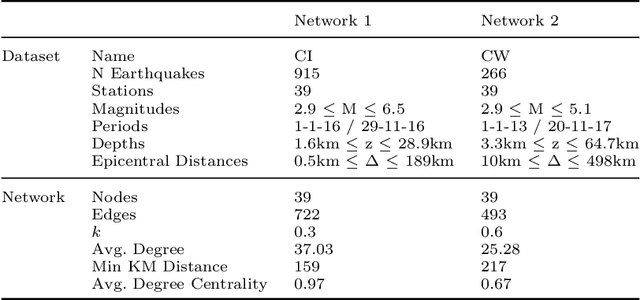

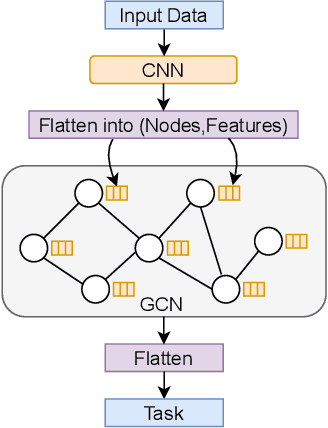

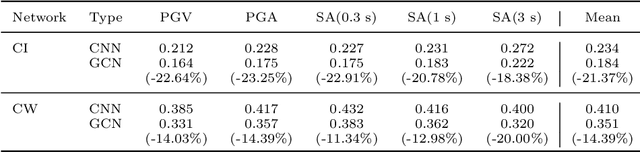

Abstract: Machine learning, with its advances in deep learning, has shown great potential in analysing time series. However, in many scenarios, additional information is available that can potentially improve predictions when it is incorporated into the learning methods. This is crucial for data that arises from, e.g., sensor networks that contain information about sensor locations. Such spatial information can then be exploited by modeling it via graph structures, along with the sequential (time) information. Recent advances in adapting deep learning to graphs have shown promising potential in various graph-related tasks. However, these methods have not been adapted for time series related tasks to a great extent. Specifically, most attempts have essentially consolidated around Spatial-Temporal Graph Neural Networks for time series forecasting with small sequence lengths. Generally, these architectures are not suited for regression or classification tasks that contain large sequences of data. Therefore, in this work, we propose an architecture capable of processing these long sequences in a multivariate time series regression task, using the benefits of Graph Neural Networks to improve predictions. Our model is tested on two seismic datasets that contain earthquake waveforms, where the goal is to predict intensity measurements of ground shaking at a set of stations. Our findings demonstrate promising results for our approach, which are discussed in depth with an additional ablation study.
