Leonid Schwenke

Saliency Maps are Ambiguous: Analysis of Logical Relations on First and Second Order Attributions

Jan 23, 2025

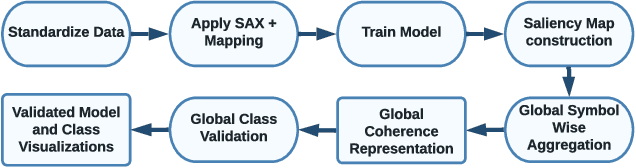

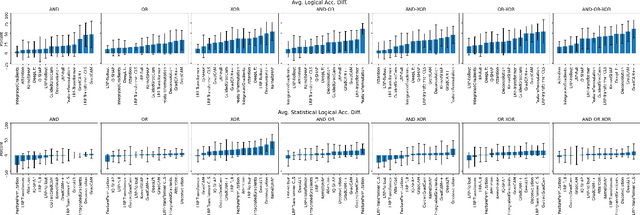

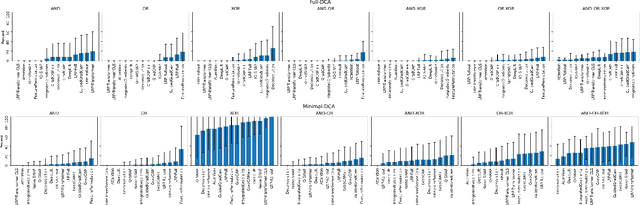

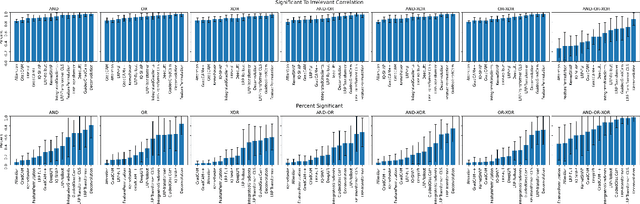

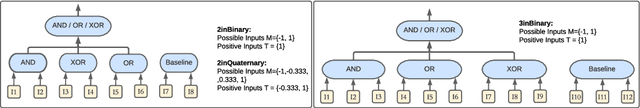

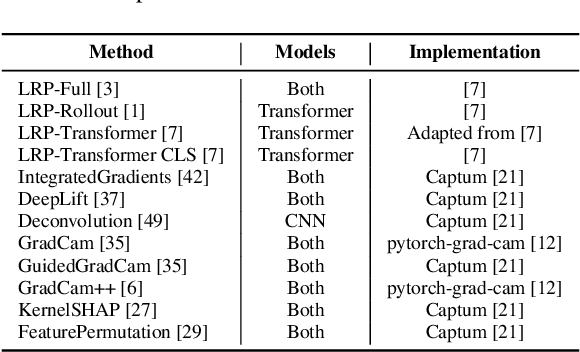

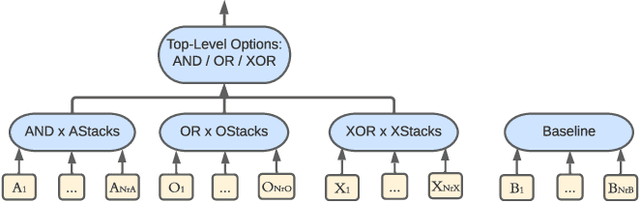

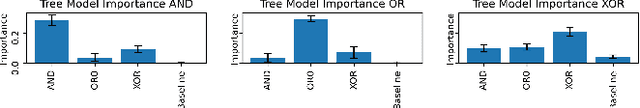

Abstract: Recent work has uncovered potential flaws in, e.g., attribution- or heatmap-based saliency methods. A typical flaw is confirmation bias, where the scores are compared to human expectation. Since measuring the quality of saliency methods is hard due to missing ground-truth model reasoning, finding general limitations is also hard. This is further complicated because masking-based evaluation on complex data can easily introduce a bias, as most methods cannot fully ignore inputs. In this work, we extend our previous analysis on the logical dataset framework ANDOR, where we showed that all analysed saliency methods fail to grasp all needed classification information for all possible scenarios. Specifically, this paper extends our previous work with an analysis of more datasets, in order to better understand in which scenarios the saliency methods fail. Further, we apply the Global Coherence Representation as an additional evaluation method in order to enable actual input omission.

Saliency Methods are Encoders: Analysing Logical Relations Towards Interpretation

Dec 17, 2024

Abstract: With their increase in performance, neural network architectures also become more complex, necessitating explainability. Therefore, many new and improved methods are currently emerging, which often generate so-called saliency maps in order to improve interpretability. Those methods are often evaluated against visual expectations, yet this typically leads to confirmation bias. Due to the lack of a general metric for explanation quality, inaccessible ground-truth data about the model's reasoning, and the large number of assumptions involved, multiple works claim to find flaws in those methods. However, this often leads to unfair comparison metrics. Additionally, the complexity of most datasets (mostly images or text) is often so high that approximating all possible explanations is not feasible. For those reasons, this paper introduces a test for saliency map evaluation: controlled experiments based on all possible model reasonings over multiple simple logical datasets. Using the contained logical relationships, we aim to understand how different saliency methods treat information in different class-discriminative scenarios (e.g. via complementary and redundant information). By introducing multiple new metrics, we analyse propositional logical patterns against a non-informative attribution score baseline to find deviations from typical expectations. Our results show that saliency methods can encode classification-relevant information into the ordering of saliency scores.
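To make the idea of redundant versus complementary information on a logical dataset concrete, the following is a minimal sketch and not the authors' framework or metrics. It assumes two-input AND, OR and XOR gates as stand-ins for a trained model, and uses a hypothetical single-input flip score (`flip_attribution`) as a stand-in for a saliency method.

```python
from itertools import product

# Hypothetical toy "models": exact logic gates over two binary inputs.
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
}

def flip_attribution(gate, inputs):
    """Score each input 1 if flipping it alone changes the gate output, else 0."""
    base = gate(*inputs)
    scores = []
    for i in range(len(inputs)):
        flipped = list(inputs)
        flipped[i] ^= 1  # toggle only input i
        scores.append(int(gate(*flipped) != base))
    return scores

def attribution_table(name):
    """Map every possible input pair of the named gate to its flip scores."""
    gate = GATES[name]
    return {x: flip_attribution(gate, x) for x in product((0, 1), repeat=2)}

if __name__ == "__main__":
    for name in GATES:
        print(name, attribution_table(name))
```

In this toy setting, the input (0, 0) for AND and the input (1, 1) for OR both receive all-zero scores, although the output is fully determined by those inputs: each input is individually redundant there. For XOR, where the inputs are complementary, every input is scored 1 in every row. A first-order score of this kind therefore cannot by itself distinguish "irrelevant" from "redundant", which is one simple way such attributions can be ambiguous.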

Real or Virtual? Using Brain Activity Patterns to differentiate Attended Targets during Augmented Reality Scenarios

Jan 12, 2021

Abstract: Augmented Reality is the fusion of virtual components and our real surroundings. The simultaneous visibility of generated and natural objects often requires users to direct their selective attention to a specific target that is either real or virtual. In this study, we investigated whether this target is real or virtual by using machine learning techniques to classify electroencephalographic (EEG) data collected in Augmented Reality scenarios. A shallow convolutional neural network classified 3-second data windows from 20 participants in a person-dependent manner with an average accuracy above 70% if the testing data and training data came from different trials. Person-independent classification was possible above chance level for 6 out of 20 participants. Thus, the reliability of such a Brain-Computer Interface is high enough for it to be treated as a useful input mechanism for Augmented Reality applications.
