Jinsheng Wei

FlyKites: Human-centric Interactive Exploration and Assistance under Limited Communication

Sep 19, 2025

Abstract:Fleets of autonomous robots have been deployed to explore unknown scenes for features of interest, e.g., in subterranean exploration, reconnaissance, and search and rescue missions. During exploration, the robots may encounter unidentified targets, blocked passages, interactive objects, temporary failures, or other unexpected events, all of which require consistent human assistance with reliable communication for a period of time. This, however, can be particularly challenging if communication among the robots is severely restricted to close-range exchange via ad-hoc networks, especially in extreme environments like caves and underground tunnels. This paper presents a novel human-centric interactive exploration and assistance framework called FlyKites, for multi-robot systems under limited communication. It consists of three interleaved components: (I) the distributed exploration and intermittent communication (called the "spread mode"), where the robots collaboratively explore the environment and exchange local data among the fleet and with the operator; (II) the simultaneous optimization of the relay topology, the operator path, and the assignment of robots to relay roles (called the "relay mode"), such that all requested assistance can be provided with minimum delay; (III) the human-in-the-loop online execution, where the robots switch between different roles and interact with the operator adaptively. Extensive human-in-the-loop simulations and hardware experiments are performed over numerous challenging scenes.

MoRoCo: Multi-operator-robot Coordination, Interaction and Exploration under Restricted Communication

Aug 11, 2025

Abstract:Fleets of autonomous robots are increasingly deployed alongside multiple human operators to explore unknown environments, identify salient features, and perform complex tasks in scenarios such as subterranean exploration, reconnaissance, and search-and-rescue missions. In these contexts, communication is often severely limited to short-range exchanges via ad-hoc networks, posing challenges to coordination. While recent studies have addressed multi-robot exploration under communication constraints, they largely overlook the essential role of human operators and their real-time interaction with robotic teams. Operators may demand timely updates on the exploration progress and robot status, reprioritize or cancel tasks dynamically, or request live video feeds and control access. Conversely, robots may seek human confirmation for anomalous events or require help recovering from motion or planning failures. To enable such bilateral, context-aware interactions under restricted communication, this work proposes MoRoCo, a unified framework for online coordination and exploration in multi-operator, multi-robot systems. MoRoCo enables the team to adaptively switch among three coordination modes: spread mode for parallelized exploration with intermittent data sharing, migrate mode for coordinated relocation, and chain mode for maintaining high-bandwidth connectivity through multi-hop links. These transitions are managed through distributed algorithms via only local communication. Extensive large-scale human-in-the-loop simulations and hardware experiments validate the necessity of incorporating human-robot interactions and demonstrate that MoRoCo enables efficient, reliable coordination under limited communication, marking a significant step toward robust human-in-the-loop multi-robot autonomy in challenging environments.

iHERO: Interactive Human-oriented Exploration and Supervision Under Scarce Communication

May 21, 2024

Abstract:Exploration of unknown scenes before human entry is essential for safety and efficiency in numerous scenarios, e.g., subterranean exploration, reconnaissance, and search and rescue missions. Fleets of autonomous robots are particularly suitable for this task, via concurrent exploration, multi-sensory perception and autonomous navigation. Communication among the robots, however, can be severely restricted to close-range exchange via ad-hoc networks. Although some recent works have addressed the problem of collaborative exploration under restricted communication, the crucial role of the human operator has been mostly neglected. Indeed, the operator may: (i) require timely updates regarding the exploration progress and fleet status; (ii) prioritize certain regions; and (iii) dynamically move within the explored area. To facilitate these requests, this work proposes an interactive human-oriented online coordination framework for collaborative exploration and supervision under scarce communication (iHERO). The robots switch smoothly and optimally among fast exploration, intermittent exchange of map and sensory data, and return to the operator for status updates. It is ensured that these requests are fulfilled online and interactively within a pre-specified latency. Extensive large-scale human-in-the-loop simulations and hardware experiments are performed over numerous challenging scenes; they demonstrate its performance in terms of explored area and efficiency, and validate its potential applicability to real-world scenarios.

Prior Information based Decomposition and Reconstruction Learning for Micro-Expression Recognition

Mar 03, 2023

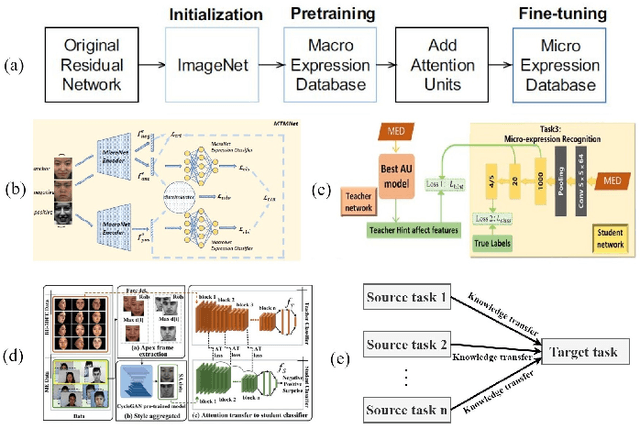

Abstract:Micro-expression recognition (MER) draws intensive research interest because micro-expressions (MEs) can reveal genuine emotions. Prior information can guide the model to learn discriminative ME features effectively. However, most works focus on general models with stronger representation ability that adaptively aggregate ME movement information in a holistic way, which may ignore the prior information and properties of MEs. To solve this issue, driven by the prior information that the category of an ME can be inferred from the relationship between the actions of different facial components, this work designs a novel model that conforms to this prior information and learns ME movement features in an interpretable way. Specifically, this paper proposes a Decomposition and Reconstruction-based Graph Representation Learning (DeRe-GRL) model to effectively learn high-level ME features. DeRe-GRL includes two modules: the Action Decomposition Module (ADM) and the Relation Reconstruction Module (RRM), where ADM learns action features of facial key components and RRM explores the relationship between these action features. Based on facial key components, ADM divides the geometric movement features extracted by the graph model-based backbone into several sub-features, and learns a mapping matrix to map these sub-features into multiple action features; then, RRM learns weights over all action features to build the relationship between them. The experimental results demonstrate the effectiveness of the proposed modules, and the proposed method achieves competitive performance.

Geometric Graph Representation with Learnable Graph Structure and Adaptive AU Constraint for Micro-Expression Recognition

May 01, 2022

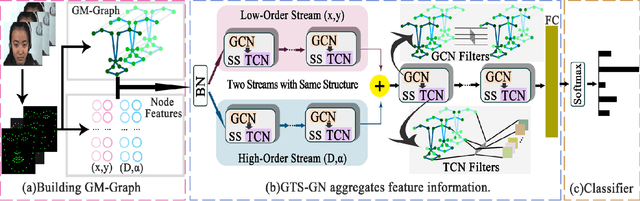

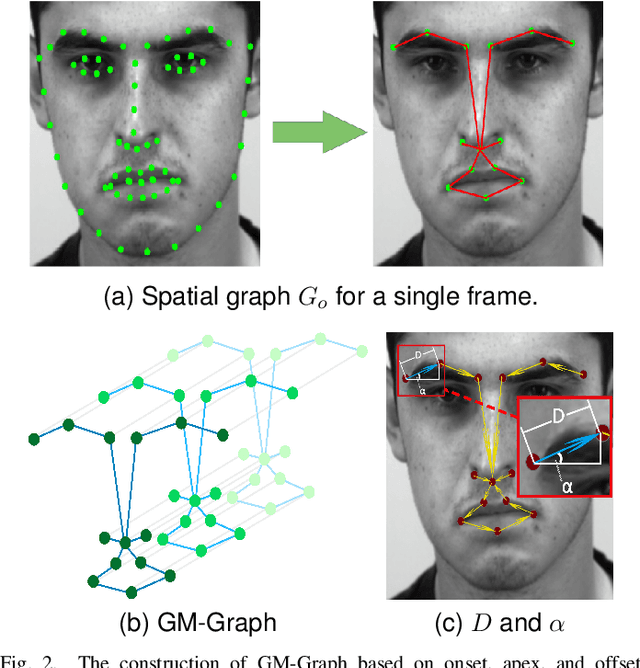

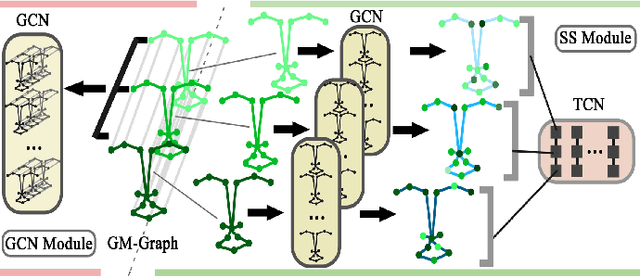

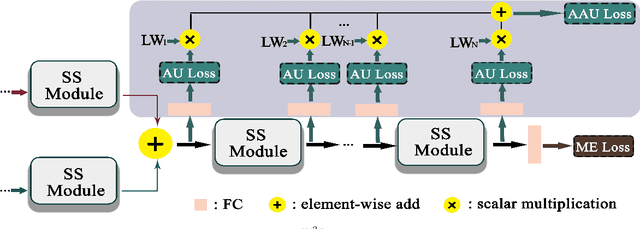

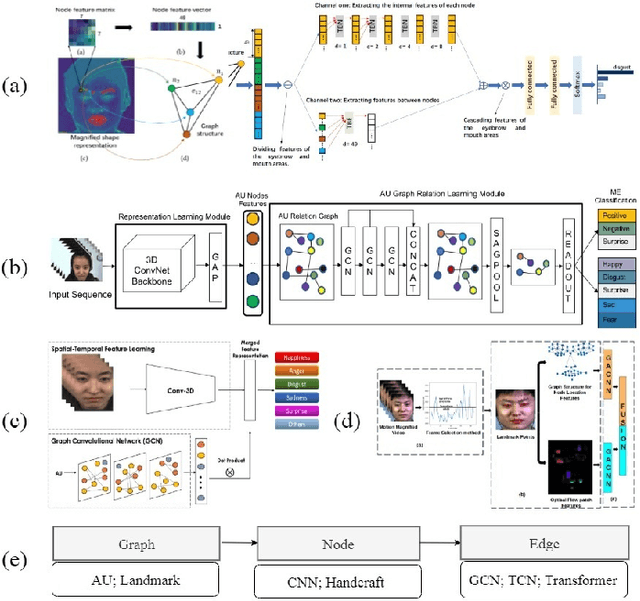

Abstract:Micro-expression recognition (MER) is valuable because the involuntary nature of micro-expressions (MEs) can reveal genuine emotions. Most works recognize MEs by taking RGB videos or images as input. In fact, the activated facial regions in ME images are very small and the subtle motion can be easily submerged in unrelated information. Facial landmarks are a low-dimensional and compact modality, which leads to much lower computational cost and can potentially concentrate more on ME-related features. However, the discriminability of landmarks for MER is not clear. Thus, this paper explores the contribution of facial landmarks and constructs a new framework to efficiently recognize MEs using facial landmark information alone. Specifically, we design a separate structure module to separately aggregate the spatial and temporal information in the geometric movement graph based on facial landmarks, and a Geometric Two-Stream Graph Network is constructed to aggregate the low-order geometric information and high-order semantic information of facial landmarks. Furthermore, two core components are proposed to enhance features. First, a semantic adjacency matrix automatically models the relationship between nodes, even distant ones, in a self-learning fashion; second, an Adaptive Action Unit loss is introduced to guide the learning process such that the learned features are forced to have a synchronized pattern with facial action units. Notably, this work tackles MER using only geometric features, processed with a graph model, which offers a new and much more efficient approach to MER. The experimental results demonstrate that the proposed method can achieve competitive or even superior performance with a significantly reduced computational cost, and that facial landmarks can significantly contribute to MER and are worth further study for efficient ME analysis.

Deep Learning based Micro-expression Recognition: A Survey

Jul 06, 2021

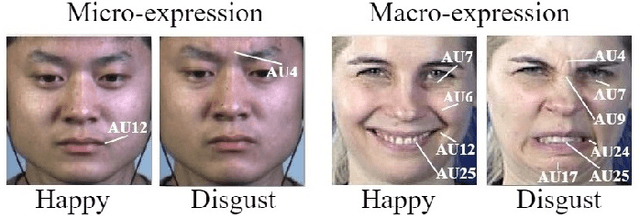

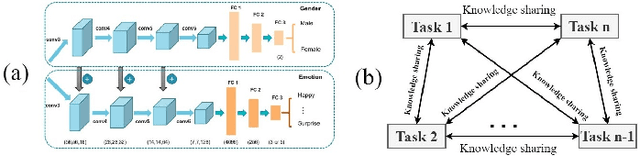

Abstract:Micro-expressions (MEs) are involuntary facial movements revealing people's hidden feelings in high-stakes situations and have practical importance in medical treatment, national security, interrogations and many human-computer interaction systems. Early methods for micro-expression recognition (MER) were mainly based on traditional appearance and geometry features. Recently, with the success of deep learning (DL) in various fields, neural networks have received increasing interest in MER. Unlike macro-expressions, MEs are spontaneous, subtle, and rapid facial movements, which makes data collection difficult and leaves only small-scale datasets. DL-based MER is challenging because of these ME characteristics. To date, various DL approaches have been proposed to solve the ME issues and improve MER performance. In this survey, we provide a comprehensive review of deep MER, including datasets, the deep MER pipeline, and the benchmarking of the most influential methods. This survey defines a new taxonomy for the field, encompassing all aspects of MER based on DL. For each aspect, the basic approaches and advanced developments are summarized and discussed. In addition, we conclude with the remaining challenges and potential directions for the design of robust deep MER systems. To the best of our knowledge, this is the first survey of deep MER methods, and this survey can serve as a reference point for future MER research.

A comparative study on movement feature in different directions for micro-expression recognition

Feb 16, 2021

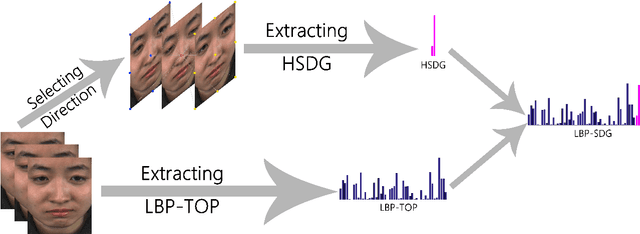

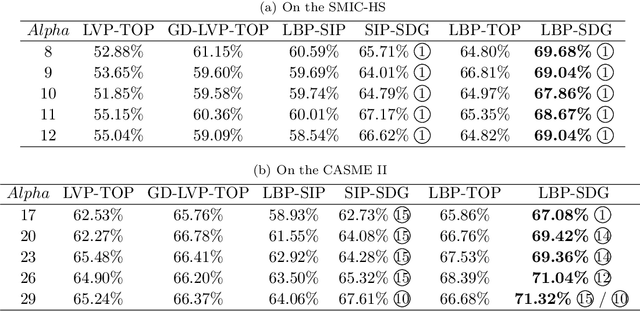

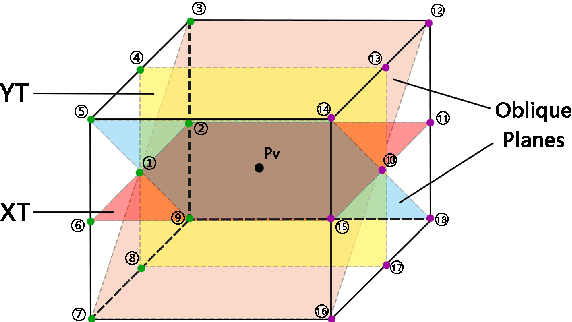

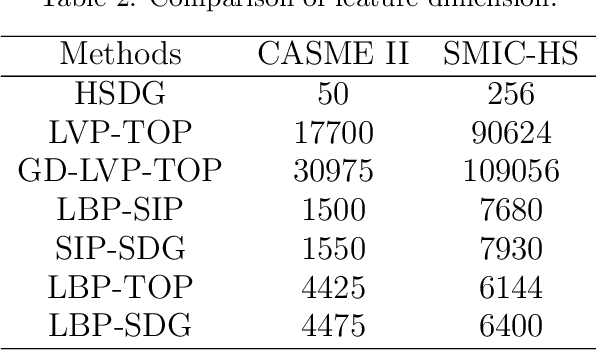

Abstract:Micro-expressions can reflect people's real emotions. Recognizing micro-expressions is difficult because they involve small motions of short duration. As research on micro-expression recognition deepens, many effective features and methods have been proposed. To determine which direction of movement feature best distinguishes micro-expressions, this paper selects 18 directions (including three types of movement: horizontal, vertical and oblique) and proposes a new low-dimensional feature called the Histogram of Single Direction Gradient (HSDG) to study this topic. In this paper, HSDG in every direction is concatenated with LBP-TOP to obtain the LBP with Single Direction Gradient (LBP-SDG), which is used to analyze which direction of movement feature is more discriminative for micro-expression recognition. As in some existing work, Eulerian Video Magnification (EVM) is employed as a preprocessing step. The experiments on the CASME II and SMIC-HS databases summarize the effective and optimal directions and demonstrate that HSDG in an optimal direction is discriminative, and the corresponding LBP-SDG achieves state-of-the-art performance using EVM.
