Jinlin Zhu

Towards Efficiently Evaluating the Robustness of Deep Neural Networks in IoT Systems: A GAN-based Method

Nov 19, 2021

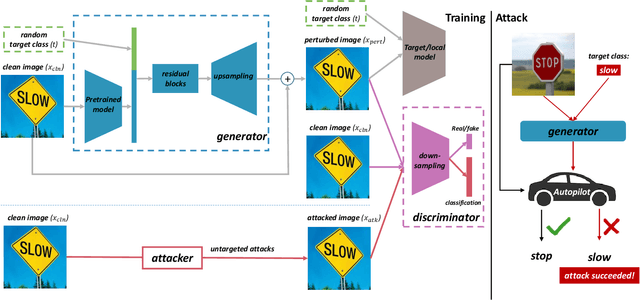

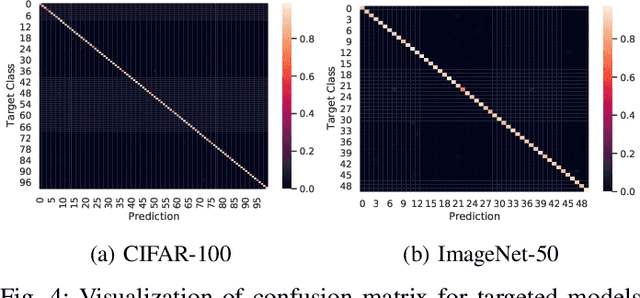

Abstract: Intelligent Internet of Things (IoT) systems based on deep neural networks (DNNs) have been widely deployed in the real world. However, DNNs are vulnerable to adversarial examples, which raises concerns about the reliability and security of intelligent IoT systems. Testing and evaluating the robustness of IoT systems has therefore become essential. Various attacks and strategies have recently been proposed, but the efficiency problem has not been properly solved. Existing methods are either computationally expensive or time-consuming, which makes them impractical. In this paper, we propose a novel framework called Attack-Inspired GAN (AI-GAN) to generate adversarial examples conditionally. Once trained, it can efficiently generate adversarial perturbations given input images and target classes. We apply AI-GAN to different datasets in white-box settings, in black-box settings, and against target models protected by state-of-the-art defenses. In extensive experiments, AI-GAN achieves high attack success rates, outperforms existing methods, and significantly reduces generation time. Moreover, for the first time, AI-GAN scales to complex datasets such as CIFAR-100 and ImageNet, with success rates of about $90\%$ across all classes.

AI-GAN: Attack-Inspired Generation of Adversarial Examples

Feb 06, 2020

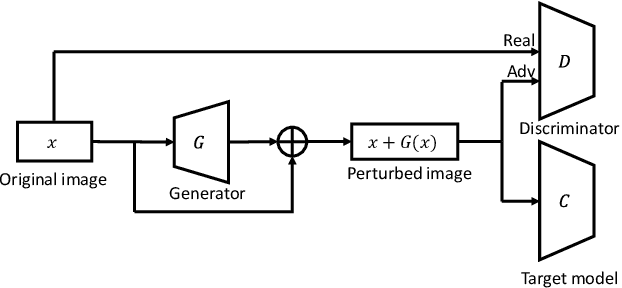

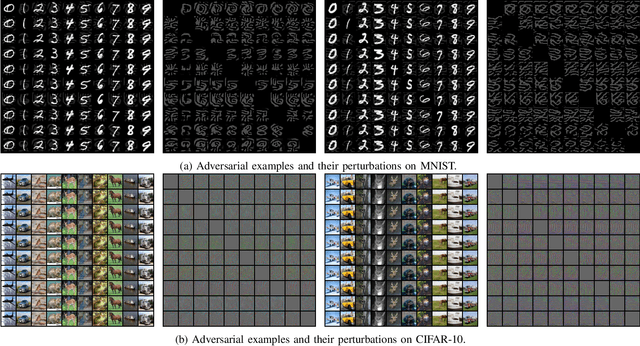

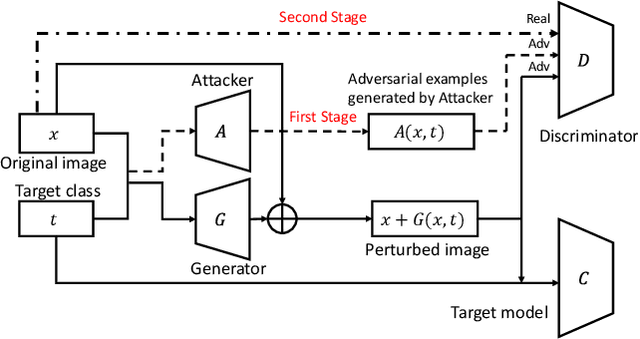

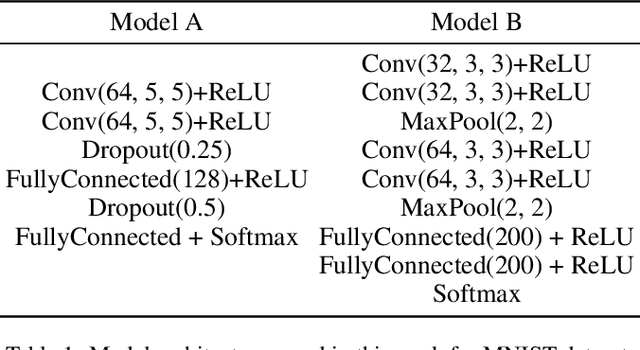

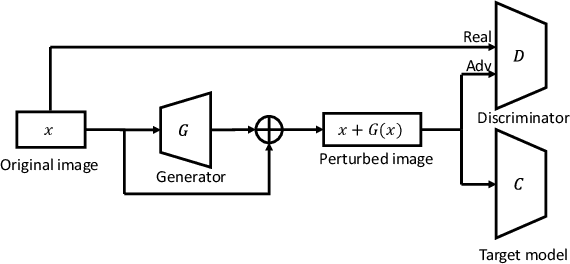

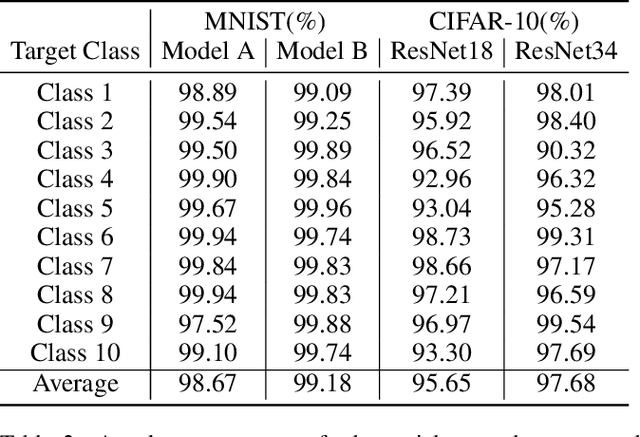

Abstract: Adversarial examples that can fool deep models are mainly crafted by adding small perturbations imperceptible to human eyes. The literature offers various optimization-based methods for generating adversarial perturbations, most of which are time-consuming. AdvGAN, a method proposed by Xiao et al. in IJCAI 2018, employs Generative Adversarial Networks (GAN) to generate adversarial perturbations with original images as inputs, which is faster than optimization-based methods at inference time. AdvGAN, however, fixes the target classes during training, and we find it difficult to train AdvGAN when it is modified to take both original images and target classes as inputs. In this paper, we propose Attack-Inspired GAN (AI-GAN) with a different training strategy to solve this problem. AI-GAN is a two-stage method: in the first stage, we use the projected gradient descent (PGD) attack to inspire the training of the GAN, and in the second stage, we apply standard GAN training. Once trained, the Generator can approximate the conditional distribution of adversarial instances and generate imperceptible adversarial perturbations given different target classes. We conduct experiments and evaluate the performance of AI-GAN on MNIST and CIFAR-10. Compared with AdvGAN, AI-GAN achieves higher attack success rates with similar perturbation magnitudes.
