Jie Xing

OCSU: Optical Chemical Structure Understanding for Molecule-centric Scientific Discovery

Jan 26, 2025

Abstract: Understanding the chemical structure from a graphical representation of a molecule is a challenging image captioning task that would greatly benefit molecule-centric scientific discovery. Variations in molecular images and in captioning subtasks pose significant challenges for both image representation learning and task modeling. Yet existing methods focus on only one specific captioning task, translating a molecular image into its graph structure, i.e., OCSR. In this paper, we propose the Optical Chemical Structure Understanding (OCSU) task, which extends OCSR to molecular image captioning at the motif level, the molecule level, and the abstract level. We present two approaches to this task: an OCSR-based method and an end-to-end OCSR-free method. The proposed OCSR-based method, Double-Check, achieves SOTA OCSR performance in real-world patent and journal article scenarios via attentive feature enhancement for locally ambiguous atoms. Cascaded with SMILES-based molecule understanding methods, it can leverage the power of existing task-specific models for OCSU. Mol-VL, by contrast, is an end-to-end optimized VLM-based model. An OCSU dataset, Vis-CheBI20, is built on the widely used CheBI20 dataset for training and evaluation. Extensive experimental results on Vis-CheBI20 demonstrate the effectiveness of the proposed approaches. Improving OCSR capability leads to better OCSU performance for the OCSR-based approach, and the SOTA performance of Mol-VL demonstrates the great potential of the end-to-end approach.

Automated Segmentation of Lesions in Ultrasound Using Semi-pixel-wise Cycle Generative Adversarial Nets

May 27, 2019

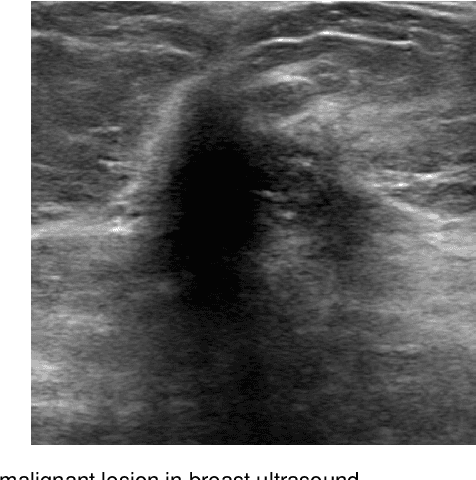

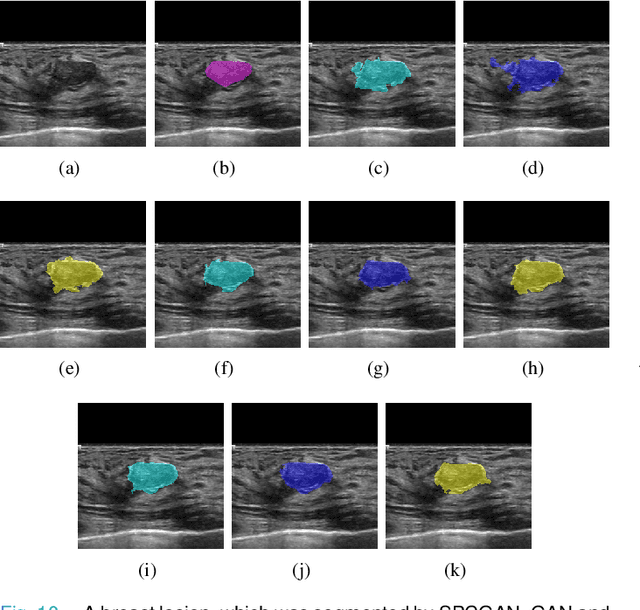

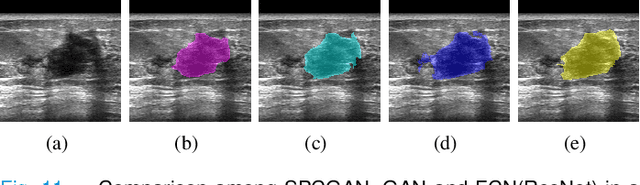

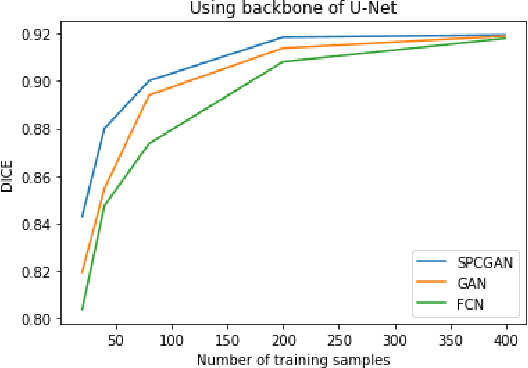

Abstract: Breast cancer is the most common invasive cancer in females. Handheld ultrasound is one of the most efficient ways to identify and diagnose breast cancer. The area and shape of a lesion are very helpful to clinicians in making diagnostic decisions. In this study, we propose a new deep-learning scheme, the semi-pixel-wise cycle generative adversarial net (SPCGAN), for segmenting lesions in 2D ultrasound. The method takes advantage of a fully connected convolutional neural network (FCN) and a generative adversarial net to segment a lesion using prior knowledge. We compared the proposed method with a fully connected neural network and with the level set segmentation method on a test dataset consisting of 32 malignant lesions and 109 benign lesions. Our proposed method achieved a Dice similarity coefficient (DSC) of 0.92, while the FCN and the level set method achieved 0.90 and 0.79, respectively. For malignant lesions in particular, our method significantly increases the DSC from the 0.90 of the fully connected neural network to 0.93 ($p<0.001$). The results show that SPCGAN can obtain robust segmentation results and may be used to relieve radiologists' annotation burden.
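For readers unfamiliar with the metric reported above, the Dice similarity coefficient of a predicted mask $A$ and a reference mask $B$ is $2|A \cap B| / (|A| + |B|)$. The following is a minimal sketch of that standard definition, not the authors' evaluation code; the function name `dice_coefficient`, the nested-list mask format, and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two binary masks.

    Masks are given as nested lists (rows of 0/1 values); this input
    format is an assumption made for the example.
    """
    flat_pred = [int(bool(v)) for row in pred for v in row]
    flat_truth = [int(bool(v)) for row in truth for v in row]
    if len(flat_pred) != len(flat_truth):
        raise ValueError("masks must have the same number of pixels")

    # |A ∩ B|: pixels labeled as lesion in both masks
    intersection = sum(p & t for p, t in zip(flat_pred, flat_truth))
    # |A| + |B|: lesion pixels in each mask, summed
    total = sum(flat_pred) + sum(flat_truth)

    # Assumed convention: two empty masks count as perfect agreement
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy 2x3 masks: 3 lesion pixels each, 2 of them shared
pred = [[0, 1, 1],
        [0, 1, 0]]
truth = [[0, 1, 0],
         [0, 1, 1]]
score = dice_coefficient(pred, truth)  # 2 * 2 / (3 + 3)
```

A score of 1.0 means the two masks coincide exactly, and 0.0 means they share no lesion pixels.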
