Jiayi Tan

FIRL: Fast Imitation and Policy Reuse Learning

Mar 01, 2022

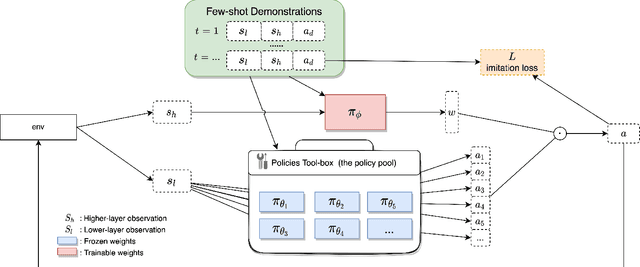

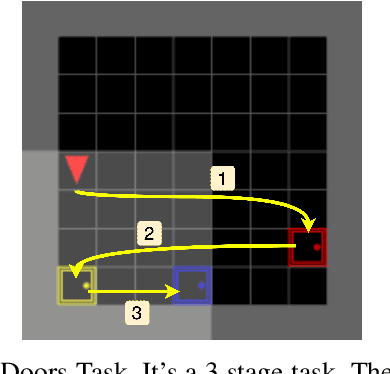

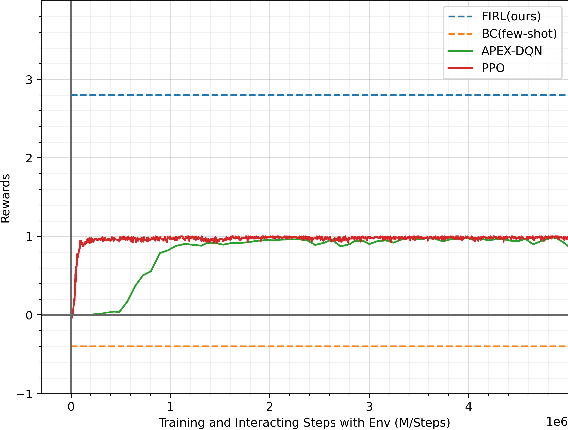

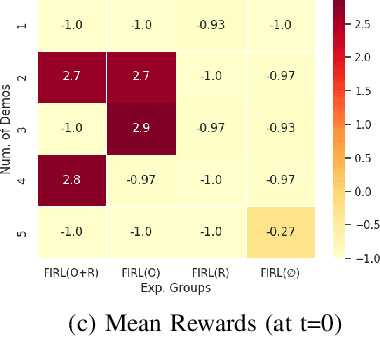

Abstract: Intelligent robotic policies have been widely researched for challenging applications such as opening doors, washing dishes, and organizing tables. We refer to a "Policy Pool" containing skills that can be easily accessed and reused. Several lines of research leverage such a pool, including policy reuse, modular learning, assembly learning, transfer learning, and hierarchical reinforcement learning (HRL). However, most of these methods learn inefficiently and require large datasets for training. This work focuses on enabling fast learning based on the policy pool: the agent should learn in a one-shot or few-shot setting by avoiding learning from scratch. We also allow it to interact with and learn from humans, but the training period should be within minutes. We propose FIRL, Fast (one-shot) Imitation and Policy Reuse Learning. Instead of learning a new skill from scratch, it performs one-shot imitation learning on the higher layer of a two-layer hierarchy. Our method reduces learning a complex task to a simple regression problem that can be solved in a few offline iterations. The agent can acquire a good command of a new task from a single demonstration. We demonstrate this method on the OpenDoors mini-grid environment, and the code is available at http://www.github.com/yiwc/firl.
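
The idea of reducing a new task to a regression over pooled skills can be illustrated with a toy sketch. This is not the authors' implementation: the skill names, the 2-D `(x, y)` state encoding, the action ids, and the helper functions are all hypothetical, and closed-form least squares stands in for whatever offline training the paper actually uses. It only shows how one demonstration can be relabeled with pooled skills and fitted by a higher-layer selector.

```python
import numpy as np

# Hypothetical policy pool: every name, state encoding, and action id here
# is an illustrative assumption, not taken from the FIRL code base.
POLICY_POOL = {
    "go_right": lambda state: 0,
    "go_up": lambda state: 1,
    "open_door": lambda state: 2,
}
SKILLS = list(POLICY_POOL)

def label_demo(demo):
    """Relabel each (state, action) pair with the index of the first pooled
    skill that reproduces the demonstrated action in that state."""
    X, y = [], []
    for state, action in demo:
        for k, name in enumerate(SKILLS):
            if POLICY_POOL[name](state) == action:
                X.append(state)
                y.append(k)
                break
    return np.array(X, dtype=float), np.array(y, dtype=int)

def fit_selector(X, y):
    """Fit the higher-layer selector by least-squares regression of one-hot
    skill labels on the state features plus a bias column."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    Y = np.eye(len(SKILLS))[y]
    W, *_ = np.linalg.lstsq(Xb, Y, rcond=None)
    return W

def select_skill(W, state):
    """Return the name of the skill with the highest regression score."""
    features = np.append(np.asarray(state, dtype=float), 1.0)
    return SKILLS[int(np.argmax(features @ W))]

# One demonstration: walk right along a corridor, go up, then open the door.
demo = [((0, 0), 0), ((1, 0), 0), ((2, 0), 1), ((2, 1), 1), ((2, 2), 2)]
X, y = label_demo(demo)
W = fit_selector(X, y)
print([select_skill(W, s) for s, _ in demo])
```

In this sketch the lower layer (the pooled skills) is never retrained; only the small selector matrix `W` is fitted, which is why a single demonstration is enough for such a toy case.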

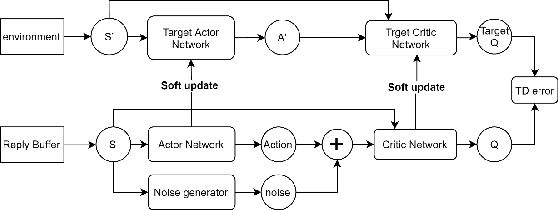

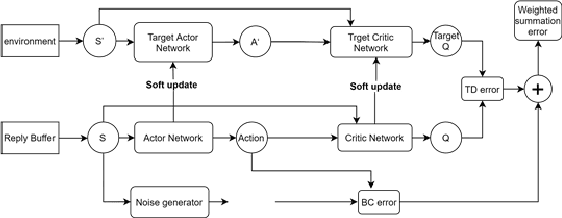

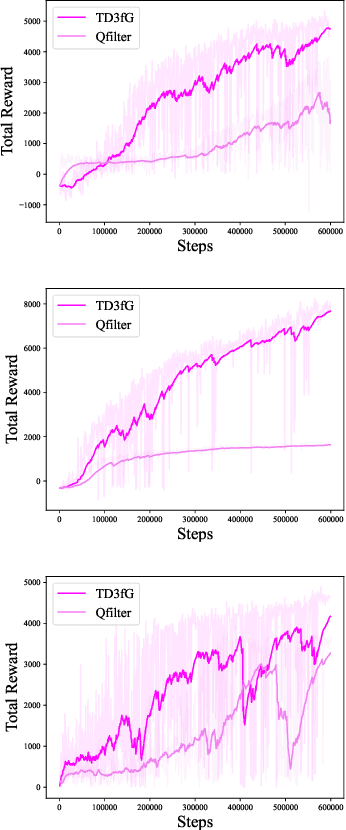

Improving Learning from Demonstrations by Learning from Experience

Nov 16, 2021

Abstract: How to make imitation learning more general when demonstrations are relatively limited has been a persistent problem in reinforcement learning (RL). Poor demonstrations lead to a narrow and biased data distribution, non-Markovian human expert demonstrations make it difficult for the agent to learn, and over-reliance on sub-optimal trajectories can make it hard for the agent to improve its performance. To solve these problems, we propose a new algorithm named TD3fG (TD3 from a generator) that can smoothly transition from learning from experts to learning from experience. We use behavior cloning to train a network as a reference action generator and use it in both the loss function and the exploration noise. This innovation helps agents extract prior knowledge from demonstrations while reducing the detrimental effects of their poor Markovian properties. Our algorithm achieves good performance in the MuJoCo environment with limited and sub-optimal demonstrations, and it outperforms the BC + fine-tuning and DDPGfD approaches, especially when the demonstrations are relatively limited.
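
A rough sketch of how a reference action generator might enter both the loss and the exploration step is given below. The abstract does not specify the formulas, so everything here is an assumption made for illustration: the linear decay schedule `bc_weight`, the squared-distance imitation term in `actor_loss`, and the blending rule in `explore` are hypothetical and should not be read as the TD3fG definition.

```python
import numpy as np

def bc_weight(step, decay_steps, w0=1.0):
    """Assumed linear schedule: the imitation weight falls from w0 to 0,
    moving the agent from learning from experts to learning from experience."""
    return w0 * max(0.0, 1.0 - step / decay_steps)

def actor_loss(q_values, policy_actions, reference_actions, weight):
    """TD3-style actor objective (negative mean Q) plus a weighted squared
    distance between the policy's actions and the generator's actions."""
    rl_term = -np.mean(q_values)
    bc_term = np.mean(np.sum((policy_actions - reference_actions) ** 2, axis=1))
    return rl_term + weight * bc_term

def explore(policy_action, reference_action, weight, sigma, rng):
    """Assumed exploration rule: blend toward the reference action, add
    Gaussian noise, and clip to the action range [-1, 1]."""
    mean = (1.0 - weight) * policy_action + weight * reference_action
    noise = sigma * rng.normal(size=mean.shape)
    return np.clip(mean + noise, -1.0, 1.0)

# Toy usage with made-up numbers.
rng = np.random.default_rng(0)
w = bc_weight(step=50, decay_steps=100)
loss = actor_loss(
    q_values=np.array([1.0, 3.0]),
    policy_actions=np.array([[0.0, 0.0], [1.0, 1.0]]),
    reference_actions=np.zeros((2, 2)),
    weight=w,
)
action = explore(np.array([0.2, -0.4]), np.array([0.6, 0.0]), w, 0.1, rng)
```

With the weight at zero, both functions reduce to a plain TD3-style actor loss and noise around the policy action, which is one simple way to obtain a smooth handover from demonstrations to experience.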
