Jianmin Zhang

Dynamic Ensemble Bayesian Filter for Robust Control of a Human Brain-machine Interface

Apr 22, 2022

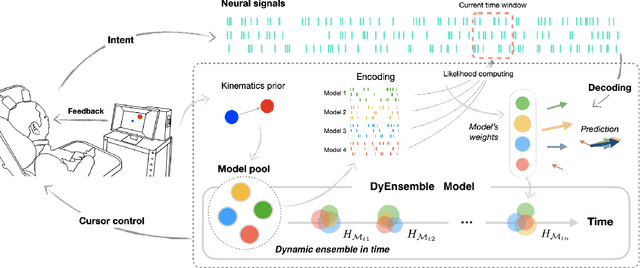

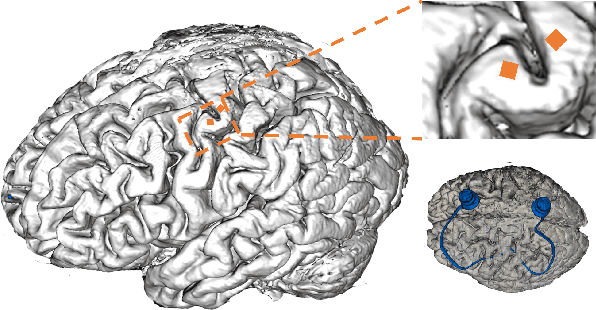

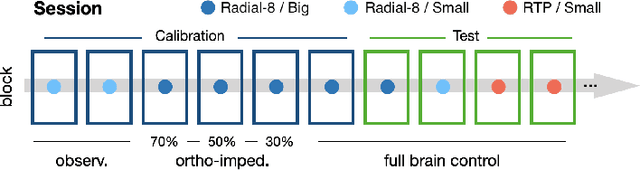

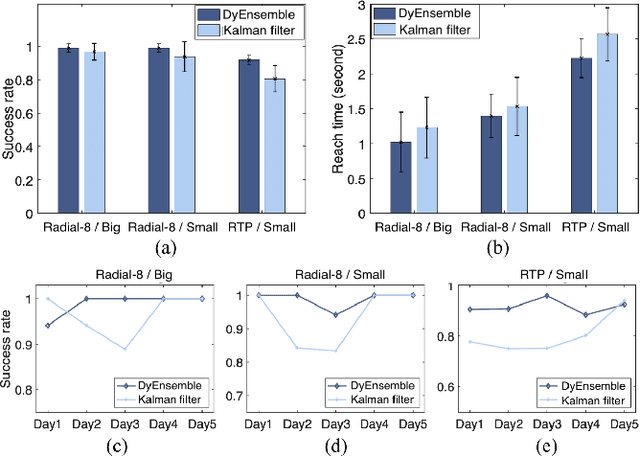

Abstract: Objective: Brain-machine interfaces (BMIs) aim to provide direct brain control of devices such as prostheses and computer cursors, and have demonstrated great potential for restoring mobility. One major limitation of current BMIs is unstable performance in online control caused by the variability of neural signals, which seriously hinders their clinical use. Method: To deal with neural variability in online BMI control, we propose a dynamic ensemble Bayesian filter (DyEnsemble). DyEnsemble extends Bayesian filters with a dynamic measurement model whose parameters adapt over time as the neural signals change. This is achieved by learning a pool of candidate functions and dynamically weighting and assembling them according to the neural signals. In this way, DyEnsemble copes with variability in the signals and improves the robustness of online control. Results: Online BMI experiments with a human participant demonstrate that, compared with the velocity Kalman filter, DyEnsemble significantly improves control accuracy (it increases the success rate by 13.9% and reduces the reach time by 13.5% in the random target pursuit task) and robustness (it performs more stably across experiment days). Conclusion: Our results demonstrate the superiority of DyEnsemble in online BMI control. Significance: DyEnsemble provides a novel and flexible framework for robust neural decoding, which is beneficial to a range of neural decoding applications.
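
The idea of weighting a pool of candidate measurement functions according to the incoming neural signals can be illustrated with a minimal sketch. This is not the authors' implementation: the linear candidates `H @ x`, the isotropic Gaussian noise, the `forgetting` exponent, and the function names are all assumptions made for illustration, and the abstract does not specify how the weights are actually computed.

```python
import numpy as np

def update_model_weights(weights, y, x, models, noise_var=1.0, forgetting=0.9):
    """Reweight candidate measurement models by how well each explains y.

    weights : current weights over the candidate pool (sums to 1)
    y       : observed neural signal vector
    x       : current state estimate (e.g. cursor velocity)
    models  : list of matrices H, each a hypothetical linear candidate y ~ H @ x
    """
    # Flatten the previous weights toward uniform so that old evidence fades
    # (the forgetting exponent is an assumption, not taken from the abstract).
    prior = np.asarray(weights, dtype=float) ** forgetting
    prior /= prior.sum()
    # Gaussian log-likelihood (up to a constant) of y under each candidate.
    log_lik = np.array(
        [-0.5 * np.sum((y - H @ x) ** 2) / noise_var for H in models]
    )
    # Subtract the maximum before exponentiating for numerical stability.
    posterior = prior * np.exp(log_lik - log_lik.max())
    return posterior / posterior.sum()

def ensemble_prediction(weights, x, models):
    """Assemble the candidates into one weighted measurement prediction."""
    return sum(w * (H @ x) for w, H in zip(weights, models))

# Two toy candidates: one matches the data-generating model, one does not.
models = [np.eye(2), -np.eye(2)]
x = np.array([1.0, 0.5])
y = models[0] @ x
weights = update_model_weights(np.array([0.5, 0.5]), y, x, models)
prediction = ensemble_prediction(weights, x, models)
```

In this toy setup the candidate that explains the observation receives most of the weight after a single update. A full filter would repeat the update at every time step alongside the usual state prediction and correction.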

Towards a Neural Network Approach to Abstractive Multi-Document Summarization

Apr 24, 2018

Abstract: Until now, neural abstractive summarization methods have achieved great success in single-document summarization (SDS). However, due to the lack of large-scale multi-document summaries, such methods can hardly be applied to multi-document summarization (MDS). In this paper, we investigate neural abstractive methods for MDS by adapting a state-of-the-art neural abstractive summarization model for SDS. We propose an approach that extends a neural abstractive model trained on large-scale SDS data to the MDS task. Our approach uses only a small number of multi-document summaries for fine-tuning. Experimental results on two benchmark DUC datasets demonstrate that our approach can outperform a variety of baseline neural models.
