Jevgenij Krivochiza

Demonstrator Testbed for Effective Precoding in MEO Multibeam Satellites

Aug 27, 2025

Abstract: Communication satellites in medium Earth orbit (MEO) are expected to provide quasi-global broadband Internet connectivity in upcoming network ecosystems. Multi-user multiple-input single-output (MU-MISO) digital signal processing techniques, such as precoding, are appealing technological enablers for the forward link of multibeam satellite systems operating with full frequency reuse (FFR). However, the orbital dynamics of MEO satellites pose additional challenges that must be carefully evaluated and addressed. This work presents the design of an in-lab testbed based on software-defined radio (SDR) platforms, together with the adaptations required for efficient precoding in a MEO scenario. The setup incorporates a precise orbit model and the radiation pattern of a custom-designed direct radiating array (DRA). We analyze the main impairments affecting precoding performance, including Doppler shifts and payload phase noise, and propose a synchronization loop to mitigate these effects. Preliminary experimental results validate the feasibility and effectiveness of the proposed solution.
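
To give a rough sense of scale for the Doppler impairment: the first-order Doppler shift is f_d = f_c · v_r / c, and any residual frequency offset Δf that the synchronization loop leaves uncorrected rotates the channel phase by 2π · Δf · τ over a delay τ between channel estimation and precoded transmission. The sketch below assumes a 20 GHz carrier, a 1 km/s radial velocity, and a 25 ms delay purely for illustration; none of these are parameters of the testbed described above.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift(carrier_hz: float, radial_velocity_mps: float) -> float:
    """First-order (non-relativistic) Doppler shift; positive velocity = approaching."""
    return carrier_hz * radial_velocity_mps / SPEED_OF_LIGHT


def phase_drift(residual_offset_hz: float, delay_s: float) -> float:
    """Phase (rad, wrapped to [-pi, pi)) accumulated by an uncorrected frequency offset."""
    phi = 2.0 * math.pi * residual_offset_hz * delay_s
    return (phi + math.pi) % (2.0 * math.pi) - math.pi


# Illustrative values only (assumptions, not testbed parameters).
fc = 20e9       # carrier frequency, Hz
v_r = 1_000.0   # radial velocity, m/s
fd = doppler_shift(fc, v_r)
print(f"Doppler shift: {fd / 1e3:.1f} kHz")

# Phase rotation caused by a 10 Hz residual offset over a 25 ms CSI delay.
print(f"Phase drift: {phase_drift(10.0, 0.025):.3f} rad")
```

Even a residual offset of a few hertz can therefore misalign the phases that the precoder relies on, which is why frequency and phase tracking matter more in MEO than in a static GEO link.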

Optimal Linear Precoding Under Realistic Satellite Communications Scenarios

Aug 16, 2024

Abstract: This paper addresses optimal linear precoding for the multi-user (MU) multiple-input multiple-output (MIMO) downlink of a multibeam geostationary Earth orbit (GEO) satellite. Multi-user interference is one of the major issues faced by satellites serving multiple users on a common time-frequency resource block in the downlink. To mitigate it, optimal linear precoders are implemented at the gateways (GWs), using channel state information obtained at the user terminals (UTs). The optimal linear precoders are derived considering beamformer update and power control, using an iterative per-antenna power optimization algorithm that requires a limited number of iterations. The efficacy of the proposed algorithm is validated through an in-lab experiment with 16×16 precoding for a multibeam satellite, in which the GW and the UTs transmit and receive precoded data using the Digital Video Broadcasting - Satellite - Second Generation Extensions (DVB-S2X) standard. Software-defined radio platforms emulate the GWs, UTs, and satellite links. The proposed optimal linear precoder is compared against full frequency reuse (FFR) and minimum mean square error (MMSE) schemes. The experimental results show that the optimal linear precoders successfully cancel inter-user interference in the simulated satellite FFR link. Thus, optimal linear precoding yields a higher signal-to-interference-plus-noise ratio (SINR), as well as increased system throughput and spectral efficiency.
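
For reference, the MMSE baseline mentioned above is commonly written as a regularized channel inversion, W = Hᴴ(HHᴴ + αI)⁻¹, followed by a scaling so that no antenna exceeds its share of the power budget. The sketch below is a minimal NumPy version of that baseline under assumed conventions (a K-user × N-antenna channel matrix, regularization α = Kσ²/P, and an equal per-antenna budget P/N). It is not the iterative optimal precoder proposed in the paper.

```python
import numpy as np


def mmse_precoder(H: np.ndarray, noise_var: float, total_power: float) -> np.ndarray:
    """MMSE (regularized zero-forcing) precoder with per-antenna power scaling.

    H: (K, N) complex channel, K users (rows) by N antennas/feeds (columns).
    Returns W with shape (N, K): row n holds antenna n's weights for all users.
    """
    K, N = H.shape
    alpha = K * noise_var / total_power  # assumed regularization convention
    W = H.conj().T @ np.linalg.inv(H @ H.conj().T + alpha * np.eye(K))
    # Per-antenna power constraint: scale so the most loaded antenna uses exactly
    # P/N; every other antenna then stays below its budget.
    antenna_power = np.sum(np.abs(W) ** 2, axis=1)
    W *= np.sqrt((total_power / N) / antenna_power.max())
    return W


rng = np.random.default_rng(0)
K = N = 4
H = (rng.standard_normal((K, N)) + 1j * rng.standard_normal((K, N))) / np.sqrt(2)
W = mmse_precoder(H, noise_var=1e-9, total_power=4.0)
G = H @ W  # effective channel: nearly diagonal when the noise term is small
print(np.round(np.abs(G), 3))
```

Scaling the whole matrix by a single factor keeps the interference-suppressing structure of W intact while respecting the per-antenna limit. The cost is that most antennas operate below their budget, which is one reason per-antenna power optimization, as in the proposed algorithm, can outperform this baseline.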

ML-based PBCH symbol detection and equalization for 5G Non-Terrestrial Networks

Sep 26, 2023

Abstract: This paper explores the application of machine learning (ML) techniques to 5G Non-Terrestrial Networks (5G-NTN), focusing on symbol detection and equalization for the Physical Broadcast Channel (PBCH). As 5G-NTN gains prominence within the 3GPP ecosystem, ML offers significant potential to enhance wireless communication performance. To investigate these possibilities, we present ML-based models trained with both synthetic data and real data from a 5G over-the-satellite testbed. Our analysis examines the performance of these models under various signal-to-noise ratio (SNR) conditions and evaluates their effectiveness in symbol enhancement and channel equalization tasks. The results highlight the performance of the ML models in controlled settings and their adaptability to real-world challenges, shedding light on the potential benefits of applying ML in 5G-NTN.
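
To make the detection and equalization task concrete, the conventional non-ML baseline is pilot-based least-squares (LS) channel estimation, followed by one-tap equalization and hard QPSK decisions (PBCH data is QPSK-modulated). The sketch below assumes a single flat-fading coefficient per block, synthetic data, and an arbitrary 20 dB SNR. The block sizes loosely follow one SS/PBCH block (144 DM-RS and 432 data resource elements). It is not one of the paper's ML models.

```python
import numpy as np

# Unit-energy QPSK constellation (index -> symbol).
QPSK = np.array([1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]) / np.sqrt(2)


def qpsk_detect(z: np.ndarray) -> np.ndarray:
    """Hard decision: index of the nearest QPSK point for each equalized sample."""
    return np.argmin(np.abs(z[:, None] - QPSK[None, :]), axis=1)


def ls_equalize(y, pilots_rx, pilots_tx):
    """LS estimate of a single flat-fading tap from pilots, then one-tap equalization."""
    h_hat = np.vdot(pilots_tx, pilots_rx) / np.vdot(pilots_tx, pilots_tx)
    return y / h_hat


rng = np.random.default_rng(1)
h = 0.8 * np.exp(1j * 0.7)                     # assumed flat channel for one block
snr_db = 20.0                                  # assumed per-symbol SNR before fading
noise_std = np.sqrt(10 ** (-snr_db / 10) / 2)  # per real/imaginary component


def awgn(n: int) -> np.ndarray:
    """Complex white Gaussian noise samples."""
    return noise_std * (rng.standard_normal(n) + 1j * rng.standard_normal(n))


pilot_idx = rng.integers(0, 4, 144)
data_idx = rng.integers(0, 4, 432)
p, x = QPSK[pilot_idx], QPSK[data_idx]
y_p = h * p + awgn(p.size)
y = h * x + awgn(x.size)

detected = qpsk_detect(ls_equalize(y, y_p, p))
ser = np.mean(detected != data_idx)
print(f"Symbol error rate: {ser:.4f}")
```

A learned model replaces `ls_equalize` and `qpsk_detect` with a trained mapping from received samples to symbols or soft values. That mapping is most useful where this simple flat-channel assumption breaks down, for example at low SNR or with impairments not captured by a single tap.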

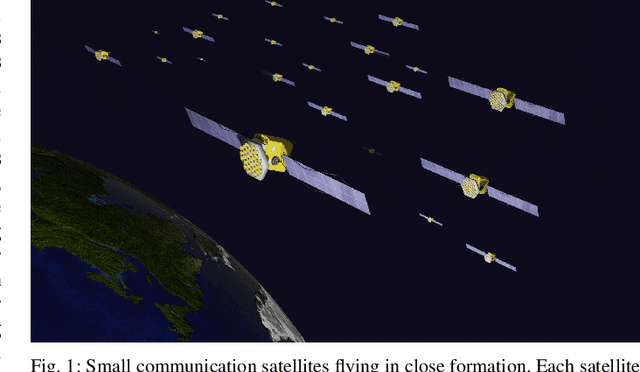

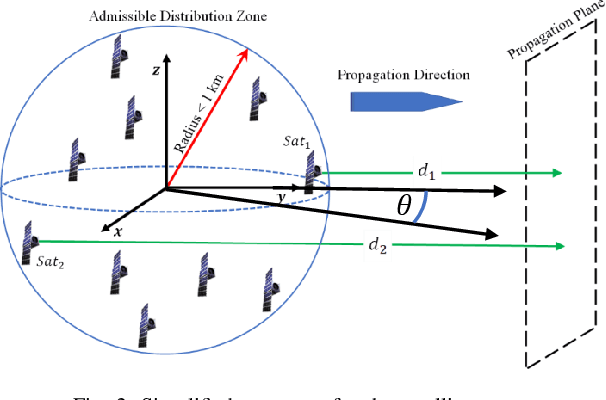

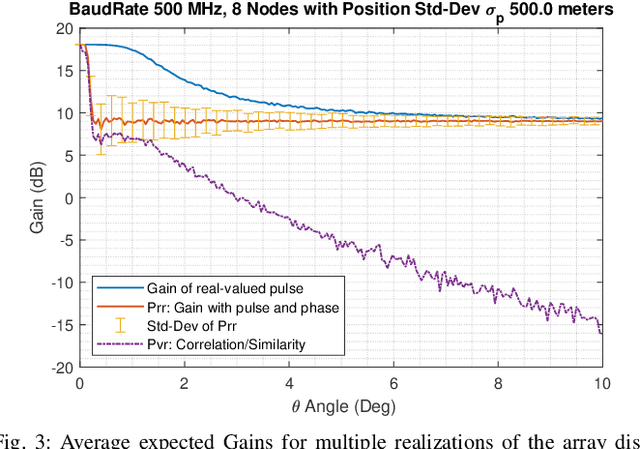

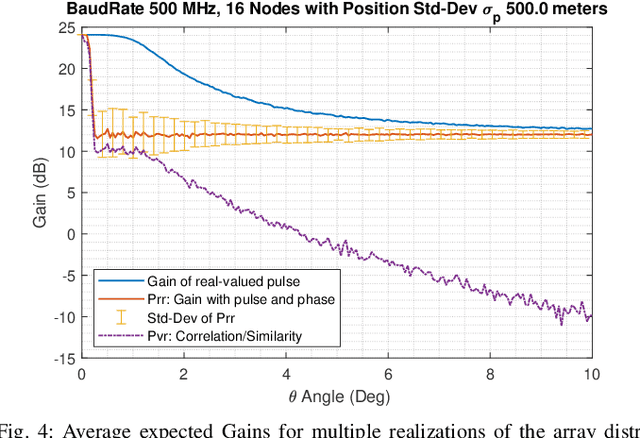

Harnessing the Power of Swarm Satellite Networks with Wideband Distributed Beamforming

Jul 10, 2023

Abstract: The space communications industry faces the challenge of developing technology that can deliver broadband services to user terminals equipped with miniature antennas, such as handheld devices. One potential solution for establishing links with ground users is to deploy very large antennas on a single spacecraft; however, this is not cost-effective. In line with recent NewSpace activities directed toward miniaturization, mass production, and a significant reduction in spacecraft launch costs, an alternative is distributed beamforming from multiple satellites. In this context, we propose a distributed beamforming modeling technique for wideband signals. We also consider the statistical behavior of the relative geometry of the swarm nodes. The paper assesses the proposed technique via computer simulations, providing results on the beamforming gains in terms of power and on the security of the communication against potential eavesdroppers at non-intended pointing angles. This approach paves the way for further exploration of wideband distributed beamforming from satellite swarms in several future communication applications.
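
A minimal way to see why wideband signals need care in distributed beamforming: if each node compensates its geometric delay with a true time delay (TTD), the contributions add coherently at every frequency in the band. A phase-only correction computed at the carrier is exact only at the carrier. The sketch below assumes ideal synchronization, far-field geometry, eight nodes scattered randomly along a single axis, and an illustrative 2 GHz carrier with 100 MHz bandwidth. It is not the modeling technique proposed in the paper.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def array_power_gain(x, freqs, theta, theta0, fc, true_time_delay):
    """|AF|^2 of nodes at positions x (m) toward angle theta, steered to theta0.

    true_time_delay=True  -> weights follow each frequency (ideal wideband steering)
    true_time_delay=False -> phase-only weights computed at the carrier fc
    """
    x = np.asarray(x)[None, :]       # shape (1, nodes)
    f = np.asarray(freqs)[:, None]   # shape (freqs, 1)
    path = np.exp(2j * np.pi * f * x * np.sin(theta) / C)
    f_w = f if true_time_delay else fc
    weights = np.exp(-2j * np.pi * f_w * x * np.sin(theta0) / C)
    return np.abs(np.sum(path * weights, axis=1)) ** 2


rng = np.random.default_rng(2)
n_nodes = 8
x = rng.uniform(0.0, 100.0, n_nodes)  # random swarm geometry along one axis (assumption)
fc, bw = 2e9, 100e6                   # illustrative carrier and bandwidth, Hz
freqs = np.array([fc - bw / 2, fc, fc + bw / 2])
theta0 = np.deg2rad(30.0)             # intended pointing angle

# Gain toward the intended user at the lower band edge, carrier, and upper band edge.
ttd = array_power_gain(x, freqs, theta0, theta0, fc, true_time_delay=True)
phase_only = array_power_gain(x, freqs, theta0, theta0, fc, true_time_delay=False)
print("TTD gain:       ", np.round(ttd, 2))  # equals N**2 = 64 at every frequency
print("Phase-only gain:", np.round(phase_only, 2))
```

With node separations of tens of meters, the phase-only band-edge gains typically fall well below the coherent N² value. Evaluating `array_power_gain` at angles other than `theta0` gives a simple view of the power seen by a receiver outside the intended direction.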

Architectures and Synchronization Techniques for Distributed Satellite Systems: A Survey

Mar 16, 2022

Abstract: Cohesive Distributed Satellite Systems (CDSS) are a key enabling technology for future remote sensing and communication missions. However, they must meet strict synchronization requirements before their use can become widespread. When clock or local oscillator signals are generated locally at each distributed node, achieving exact synchronization in absolute phase, frequency, and time is a complex problem. In addition, satellite systems have significant resource constraints, especially small satellites, which are envisioned to be part of future CDSS. Thus, the development of precise, robust, and resource-efficient synchronization techniques is essential for the advancement of future CDSS. In this context, this survey summarizes and categorizes the most relevant results on synchronization techniques for Distributed Satellite Systems (DSS). First, some important architecture and system concepts are defined. Then, the synchronization methods reported in the literature are reviewed and categorized. The article also provides an extensive list of applications and examples of synchronization techniques for DSS, in addition to the most significant advances in other operations closely related to synchronization, such as inter-satellite ranging and relative positioning. The survey also discusses emerging data-driven synchronization techniques based on machine learning (ML). Finally, it compiles current research activities and potential research topics, identifying problems and open challenges that can be useful for researchers in the field.
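
As one classic example of the time-synchronization problem the survey covers, a two-way message exchange between two nodes can separate clock offset from propagation delay, provided the link delay is the same in both directions. The sketch below uses the familiar four-timestamp form (also used by NTP and IEEE 1588 PTP). The class name, function name, and numbers are illustrative assumptions. Real inter-satellite links must additionally account for relative motion during the exchange and for clock drift.

```python
from dataclasses import dataclass


@dataclass
class TwoWayTimestamps:
    t1: float  # node A sends request (read on A's clock)
    t2: float  # node B receives request (read on B's clock)
    t3: float  # node B sends reply (read on B's clock)
    t4: float  # node A receives reply (read on A's clock)


def offset_and_delay(ts: TwoWayTimestamps) -> tuple[float, float]:
    """Return (offset of B's clock relative to A, one-way propagation delay).

    Assumes a symmetric, static link: the same delay in both directions.
    """
    forward = ts.t2 - ts.t1   # delay + offset
    backward = ts.t4 - ts.t3  # delay - offset
    offset = (forward - backward) / 2.0
    delay = (forward + backward) / 2.0
    return offset, delay


# Illustrative exchange: 3 ms one-way delay (about 900 km), B ahead of A by 5 us.
true_delay, true_offset = 3e-3, 5e-6
t1 = 0.0
t2 = t1 + true_delay + true_offset       # arrival at B, read on B's clock
t3 = t2 + 1e-3                           # B replies 1 ms later (B's clock)
t4 = (t3 - true_offset) + true_delay     # arrival at A, read on A's clock
print(offset_and_delay(TwoWayTimestamps(t1, t2, t3, t4)))
```

Any asymmetry between the forward and return delays appears directly as an offset error of half the asymmetry. This is one reason relative positioning and ranging are so closely tied to synchronization in distributed satellite systems.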