Marcele O. K. Mendonca

Supervised Learning Based Real-Time Adaptive Beamforming On-board Multibeam Satellites

Nov 02, 2023

Abstract: Satellite communications (SatCom) are crucial for global connectivity, especially for emerging technologies like 6G and for narrowing the digital divide. Traditional SatCom systems struggle with efficient resource management due to static multibeam configurations, which hinder quality of service (QoS) under dynamic traffic demands. This paper introduces an innovative solution: real-time adaptive beamforming on multibeam satellites with software-defined payloads in geostationary orbit (GEO). Utilizing a Direct Radiating Array (DRA) with circular polarization in the 17.7 - 20.2 GHz band, the paper outlines the DRA design and a supervised learning-based algorithm for on-board beamforming. This adaptive approach not only meets precise beam projection needs but also dynamically adjusts beamwidth, minimizes sidelobe levels (SLL), and optimizes effective isotropic radiated power (EIRP).

Flexible Payload Configuration for Satellites using Machine Learning

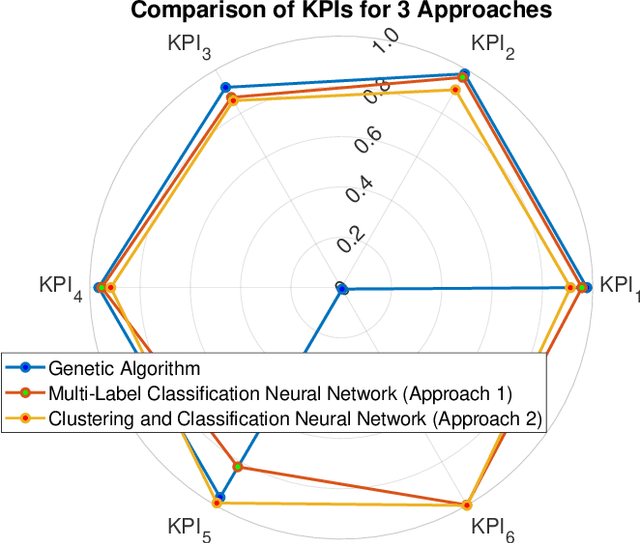

Oct 18, 2023

Abstract: Satellite communications, essential for modern connectivity, extend access to maritime, aeronautical, and remote areas where terrestrial networks are unfeasible. Current GEO systems distribute power and bandwidth uniformly across beams using multi-beam footprints with fractional frequency reuse. However, recent research reveals the limitations of this approach in heterogeneous traffic scenarios, where it leads to inefficiencies. To address this, this paper presents a machine learning (ML)-based approach to Radio Resource Management (RRM). We treat the RRM task as a regression ML problem, integrating RRM objectives and constraints into the loss function that the ML algorithm aims to minimize. Moreover, we introduce a context-aware ML metric that not only evaluates the ML model's performance but also considers the impact of its resource allocation decisions on the overall performance of the communication system.

ML-based PBCH symbol detection and equalization for 5G Non-Terrestrial Networks

Sep 26, 2023

Abstract: This paper delves into the application of Machine Learning (ML) techniques in the realm of 5G Non-Terrestrial Networks (5G-NTN), particularly focusing on symbol detection and equalization for the Physical Broadcast Channel (PBCH). As 5G-NTN gains prominence within the 3GPP ecosystem, ML offers significant potential to enhance wireless communication performance. To investigate these possibilities, we present ML-based models trained with both synthetic and real data from a real 5G over-the-satellite testbed. Our analysis includes examining the performance of these models under various Signal-to-Noise Ratio (SNR) scenarios and evaluating their effectiveness in symbol enhancement and channel equalization tasks. The results highlight the ML performance in controlled settings and their adaptability to real-world challenges, shedding light on the potential benefits of the application of ML in 5G-NTN.
